Gowri Srinivasan

Learning the Factors Controlling Mineralization for Geologic Carbon Sequestration

Dec 20, 2023

Abstract:We perform a set of flow and reactive transport simulations within three-dimensional fracture networks to learn the factors controlling mineral reactions. CO$_2$ mineralization requires CO$_2$-laden water and the dissolution of a mineral, which then leads to precipitation of a CO$_2$-bearing mineral. Our discrete fracture networks (DFNs) are partially filled with quartz that gradually dissolves until it reaches a quasi-steady state. At the end of the simulation, we measure the quartz remaining in each fracture within the domain. We observe that a small backbone of fractures exists where the quartz is fully dissolved, which leads to increased flow and transport. However, depending on the DFN topology and the rate of dissolution, we observe a large variability of these changes, which indicates an interplay between the fracture network structure and the impact of geochemical dissolution. In this work, we developed a machine learning framework to extract the important features that support mineralization in the form of dissolution. In addition, we use structural and topological features of the fracture network to predict the remaining quartz volume in quasi-steady state conditions. As a first step to characterizing carbon mineralization, we study dissolution with this framework. We studied a variety of reaction and fracture parameters and their impact on the dissolution of quartz in fracture networks. We found that the dissolution reaction rate constant of quartz and the distance to the flowing backbone in the fracture network are the two most important features that control the amount of quartz left in the system. For the first time, we use a combination of a finite-volume reservoir model and a graph-based approach to study reactive transport in a complex fracture network to determine the key features that control dissolution.
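The abstract above names the distance to the flowing backbone as one of the two most important features. The abstract does not say how that distance is measured, so the following is only a minimal sketch under one possible assumption: the distance is the number of fracture intersections (hops) separating a fracture from the nearest backbone fracture. The function name `hops_to_backbone` and the toy network are hypothetical.

```python
from collections import deque


def hops_to_backbone(adjacency, backbone):
    """Multi-source breadth-first search from the backbone fractures.

    adjacency maps each fracture id to the fractures it intersects.
    Returns a dict of hop counts; fractures not connected to the
    backbone are left out of the result.
    """
    distance = {fracture: 0 for fracture in backbone}
    queue = deque(backbone)
    while queue:
        current = queue.popleft()
        for neighbor in adjacency.get(current, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[current] + 1
                queue.append(neighbor)
    return distance


# Hypothetical toy network: fractures 0-5, with 0 and 1 on the backbone.
adjacency = {
    0: [1, 2],
    1: [0, 3],
    2: [0, 4],
    3: [1],
    4: [2, 5],
    5: [4],
}
distances = hops_to_backbone(adjacency, backbone=[0, 1])
```

A hop count of this kind could serve as one input column for a regression model of remaining quartz; a physical path length would need fracture geometry that this sketch does not model.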

Machine Learning in Heterogeneous Porous Materials

Feb 04, 2022

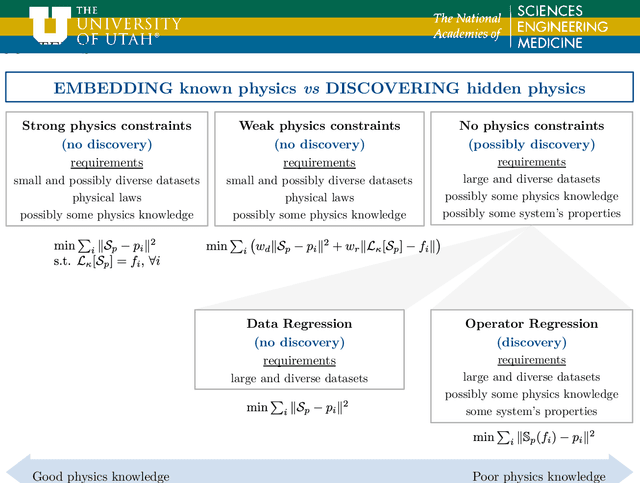

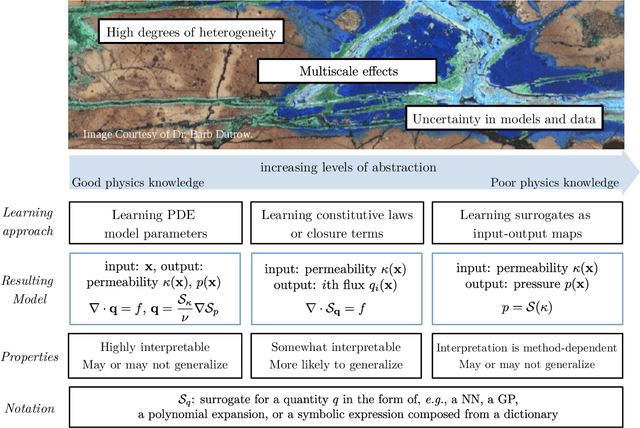

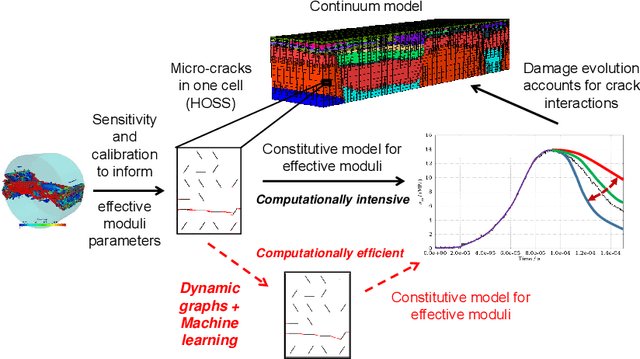

Abstract:The "Workshop on Machine learning in heterogeneous porous materials" brought together international scientific communities of applied mathematics, porous media, and material sciences with experts in the areas of heterogeneous materials, machine learning (ML) and applied mathematics to identify how ML can advance materials research. Within the scope of ML and materials research, the goal of the workshop was to discuss the state-of-the-art in each community, promote crosstalk and accelerate multi-disciplinary collaborative research, and identify challenges and opportunities. As a result, four topic areas were identified: ML in predicting materials properties and in the discovery and design of novel materials; ML in porous and fractured media and time-dependent phenomena; multi-scale modeling in heterogeneous porous materials via ML; and discovery of materials constitutive laws and new governing equations. This workshop was part of the AmeriMech Symposium series sponsored by the National Academies of Sciences, Engineering and Medicine and the U.S. National Committee on Theoretical and Applied Mechanics.

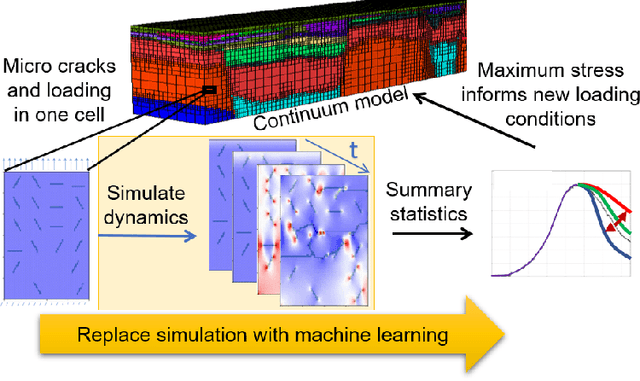

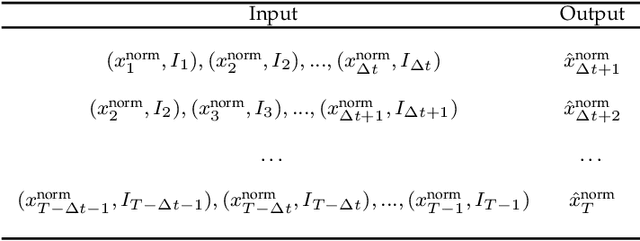

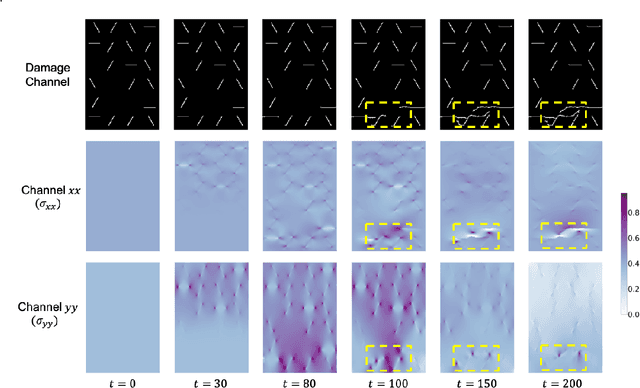

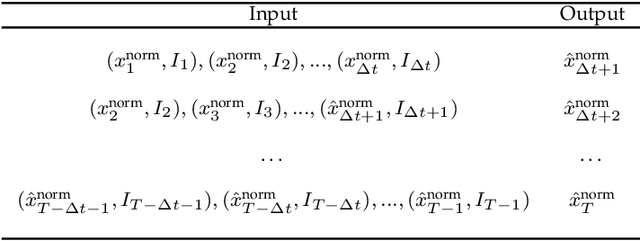

StressNet: Deep Learning to Predict Stress With Fracture Propagation in Brittle Materials

Nov 20, 2020

Abstract:Catastrophic failure in brittle materials is often due to the rapid growth and coalescence of cracks aided by high internal stresses. Hence, accurate prediction of maximum internal stress is critical to predicting time to failure and improving the fracture resistance and reliability of materials. Existing high-fidelity methods, such as the Finite-Discrete Element Model (FDEM), are limited by their high computational cost. Therefore, to reduce computational cost while preserving accuracy, a novel deep learning model, "StressNet," is proposed to predict the entire sequence of maximum internal stress based on fracture propagation and the initial stress data. More specifically, the Temporal Independent Convolutional Neural Network (TI-CNN) is designed to capture the spatial features of fractures like fracture path and spall regions, and the Bidirectional Long Short-term Memory (Bi-LSTM) Network is adapted to capture the temporal features. By fusing these features, the evolution in time of the maximum internal stress can be accurately predicted. Moreover, an adaptive loss function is designed by dynamically integrating the Mean Squared Error (MSE) and the Mean Absolute Percentage Error (MAPE), to reflect the fluctuations in maximum internal stress. After training, the proposed model is able to compute accurate multi-step predictions of maximum internal stress in approximately 20 seconds, as compared to the FDEM run time of 4 hours, with an average MAPE of 2% relative to test data.
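The abstract above describes a loss that integrates MSE and MAPE but does not give the rule used to weight them dynamically. As a rough illustration only, the sketch below combines the two terms with a fixed, hypothetical weight `alpha`; it is not the StressNet loss itself, and all function names are assumptions.

```python
def mse(y_true, y_pred):
    """Mean squared error over paired sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    eps guards against division by zero when a true value is 0.
    """
    total = sum(abs(t - p) / max(abs(t), eps) for t, p in zip(y_true, y_pred))
    return 100.0 * total / len(y_true)


def combined_loss(y_true, y_pred, alpha):
    """Weighted sum of MSE and MAPE.

    alpha is a hypothetical weight in [0, 1]; the abstract says the
    weighting is adapted dynamically but does not say how.
    """
    return alpha * mse(y_true, y_pred) + (1.0 - alpha) * mape(y_true, y_pred)


# Toy maximum-stress values (arbitrary units) and predictions.
y_true = [100.0, 200.0]
y_pred = [110.0, 190.0]
loss = combined_loss(y_true, y_pred, alpha=0.5)
```

MSE penalizes large absolute errors while MAPE penalizes errors relative to the size of the target, so a mix of the two responds to both large peaks and small fluctuations in the stress signal.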

Identifying Entangled Physics Relationships through Sparse Matrix Decomposition to Inform Plasma Fusion Design

Oct 28, 2020

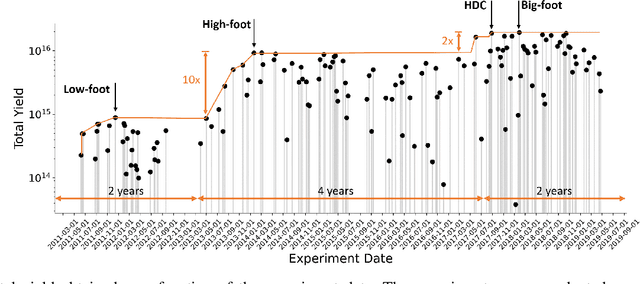

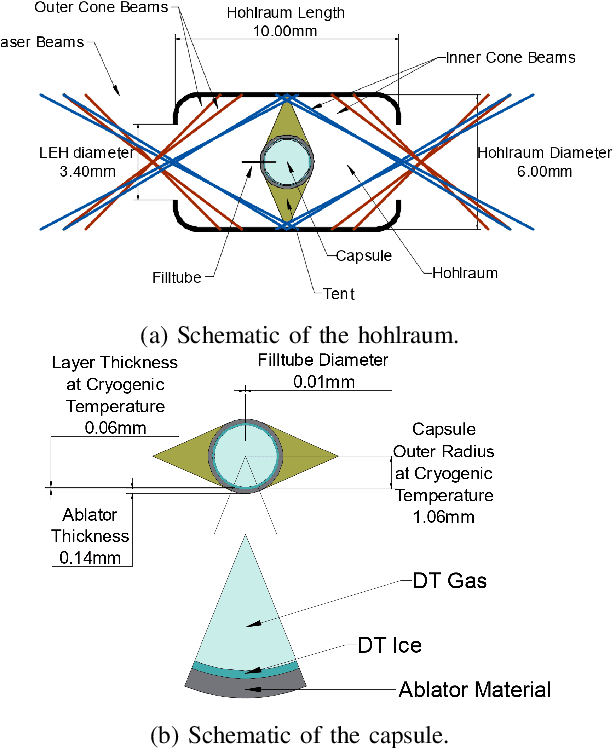

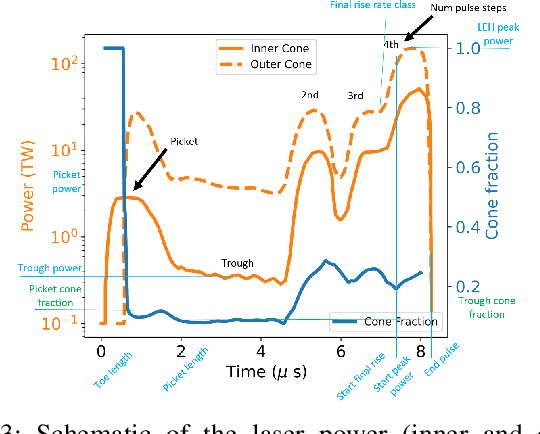

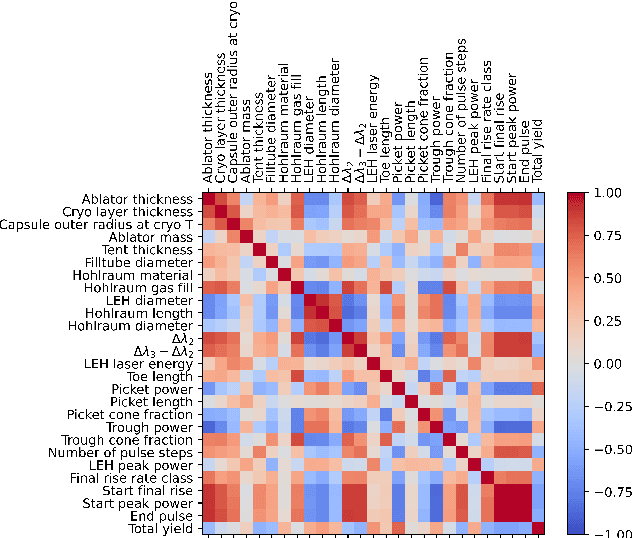

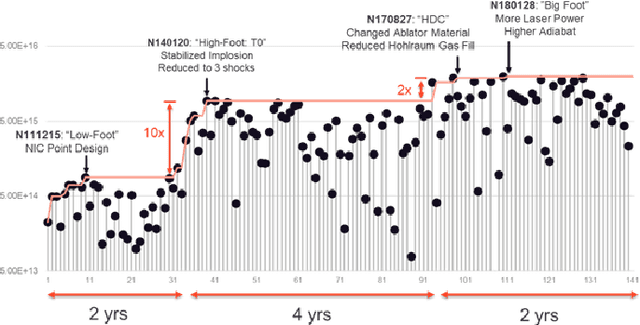

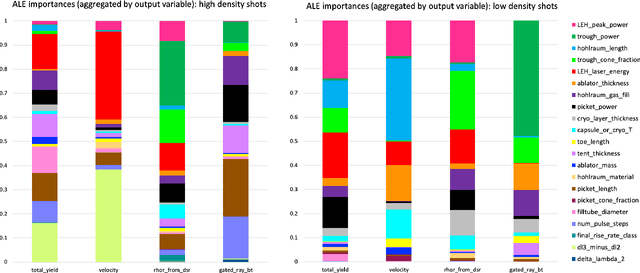

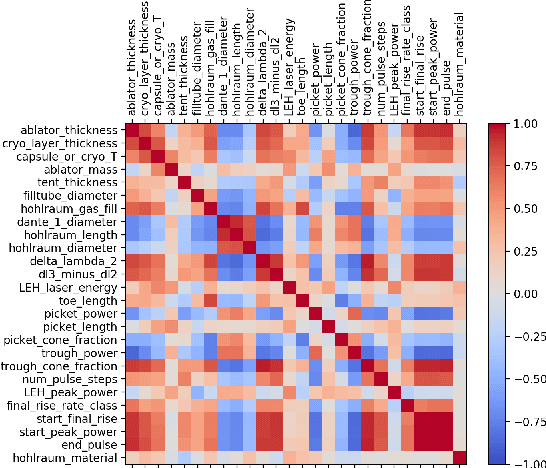

Abstract:A sustainable burn platform through inertial confinement fusion (ICF) has been an ongoing challenge for over 50 years. Mitigating engineering limitations and improving the current design involves an understanding of the complex coupling of physical processes. While sophisticated simulation codes are used to model ICF implosions, these tools contain necessary numerical approximations and omit physical processes, which limits their predictive capability. Identification of relationships between controllable design inputs to ICF experiments and measurable outcomes (e.g. yield, shape) from performed experiments can help guide the future design of experiments and development of simulation codes, to potentially improve the accuracy of the computational models used to simulate ICF experiments. We use sparse matrix decomposition methods to identify clusters of a few related design variables. Sparse principal component analysis (SPCA) identifies groupings that are related to the physical origin of the variables (laser, hohlraum, and capsule). A variable importance analysis finds that in addition to variables highly correlated with neutron yield such as picket power and laser energy, variables that represent a dramatic change of the ICF design such as number of pulse steps are also very important. The obtained sparse components are then used to train a random forest (RF) surrogate for predicting total yield. The RF performance on the training and testing data is comparable to the performance of the RF surrogate trained using all design variables considered. This work is intended to inform design changes in future ICF experiments by augmenting expert intuition and simulation results.

Exploring Sensitivity of ICF Outputs to Design Parameters in Experiments Using Machine Learning

Oct 08, 2020

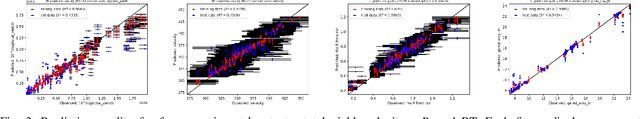

Abstract:Building a sustainable burn platform in inertial confinement fusion (ICF) requires an understanding of the complex coupling of physical processes and the effects that key experimental design changes have on implosion performance. While simulation codes are used to model ICF implosions, incomplete physics and the need for approximations deteriorate their predictive capability. Identification of relationships between controllable design inputs and measurable outcomes can help guide the future design of experiments and development of simulation codes, which can potentially improve the accuracy of the computational models used to simulate ICF implosions. In this paper, we leverage developments in machine learning (ML) and methods for ML feature importance/sensitivity analysis to identify complex relationships in ways that are difficult to process using expert judgment alone. We present work using random forest (RF) regression for prediction of yield, velocity, and other experimental outcomes given a suite of design parameters, along with an assessment of important relationships and uncertainties in the prediction model. We show that RF models are capable of learning and predicting on ICF experimental data with high accuracy, and we extract feature importance metrics that provide insight into the physical significance of different controllable design inputs for various ICF design configurations. These results can be used to augment expert intuition and simulation results for optimal design of future ICF experiments.

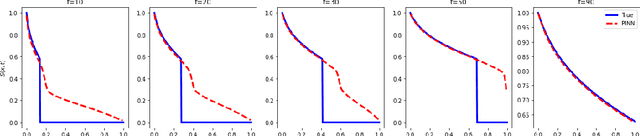

Learning to fail: Predicting fracture evolution in brittle materials using recurrent graph convolutional neural networks

Oct 14, 2018

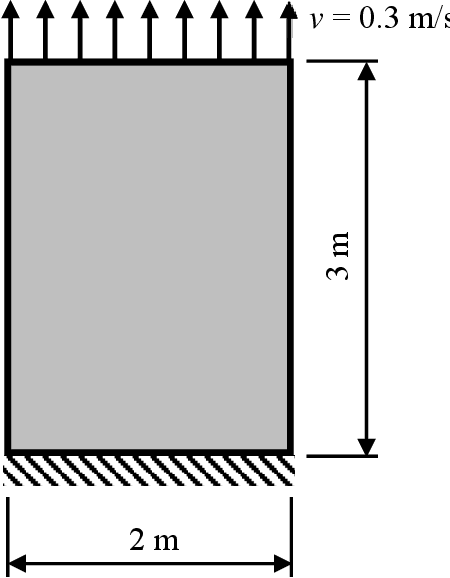

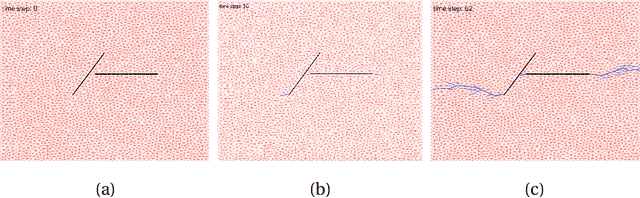

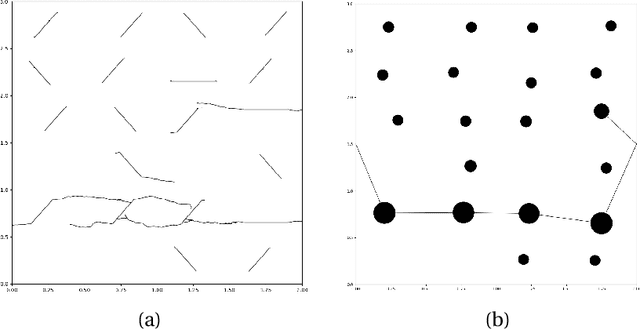

Abstract:Understanding dynamic fracture propagation is essential to predicting how brittle materials fail. Various mathematical models and computational applications have been developed to predict fracture evolution and coalescence, including finite-discrete element methods such as the Hybrid Optimization Software Suite (HOSS). While such methods achieve high fidelity results, they can be computationally prohibitive: a single simulation takes hours to run, and thousands of simulations are required for a statistically meaningful ensemble. We propose a machine learning approach that, once trained on data from HOSS simulations, can predict fracture growth statistics within seconds. Our method uses deep learning, exploiting the capabilities of a graph convolutional network to recognize features of the fracturing material, along with a recurrent neural network to model the evolution of these features. In this way, we simultaneously generate predictions for qualitatively distinct material properties. Our prediction for total damage in a coalesced fracture, at the final simulation time step, is within 3% of its actual value, and our prediction for total length of a coalesced fracture is within 2%. We also develop a novel form of data augmentation that compensates for the modest size of our training data, and an ensemble learning approach that enables us to predict when the material fails, with a mean absolute error of approximately 15%.

Machine learning for graph-based representations of three-dimensional discrete fracture networks

Jan 30, 2018

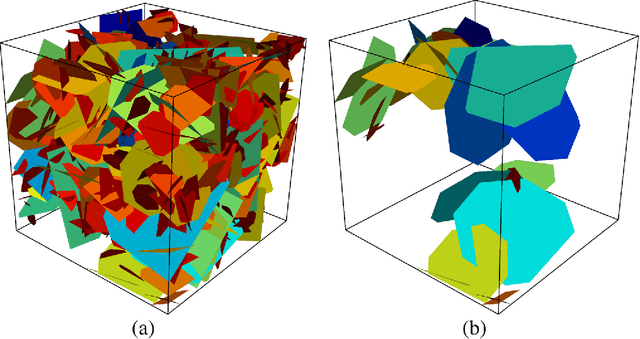

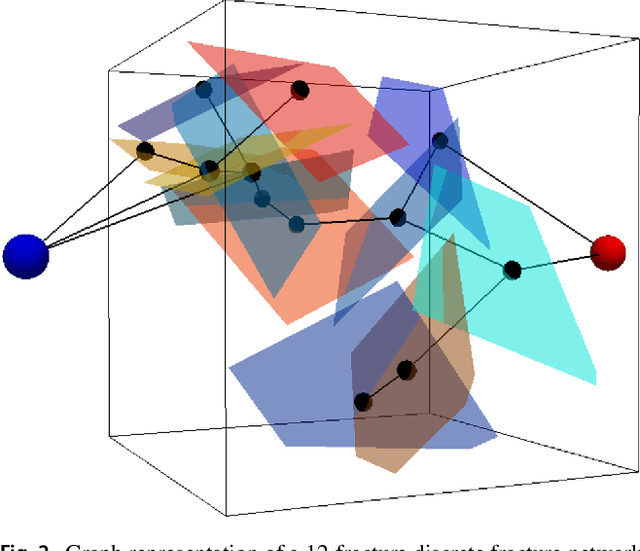

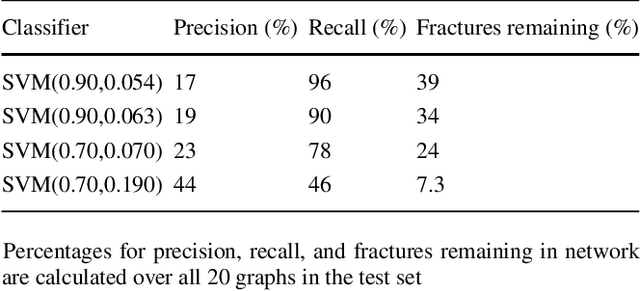

Abstract:Structural and topological information play a key role in modeling flow and transport through fractured rock in the subsurface. Discrete fracture network (DFN) computational suites such as dfnWorks are designed to simulate flow and transport in such porous media. Flow and transport calculations reveal that a small backbone of fractures exists, where most flow and transport occurs. Restricting the flowing fracture network to this backbone provides a significant reduction in the network's effective size. However, the particle tracking simulations needed to determine the reduction are computationally intensive. Such methods may be impractical for large systems or for robust uncertainty quantification of fracture networks, where thousands of forward simulations are needed to bound system behavior. In this paper, we develop an alternative network reduction approach to characterizing transport in DFNs, by combining graph theoretical and machine learning methods. We consider a graph representation where nodes signify fractures and edges denote their intersections. Using random forest and support vector machines, we rapidly identify a subnetwork that captures the flow patterns of the full DFN, based primarily on node centrality features in the graph. Our supervised learning techniques train on particle-tracking backbone paths found by dfnWorks, but run in negligible time compared to those simulations. We find that our predictions can reduce the network to approximately 20% of its original size, while still generating breakthrough curves consistent with those of the original network.

* Computational Geosciences (2018)
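The graph representation described in the abstract above (nodes are fractures, edges are intersections) can be sketched in a few lines. The abstract says only that node centrality features are the primary inputs, so the code below assumes degree centrality as one simple example of such a feature; the function names and the toy intersection list are hypothetical, and no dfnWorks API is used.

```python
from collections import defaultdict


def build_fracture_graph(intersections):
    """Build an undirected graph from pairs of intersecting fractures."""
    graph = defaultdict(set)
    for a, b in intersections:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def degree_centrality(graph):
    """Fraction of the other fractures that each fracture intersects."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}


# Hypothetical toy network of five fractures and their intersections.
intersections = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("D", "E")]
graph = build_fracture_graph(intersections)
centrality = degree_centrality(graph)
```

Per-node values like these could form the feature rows passed to a classifier such as a random forest or support vector machine, with the particle-tracking backbone supplying the labels.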
