Hari S. Viswanathan

Sensitivity Analysis in the Presence of Intrinsic Stochasticity for Discrete Fracture Network Simulations

Dec 07, 2023

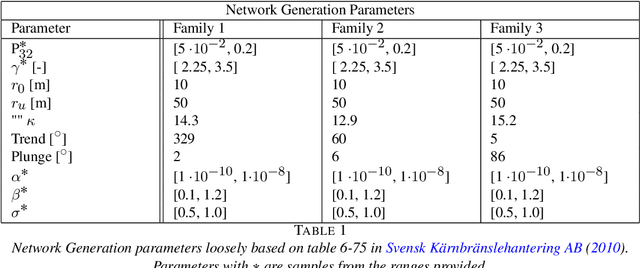

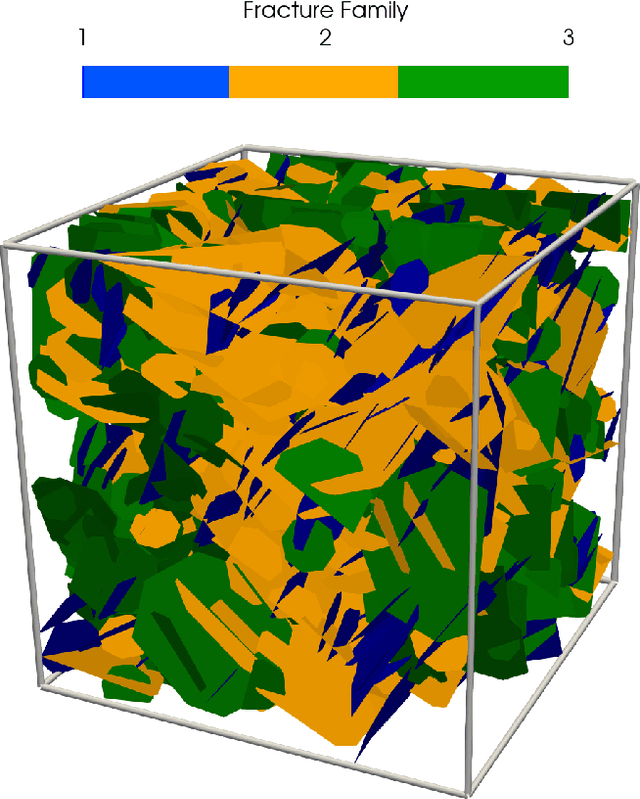

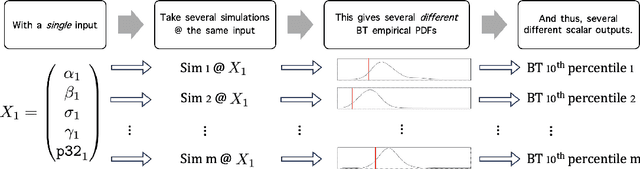

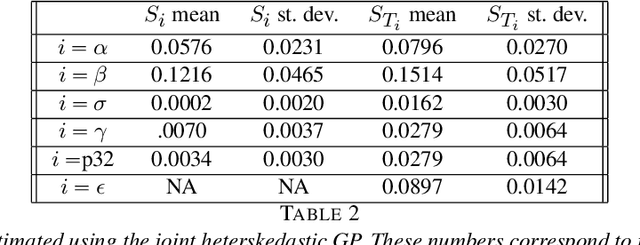

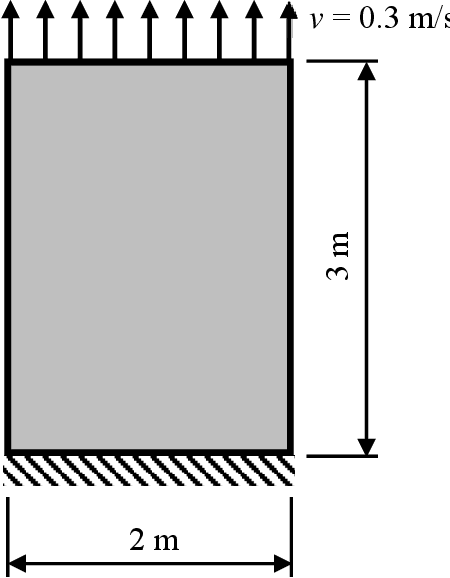

Abstract: Large-scale discrete fracture network (DFN) simulators are standard fare for studies involving the subsurface transport of particles, since direct observation of real-world underground fracture networks is generally infeasible. While these simulators have seen numerous successes across several engineering applications, estimates of quantities of interest (QoI), such as the breakthrough time of particles reaching the edge of the system, suffer from two distinct types of uncertainty. A run of a DFN simulator requires several parameter values to be set that dictate the placement and size of fractures, the density of fractures, and the overall permeability of the system; uncertainty about the proper parameter choices leads to some amount of uncertainty in the QoI, called epistemic uncertainty. Furthermore, since DFN simulators rely on stochastic processes to place fractures and govern flow, understanding how this randomness affects the QoI requires several runs of the simulator at distinct random seeds. The uncertainty in the QoI attributed to different realizations (i.e., different seeds) of the same random process leads to a second type of uncertainty, called aleatoric uncertainty. In this paper, we perform a sensitivity analysis, which directly attributes the uncertainty observed in the QoI to the epistemic uncertainty from each input parameter and to the aleatoric uncertainty. We make several design choices to handle an observed heteroskedasticity in DFN simulators, where the aleatoric uncertainty changes for different inputs, since this property makes several standard statistical methods inadmissible. Beyond the specific takeaways on which input variables affect uncertainty the most for DFN simulators, a major contribution of this paper is the introduction of a statistically rigorous workflow for characterizing the uncertainty in DFN flow simulations that exhibit heteroskedasticity.
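The split between epistemic and aleatoric uncertainty described in this abstract can be illustrated with the law of total variance, Var(Y) = Var_x(E[Y|x]) + E_x(Var[Y|x]). The sketch below is not the paper's workflow, which the abstract does not spell out. It assumes a hypothetical `toy_simulator` with two made-up inputs `x1` and `x2`, whose noise level grows with `x1` to mimic heteroskedasticity, and it replicates each parameter setting over several random draws that stand in for seeds.

```python
import random
import statistics


def toy_simulator(x1, x2, rng):
    """Hypothetical stand-in for one DFN run (not a real simulator).

    The noise standard deviation depends on x1, so the aleatoric
    uncertainty changes with the inputs (heteroskedasticity).
    """
    noise_sd = 0.1 + x1
    return 2.0 * x1 + 0.5 * x2 + rng.gauss(0.0, noise_sd)


def decompose_variance(n_designs=200, n_seeds=30, seed=0):
    """Split the output variance into between-input and within-input parts.

    Returns (total, epistemic, aleatoric), where
      epistemic = variance of the per-setting means, Var_x(E[Y|x])
      aleatoric = mean of the per-setting variances, E_x(Var[Y|x])
    """
    rng = random.Random(seed)
    means, variances, outputs = [], [], []
    for _ in range(n_designs):
        # Draw one parameter setting (assumed uniform on [0, 1]).
        x1, x2 = rng.random(), rng.random()
        # Replicate the run; each noise draw plays the role of a new seed.
        runs = [toy_simulator(x1, x2, rng) for _ in range(n_seeds)]
        means.append(statistics.fmean(runs))
        variances.append(statistics.pvariance(runs))
        outputs.extend(runs)
    total = statistics.pvariance(outputs)
    epistemic = statistics.pvariance(means)
    aleatoric = statistics.fmean(variances)
    return total, epistemic, aleatoric


if __name__ == "__main__":
    total, epistemic, aleatoric = decompose_variance()
    print(f"epistemic share: {epistemic / total:.2f}")
    print(f"aleatoric share: {aleatoric / total:.2f}")
```

With equal numbers of replicates per setting and population variances throughout, the two parts add up to the total exactly. Because of the finite number of replicates, the variance of the sample means also picks up a small share of the seed noise, so the epistemic part is slightly overestimated in this simple form.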

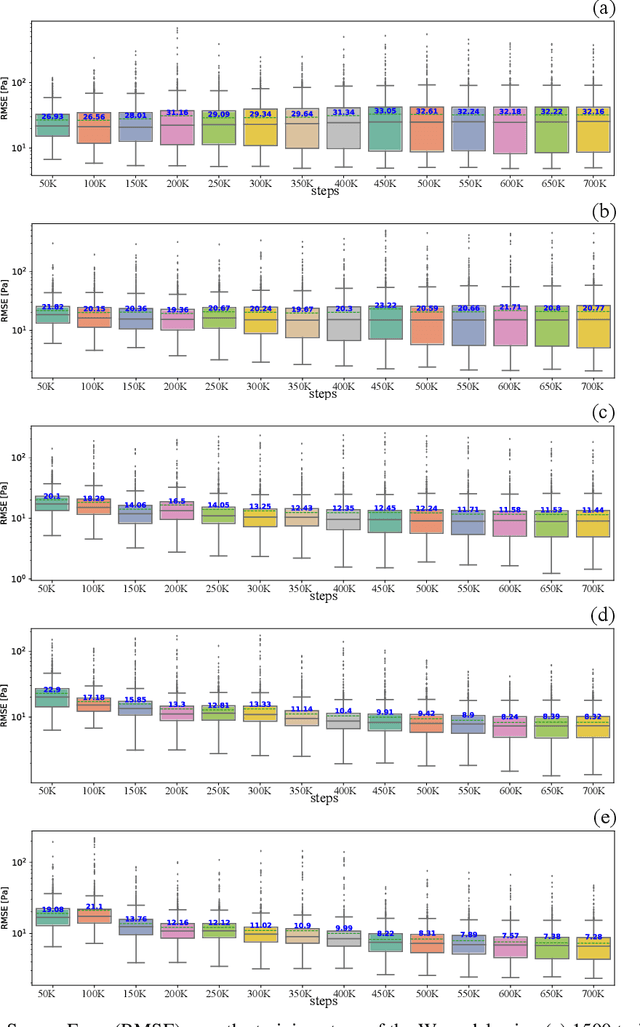

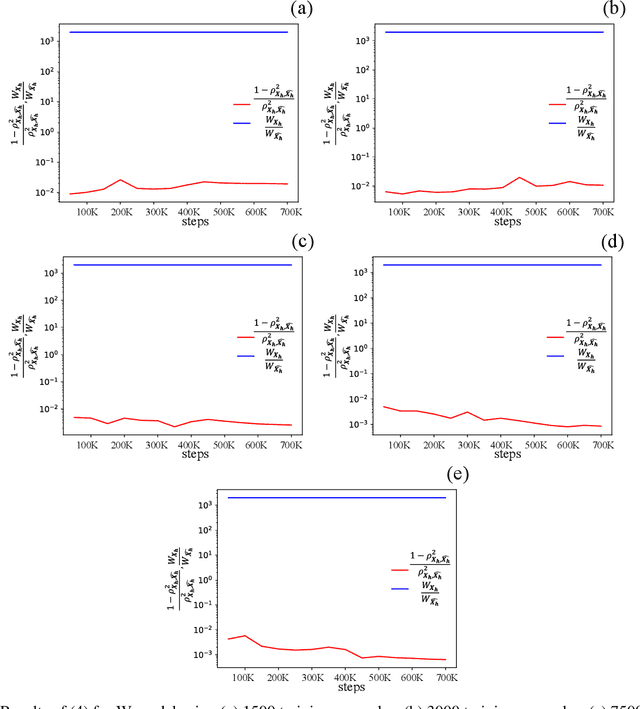

Progressive reduced order modeling: empowering data-driven modeling with selective knowledge transfer

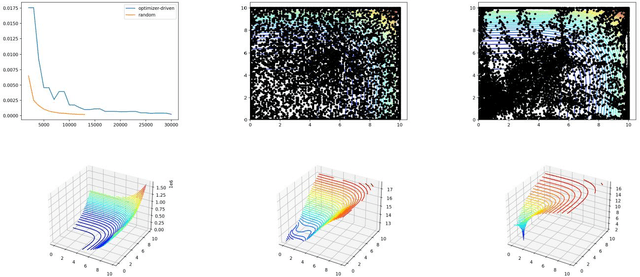

Oct 04, 2023

Abstract: Data-driven modeling can suffer from a constant demand for data, which reduces accuracy and makes it impractical for engineering applications where information is costly and scarce. To address this challenge, we propose a progressive reduced order modeling framework that minimizes data requirements and makes data-driven modeling more practical. Our approach selectively transfers knowledge from previously trained models through gates, similar to how humans selectively use valuable knowledge while ignoring irrelevant information. By filtering relevant information from previous models, we can create a surrogate model with minimal turnaround time and a smaller training set that can still achieve high accuracy. We have tested our framework in several cases, including transport in porous media, gravity-driven flow, and finite deformation in hyperelastic materials. Our results illustrate that retaining information from previous models and utilizing a valuable portion of that knowledge can significantly improve the accuracy of the current model. We have demonstrated the importance of progressive knowledge transfer and its impact on model accuracy with reduced training samples. For instance, our framework with four parent models outperforms the no-parent counterpart trained on nine times as much data. Our research unlocks data-driven modeling's potential for practical engineering applications by mitigating the data scarcity issue. Our proposed framework is a significant step toward more efficient and cost-effective data-driven modeling, fostering advancements across various fields.

Predictive Scale-Bridging Simulations through Active Learning

Sep 20, 2022

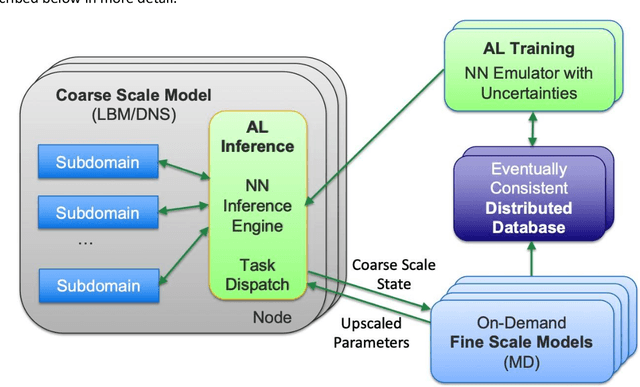

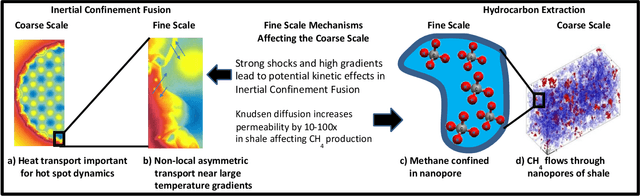

Abstract: Throughout computational science, there is a growing need to utilize the continual improvements in raw computational horsepower to achieve greater physical fidelity through scale-bridging rather than through brute-force increases in the number of mesh elements. For instance, quantitative predictions of transport in nanoporous media, critical to hydrocarbon extraction from tight shale formations, are impossible without accounting for molecular-level interactions. Similarly, inertial confinement fusion simulations rely on numerical diffusion to simulate molecular effects such as non-local transport and mixing without truly accounting for molecular interactions. With these two disparate applications in mind, we develop a novel capability which uses an active learning approach to optimize the use of local fine-scale simulations for informing coarse-scale hydrodynamics. Our approach addresses three challenges: forecasting the continuum coarse-scale trajectory to speculatively execute new fine-scale molecular dynamics calculations, dynamically updating the coarse scale from fine-scale calculations, and quantifying uncertainty in neural network models.

A framework for data-driven solution and parameter estimation of PDEs using conditional generative adversarial networks

May 27, 2021

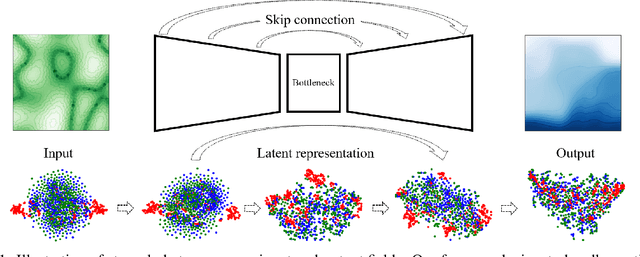

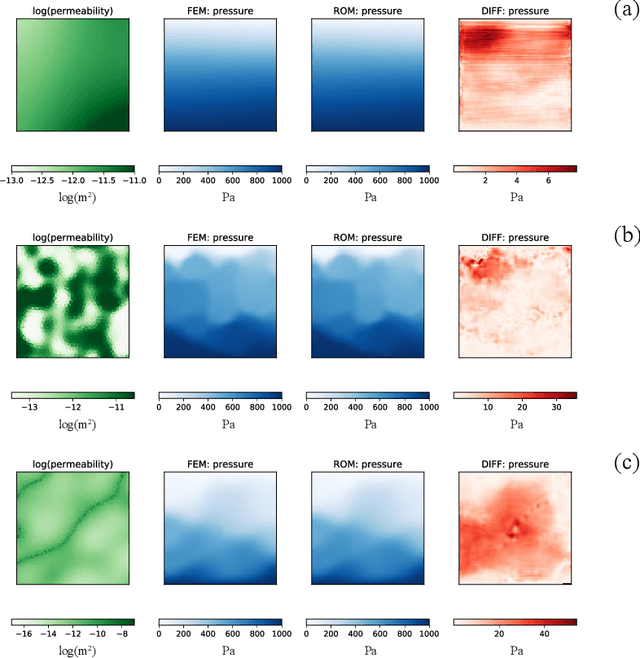

Abstract: This work is the first to employ and adapt the image-to-image translation concept based on conditional generative adversarial networks (cGAN) towards learning a forward and an inverse solution operator of partial differential equations (PDEs). Even though the proposed framework could be applied as a surrogate model for the solution of any PDE, here we focus on steady-state solutions of coupled hydro-mechanical processes in heterogeneous porous media. Strongly heterogeneous material properties, which translate to heterogeneous PDE coefficients and discontinuous features in the solutions, require specialized techniques for the forward and inverse solution of these problems. Additionally, parametrizing the spatially heterogeneous coefficients is excessively difficult with standard reduced order modeling techniques. In this work, we overcome these challenges by employing the image-to-image translation concept to learn the forward and inverse solution operators, using a U-Net generator and a patch-based discriminator. Our results show that the proposed data-driven reduced order model is competitive with state-of-the-art data-driven methods in accuracy, computational efficiency, and training time for both forward and inverse problems.

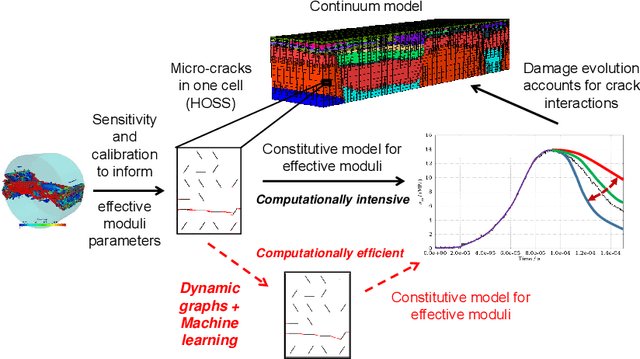

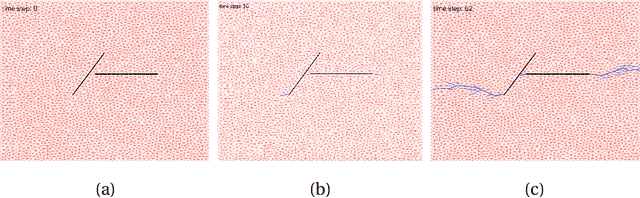

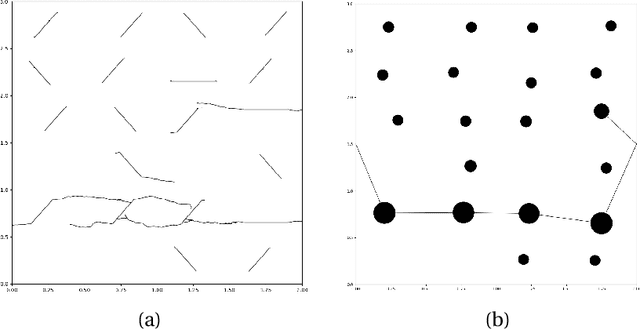

Learning to fail: Predicting fracture evolution in brittle materials using recurrent graph convolutional neural networks

Oct 14, 2018

Abstract: Understanding dynamic fracture propagation is essential to predicting how brittle materials fail. Various mathematical models and computational applications have been developed to predict fracture evolution and coalescence, including finite-discrete element methods such as the Hybrid Optimization Software Suite (HOSS). While such methods achieve high-fidelity results, they can be computationally prohibitive: a single simulation takes hours to run, and thousands of simulations are required for a statistically meaningful ensemble. We propose a machine learning approach that, once trained on data from HOSS simulations, can predict fracture growth statistics within seconds. Our method uses deep learning, exploiting the capabilities of a graph convolutional network to recognize features of the fracturing material, along with a recurrent neural network to model the evolution of these features. In this way, we simultaneously generate predictions for qualitatively distinct material properties. Our prediction for total damage in a coalesced fracture, at the final simulation time step, is within 3% of its actual value, and our prediction for total length of a coalesced fracture is within 2%. We also develop a novel form of data augmentation that compensates for the modest size of our training data, and an ensemble learning approach that enables us to predict when the material fails, with a mean absolute error of approximately 15%.

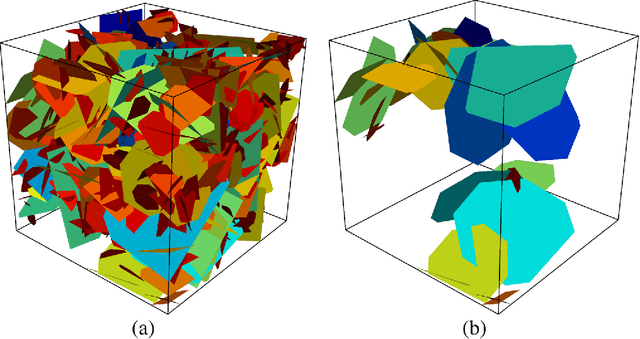

Machine learning for graph-based representations of three-dimensional discrete fracture networks

Jan 30, 2018

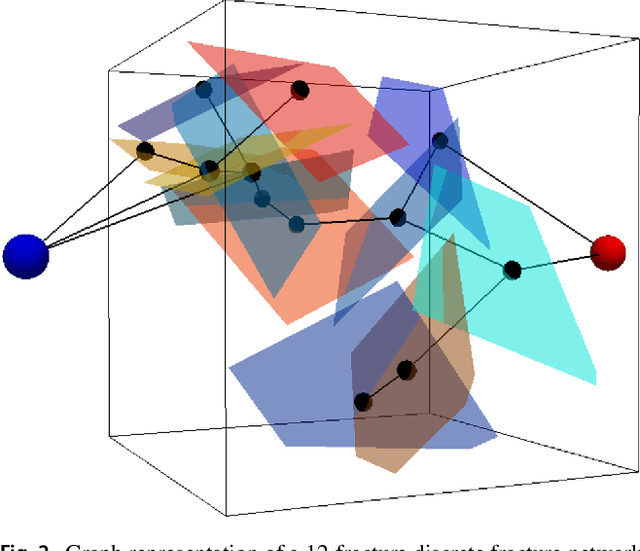

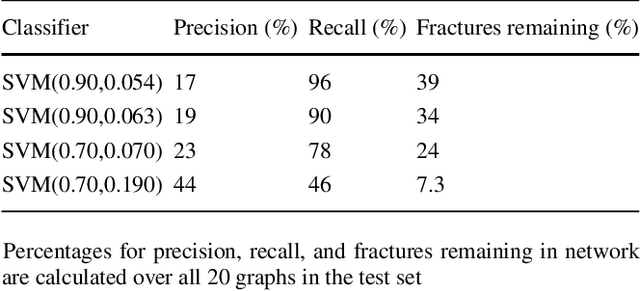

Abstract: Structural and topological information play a key role in modeling flow and transport through fractured rock in the subsurface. Discrete fracture network (DFN) computational suites such as dfnWorks are designed to simulate flow and transport in such porous media. Flow and transport calculations reveal that a small backbone of fractures exists, where most flow and transport occurs. Restricting the flowing fracture network to this backbone provides a significant reduction in the network's effective size. However, the particle tracking simulations needed to determine the reduction are computationally intensive. Such methods may be impractical for large systems or for robust uncertainty quantification of fracture networks, where thousands of forward simulations are needed to bound system behavior. In this paper, we develop an alternative network reduction approach to characterizing transport in DFNs, by combining graph theoretical and machine learning methods. We consider a graph representation where nodes signify fractures and edges denote their intersections. Using random forest and support vector machines, we rapidly identify a subnetwork that captures the flow patterns of the full DFN, based primarily on node centrality features in the graph. Our supervised learning techniques train on particle-tracking backbone paths found by dfnWorks, but run in negligible time compared to those simulations. We find that our predictions can reduce the network to approximately 20% of its original size, while still generating breakthrough curves consistent with those of the original network.
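The graph representation in this abstract, with fractures as nodes and intersections as edges, can be sketched in a few lines. The example below is only an illustration: the fracture IDs and intersection list are made up, the helper names `build_fracture_graph` and `degree_centrality` are hypothetical, and degree centrality is just one simple centrality feature (the abstract does not list which features the authors use). The classifier step (random forest or support vector machine) is omitted.

```python
from collections import defaultdict


def build_fracture_graph(intersections):
    """Build an undirected graph: nodes are fracture IDs, edges are intersections.

    `intersections` is an iterable of (fracture_a, fracture_b) pairs.
    Fractures that intersect nothing never appear in this list, so they
    are absent from the graph.
    """
    graph = defaultdict(set)
    for a, b in intersections:
        graph[a].add(b)
        graph[b].add(a)
    return dict(graph)


def degree_centrality(graph):
    """Fraction of the other fractures that each fracture intersects."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}


if __name__ == "__main__":
    # Made-up network of five fractures; fracture 1 intersects three others.
    intersections = [(0, 1), (1, 2), (1, 3), (3, 4)]
    graph = build_fracture_graph(intersections)
    for fracture, score in sorted(degree_centrality(graph).items()):
        print(fracture, score)
```

In a supervised setting such as the one described, per-node features like these would form the input rows of the classifier, with labels taken from whether each fracture lies on a particle-tracking backbone path.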

* Computational Geosciences (2018)
