Frank Allgöwer

Localization and path following for an autonomous e-scooter

May 08, 2025

Abstract:In order to mitigate economic, ecological, and societal challenges in electric scooter (e-scooter) sharing systems, we develop an autonomous e-scooter prototype. Our vision is to design a fully autonomous prototype that can find its way to the next parking spot, high-demand area, or charging station. In this work, we propose a path following solution to enable localization and navigation in an urban environment with a provided path to follow. We design a closed-loop architecture that solves the localization and path following problem while allowing the e-scooter to maintain its balance with a previously developed reaction wheel mechanism. Our approach facilitates state and input constraints, e.g., adhering to the path width, while remaining executable on a Raspberry Pi 5. We demonstrate the efficacy of our approach in a real-world experiment on our prototype.

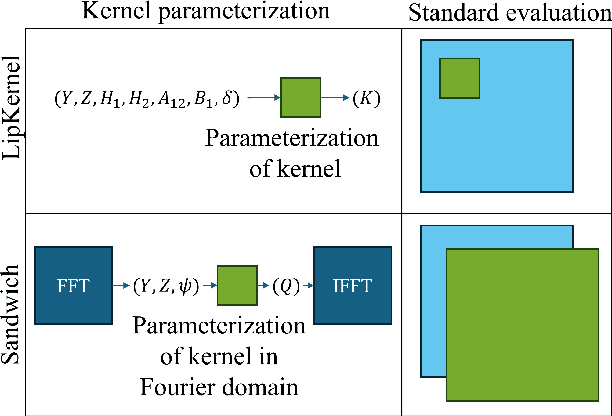

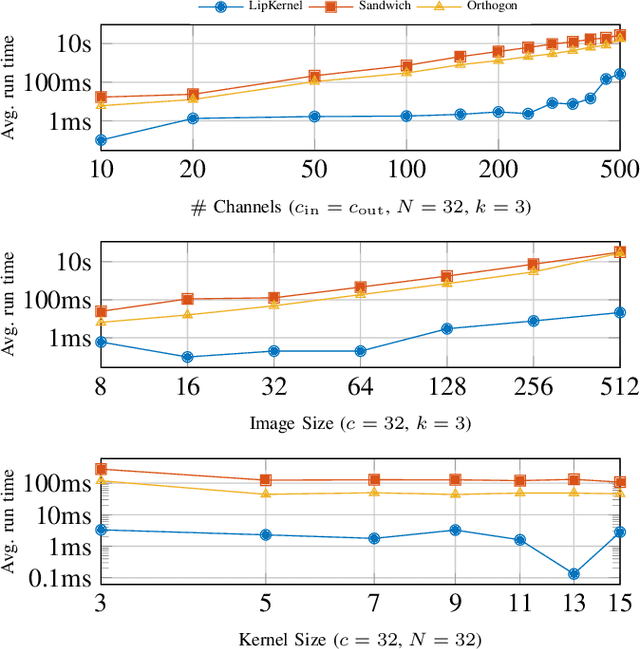

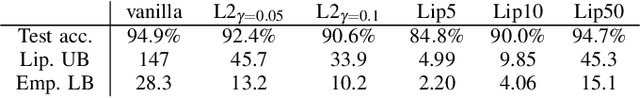

LipKernel: Lipschitz-Bounded Convolutional Neural Networks via Dissipative Layers

Oct 29, 2024

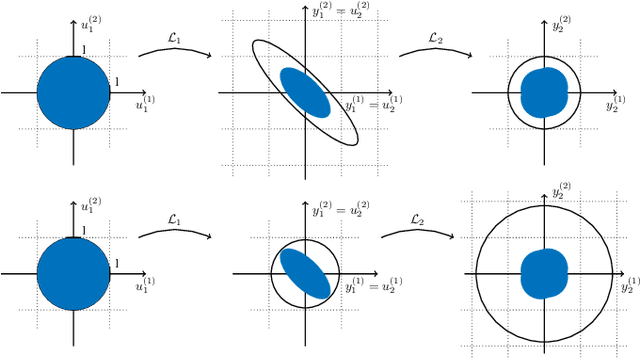

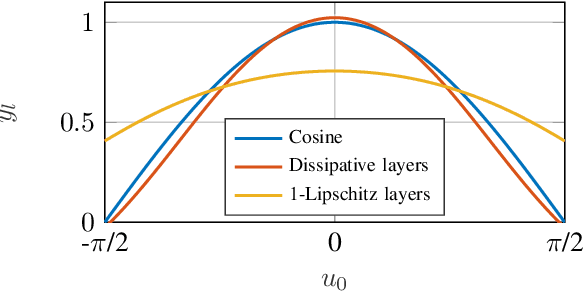

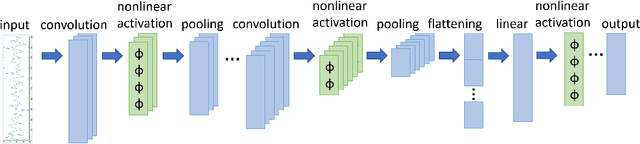

Abstract:We propose a novel layer-wise parameterization for convolutional neural networks (CNNs) that includes built-in robustness guarantees by enforcing a prescribed Lipschitz bound. Each layer in our parameterization is designed to satisfy a linear matrix inequality (LMI), which in turn implies dissipativity with respect to a specific supply rate. Collectively, these layer-wise LMIs ensure Lipschitz boundedness for the input-output mapping of the neural network, yielding a more expressive parameterization than through spectral bounds or orthogonal layers. Our new method LipKernel directly parameterizes dissipative convolution kernels using a 2-D Roesser-type state space model. This means that the convolutional layers are given in standard form after training and can be evaluated without computational overhead. In numerical experiments, we show that the run-time using our method is orders of magnitude faster than state-of-the-art Lipschitz-bounded networks that parameterize convolutions in the Fourier domain, making our approach particularly attractive for improving robustness of learning-based real-time perception or control in robotics, autonomous vehicles, or automation systems. We focus on CNNs, and in contrast to previous works, our approach accommodates a wide variety of layers typically used in CNNs, including 1-D and 2-D convolutional layers, maximum and average pooling layers, as well as strided and dilated convolutions and zero padding. However, our approach naturally extends beyond CNNs as we can incorporate any layer that is incrementally dissipative.
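For context on the "spectral bounds" that the abstract compares against, the following is a minimal sketch of the naive baseline, not of LipKernel itself: for a feedforward network with 1-Lipschitz activations, the product of the layers' spectral norms upper-bounds the $\ell_2$ Lipschitz constant. The function names and the example weights are illustrative assumptions.

```python
import math

def spectral_norm(W, iters=500):
    """Estimate the largest singular value of W by power iteration on W^T W.

    Assumption: the start vector is not orthogonal to the top singular
    vector. The estimate approaches the true value from below, so it is
    only a valid bound once the iteration has converged.
    """
    n = len(W[0])
    v = [1.0 / math.sqrt(n)] * n  # generic start vector
    for _ in range(iters):
        Wv = [sum(w * a for w, a in zip(row, v)) for row in W]
        WtWv = [sum(W[i][j] * Wv[i] for i in range(len(W))) for j in range(n)]
        norm = math.sqrt(sum(a * a for a in WtWv))
        if norm == 0.0:
            return 0.0  # iterate collapsed (zero matrix or unlucky start)
        v = [a / norm for a in WtWv]
    Wv = [sum(w * a for w, a in zip(row, v)) for row in W]
    return math.sqrt(sum(a * a for a in Wv))

def naive_lipschitz_bound(weights):
    """Product of per-layer spectral norms (valid for 1-Lipschitz activations)."""
    bound = 1.0
    for W in weights:
        bound *= spectral_norm(W)
    return bound

# Two hypothetical weight matrices with spectral norms 4 and 2.
weights = [[[3.0, 0.0], [0.0, 4.0]], [[0.0, 2.0], [1.0, 0.0]]]
bound = naive_lipschitz_bound(weights)
```

This product is typically conservative because it ignores how consecutive layers interact, which is the gap that LMI-based parameterizations aim to narrow.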

End-to-end guarantees for indirect data-driven control of bilinear systems with finite stochastic data

Sep 26, 2024

Abstract:In this paper we propose an end-to-end algorithm for indirect data-driven control for bilinear systems with stability guarantees. We consider the case where the collected i.i.d. data is affected by probabilistic noise with possibly unbounded support and leverage tools from statistical learning theory to derive finite sample identification error bounds. To this end, we solve the bilinear identification problem by solving a set of linear and affine identification problems, by a particular choice of a control input during the data collection phase. We provide a priori as well as data-dependent finite sample identification error bounds on the individual matrices as well as ellipsoidal bounds, both of which are structurally suitable for control. Further, we integrate the structure of the derived identification error bounds in a robust controller design to obtain an exponentially stable closed-loop. By means of an extensive numerical study we showcase the interplay between the controller design and the derived identification error bounds. Moreover, we note appealing connections of our results to indirect data-driven control of general nonlinear systems through Koopman operator theory and discuss how our results may be applied in this setup.
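The idea of splitting bilinear identification into linear and affine subproblems can be illustrated with a noise-free scalar toy example. This is a sketch under assumptions that are not taken from the paper: a discrete-time model $x^+ = a x + b u + n x u$, freely chosen sample states, and the hypothetical helper names below. Holding $u = 0$ gives a linear problem in $a$; holding $u = 1$ gives an affine problem with slope $a + n$ and offset $b$.

```python
def fit_linear(xs, ys):
    """Least-squares fit of y = c * x."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def fit_affine(xs, ys):
    """Least-squares fit of y = c * x + d, returned as (c, d)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    c = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return c, my - c * mx

def identify_bilinear(step, xs):
    """Recover (a, b, n) of x+ = a*x + b*u + n*x*u from two experiments."""
    a = fit_linear(xs, [step(x, 0.0) for x in xs])             # u = 0: linear in x
    slope, b = fit_affine(xs, [step(x, 1.0) for x in xs])      # u = 1: affine in x
    return a, b, slope - a

# Hypothetical ground-truth system used only to generate data.
def true_step(x, u):
    return 0.8 * x + 0.5 * u - 0.3 * x * u

a_hat, b_hat, n_hat = identify_bilinear(true_step, [-1.0, 0.5, 2.0, 3.0])
```

With noisy data the same two regressions would return estimates with errors, which is where finite sample error bounds become relevant.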

Lipschitz constant estimation for general neural network architectures using control tools

May 02, 2024

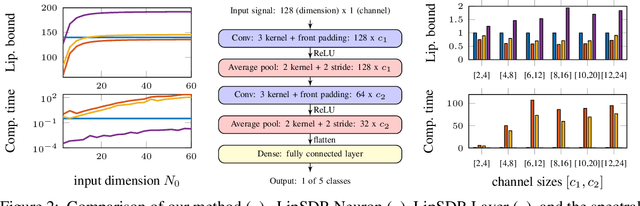

Abstract:This paper is devoted to the estimation of the Lipschitz constant of neural networks using semidefinite programming. For this purpose, we interpret neural networks as time-varying dynamical systems, where the $k$-th layer corresponds to the dynamics at time $k$. A key novelty with respect to prior work is that we use this interpretation to exploit the series interconnection structure of neural networks with a dynamic programming recursion. Nonlinearities, such as activation functions and nonlinear pooling layers, are handled with integral quadratic constraints. If the neural network contains signal processing layers (convolutional or state space model layers), we realize them as 1-D/2-D/N-D systems and exploit this structure as well. We distinguish ourselves from related work on Lipschitz constant estimation by more extensive structure exploitation (scalability) and a generalization to a large class of common neural network architectures. To show the versatility and computational advantages of our method, we apply it to different neural network architectures trained on MNIST and CIFAR-10.
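The interpretation of a network as a time-varying dynamical system can be sketched as a recursion $x_{k+1} = \phi_k(W_k x_k + b_k)$, where the layer index $k$ plays the role of time. The code below only illustrates this viewpoint for fully connected layers; the helper names and the example weights are assumptions, and no Lipschitz estimation is performed.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def identity_map(v):
    return list(v)

def affine(W, b, x):
    """Compute W x + b for a matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def simulate(layers, x0):
    """Run the network as the recursion x_{k+1} = phi_k(W_k x_k + b_k).

    Returns the full state trajectory [x_0, x_1, ..., x_L], one state per layer.
    """
    trajectory = [list(x0)]
    for W, b, phi in layers:
        trajectory.append(phi(affine(W, b, trajectory[-1])))
    return trajectory

# Hypothetical two-layer network: the "dynamics" change with k.
layers = [
    ([[1.0, -1.0], [0.5, 2.0]], [0.0, -1.0], relu),
    ([[1.0, 1.0]], [0.5], identity_map),
]
trajectory = simulate(layers, [1.0, 2.0])
```

Here `trajectory[-1]` is the network output, and the intermediate entries are the states that a layer-by-layer recursion can exploit.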

Collision Avoidance Safety Filter for an Autonomous E-Scooter using Ultrasonic Sensors

Mar 22, 2024

Abstract:In this paper, we propose a collision avoidance safety filter for autonomous electric scooters to enable safe operation of such vehicles in pedestrian areas. In particular, we employ multiple low-cost ultrasonic sensors to detect a wide range of possible obstacles in front of the e-scooter. Based on possibly faulty distance measurements, we design a filter to mitigate measurement noise and missing values as well as a gain-scheduled controller to limit the velocity commanded to the e-scooter when required due to imminent collisions. The proposed controller structure is able to prevent collisions with unknown obstacles by deploying a reduced safe velocity ensuring a sufficiently large safety distance. The collision avoidance approach is designed such that it may be easily deployed in similar applications of general micromobility vehicles. The effectiveness of our proposed safety filter is demonstrated in real-world experiments.
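The two ingredients named in the abstract, a measurement filter and a distance-dependent velocity limit, can be sketched as follows. This is a simplified stand-in rather than the paper's filter or gain-scheduled controller: the sliding-window median, the linear velocity schedule, and all thresholds (`max_range_m`, `d_stop`, `d_free`, `v_max`) are illustrative assumptions.

```python
from collections import deque
from statistics import median

class DistanceFilter:
    """Sliding-window median over valid ultrasonic readings (hypothetical helper)."""

    def __init__(self, window=5, max_range_m=4.0):
        self.readings = deque(maxlen=window)
        self.max_range_m = max_range_m

    def update(self, reading_m):
        # None or out-of-range values are treated as missing and skipped,
        # so the estimate is held at the median of the last valid readings.
        if reading_m is not None and 0.0 < reading_m <= self.max_range_m:
            self.readings.append(reading_m)
        # Before any valid reading arrives, report 0.0 so the limiter stops.
        return median(self.readings) if self.readings else 0.0

def safe_velocity(distance_m, v_cmd, d_stop=0.5, d_free=3.0, v_max=1.5):
    """Cap the commanded velocity with a limit scheduled on obstacle distance."""
    if distance_m <= d_stop:
        limit = 0.0
    elif distance_m >= d_free:
        limit = v_max
    else:
        # Linear ramp between full stop and unrestricted driving.
        limit = v_max * (distance_m - d_stop) / (d_free - d_stop)
    return max(0.0, min(v_cmd, limit))
```

A typical loop would call `update` once per sensor sample and pass the result to `safe_velocity` together with the velocity requested by the path follower. Note that this sketch never expires old readings, which a real deployment would need to handle.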

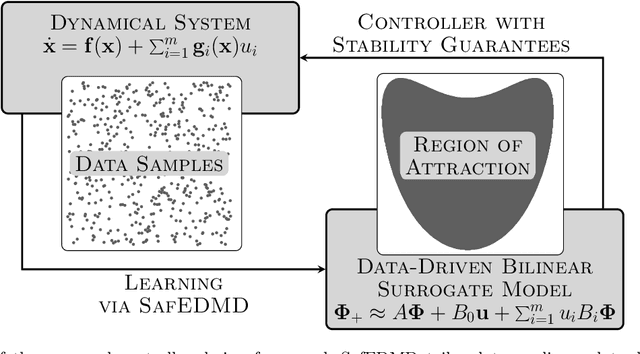

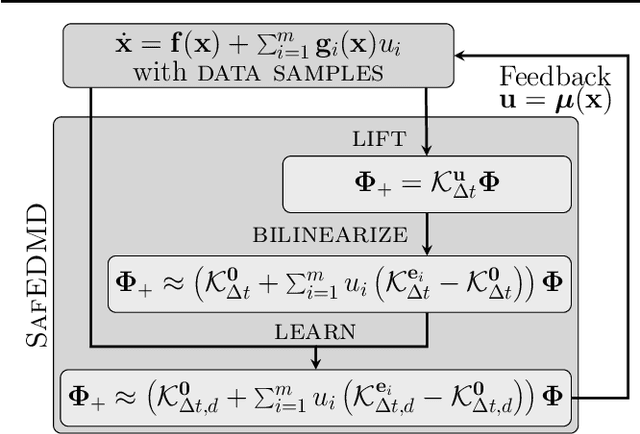

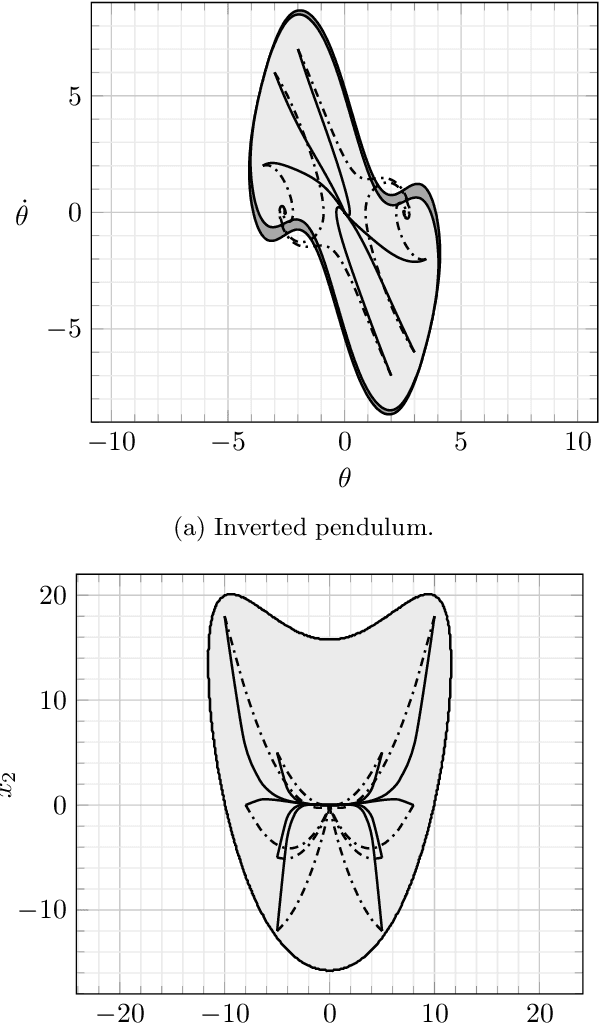

SafEDMD: A certified learning architecture tailored to data-driven control of nonlinear dynamical systems

Feb 05, 2024

Abstract:The Koopman operator serves as the theoretical backbone for machine learning of dynamical control systems, where the operator is heuristically approximated by extended dynamic mode decomposition (EDMD). In this paper, we propose Stability- and certificate-oriented EDMD (SafEDMD): a novel EDMD-based learning architecture which comes along with rigorous certificates, resulting in a reliable surrogate model generated in a data-driven fashion. To ensure trustworthiness of SafEDMD, we derive proportional error bounds, which vanish at the origin and are tailored for control tasks, leading to certified controller design based on semi-definite programming. We illustrate the developed machinery by means of several benchmark examples and highlight the advantages over state-of-the-art methods.
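As background, plain EDMD (not SafEDMD, and without any certificates) fits a matrix $K$ so that $\psi(x^+) \approx K \psi(x)$ in the least-squares sense for a chosen dictionary $\psi$. The sketch below assumes a scalar autonomous system and the two-element dictionary $\psi(x) = (x, x^2)$; both choices are illustrative.

```python
def psi(x):
    """Hypothetical dictionary of observables: (x, x^2)."""
    return [x, x * x]

def edmd(xs, xs_next):
    """Least-squares fit of K in psi(x+) ~ K psi(x) via the normal equations."""
    G = [[0.0, 0.0], [0.0, 0.0]]  # sum of psi(x) psi(x)^T
    A = [[0.0, 0.0], [0.0, 0.0]]  # sum of psi(x+) psi(x)^T
    for x, xn in zip(xs, xs_next):
        p, pn = psi(x), psi(xn)
        for i in range(2):
            for j in range(2):
                G[i][j] += p[i] * p[j]
                A[i][j] += pn[i] * p[j]
    det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
    if abs(det) < 1e-12:
        raise ValueError("data is not rich enough for this dictionary")
    G_inv = [[G[1][1] / det, -G[0][1] / det], [-G[1][0] / det, G[0][0] / det]]
    # K = A G^{-1}
    return [[sum(A[i][k] * G_inv[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

# Data from the toy system x+ = 0.9 x, for which the dictionary is invariant.
xs = [-1.0, -0.5, 0.5, 1.0, 2.0]
K = edmd(xs, [0.9 * x for x in xs])
```

For this toy system the lifted dynamics are exactly linear, so `K` comes out close to `[[0.9, 0], [0, 0.81]]`. For general nonlinear systems the fit is only approximate, which is the gap that error bounds are meant to quantify.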

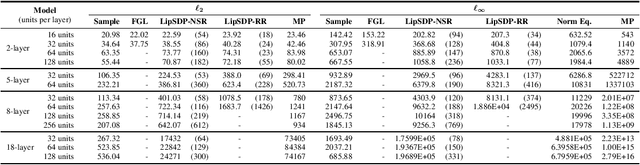

Novel Quadratic Constraints for Extending LipSDP beyond Slope-Restricted Activations

Jan 25, 2024

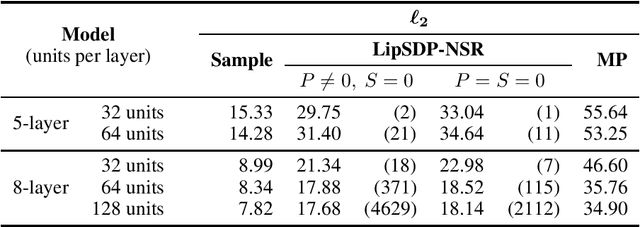

Abstract:Recently, semidefinite programming (SDP) techniques have shown great promise in providing accurate Lipschitz bounds for neural networks. Specifically, the LipSDP approach (Fazlyab et al., 2019) has received much attention and provides the least conservative Lipschitz upper bounds that can be computed with polynomial time guarantees. However, one main restriction of LipSDP is that its formulation requires the activation functions to be slope-restricted on $[0,1]$, preventing its further use for more general activation functions such as GroupSort, MaxMin, and Householder. One can rewrite MaxMin activations for example as residual ReLU networks. However, a direct application of LipSDP to the resultant residual ReLU networks is conservative and even fails in recovering the well-known fact that the MaxMin activation is 1-Lipschitz. Our paper bridges this gap and extends LipSDP beyond slope-restricted activation functions. To this end, we provide novel quadratic constraints for GroupSort, MaxMin, and Householder activations via leveraging their underlying properties such as sum preservation. Our proposed analysis is general and provides a unified approach for estimating $\ell_2$ and $\ell_\infty$ Lipschitz bounds for a rich class of neural network architectures, including non-residual and residual neural networks and implicit models, with GroupSort, MaxMin, and Householder activations. Finally, we illustrate the utility of our approach with a variety of experiments and show that our proposed SDPs generate less conservative Lipschitz bounds in comparison to existing approaches.
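Two of the properties mentioned above, sum preservation and the fact that MaxMin is 1-Lipschitz, can be checked numerically on an example. The sketch assumes the common convention that MaxMin sorts each consecutive pair as (max, min); the sample vectors are arbitrary, and a single check is of course not a proof.

```python
import math

def maxmin(x):
    """MaxMin activation: reorder each consecutive pair as (max, min)."""
    if len(x) % 2 != 0:
        raise ValueError("MaxMin expects an even number of inputs")
    out = []
    for i in range(0, len(x), 2):
        a, b = x[i], x[i + 1]
        out.extend([max(a, b), min(a, b)])
    return out

def l2_dist(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

x = [0.3, -1.2, 2.0, 2.5]
y = [-0.7, 0.4, 1.9, -3.0]

# Sum preservation: the activation only permutes entries within each pair.
sum_preserved = abs(sum(maxmin(x)) - sum(x)) < 1e-12

# 1-Lipschitz check for this pair of inputs in the l2 norm.
ratio = l2_dist(maxmin(x), maxmin(y)) / l2_dist(x, y)
```

Here `ratio` is at most 1, consistent with the 1-Lipschitz property that a direct LipSDP analysis of the residual ReLU rewrite fails to recover.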

Lipschitz-bounded 1D convolutional neural networks using the Cayley transform and the controllability Gramian

Mar 20, 2023

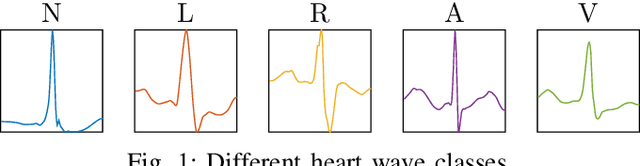

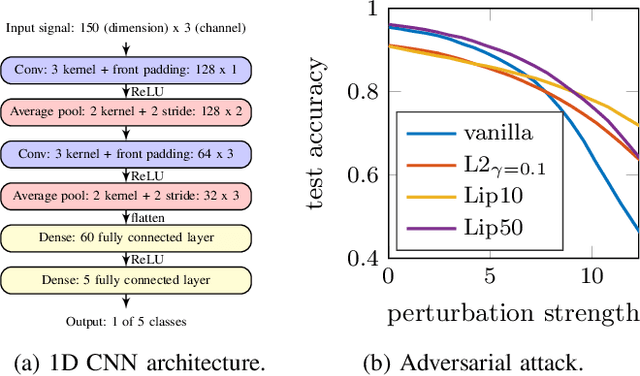

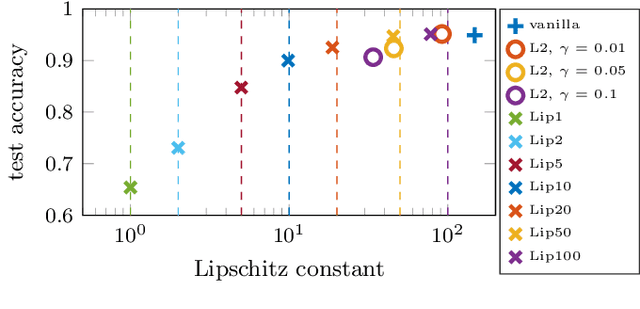

Abstract:We establish a layer-wise parameterization for 1D convolutional neural networks (CNNs) with built-in end-to-end robustness guarantees. Herein, we use the Lipschitz constant of the input-output mapping characterized by a CNN as a robustness measure. We base our parameterization on the Cayley transform that parameterizes orthogonal matrices and the controllability Gramian for the state space representation of the convolutional layers. The proposed parameterization by design fulfills linear matrix inequalities that are sufficient for Lipschitz continuity of the CNN, which further enables unconstrained training of Lipschitz-bounded 1D CNNs. Finally, we train Lipschitz-bounded 1D CNNs for the classification of heart arrhythmia data and show their improved robustness.
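The role of the Cayley transform can be illustrated in its basic square form: any unconstrained matrix $Y$ yields a skew-symmetric $A = Y - Y^\top$, and $Q = (I - A)(I + A)^{-1}$ is then orthogonal, which is what makes unconstrained training possible. The sketch below covers only this building block, not the paper's full layer parameterization; the helper names and the sample matrix are assumptions.

```python
def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def transpose(A):
    return [list(row) for row in zip(*A)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def inverse(A):
    """Gauss-Jordan inverse with partial pivoting."""
    n = len(A)
    eye = identity(n)
    aug = [[float(v) for v in A[i]] + eye[i] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def cayley(Y):
    """Map a free square matrix Y to an orthogonal Q = (I - A)(I + A)^{-1}."""
    n = len(Y)
    A = [[Y[i][j] - Y[j][i] for j in range(n)] for i in range(n)]  # skew part
    eye = identity(n)
    i_minus = [[eye[i][j] - A[i][j] for j in range(n)] for i in range(n)]
    i_plus = [[eye[i][j] + A[i][j] for j in range(n)] for i in range(n)]
    return matmul(i_minus, inverse(i_plus))

# Arbitrary unconstrained parameters.
Y = [[0.5, -1.0, 2.0], [0.3, 0.1, -0.7], [1.5, 0.2, 0.9]]
Q = cayley(Y)
QtQ = matmul(transpose(Q), Q)
```

`QtQ` equals the identity up to rounding, regardless of the entries of `Y`, because $I + A$ is always invertible for skew-symmetric $A$.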

Convolutional Neural Networks as 2-D systems

Mar 06, 2023

Abstract:This paper introduces a novel representation of convolutional neural networks (CNNs) in terms of 2-D dynamical systems. To this end, the usual description of convolutional layers with convolution kernels, i.e., the impulse responses of linear filters, is realized in state space as a linear time-invariant 2-D system. The overall convolutional neural network composed of convolutional layers and nonlinear activation functions is then viewed as a 2-D version of a Lur'e system, i.e., a linear dynamical system interconnected with static nonlinear components. One benefit of this 2-D Lur'e system perspective on CNNs is that we can use robust control theory much more efficiently for Lipschitz constant estimation than previously possible.

Lipschitz constant estimation for 1D convolutional neural networks

Nov 28, 2022

Abstract:In this work, we propose a dissipativity-based method for Lipschitz constant estimation of 1D convolutional neural networks (CNNs). In particular, we analyze the dissipativity properties of convolutional, pooling, and fully connected layers making use of incremental quadratic constraints for nonlinear activation functions and pooling operations. The Lipschitz constant of the concatenation of these mappings is then estimated by solving a semidefinite program which we derive from dissipativity theory. To make our method as efficient as possible, we take the structure of convolutional layers into account realizing these finite impulse response filters as causal dynamical systems in state space and carrying out the dissipativity analysis for the state space realizations. The examples we provide show that our Lipschitz bounds are advantageous in terms of accuracy and scalability.
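Realizing a finite impulse response filter as a causal state space system can be sketched for a single-channel 1D kernel. The state stores the last $r$ inputs (a shift register), and the output is a linear function of state and current input. The sketch assumes zero initial state and the convolution convention $y_t = \sum_i h_i u_{t-i}$; the function names and data are illustrative.

```python
def fir_direct(h, u):
    """Causal convolution y[t] = sum_i h[i] * u[t - i] with zero padding."""
    return [sum(h[i] * u[t - i] for i in range(len(h)) if t - i >= 0)
            for t in range(len(u))]

def fir_state_space(h, u):
    """Same filter as a state space recursion.

    State x[t] = (u[t-1], ..., u[t-r]) with r = len(h) - 1:
        y[t]   = h[0] * u[t] + sum_i h[i+1] * x[t][i]
        x[t+1] = (u[t], x[t][0], ..., x[t][r-2])
    """
    r = len(h) - 1
    x = [0.0] * r  # zero initial state
    y = []
    for u_t in u:
        y.append(h[0] * u_t + sum(h[i + 1] * x[i] for i in range(r)))
        x = ([u_t] + x[:-1]) if r > 0 else []  # shift the input into the state
    return y

h = [0.5, -1.0, 0.25]
u = [1.0, 2.0, 0.0, -1.0, 3.0]
y_direct = fir_direct(h, u)
y_state = fir_state_space(h, u)
```

Both functions return the same sequence. The state dimension grows with the kernel length rather than with the signal length, which is the structure a state space analysis can exploit.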
