Feng Qiu

Retrieval Augmented Comic Image Generation

Jun 14, 2025

Abstract: We present RaCig, a novel system for generating comic-style image sequences with consistent characters and expressive gestures. RaCig addresses two key challenges: (1) maintaining character identity and costume consistency across frames, and (2) producing diverse and vivid character gestures. Our approach integrates a retrieval-based character assignment module, which aligns characters in textual prompts with reference images, and a regional character injection mechanism that embeds character features into specified image regions. Experimental results demonstrate that RaCig effectively generates engaging comic narratives with coherent characters and dynamic interactions. The source code will be publicly available to support further research in this area.

7ABAW-Compound Expression Recognition via Curriculum Learning

Mar 11, 2025

Abstract: With the advent of deep learning, expression recognition has made significant advancements. However, due to the limited availability of annotated compound expression datasets and the subtle variations of compound expressions, Compound Emotion Recognition (CE) still holds considerable potential for exploration. To advance this task, the 7th Affective Behavior Analysis in-the-wild (ABAW) competition introduces the Compound Expression Challenge based on C-EXPR-DB, a limited dataset without labels. In this paper, we present a curriculum learning-based framework that initially trains the model on single-expression tasks and subsequently incorporates multi-expression data. This design ensures that our model first masters the fundamental features of basic expressions before being exposed to the complexities of compound emotions. Specifically, our designs can be summarized as follows: 1) Single-Expression Pre-training: The model is first trained on datasets containing single expressions to learn the foundational facial features associated with basic emotions. 2) Dynamic Compound Expression Generation: Given the scarcity of annotated compound expression datasets, we employ CutMix and Mixup techniques on the original single-expression images to create hybrid images exhibiting characteristics of multiple basic emotions. 3) Incremental Multi-Expression Integration: After performing well on single-expression tasks, the model is progressively exposed to multi-expression data, allowing the model to adapt to the complexity and variability of compound expressions. The official results indicate that our method achieves the best performance in this competition track with an F-score of 0.6063. Our code is released at https://github.com/YenanLiu/ABAW7th.
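The hybrid-image step in the abstract above can be illustrated with a minimal sketch of the two generic augmentations it names. This is not the authors' implementation: the function names, the nested-list image format, and the fixed `lam` and `box` arguments are assumptions made for illustration (in practice the mixing weight and box are usually sampled at random).

```python
def mixup(img_a, img_b, label_a, label_b, lam):
    """Mixup: blend two images pixel-wise and blend their labels with the same weight."""
    mixed = [[lam * a + (1 - lam) * b for a, b in zip(row_a, row_b)]
             for row_a, row_b in zip(img_a, img_b)]
    label = [lam * la + (1 - lam) * lb for la, lb in zip(label_a, label_b)]
    return mixed, label


def cutmix(img_a, img_b, label_a, label_b, box):
    """CutMix: paste a rectangle of img_b into img_a.

    box is (top, left, height, width) with non-negative top/left inside the image.
    Labels are weighted by the fraction of pixels each source image contributes.
    """
    top, left, height, width = box
    rows, cols = len(img_a), len(img_a[0])
    bottom, right = min(top + height, rows), min(left + width, cols)
    mixed = [row[:] for row in img_a]  # copy so img_a is not modified
    for r in range(top, bottom):
        for c in range(left, right):
            mixed[r][c] = img_b[r][c]
    pasted = (bottom - top) * (right - left)
    lam = 1 - pasted / (rows * cols)  # share of pixels still coming from img_a
    label = [lam * la + (1 - lam) * lb for la, lb in zip(label_a, label_b)]
    return mixed, label
```

With one-hot labels for two basic expressions, either function returns a soft label whose entries give the share of each expression in the hybrid image.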

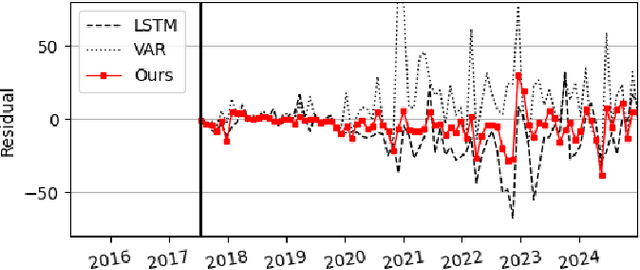

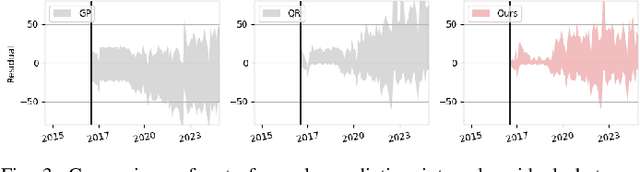

Global-Decision-Focused Neural ODEs for Proactive Grid Resilience Management

Feb 25, 2025

Abstract: Extreme hazard events such as wildfires and hurricanes increasingly threaten power systems, causing widespread outages and disrupting critical services. Recently, predict-then-optimize approaches have gained traction in grid operations, where system functionality forecasts are first generated and then used as inputs for downstream decision-making. However, this two-stage method often results in a misalignment between prediction and optimization objectives, leading to suboptimal resource allocation. To address this, we propose predict-all-then-optimize-globally (PATOG), a framework that integrates outage prediction with globally optimized interventions. At its core, our global-decision-focused (GDF) neural ODE model captures outage dynamics while optimizing resilience strategies in a decision-aware manner. Unlike conventional methods, our approach ensures spatially and temporally coherent decision-making, improving both predictive accuracy and operational efficiency. Experiments on synthetic and real-world datasets demonstrate significant improvements in outage prediction consistency and grid resilience.

Spatio-Temporal Conformal Prediction for Power Outage Data

Nov 26, 2024

Abstract: In recent years, increasingly unpredictable and severe global weather patterns have frequently caused long-lasting power outages. Building resilience, the ability to withstand, adapt to, and recover from major disruptions, has become crucial for the power industry. To enable rapid recovery, accurately predicting future outage numbers is essential. Rather than relying on simple point estimates, we analyze extensive quarter-hourly outage data and develop a graph conformal prediction method that delivers accurate prediction regions for outage numbers across the states for a time period. We demonstrate the effectiveness of this method through extensive numerical experiments in several states affected by extreme weather events that led to widespread outages.
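To make the contrast between a point estimate and a prediction region concrete, here is a minimal sketch of plain split conformal prediction with an absolute-residual score. It is the textbook baseline, not the graph-based method described in the abstract, and the function names and calibration data layout are assumptions for illustration.

```python
import math


def conformal_quantile(scores, alpha):
    """Return the ceil((n + 1) * (1 - alpha))-th smallest calibration score.

    If that rank exceeds n, there are too few calibration points for the
    requested coverage and the only valid interval is unbounded.
    """
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        return math.inf
    return sorted(scores)[rank - 1]


def prediction_interval(point_forecast, calib_preds, calib_truth, alpha=0.1):
    """Wrap a point forecast in an interval with target coverage 1 - alpha."""
    # Non-conformity score: absolute error on held-out calibration data.
    scores = [abs(y - p) for p, y in zip(calib_preds, calib_truth)]
    q = conformal_quantile(scores, alpha)
    return point_forecast - q, point_forecast + q
```

Under exchangeability of calibration and test points, the returned interval covers the true value with probability at least `1 - alpha`; spatio-temporal data such as outage counts generally violate that assumption, which is the kind of setting where a tailored method is needed.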

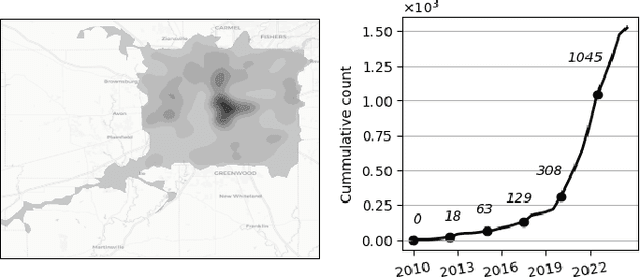

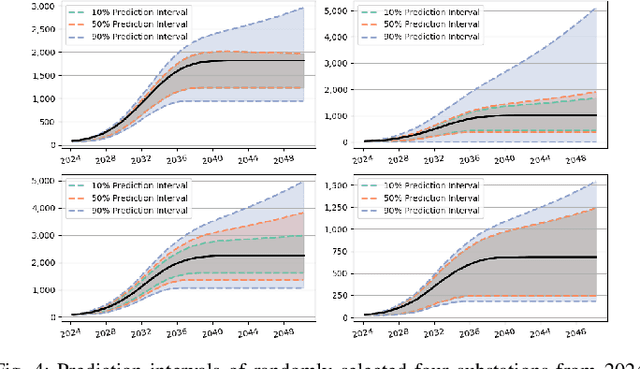

Hierarchical Spatio-Temporal Uncertainty Quantification for Distributed Energy Adoption

Nov 19, 2024

Abstract: The rapid deployment of distributed energy resources (DER) has introduced significant spatio-temporal uncertainties in power grid management, necessitating accurate multilevel forecasting methods. However, existing approaches often produce overly conservative uncertainty intervals at individual spatial units and fail to properly capture uncertainties when aggregating predictions across different spatial scales. This paper presents a novel hierarchical spatio-temporal model based on the conformal prediction framework to address these challenges. Our approach generates circuit-level DER growth predictions and efficiently aggregates them to the substation level while maintaining statistical validity through a tailored non-conformity score. Applied to a decade of DER installation data from a local utility network, our method demonstrates superior performance over existing approaches, particularly in reducing prediction interval widths while maintaining coverage.

Affective Behaviour Analysis via Progressive Learning

Jul 26, 2024

Abstract: Affective Behavior Analysis aims to develop emotionally intelligent technology that can recognize and respond to human emotions. To advance this, the 7th Affective Behavior Analysis in-the-wild (ABAW) competition establishes two tracks, i.e., the Multi-task Learning (MTL) Challenge and the Compound Expression (CE) Challenge, based on the Aff-Wild2 and C-EXPR-DB datasets. In this paper, we present our methods and experimental results for the two competition tracks. Specifically, our work can be summarized in the following four aspects: 1) To attain high-quality facial features, we train a Masked Autoencoder in a self-supervised manner. 2) We devise a temporal convergence module to capture the temporal information between video frames and explore the impact of window size and sequence length on each sub-task. 3) To facilitate the joint optimization of various sub-tasks, we explore how joint sub-task training and the fusion of features from individual tasks affect the performance of each task. 4) We utilize curriculum learning to transition the model from recognizing single expressions to recognizing compound expressions, thereby improving the accuracy of compound expression recognition. Extensive experiments demonstrate the superiority of our designs.

ECNet: Effective Controllable Text-to-Image Diffusion Models

Mar 27, 2024

Abstract: Conditional text-to-image diffusion models have garnered significant attention in recent years. However, the precision of these models is often compromised mainly for two reasons: ambiguous condition input and inadequate condition guidance from a single denoising loss. To address these challenges, we introduce two innovative solutions. Firstly, we propose a Spatial Guidance Injector (SGI), which enhances conditional detail by encoding text inputs with precise annotation information. This method directly tackles the issue of ambiguous control inputs by providing clear, annotated guidance to the model. Secondly, to overcome the issue of limited conditional supervision, we introduce Diffusion Consistency Loss (DCL), which applies supervision on the denoised latent code at any given time step. This encourages consistency between the latent code at each time step and the input signal, thereby enhancing the robustness and accuracy of the output. The combination of SGI and DCL results in our Effective Controllable Network (ECNet), which offers a more accurate controllable end-to-end text-to-image generation framework with a more precise conditioning input and stronger controllable supervision. We validate our approach through extensive experiments on generation under various conditions, such as human body skeletons, facial landmarks, and sketches of general objects. The results consistently demonstrate that our method significantly enhances the controllability and robustness of the generated images, outperforming existing state-of-the-art controllable text-to-image models.
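The idea of supervising a denoised latent at an arbitrary time step can be sketched with the standard DDPM identity x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, which lets a clean-latent estimate be recovered from a noisy latent and a predicted noise. The sketch below is an assumption about the general mechanism only, not ECNet's actual loss: `estimate_clean_latent`, `consistency_loss`, and the `target` argument are hypothetical names, and `target` stands in for whatever reference signal the loss compares against.

```python
import math


def estimate_clean_latent(x_t, eps_pred, alpha_bar_t):
    """Invert the DDPM forward process: recover an x_0 estimate from a noisy
    latent x_t and the model's noise prediction at cumulative schedule value
    alpha_bar_t (0 < alpha_bar_t <= 1)."""
    scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1 - alpha_bar_t)
    return [(x - noise_scale * e) / scale for x, e in zip(x_t, eps_pred)]


def consistency_loss(x0_hat, target):
    """Mean squared error between the denoised estimate and a reference.
    (Hypothetical stand-in for a loss applied to the denoised latent.)"""
    return sum((a - b) ** 2 for a, b in zip(x0_hat, target)) / len(target)
```

Because the estimate is available at every time step, a loss of this form can supervise the model throughout the denoising trajectory rather than only through the per-step noise-prediction error.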

Affective Behaviour Analysis via Integrating Multi-Modal Knowledge

Mar 16, 2024

Abstract: Affective Behavior Analysis aims to make technology emotionally smart, creating a world where devices can understand and react to our emotions as humans do. To comprehensively evaluate the authenticity and applicability of emotional behavior analysis techniques in natural environments, the 6th competition on Affective Behavior Analysis in-the-wild (ABAW) utilizes the Aff-Wild2, Hume-Vidmimic2, and C-EXPR-DB datasets to set up five competitive tracks, i.e., Valence-Arousal (VA) Estimation, Expression (EXPR) Recognition, Action Unit (AU) Detection, Compound Expression (CE) Recognition, and Emotional Mimicry Intensity (EMI) Estimation. In this paper, we present our method designs for the five tasks. Specifically, our design mainly includes three aspects: 1) Utilizing a transformer-based feature fusion module to fully integrate emotional information provided by audio signals, visual images, and transcripts, offering high-quality expression features for the downstream tasks. 2) To achieve high-quality facial feature representations, we employ a Masked Autoencoder as the visual feature extraction model and fine-tune it with our facial dataset. 3) Considering the complexity of the video collection scenes, we conduct a more detailed dataset division based on scene characteristics and train a classifier for each scene. Extensive experiments demonstrate the superiority of our designs.

Federated Battery Diagnosis and Prognosis

Oct 14, 2023

Abstract: Battery diagnosis, prognosis and health management models play a critical role in the integration of battery systems in energy and mobility fields. However, large-scale deployment of these models is hindered by a myriad of challenges centered around data ownership, privacy, communication, and processing. State-of-the-art battery diagnosis and prognosis methods require centralized collection of data, which further aggravates these challenges. Here we propose a federated battery prognosis model, which distributes the processing of battery standard current-voltage-time-usage data in a privacy-preserving manner. Instead of exchanging raw standard current-voltage-time-usage data, our model communicates only the model parameters, thus reducing communication load and preserving data confidentiality. The proposed model offers a paradigm shift in battery health management through privacy-preserving distributed methods for battery data processing and remaining lifetime prediction.
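The point that only model parameters leave each client can be illustrated with a minimal sketch of generic federated averaging (FedAvg). It is not the authors' model: the linear predictor `y = w * x + b`, the function names, and the single gradient step per round are assumptions chosen to keep the example small.

```python
def local_step(params, xs, ys, lr=0.01):
    """One gradient-descent step of least-squares regression y = w * x + b.

    Runs on the client; the raw (xs, ys) measurements never leave this function.
    """
    w, b = params
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return [w - lr * grad_w, b - lr * grad_b]


def federated_average(client_params, client_sizes):
    """Server side: average client parameters, weighted by local sample count."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(p[i] * n for p, n in zip(client_params, client_sizes)) / total
        for i in range(dim)
    ]
```

In each round the server broadcasts the current parameters, every client calls `local_step` on its own data, and the server combines the returned parameter lists with `federated_average`, so the communication cost depends on the model size rather than on the volume of local measurements.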

Detecting Electricity Service Equity Issues with Transfer Counterfactual Learning on Large-Scale Outage Datasets

Oct 05, 2023

Abstract: Energy justice is a growing area of interest in interdisciplinary energy research. However, identifying systematic biases in the energy sector remains challenging due to confounding variables, intricate heterogeneity in treatment effects, and limited data availability. To address these challenges, we introduce a novel approach for counterfactual causal analysis centered on energy justice. We use subgroup analysis to manage diverse factors and leverage the idea of transfer learning to mitigate data scarcity in each subgroup. In our numerical analysis, we apply our method to a large-scale customer-level power outage data set and investigate the counterfactual effect of demographic factors, such as income and age of the population, on power outage durations. Our results indicate that low-income and elderly-populated areas consistently experience longer power outages, regardless of weather conditions. This points to existing biases in the power system and highlights the need for focused improvements in areas with economic challenges.
