Shixiang Zhu

Score-Based Change-Point Detection and Region Localization for Spatio-Temporal Point Processes

Feb 04, 2026

Abstract:We study sequential change-point detection for spatio-temporal point processes, where actionable detection requires not only identifying when a distributional change occurs but also localizing where it manifests in space. While classical quickest change detection methods provide strong guarantees on detection delay and false-alarm rates, existing approaches for point-process data predominantly focus on temporal changes and do not explicitly infer affected spatial regions. We propose a likelihood-free, score-based detection framework that jointly estimates the change time and the change region in continuous space-time without assuming parametric knowledge of the pre- or post-change dynamics. The method leverages a localized and conditionally weighted Hyvärinen score to quantify event-level deviations from nominal behavior and aggregates these scores using a spatio-temporal CUSUM-type statistic over a prescribed class of spatial regions. Operating sequentially, the procedure outputs both a stopping time and an estimated change region, enabling real-time detection with spatial interpretability. We establish theoretical guarantees on false-alarm control, detection delay, and spatial localization accuracy, and demonstrate the effectiveness of the proposed approach through simulations and real-world spatio-temporal event data.

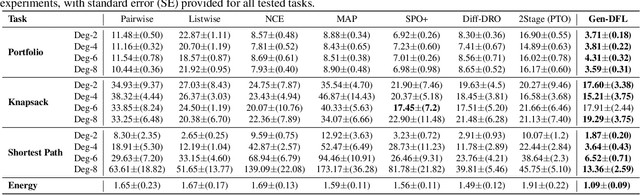

When Robustness Meets Conservativeness: Conformalized Uncertainty Calibration for Balanced Decision Making

Oct 09, 2025

Abstract:Robust optimization safeguards decisions against uncertainty by optimizing against worst-case scenarios, yet its effectiveness hinges on a prespecified robustness level that is often chosen ad hoc, leading to either insufficient protection or overly conservative and costly solutions. Recent approaches using conformal prediction construct data-driven uncertainty sets with finite-sample coverage guarantees, but they still fix coverage targets a priori and offer little guidance for selecting robustness levels. We propose a new framework that provides distribution-free, finite-sample guarantees on both miscoverage and regret for any family of robust predict-then-optimize policies. Our method constructs valid estimators that trace out the miscoverage-regret Pareto frontier, enabling decision-makers to reliably evaluate and calibrate robustness levels according to their cost-risk preferences. The framework is simple to implement, broadly applicable across classical optimization formulations, and achieves sharper finite-sample performance than existing approaches. These results offer the first principled data-driven methodology for guiding robustness selection and empower practitioners to balance robustness and conservativeness in high-stakes decision-making.

Conformalized Decision Risk Assessment

May 19, 2025

Abstract:High-stakes decisions in domains such as healthcare, energy, and public policy are often made by human experts using domain knowledge and heuristics, yet are increasingly supported by predictive and optimization-based tools. A dominant approach in operations research is the predict-then-optimize paradigm, where a predictive model estimates uncertain inputs, and an optimization model recommends a decision. However, this approach often lacks interpretability and can fail under distributional uncertainty -- particularly when the outcome distribution is multi-modal or complex -- leading to brittle or misleading decisions. In this paper, we introduce CREDO, a novel framework that quantifies, for any candidate decision, a distribution-free upper bound on the probability that the decision is suboptimal. By combining inverse optimization geometry with conformal prediction and generative modeling, CREDO produces risk certificates that are both statistically rigorous and practically interpretable. This framework enables human decision-makers to audit and validate their own decisions under uncertainty, bridging the gap between algorithmic tools and real-world judgment.

Adaptive Robust Optimization with Data-Driven Uncertainty for Enhancing Distribution System Resilience

May 16, 2025

Abstract:Extreme weather events are placing growing strain on electric power systems, exposing the limitations of purely reactive responses and prompting the need for proactive resilience planning. However, existing approaches often rely on simplified uncertainty models and decouple proactive and reactive decisions, overlooking their critical interdependence. This paper proposes a novel tri-level optimization framework that integrates proactive infrastructure investment, adversarial modeling of spatio-temporal disruptions, and adaptive reactive response. We construct high-probability, distribution-free uncertainty sets using conformal prediction to capture complex and data-scarce outage patterns. To solve the resulting nested decision problem, we derive a bi-level reformulation via strong duality and develop a scalable Benders decomposition algorithm. Experiments on both real and synthetic data demonstrate that our approach consistently outperforms conventional robust and two-stage methods, achieving lower worst-case losses and more efficient resource allocation, especially under tight operational constraints and large-scale uncertainty.

Topology-Aware Conformal Prediction for Stream Networks

Mar 06, 2025

Abstract:Stream networks, a unique class of spatiotemporal graphs, exhibit complex directional flow constraints and evolving dependencies, making uncertainty quantification a critical yet challenging task. Traditional conformal prediction methods struggle in this setting due to the need for joint predictions across multiple interdependent locations and the intricate spatio-temporal dependencies inherent in stream networks. Existing approaches either neglect dependencies, leading to overly conservative predictions, or rely solely on data-driven estimations, failing to capture the rich topological structure of the network. To address these challenges, we propose Spatio-Temporal Adaptive Conformal Inference (STACI), a novel framework that integrates network topology and temporal dynamics into the conformal prediction framework. STACI introduces a topology-aware nonconformity score that respects directional flow constraints and dynamically adjusts prediction sets to account for temporal distributional shifts. We provide theoretical guarantees on the validity of our approach and demonstrate its superior performance on both synthetic and real-world datasets. Our results show that STACI effectively balances prediction efficiency and coverage, outperforming existing conformal prediction methods for stream networks.

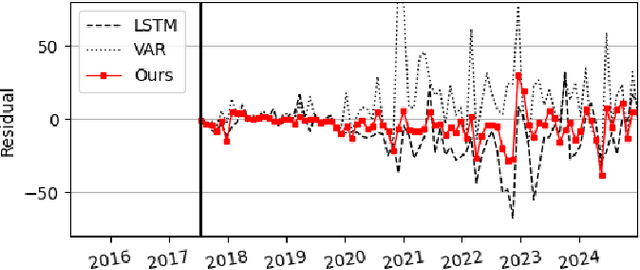

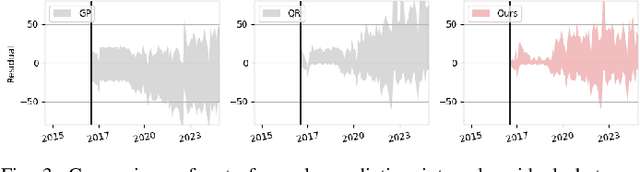

Global-Decision-Focused Neural ODEs for Proactive Grid Resilience Management

Feb 25, 2025

Abstract:Extreme hazard events such as wildfires and hurricanes increasingly threaten power systems, causing widespread outages and disrupting critical services. Recently, predict-then-optimize approaches have gained traction in grid operations, where system functionality forecasts are first generated and then used as inputs for downstream decision-making. However, this two-stage method often results in a misalignment between prediction and optimization objectives, leading to suboptimal resource allocation. To address this, we propose predict-all-then-optimize-globally (PATOG), a framework that integrates outage prediction with globally optimized interventions. At its core, our global-decision-focused (GDF) neural ODE model captures outage dynamics while optimizing resilience strategies in a decision-aware manner. Unlike conventional methods, our approach ensures spatially and temporally coherent decision-making, improving both predictive accuracy and operational efficiency. Experiments on synthetic and real-world datasets demonstrate significant improvements in outage prediction consistency and grid resilience.

Gen-DFL: Decision-Focused Generative Learning for Robust Decision Making

Feb 08, 2025

Abstract:Decision-focused learning (DFL) integrates predictive models with downstream optimization, directly training machine learning models to minimize decision errors. While DFL has been shown to provide substantial advantages when compared to a counterpart that treats the predictive and prescriptive models separately, it has also been shown to struggle in high-dimensional and risk-sensitive settings, limiting its applicability in real-world settings. To address this limitation, this paper introduces decision-focused generative learning (Gen-DFL), a novel framework that leverages generative models to adaptively model uncertainty and improve decision quality. Instead of relying on fixed uncertainty sets, Gen-DFL learns a structured representation of the optimization parameters and samples from the tail regions of the learned distribution to enhance robustness against worst-case scenarios. This approach mitigates over-conservatism while capturing complex dependencies in the parameter space. The paper shows, theoretically, that Gen-DFL achieves improved worst-case performance bounds compared to traditional DFL. Empirically, it evaluates Gen-DFL on various scheduling and logistics problems, demonstrating its strong performance against existing DFL methods.

Political-LLM: Large Language Models in Political Science

Dec 09, 2024

Abstract:In recent years, large language models (LLMs) have been widely adopted in political science tasks such as election prediction, sentiment analysis, policy impact assessment, and misinformation detection. Meanwhile, the need to systematically understand how LLMs can further revolutionize the field also becomes urgent. In this work, we--a multidisciplinary team of researchers spanning computer science and political science--present the first principled framework termed Political-LLM to advance the comprehensive understanding of integrating LLMs into computational political science. Specifically, we first introduce a fundamental taxonomy classifying the existing explorations into two perspectives: political science and computational methodologies. In particular, from the political science perspective, we highlight the role of LLMs in automating predictive and generative tasks, simulating behavior dynamics, and improving causal inference through tools like counterfactual generation; from a computational perspective, we introduce advancements in data preparation, fine-tuning, and evaluation methods for LLMs that are tailored to political contexts. We identify key challenges and future directions, emphasizing the development of domain-specific datasets, addressing issues of bias and fairness, incorporating human expertise, and redefining evaluation criteria to align with the unique requirements of computational political science. Political-LLM seeks to serve as a guidebook for researchers to foster an informed, ethical, and impactful use of Artificial Intelligence in political science. Our online resource is available at: http://political-llm.org/.

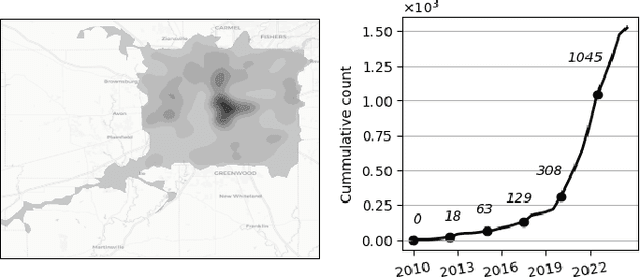

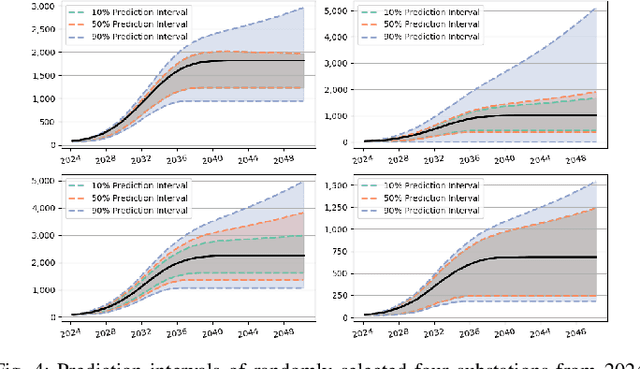

Hierarchical Spatio-Temporal Uncertainty Quantification for Distributed Energy Adoption

Nov 19, 2024

Abstract:The rapid deployment of distributed energy resources (DER) has introduced significant spatio-temporal uncertainties in power grid management, necessitating accurate multilevel forecasting methods. However, existing approaches often produce overly conservative uncertainty intervals at individual spatial units and fail to properly capture uncertainties when aggregating predictions across different spatial scales. This paper presents a novel hierarchical spatio-temporal model based on the conformal prediction framework to address these challenges. Our approach generates circuit-level DER growth predictions and efficiently aggregates them to the substation level while maintaining statistical validity through a tailored non-conformity score. Applied to a decade of DER installation data from a local utility network, our method demonstrates superior performance over existing approaches, particularly in reducing prediction interval widths while maintaining coverage.

Optimizing Probabilistic Conformal Prediction with Vectorized Non-Conformity Scores

Oct 17, 2024

Abstract:Generative models have shown significant promise in critical domains such as medical diagnosis, autonomous driving, and climate science, where reliable decision-making hinges on accurate uncertainty quantification. While probabilistic conformal prediction (PCP) offers a powerful framework for this purpose, its coverage efficiency -- the size of the uncertainty set -- is limited when dealing with complex underlying distributions and a finite number of generated samples. In this paper, we propose a novel PCP framework that enhances efficiency by first vectorizing the non-conformity scores with ranked samples and then optimizing the shape of the prediction set by varying the quantiles for samples at the same rank. Our method delivers valid coverage while producing discontinuous and more efficient prediction sets, making it particularly suited for high-stakes applications. We demonstrate the effectiveness of our approach through experiments on both synthetic and real-world datasets.
