Feng Ji

Uncertainty Principle for Vertex-Time Graph Signal Processing

Feb 03, 2026

Abstract: We present an uncertainty principle for graph signals in the vertex-time domain, unifying the classical time-frequency and graph uncertainty principles within a single framework. By defining vertex-time and spectral-frequency spreads, we quantify signal localization across these domains. Our framework identifies a class of signals that achieve maximum concentration in both the spatial and temporal domains. These signals serve as fundamental atoms for a new vertex-time dictionary, enhancing signal reconstruction under practical constraints, such as intermittent data commonly encountered in sensor and social networks. Furthermore, we introduce a novel graph topology inference method leveraging the uncertainty principle. Numerical experiments on synthetic and real datasets validate the effectiveness of our approach, demonstrating improved reconstruction accuracy, greater robustness to noise, and enhanced graph learning performance compared to existing methods.

Conformal Prediction for Multi-Source Detection on a Network

Nov 12, 2025

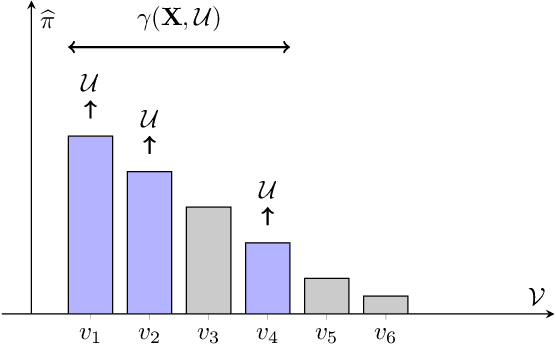

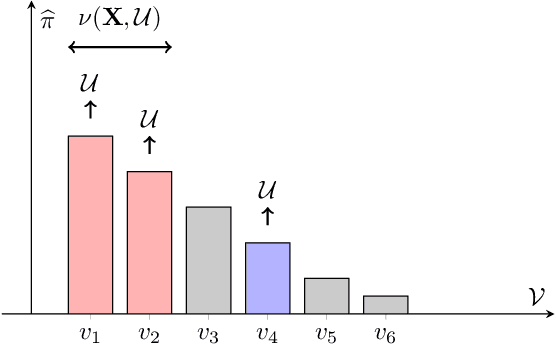

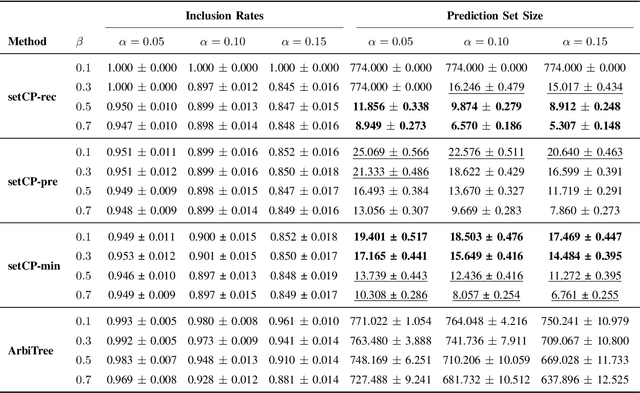

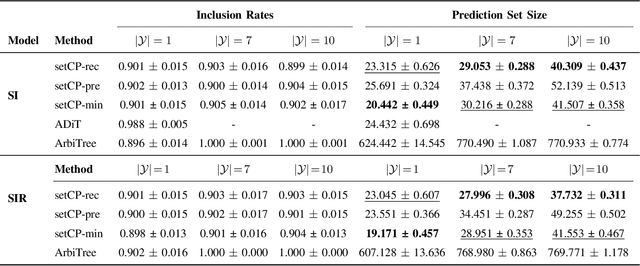

Abstract: Detecting the origin of information or infection spread in networks is a fundamental challenge with applications in misinformation tracking, epidemiology, and beyond. We study the multi-source detection problem: given snapshot observations of node infection status on a graph, estimate the set of source nodes that initiated the propagation. Existing methods either lack statistical guarantees or are limited to specific diffusion models and assumptions. We propose a novel conformal prediction framework that provides statistically valid recall guarantees for source set detection, independent of the underlying diffusion process or data distribution. Our approach introduces principled score functions to quantify the alignment between predicted probabilities and true sources, and leverages a calibration set to construct prediction sets with user-specified recall and coverage levels. The method is applicable to both single- and multi-source scenarios, supports general network diffusion dynamics, and is computationally efficient for large graphs. Empirical results demonstrate that our method achieves rigorous coverage with competitive accuracy, outperforming existing baselines in both reliability and scalability. The code is available online.
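The calibration idea in this abstract can be illustrated with a generic split-conformal sketch. This is not the paper's score function or code: the helper names (`recall_score`, `calibrate_threshold`, `prediction_set`) and the choice of score are assumptions made for illustration. Here the score of a calibration instance is the largest probability threshold that still recovers a fraction `rho` of its true sources, and the calibrated threshold is a conservative quantile of those scores.

```python
import math


def recall_score(probs, sources, rho):
    """Largest threshold t such that {v : probs[v] >= t} contains at least
    a rho fraction of the true sources (hypothetical score, for illustration)."""
    ranked = sorted((probs[v] for v in sources), reverse=True)
    k = math.ceil(rho * len(ranked))  # number of sources that must be recovered
    return ranked[k - 1]


def calibrate_threshold(cal_scores, alpha):
    """Split-conformal threshold: under exchangeability, a new instance's score
    is at least this value with probability >= 1 - alpha."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration instances for this alpha: include every node.
        return float("-inf")
    return sorted(cal_scores, reverse=True)[k - 1]


def prediction_set(probs, threshold):
    """All nodes whose predicted source probability reaches the threshold."""
    return {v for v, p in probs.items() if p >= threshold}


# Toy usage with made-up probabilities and calibration scores.
probs = {"a": 0.9, "b": 0.6, "c": 0.2, "d": 0.1}
threshold = calibrate_threshold([0.6, 0.5, 0.4, 0.3], alpha=0.5)
candidates = prediction_set(probs, threshold)
```

Lowering `alpha` or raising `rho` pushes the threshold down, so the prediction set grows; that trade-off between set size and guarantee strength is what the user-specified recall and coverage levels control.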

Modulo Video Recovery via Selective Spatiotemporal Vision Transformer

Nov 09, 2025

Abstract: Conventional image sensors have limited dynamic range, causing saturation in high-dynamic-range (HDR) scenes. Modulo cameras address this by folding incident irradiance into a bounded range, yet require specialized unwrapping algorithms to reconstruct the underlying signal. Unlike HDR recovery, which extends dynamic range from conventional sampling, modulo recovery restores actual values from folded samples. Despite being introduced over a decade ago, progress in modulo image recovery has been slow, especially in the use of modern deep learning techniques. In this work, we demonstrate that standard HDR methods are unsuitable for modulo recovery. Transformers, however, can capture global dependencies and spatial-temporal relationships crucial for resolving folded video frames. Still, adapting existing Transformer architectures for modulo recovery demands novel techniques. To this end, we present Selective Spatiotemporal Vision Transformer (SSViT), the first deep learning framework for modulo video reconstruction. SSViT employs a token selection strategy to improve efficiency and concentrate on the most critical regions. Experiments confirm that SSViT produces high-quality reconstructions from 8-bit folded videos and achieves state-of-the-art performance in modulo video recovery.
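To make the folding operation concrete, the sketch below folds a 1-D intensity sequence into an 8-bit range and recovers it with a classical difference-based unwrapping rule. This is not SSViT: the function names are hypothetical, and the recovery assumes that the first sample is below the fold period and that neighbouring samples differ by less than half the period, an assumption that real HDR video often violates.

```python
def fold(signal, bits=8):
    """Modulo-sensor model: keep only the value modulo 2**bits."""
    period = 1 << bits
    return [x % period for x in signal]


def unwrap_1d(folded, bits=8):
    """Classical unwrapping: re-center each folded difference into
    [-period/2, period/2) and accumulate. Exact only when the true
    neighbouring differences lie in that interval and the first sample
    was not folded."""
    period = 1 << bits
    half = period // 2
    out = [folded[0]]
    for prev, cur in zip(folded, folded[1:]):
        step = (cur - prev + half) % period - half
        out.append(out[-1] + step)
    return out


# Made-up irradiance values that exceed the 8-bit range.
signal = [100, 200, 300, 380, 500, 450, 350, 240]
folded = fold(signal)
recovered = unwrap_1d(folded)
```

When adjacent values jump by half the period or more, this rule picks the wrong branch and the error propagates through the rest of the sequence, which is one way to see why recovery benefits from wider spatial and temporal context.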

Adaptive Multi-view Graph Contrastive Learning via Fractional-order Neural Diffusion Networks

Nov 09, 2025

Abstract: Graph contrastive learning (GCL) learns node and graph representations by contrasting multiple views of the same graph. Existing methods typically rely on fixed, handcrafted views, usually a local and a global perspective, which limits their ability to capture multi-scale structural patterns. We present an augmentation-free, multi-view GCL framework grounded in fractional-order continuous dynamics. By varying the fractional derivative order $\alpha \in (0,1]$, our encoders produce a continuous spectrum of views: small $\alpha$ yields localized features, while large $\alpha$ induces broader, global aggregation. We treat $\alpha$ as a learnable parameter so the model can adapt diffusion scales to the data and automatically discover informative views. This principled approach generates diverse, complementary representations without manual augmentations. Extensive experiments on standard benchmarks demonstrate that our method produces more robust and expressive embeddings and outperforms state-of-the-art GCL baselines.

Omne-R1: Learning to Reason with Memory for Multi-hop Question Answering

Aug 24, 2025

Abstract: This paper introduces Omne-R1, a novel approach designed to enhance multi-hop question answering capabilities on schema-free knowledge graphs by integrating advanced reasoning models. Our method employs a multi-stage training workflow, including two reinforcement learning phases and one supervised fine-tuning phase. We address the challenge of limited suitable knowledge graphs and QA data by constructing domain-independent knowledge graphs and auto-generating QA pairs. Experimental results show significant improvements in answering multi-hop questions, with notable performance gains on more complex 3+ hop questions. Our proposed training framework demonstrates strong generalization abilities across diverse knowledge domains.

Simple Graph Contrastive Learning via Fractional-order Neural Diffusion Networks

Apr 24, 2025

Abstract: Graph Contrastive Learning (GCL) has recently made progress as an unsupervised graph representation learning paradigm. GCL approaches can be categorized into augmentation-based and augmentation-free methods. The former relies on complex data augmentations, while the latter depends on encoders that can generate distinct views of the same input. Both approaches may require negative samples for training. In this paper, we introduce a novel augmentation-free GCL framework based on graph neural diffusion models. Specifically, we utilize learnable encoders governed by Fractional Differential Equations (FDE). Each FDE is characterized by an order parameter of the differential operator. We demonstrate that varying these parameters allows us to produce learnable encoders that generate diverse views, capturing either local or global information, for contrastive learning. Our model does not require negative samples for training and is applicable to both homophilic and heterophilic datasets. We demonstrate its effectiveness across various datasets, achieving state-of-the-art performance.

Falcon: Faster and Parallel Inference of Large Language Models through Enhanced Semi-Autoregressive Drafting and Custom-Designed Decoding Tree

Dec 17, 2024

Abstract: Striking an optimal balance between minimal drafting latency and high speculation accuracy to enhance the inference speed of Large Language Models remains a significant challenge in speculative decoding. In this paper, we introduce Falcon, an innovative semi-autoregressive speculative decoding framework fashioned to augment both the drafter's parallelism and output quality. Falcon incorporates the Coupled Sequential Glancing Distillation technique, which fortifies inter-token dependencies within the same block, leading to increased speculation accuracy. We offer a comprehensive theoretical analysis to illuminate the underlying mechanisms. Additionally, we introduce a Custom-Designed Decoding Tree, which permits the drafter to generate multiple tokens in a single forward pass and accommodates multiple forward passes as needed, thereby boosting the number of drafted tokens and significantly improving the overall acceptance rate. Comprehensive evaluations on benchmark datasets such as MT-Bench, HumanEval, and GSM8K demonstrate Falcon's superior acceleration capabilities. The framework achieves a lossless speedup ratio ranging from 2.91x to 3.51x when tested on the Vicuna and LLaMA2-Chat model series. These results outstrip existing speculative decoding methods for LLMs, including Eagle, Medusa, Lookahead, SPS, and PLD, while maintaining a compact drafter architecture equivalent to merely two Transformer layers.
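For readers unfamiliar with why speculative decoding can be lossless, the following minimal sketch shows the generic greedy verification step: the target model checks a block of drafted tokens and keeps the longest prefix it agrees with, plus one token of its own. It is not Falcon's drafter, distillation technique, or decoding tree; `verify_greedy` and its inputs are hypothetical, and the target model's outputs are passed in as a plain list instead of being computed.

```python
def verify_greedy(draft_tokens, target_tokens):
    """Generic greedy verification for speculative decoding.

    draft_tokens:  tokens proposed by the drafter for the next positions.
    target_tokens: the target model's greedy choice at each of those positions,
                   plus one extra choice for the position after the last draft
                   token (so len(target_tokens) == len(draft_tokens) + 1).
    Returns the tokens appended in this step; the output is identical to what
    plain greedy decoding with the target model would produce.
    """
    accepted = []
    for drafted, wanted in zip(draft_tokens, target_tokens):
        if drafted != wanted:
            # First disagreement: take the target's token and stop.
            accepted.append(wanted)
            return accepted
        accepted.append(drafted)
    # Every drafted token matched, so the extra target token comes for free.
    accepted.append(target_tokens[len(draft_tokens)])
    return accepted


# Made-up token ids: the third drafted token is rejected.
step = verify_greedy([5, 7, 9], [5, 7, 8, 3])
```

Each verification step costs one target forward pass and yields between one and `len(draft_tokens) + 1` tokens, so the speedup depends on how many drafted tokens are accepted per step.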

Distributed-Order Fractional Graph Operating Network

Nov 08, 2024

Abstract: We introduce the Distributed-order fRActional Graph Operating Network (DRAGON), a novel continuous Graph Neural Network (GNN) framework that incorporates distributed-order fractional calculus. Unlike traditional continuous GNNs that utilize integer-order or single fractional-order differential equations, DRAGON uses a learnable probability distribution over a range of real numbers for the derivative orders. By allowing a flexible and learnable superposition of multiple derivative orders, our framework captures complex graph feature updating dynamics beyond the reach of conventional models. We provide a comprehensive interpretation of our framework's capability to capture intricate dynamics through the lens of a non-Markovian graph random walk with node feature updating driven by an anomalous diffusion process over the graph. Furthermore, to highlight the versatility of the DRAGON framework, we conduct empirical evaluations across a range of graph learning tasks. The results consistently demonstrate superior performance when compared to traditional continuous GNN models. The implementation code is available at https://github.com/zknus/NeurIPS-2024-DRAGON.

Edge centrality and the total variation of graph distributional signals

Nov 01, 2024

Abstract: This short note is a supplement to [1], in which the total variation of graph distributional signals is introduced and studied. We introduce a different formulation of total variation and relate it to the notion of edge centrality. The relation provides a different perspective of total variation and may facilitate its computation.

Control the GNN: Utilizing Neural Controller with Lyapunov Stability for Test-Time Feature Reconstruction

Oct 13, 2024

Abstract: The performance of graph neural networks (GNNs) is susceptible to discrepancies between training and testing sample distributions. Prior studies have attempted to enhance GNN performance by reconstructing node features during the testing phase without modifying the model parameters. However, these approaches lack theoretical analysis of the proximity between predictions and ground truth at test time. In this paper, we propose a novel node feature reconstruction method grounded in Lyapunov stability theory. Specifically, we model the GNN as a control system during the testing phase, considering node features as control variables. A neural controller that adheres to the Lyapunov stability criterion is then employed to reconstruct these node features, ensuring that the predictions progressively approach the ground truth at test time. We validate the effectiveness of our approach through extensive experiments across multiple datasets, demonstrating significant performance improvements.
