Qinji Shu

Subset Random Sampling of Finite Time-vertex Graph Signals

Oct 30, 2024

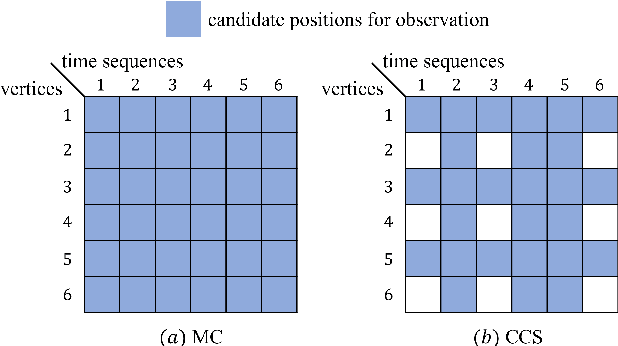

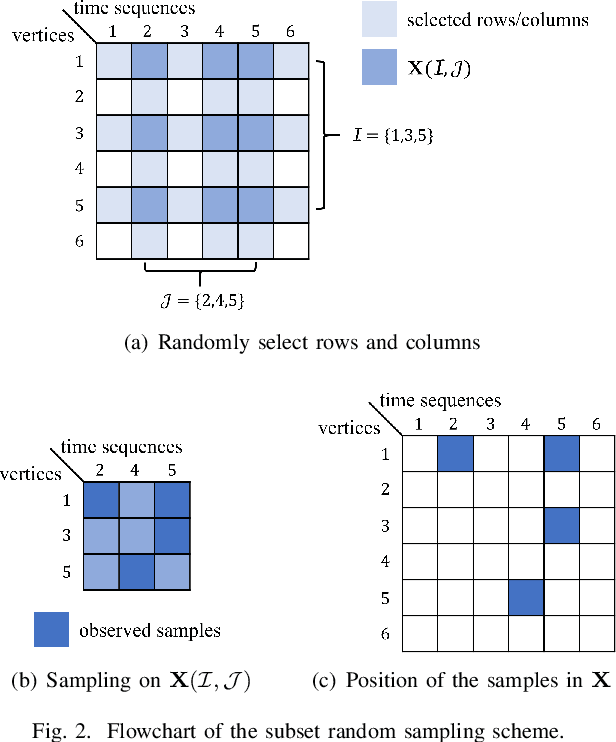

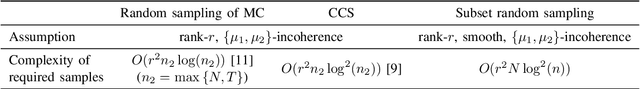

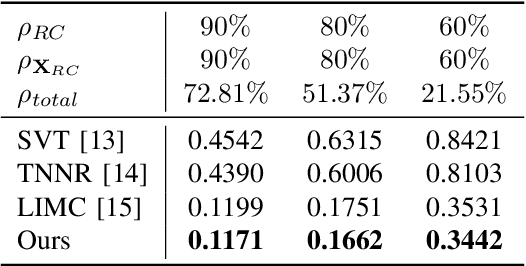

Abstract: Time-varying data with irregular structures can be described by finite time-vertex graph signals (FTVGS), which represent potential temporal and spatial relationships among multiple sources. While sampling and the corresponding reconstruction of FTVGS with known spectral support are well investigated, methods for the case of unknown spectral support remain underdeveloped. Existing random sampling schemes may acquire samples from any vertex at any time. This is uncommon in practical applications, where sampling typically involves only a subset of vertices and time instants. In view of this requirement, this paper proposes a subset random sampling scheme for FTVGS. We first randomly select some rows and columns of the FTVGS to form a submatrix, and then randomly sample within that submatrix. On the theoretical side, we derive sufficient conditions under which the original FTVGS is reconstructed with high probability. Experiments further confirm that the original FTVGS can be reconstructed in practice.
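The two-stage scheme described above can be sketched in Python as follows. This is a minimal illustration, not the paper's implementation: it assumes the FTVGS is stored as an N × T NumPy array (rows are vertices, columns are time instants), that rows and columns are drawn uniformly without replacement, and that the second stage keeps each submatrix entry independently with probability `p`. The function name `subset_random_sample` and these distributional choices are hypothetical, and the reconstruction step is not shown.

```python
import numpy as np

def subset_random_sample(X, n_rows, n_cols, p, seed=None):
    """Two-stage subset random sampling of an N x T time-vertex signal X (sketch).

    Stage 1: draw n_rows vertices and n_cols time instants uniformly at random,
             without replacement; together they index a submatrix of X.
    Stage 2: observe each entry of that submatrix independently with probability p.
    Entries outside the submatrix are never observed.
    """
    rng = np.random.default_rng(seed)
    N, T = X.shape
    rows = np.sort(rng.choice(N, size=n_rows, replace=False))  # sampled vertices
    cols = np.sort(rng.choice(T, size=n_cols, replace=False))  # sampled time instants

    mask = np.zeros((N, T), dtype=bool)
    # Bernoulli(p) sampling restricted to the selected submatrix
    mask[np.ix_(rows, cols)] = rng.random((n_rows, n_cols)) < p
    observed = np.where(mask, X, 0.0)  # unobserved entries are zero-filled
    return observed, mask, rows, cols


# Example: 20 vertices, 50 time instants
X = np.random.default_rng(0).standard_normal((20, 50))
observed, mask, rows, cols = subset_random_sample(X, n_rows=8, n_cols=25, p=0.5, seed=1)
print(mask.sum(), "of", X.size, "entries observed")
```

Returning the boolean mask alongside the zero-filled array keeps genuinely zero observations distinguishable from unobserved entries, which any reconstruction step would need.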

The Transferability of Downsamped Sparse Graph Convolutional Networks

Sep 08, 2024

Abstract: To accelerate the training of graph convolutional networks (GCNs) on real-world large-scale sparse graphs, downsampling methods are commonly employed as a preprocessing step. However, the effects of graph sparsity and topological structure on the transferability of downsampling methods have not been rigorously analyzed or theoretically guaranteed, particularly when the topological structure is affected by graph sparsity. In this paper, we introduce a novel downsampling method based on a sparse random graph model and derive an upper bound on the expected transfer error. Our findings show that smaller original graph sizes, higher expected average degrees, and higher sampling rates all reduce this upper bound. Experimental results validate the theoretical predictions. By incorporating both sparsity and topological similarity into the model, this study establishes an upper bound on the transfer error of downsampling when training GCNs on large-scale sparse graphs, and provides insight into how topological structure influences transfer performance.
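The sketch below illustrates, under clearly stated assumptions, what reusing GCN weights across a downsampled sparse graph looks like in practice. It assumes a graphon-style sparse random graph in which edge (i, j) appears with probability ρ_n W(x_i, x_j), with ρ_n = n^(-α) and a hypothetical graphon W. It downsamples by keeping a uniform random subset of nodes and their induced subgraph, and it applies one set of layer weights (random here, standing in for trained ones) to both graphs. The helpers `graphon`, `sparse_random_graph`, `gcn_layer` and `transfer_gap` are illustrative names, and the discrepancy they report is a simple proxy, not the transfer error bounded in the paper.

```python
import numpy as np

def graphon(x, y):
    # Hypothetical smooth graphon W(x, y) with values in (0, 0.8]; chosen for illustration only
    return 0.8 * np.exp(-3.0 * np.abs(x[:, None] - y[None, :]))

def sparse_random_graph(x, rho, rng):
    """Symmetric 0/1 adjacency with P(A_ij = 1) = rho * W(x_i, x_j) for i != j."""
    n = len(x)
    P = rho * graphon(x, x)
    upper = np.triu(rng.random((n, n)) < P, k=1)  # sample each unordered pair once
    return (upper | upper.T).astype(float)         # symmetrize; diagonal stays zero

def gcn_layer(A, H, Wt):
    """One GCN layer: ReLU(D^{-1/2} (A + I) D^{-1/2} H Wt)."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))  # degrees >= 1 thanks to self-loops
    S = d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]
    return np.maximum(S @ H @ Wt, 0.0)

def transfer_gap(n=600, alpha=0.3, rate=0.25, seed=0):
    """Apply the same layer weights to a graph and to a node-downsampled subgraph,
    then return the mean absolute output difference on the retained nodes."""
    rng = np.random.default_rng(seed)
    x = rng.random(n)                                  # latent node positions in [0, 1)
    A = sparse_random_graph(x, rho=n ** (-alpha), rng=rng)
    H = np.stack([np.cos(2 * np.pi * x), np.sin(2 * np.pi * x)], axis=1)  # node features
    Wt = rng.standard_normal((2, 4))                   # stand-in for trained weights

    m = int(rate * n)
    idx = np.sort(rng.choice(n, size=m, replace=False))  # uniform node downsampling
    A_small = A[np.ix_(idx, idx)]                        # induced subgraph on sampled nodes

    out_full = gcn_layer(A, H, Wt)[idx]
    out_small = gcn_layer(A_small, H[idx], Wt)
    return float(np.mean(np.abs(out_full - out_small)))


for rate in (0.1, 0.25, 0.5):
    print(f"sampling rate {rate}: output gap {transfer_gap(rate=rate):.4f}")
```

Varying `n`, `alpha` (which controls the expected average degree) and `rate` in this toy setup is one way to probe the qualitative trends the abstract describes, though it makes no claim to reproduce the paper's bound.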

The Transferability of Downsampling Sparse Graph Convolutional Networks

Aug 30, 2024

Abstract: In this paper, we propose a large-scale sparse graph downsampling method based on a sparse random graph model that allows the sparsity level to be adjusted. We combine sparsity and topological similarity: the sparse graph model reduces the node connection probability as the graph size increases, while the downsampling method preserves a specific topological connection pattern during this change. Based on this downsampling method, we derive a theoretical transferability bound for downsampled sparse graph convolutional networks (GCNs), showing that higher sampling rates, larger expected average degrees, and smaller initial graph sizes lead to better downsampling transferability.
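To make the effect of a connection probability that shrinks with graph size concrete, the worked equations below assume a common sparse graphon parameterization: latent positions $x_i$ drawn uniformly from $[0,1]$, edges appearing independently with probability $\rho_n W(x_i, x_j)$, and $\rho_n = n^{-\alpha}$. This is an assumption for illustration; the paper's exact model may differ. The exponent $\alpha$ tunes sparsity, from dense ($\alpha = 0$) to bounded expected degree ($\alpha = 1$), and an induced subgraph on a uniformly sampled fraction $r$ of the nodes keeps the same pattern $W$ while scaling the expected degree by roughly $r$.

```latex
\Pr(A_{ij} = 1 \mid x_i, x_j) = \rho_n \, W(x_i, x_j), \qquad \rho_n = n^{-\alpha}, \quad 0 \le \alpha \le 1

\mathbb{E}[d_i] = \sum_{j \ne i} \rho_n \, \mathbb{E}\big[W(x_i, x_j)\big]
               = (n - 1)\, n^{-\alpha} \, \bar{W},
\qquad \bar{W} = \int_0^1 \!\! \int_0^1 W(x, y) \, dx \, dy

\mathbb{E}[d_i] \sim \bar{W} \, n^{1 - \alpha} \quad (n \to \infty)

% induced subgraph on m = \lceil r n \rceil uniformly sampled nodes (same \rho_n, same W)
\mathbb{E}[d_i^{\mathrm{sub}}] = (m - 1)\, n^{-\alpha} \, \bar{W} \approx r \, \bar{W} \, n^{1 - \alpha}
```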
