Edwin Simpson

Self-Supervised Animal Identification for Long Videos

Jan 14, 2026

Abstract:Identifying individual animals in long-duration videos is essential for behavioral ecology, wildlife monitoring, and livestock management. Traditional methods require extensive manual annotation, while existing self-supervised approaches are computationally demanding and ill-suited for long sequences due to memory constraints and temporal error propagation. We introduce a highly efficient, self-supervised method that reframes animal identification as a global clustering task rather than a sequential tracking problem. Our approach assumes a known, fixed number of individuals within a single video (a common scenario in practice) and requires only bounding box detections and the total count. By sampling pairs of frames, using a frozen pre-trained backbone, and employing a self-bootstrapping mechanism with the Hungarian algorithm for in-batch pseudo-label assignment, our method learns discriminative features without identity labels. We adapt a Binary Cross Entropy loss from vision-language models, enabling state-of-the-art accuracy (>97%) while consuming less than 1 GB of GPU memory per batch, an order of magnitude less than standard contrastive methods. Evaluated on challenging real-world datasets (3D-POP pigeons and 8-calves feeding videos), our framework matches or surpasses supervised baselines trained on over 1,000 labeled frames, effectively removing the manual annotation bottleneck. This work enables practical, high-accuracy animal identification on consumer-grade hardware, with broad applicability in resource-constrained research settings. All code written for this paper is available at https://huggingface.co/datasets/tonyFang04/8-calves.
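
The in-batch pseudo-label step mentioned in the abstract is a one-to-one assignment between detections and identity slots. The sketch below only illustrates that assignment problem and is not the paper's implementation: it brute-forces all permutations, which is feasible for small counts, rather than running the Hungarian algorithm. The function name and the score matrix are hypothetical.

```python
from itertools import permutations


def assign_pseudo_labels(scores):
    """Return the identity slot assigned to each detection.

    scores[i][j] is a hypothetical similarity between detection i and
    identity slot j. The matrix is assumed square because the number of
    individuals is known and fixed.
    """
    n = len(scores)
    best_perm, best_total = None, float("-inf")
    # Exhaustive search over one-to-one assignments (illustration only;
    # the Hungarian algorithm finds the same optimum in polynomial time).
    for perm in permutations(range(n)):
        total = sum(scores[i][perm[i]] for i in range(n))
        if total > best_total:
            best_perm, best_total = perm, total
    return list(best_perm)


# Made-up scores: detection 1 scores highest on slot 0, but slot 0 is a
# better fit for detection 0, so the optimal assignment gives it slot 1.
scores = [
    [0.9, 0.1, 0.2],
    [0.8, 0.7, 0.1],
    [0.2, 0.3, 0.6],
]
labels = assign_pseudo_labels(scores)
```

The point of the one-to-one constraint is that each identity is used exactly once per frame, which a per-detection argmax would not guarantee.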

Clinically-aligned Multi-modal Chest X-ray Classification

Nov 12, 2025

Abstract:Radiology is essential to modern healthcare, yet rising demand and staffing shortages continue to pose major challenges. Recent advances in artificial intelligence have the potential to support radiologists and help address these challenges. Given its widespread use and clinical importance, chest X-ray classification is well suited to augment radiologists' workflows. However, most existing approaches rely solely on single-view, image-level inputs, ignoring the structured clinical information and multi-image studies available at the time of reporting. In this work, we introduce CaMCheX, a multimodal transformer-based framework that aligns multi-view chest X-ray studies with structured clinical data to better reflect how clinicians make diagnostic decisions. Our architecture employs view-specific ConvNeXt encoders for frontal and lateral chest radiographs, whose features are fused with clinical indications, history, and vital signs using a transformer fusion module. This design enables the model to generate context-aware representations that mirror reasoning in clinical practice. Our results exceed the state of the art for both the original MIMIC-CXR dataset and the more recent CXR-LT benchmarks, highlighting the value of clinically grounded multimodal alignment for advancing chest X-ray classification.

How well can LLMs Grade Essays in Arabic?

Jan 27, 2025

Abstract:This research assesses the effectiveness of state-of-the-art large language models (LLMs), including ChatGPT, Llama, Aya, Jais, and ACEGPT, in the task of Arabic automated essay scoring (AES) using the AR-AES dataset. It explores various evaluation methodologies, including zero-shot, few-shot in-context learning, and fine-tuning, and examines the influence of instruction-following capabilities through the inclusion of marking guidelines within the prompts. A mixed-language prompting strategy, integrating English prompts with Arabic content, was implemented to improve model comprehension and performance. Among the models tested, ACEGPT demonstrated the strongest performance across the dataset, achieving a Quadratic Weighted Kappa (QWK) of 0.67, but was outperformed by a smaller BERT-based model with a QWK of 0.88. The study identifies challenges faced by LLMs in processing Arabic, including tokenization complexities and higher computational demands. Performance variation across different courses underscores the need for adaptive models capable of handling diverse assessment formats and highlights the positive impact of effective prompt engineering on improving LLM outputs. To the best of our knowledge, this study is the first to empirically evaluate the performance of multiple generative LLMs on Arabic essays using authentic student data.
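
For readers unfamiliar with the metric reported above, the following is a minimal sketch of Quadratic Weighted Kappa using its standard definition. It is not taken from the paper; the function name, the score range, and the example scores are assumptions made for illustration.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score, max_score):
    """Agreement between two lists of integer scores, penalising large gaps more.

    Assumes at least two score categories (max_score > min_score).
    """
    n = max_score - min_score + 1
    total = len(rater_a)

    # Observed confusion matrix between the two raters.
    observed = [[0] * n for _ in range(n)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_score][b - min_score] += 1

    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n)) for j in range(n)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2
            # Expected count if the two raters scored independently.
            expected = hist_a[i] * hist_b[j] / total
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0:
        # Both raters used a single identical category; treat as full agreement.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Made-up scores on a 1-5 scale.
human = [1, 2, 3, 4, 5]
perfect = quadratic_weighted_kappa(human, human, 1, 5)
partial = quadratic_weighted_kappa(human, [1, 2, 3, 4, 4], 1, 5)
```

A value of 1 means the two raters agree exactly, and values near 0 mean agreement is no better than chance.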

Out-of-Distribution Detection with Attention Head Masking for Multimodal Document Classification

Aug 20, 2024

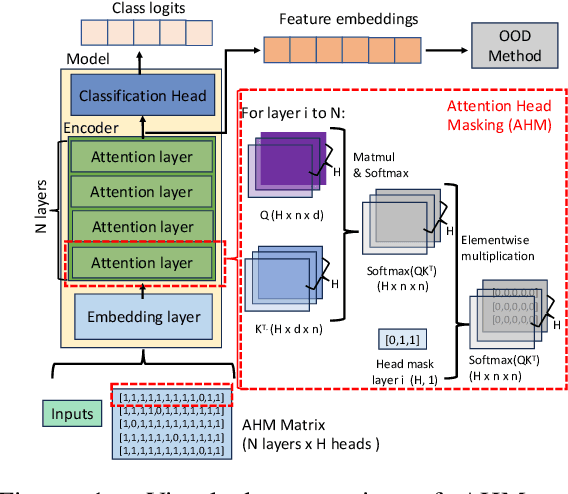

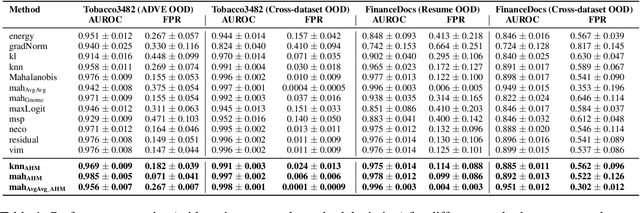

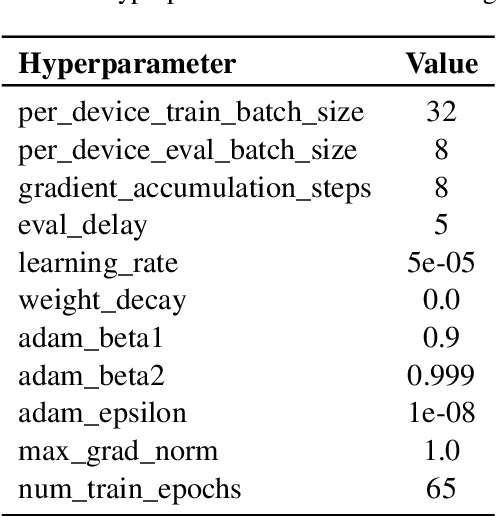

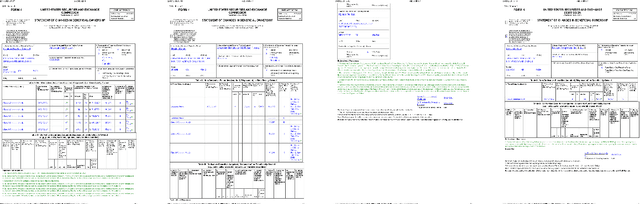

Abstract:Detecting out-of-distribution (OOD) data is crucial in machine learning applications to mitigate the risk of model overconfidence, thereby enhancing the reliability and safety of deployed systems. Most existing OOD detection methods address uni-modal inputs, such as images or texts. In the context of multi-modal documents, there is a notable lack of extensive research on the performance of these methods, which have primarily been developed with a focus on computer vision tasks. We propose a novel methodology termed attention head masking (AHM) for multi-modal OOD tasks in document classification systems. Our empirical results demonstrate that the proposed AHM method outperforms all state-of-the-art approaches and decreases the false positive rate (FPR) by up to 7.5% compared to existing solutions. This methodology generalizes well to multi-modal data, such as documents, where visual and textual information are modeled under the same Transformer architecture. To address the scarcity of high-quality publicly available document datasets and encourage further research on OOD detection for documents, we introduce FinanceDocs, a new document AI dataset. Our code and dataset are publicly available.

Automated essay scoring in Arabic: a dataset and analysis of a BERT-based system

Jul 15, 2024

Abstract:Automated Essay Scoring (AES) holds significant promise in the field of education, helping educators to mark larger volumes of essays and provide timely feedback. However, Arabic AES research has been limited by the lack of publicly available essay data. This study introduces AR-AES, an Arabic AES benchmark dataset comprising 2046 undergraduate essays, including gender information, scores, and transparent rubric-based evaluation guidelines, providing comprehensive insights into the scoring process. These essays come from four diverse courses, covering both traditional and online exams. Additionally, we pioneer the use of AraBERT for AES, exploring its performance on different question types. We find encouraging results, particularly for Environmental Chemistry and source-dependent essay questions. For the first time, we examine the scale of errors made by a BERT-based AES system, observing that 96.15 percent of the errors are within one point of the first human marker's prediction, on a scale of one to five, with 79.49 percent of predictions matching exactly. In contrast, additional human markers did not exceed 30 percent exact matches with the first marker, with 62.9 percent within one mark. These findings highlight the subjectivity inherent in essay grading, and underscore the potential for current AES technology to assist human markers to grade consistently across large classes.
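
The exact-match and within-one-point figures quoted above are simple agreement rates. A minimal sketch of how such rates can be computed follows; the function name and the scores are made up for illustration and are not data from the paper.

```python
def agreement_rates(predicted, reference):
    """Return (exact_match_rate, within_one_point_rate) for integer scores."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("score lists must be non-empty and of equal length")
    diffs = [abs(p - r) for p, r in zip(predicted, reference)]
    exact = sum(d == 0 for d in diffs) / len(diffs)
    within_one = sum(d <= 1 for d in diffs) / len(diffs)
    return exact, within_one


# Hypothetical scores on a one-to-five scale.
system_scores = [3, 4, 2, 5, 1]
first_marker = [3, 5, 2, 3, 1]
exact, within_one = agreement_rates(system_scores, first_marker)
```

With these toy scores, three of five predictions match exactly and four of five fall within one point.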

Automated Radiology Report Generation: A Review of Recent Advances

May 17, 2024

Abstract:Increasing demands on medical imaging departments are taking a toll on the radiologist's ability to deliver timely and accurate reports. Recent technological advances in artificial intelligence have demonstrated great potential for automatic radiology report generation (ARRG), sparking an explosion of research. This survey paper conducts a methodological review of contemporary ARRG approaches by way of (i) assessing datasets based on characteristics, such as availability, size, and adoption rate, (ii) examining deep learning training methods, such as contrastive learning and reinforcement learning, (iii) exploring state-of-the-art model architectures, including variations of CNN and transformer models, (iv) outlining techniques integrating clinical knowledge through multimodal inputs and knowledge graphs, and (v) scrutinising current model evaluation techniques, including commonly applied NLP metrics and qualitative clinical reviews. Furthermore, the quantitative results of the reviewed models are analysed, where the top performing models are examined to seek further insights. Finally, potential new directions are highlighted, with the adoption of additional datasets from other radiological modalities and improved evaluation methods predicted as important areas of future development.

Interactively Learning to Summarise Timelines by Reinforcement Learning

Nov 14, 2022

Abstract:Timeline summarisation (TLS) aims to create a time-ordered summary list concisely describing a series of events with corresponding dates. This differs from general summarisation tasks because it requires the method to capture temporal information besides the main idea of the input documents. This paper proposes a TLS system which can interactively learn from the user's feedback via reinforcement learning and generate timelines satisfying the user's interests. We define a compound reward function that can update automatically according to the received feedback through interaction with the user. The system utilises the reward function to fine-tune an abstractive summarisation model via reinforcement learning to guarantee topical coherence, factual consistency and linguistic fluency of the generated summaries. The proposed system avoids the need for preference feedback from individual users. The experiments show that our system outperforms the baseline on the benchmark TLS dataset and can generate accurate and precise timelines that better satisfy real users.

Ranking Scientific Papers Using Preference Learning

Sep 02, 2021

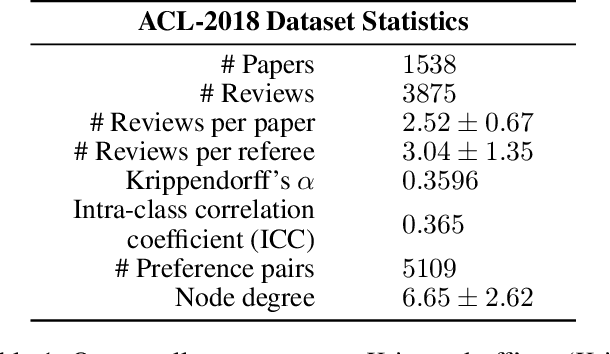

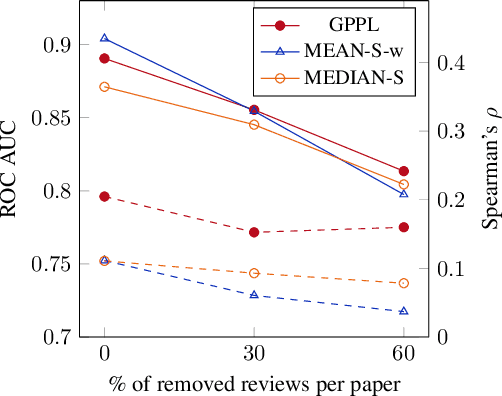

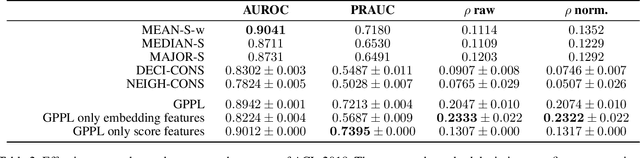

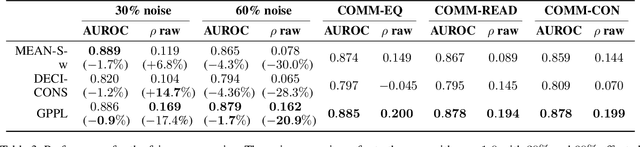

Abstract:Peer review is the main quality control mechanism in academia. Quality of scientific work has many dimensions; coupled with the subjective nature of the reviewing task, this makes final decision making based on the reviews and scores therein very difficult and time-consuming. To assist with this important task, we cast it as a paper ranking problem based on peer review texts and reviewer scores. We introduce a novel, multi-faceted generic evaluation framework for making final decisions based on peer reviews that takes into account effectiveness, efficiency and fairness of the evaluated system. We propose a novel approach to paper ranking based on Gaussian Process Preference Learning (GPPL) and evaluate it on peer review data from the ACL-2018 conference. Our experiments demonstrate the superiority of our GPPL-based approach over prior work, while highlighting the importance of using both texts and review scores for paper ranking during peer review aggregation.

Ranking Creative Language Characteristics in Small Data Scenarios

Oct 23, 2020

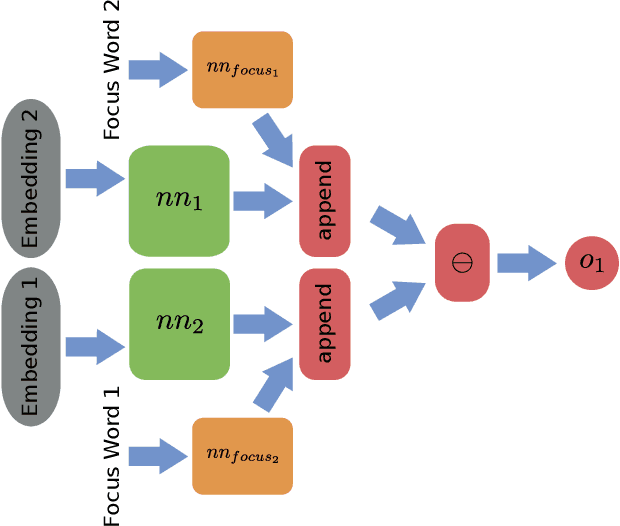

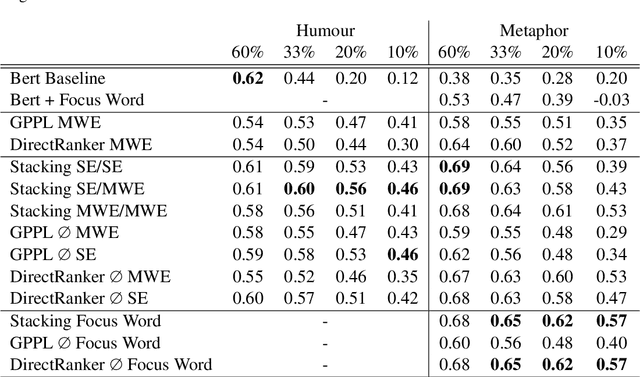

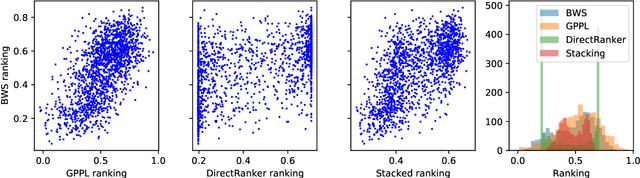

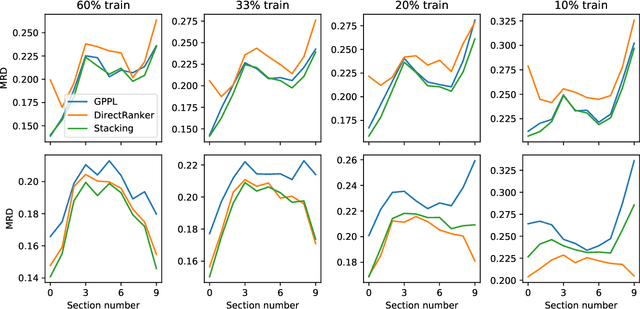

Abstract:The ability to rank creative natural language provides an important general tool for downstream language understanding and generation. However, current deep ranking models require substantial amounts of labeled data that are difficult and expensive to obtain for different domains, languages and creative characteristics. A recent neural approach, the DirectRanker, promises to reduce the amount of training data needed, but its application to text has not been fully explored. We therefore adapt the DirectRanker to provide a new deep model for ranking creative language with small data. We compare DirectRanker with a Bayesian approach, Gaussian process preference learning (GPPL), which has previously been shown to work well with sparse data. Our experiments with sparse training data show that while the performance of standard neural ranking approaches collapses with small training datasets, DirectRanker remains effective. We find that combining DirectRanker with GPPL increases performance across different settings by leveraging the complementary benefits of both models. Our combined approach outperforms the previous state-of-the-art on humor and metaphor novelty tasks, increasing Spearman's ρ by 14% and 16% on average, respectively.
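
Spearman's ρ, the metric reported above, is the Pearson correlation between the ranks of two score lists. The sketch below shows the standard computation with tied values given their average rank. It is not code from the paper; the function names and example values are assumptions, and it expects each list to contain at least two distinct values.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def spearman_rho(x, y):
    """Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical model scores and gold ratings with the same ordering.
model_scores = [0.1, 0.4, 0.35, 0.8]
gold_ratings = [1, 3, 2, 4]
rho = spearman_rho(model_scores, gold_ratings)
```

Because only the ordering matters, a model can score well on ρ even when its raw outputs are on a different scale from the gold ratings.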

OFAI-UKP at HAHA@IberLEF2019: Predicting the Humorousness of Tweets Using Gaussian Process Preference Learning

Aug 03, 2020

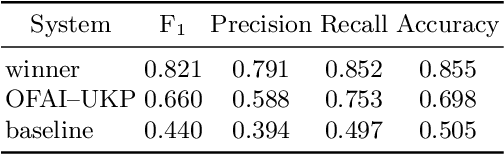

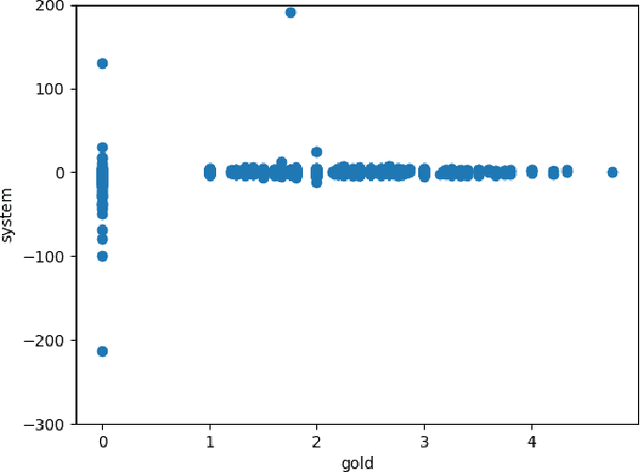

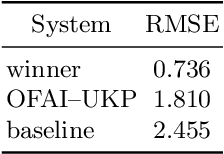

Abstract:Most humour processing systems to date make at best discrete, coarse-grained distinctions between the comical and the conventional, yet such notions are better conceptualized as a broad spectrum. In this paper, we present a probabilistic approach, a variant of Gaussian process preference learning (GPPL), that learns to rank and rate the humorousness of short texts by exploiting human preference judgments and automatically sourced linguistic annotations. We apply our system, which had previously shown good performance on English-language one-liners annotated with pairwise humorousness annotations, to the Spanish-language data set of the HAHA@IberLEF2019 evaluation campaign. We report system performance for the campaign's two subtasks, humour detection and funniness score prediction, and discuss some issues arising from the conversion between the numeric scores used in the HAHA@IberLEF2019 data and the pairwise judgment annotations required for our method.

* 11 pages, 1 figure
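
The abstract above mentions converting numeric funniness scores into the pairwise judgments that preference learning requires, but does not say how this was done. The sketch below shows one naive option purely as an illustration: every pair of items with different scores yields one preference, and ties are skipped. The function name and scores are hypothetical.

```python
from itertools import combinations


def scores_to_pairwise(scores):
    """Return (preferred_index, other_index) pairs; tied items yield no pair."""
    pairs = []
    for i, j in combinations(range(len(scores)), 2):
        if scores[i] > scores[j]:
            pairs.append((i, j))
        elif scores[j] > scores[i]:
            pairs.append((j, i))
    return pairs


# Made-up funniness scores for four short texts.
funniness = [2.4, 0.8, 2.4, 4.0]
preferences = scores_to_pairwise(funniness)
```

Note that this produces a number of pairs that grows quadratically with the number of items, so a real pipeline would likely subsample.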
