Dawei Chen

GreenAuto: An Automated Platform for Sustainable AI Model Design on Edge Devices

Jan 25, 2025

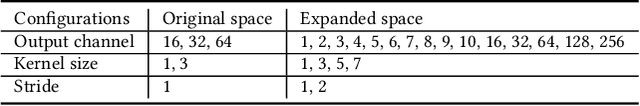

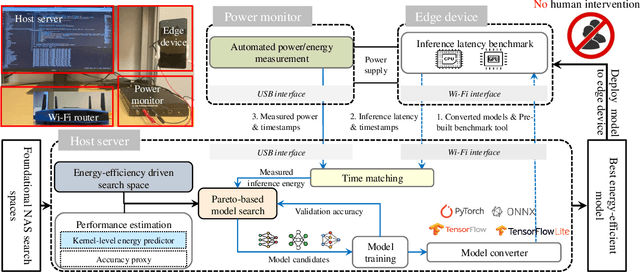

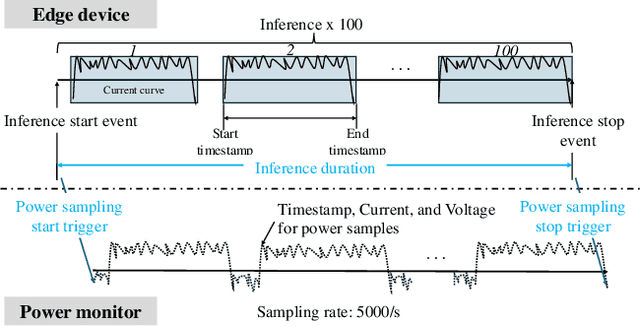

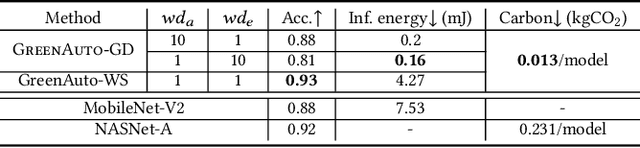

Abstract: We present GreenAuto, an end-to-end automated platform designed for sustainable AI model exploration, generation, deployment, and evaluation. GreenAuto employs a Pareto front-based search method within an expanded neural architecture search (NAS) space, guided by gradient descent to optimize model exploration. Pre-trained kernel-level energy predictors estimate energy consumption across all models, providing a global view that directs the search toward more sustainable solutions. By automating performance measurements and iteratively refining the search process, GreenAuto efficiently identifies sustainable AI models without human intervention.
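To make the idea of a Pareto front-based search concrete, the following is a minimal sketch of Pareto filtering over two objectives, accuracy (higher is better) and predicted energy (lower is better). It is not GreenAuto's implementation: the candidate names, the numbers, and the helper functions `dominates` and `pareto_front` are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# Hypothetical candidate record: (name, accuracy, predicted energy in mJ).
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """Return True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a[1] >= b[1] and a[2] <= b[2]
    strictly_better = a[1] > b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


# Illustrative values only, not measurements from the paper.
candidates = [
    ("A", 0.90, 120.0),
    ("B", 0.88, 80.0),
    ("C", 0.85, 95.0),  # dominated by B: lower accuracy and higher energy
    ("D", 0.92, 150.0),
]

front = pareto_front(candidates)
print([c[0] for c in front])
```

A search loop would typically re-evaluate the front after each batch of new candidates and sample the next architectures near the non-dominated ones.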

Unveiling Energy Efficiency in Deep Learning: Measurement, Prediction, and Scoring across Edge Devices

Oct 19, 2023

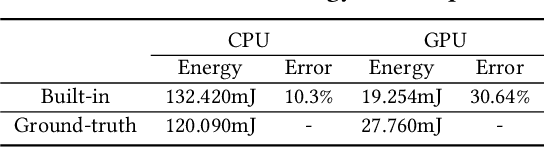

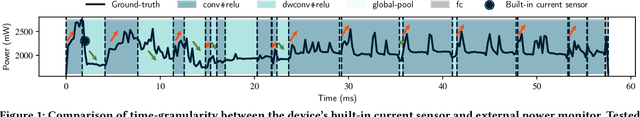

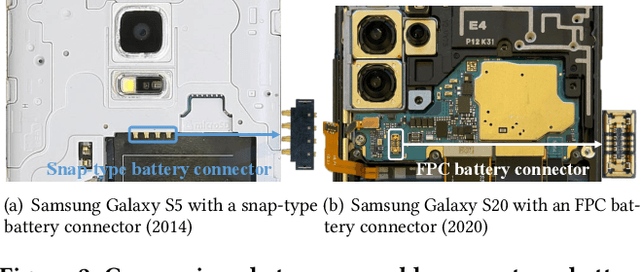

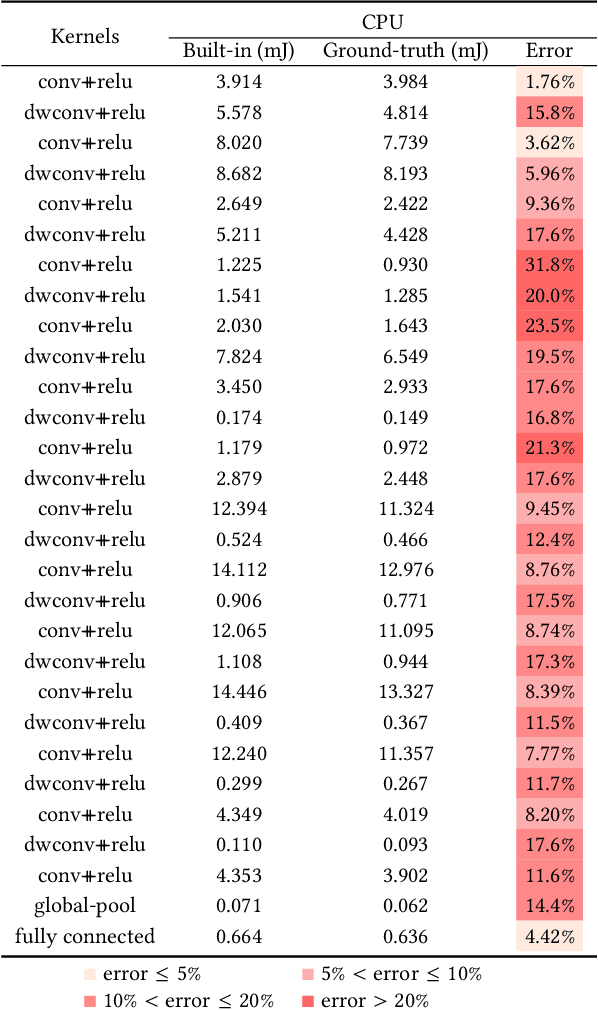

Abstract: Today, deep learning optimization is primarily driven by research focused on achieving high inference accuracy and reducing latency. However, energy efficiency is often overlooked, possibly due to the lack of a sustainability mindset in the field and the absence of a holistic energy dataset. In this paper, we conduct a threefold study, including energy measurement, prediction, and efficiency scoring, with the objective of fostering transparency in power and energy consumption within deep learning across various edge devices. Firstly, we present a detailed, first-of-its-kind measurement study that uncovers the energy consumption characteristics of on-device deep learning. This study results in the creation of three extensive energy datasets for edge devices, covering a wide range of kernels, state-of-the-art DNN models, and popular AI applications. Secondly, we design and implement the first kernel-level energy predictors for edge devices based on our kernel-level energy dataset. Evaluation results demonstrate the ability of our predictors to provide consistent and accurate energy estimations on unseen DNN models. Lastly, we introduce two scoring metrics, PCS and IECS, developed to convert complex power and energy consumption data of an edge device into an easily understandable form for edge device end-users. We hope our work can help shift the mindset of both end-users and the research community towards sustainability in edge computing, a principle that drives our research. Find data, code, and more up-to-date information at https://amai-gsu.github.io/DeepEn2023.

AdaMap: High-Scalable Real-Time Cooperative Perception at the Edge

Oct 03, 2023

Abstract: Cooperative perception is the key approach to augment the perception of connected and automated vehicles (CAVs) toward safe autonomous driving. However, it is challenging to achieve real-time perception sharing for hundreds of CAVs in large-scale deployment scenarios. In this paper, we propose AdaMap, a new highly scalable real-time cooperative perception system, which achieves assured percentile end-to-end latency under time-varying network dynamics. To achieve this, we design a tightly coupled data plane and control plane. In the data plane, we design a new hybrid localization module to dynamically switch between object detection and tracking, and a novel point cloud representation module to adaptively compress and reconstruct the point cloud of detected objects. In the control plane, we design a new graph-based object selection method to deselect excessive multi-viewed point clouds of objects, and a novel approximated gradient descent algorithm to optimize the representation of point clouds. We implement AdaMap on an emulation platform, including realistic vehicle and server computation and a simulated 5G network, under a 150-CAV trace collected from the CARLA simulator. The evaluation results show that AdaMap reduces the average transmission data size by up to 49x at the cost of 0.37 reconstruction loss compared to state-of-the-art solutions, which verifies its high scalability, adaptability, and computation efficiency.

Confidence-based federated distillation for vision-based lane-centering

Jun 05, 2023

Abstract: A fundamental challenge of autonomous driving is maintaining the vehicle in the center of the lane by adjusting the steering angle. Recent advances leverage deep neural networks to predict steering decisions directly from images captured by the car cameras. Machine learning-based steering angle prediction needs to consider the vehicle's limitation in uploading large amounts of potentially private data for model training. Federated learning can address these constraints by enabling multiple vehicles to collaboratively train a global model without sharing their private data, but it is difficult to achieve good accuracy as the data distribution is often non-i.i.d. across the vehicles. This paper presents a new confidence-based federated distillation method to improve the performance of federated learning for steering angle prediction. Specifically, it proposes the novel use of entropy to determine the predictive confidence of each local model, and then selects the most confident local model as the teacher to guide the learning of the global model. A comprehensive evaluation of vision-based lane centering shows that the proposed approach can outperform FedAvg and FedDF by 11.3% and 9%, respectively.
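The entropy-based teacher selection described above can be sketched as follows. This is an illustration rather than the paper's method: it assumes each local model outputs a probability distribution over discretized steering-angle bins, and the vehicle names, distributions, and the helpers `entropy` and `select_teacher` are hypothetical.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats; lower entropy means a more confident prediction."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_teacher(predictions: Dict[str, List[float]]) -> str:
    """Pick the local model whose predictive distribution has the lowest entropy."""
    return min(predictions, key=lambda name: entropy(predictions[name]))


# Assumed per-vehicle distributions over four steering-angle bins for one input image.
local_predictions = {
    "vehicle_1": [0.25, 0.25, 0.25, 0.25],  # uniform, least confident
    "vehicle_2": [0.70, 0.10, 0.10, 0.10],  # peaked, most confident
    "vehicle_3": [0.40, 0.30, 0.20, 0.10],
}

teacher = select_teacher(local_predictions)
print(teacher)
```

In a distillation step, the selected teacher's output would then serve as the soft target for the global model on that input.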

EPAM: A Predictive Energy Model for Mobile AI

Mar 02, 2023

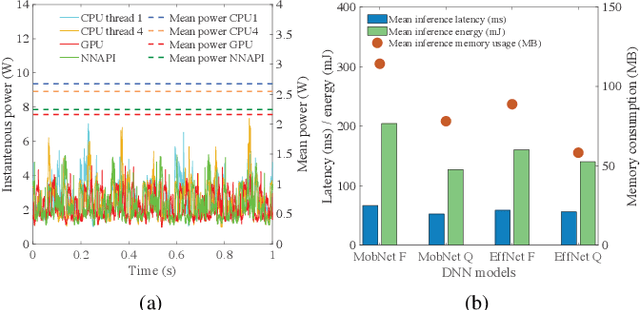

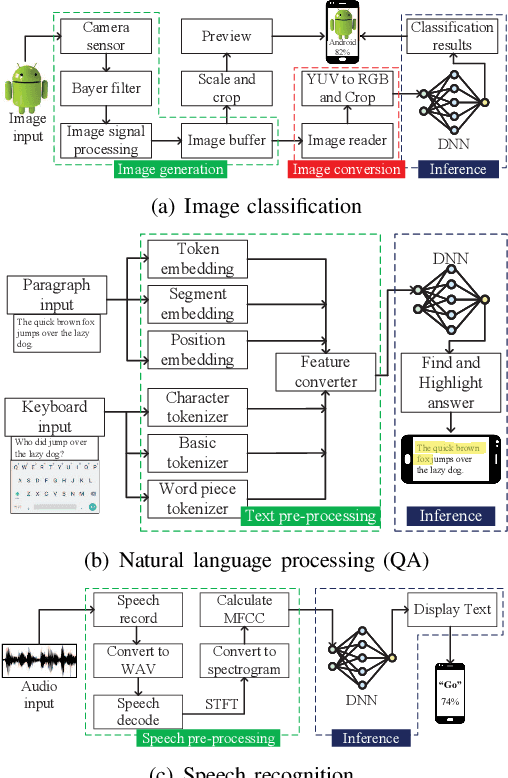

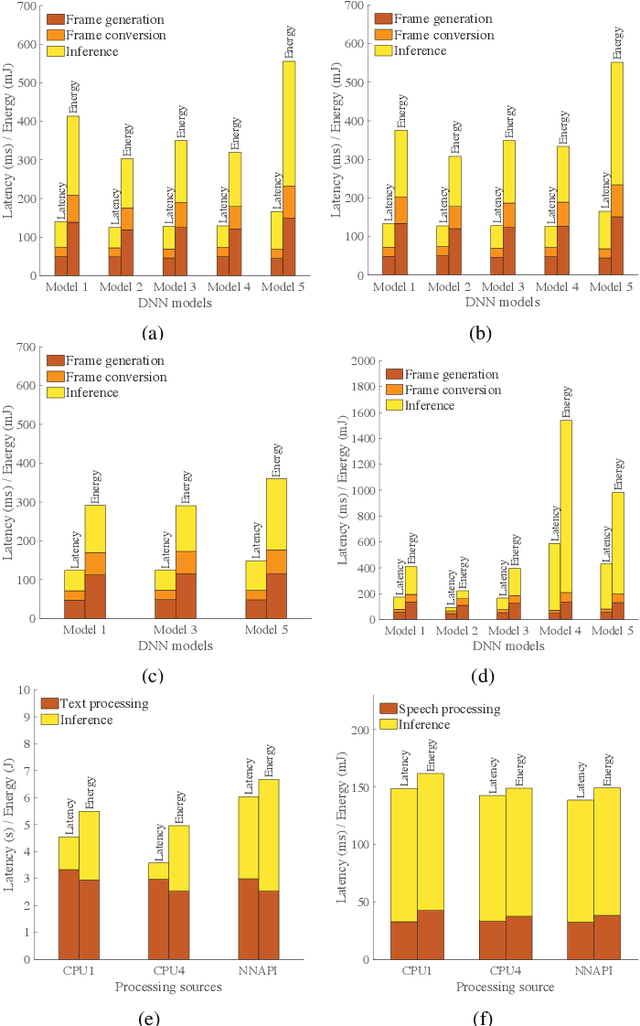

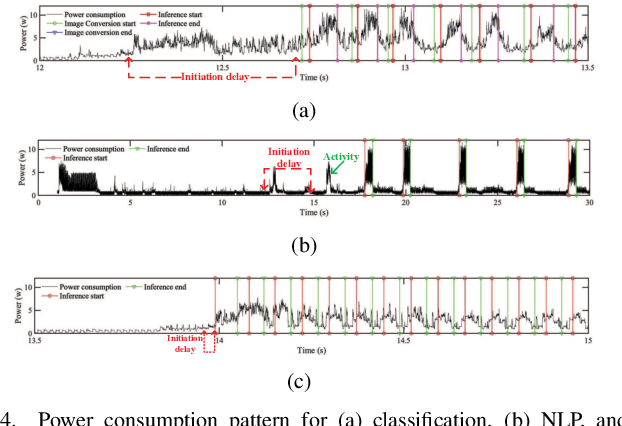

Abstract: Artificial intelligence (AI) has enabled a new paradigm of smart applications -- changing our way of living entirely. Many of these AI-enabled applications have very stringent latency requirements, especially for applications on mobile devices (e.g., smartphones, wearable devices, and vehicles). Hence, smaller and quantized deep neural network (DNN) models are developed for mobile devices, which provide faster and more energy-efficient computation for mobile AI applications. However, how AI models consume energy in a mobile device is still unexplored. Predicting the energy consumption of these models, along with their different applications, such as vision and non-vision, requires a thorough investigation of their behavior using various processing sources. In this paper, we introduce a comprehensive study of mobile AI applications considering different DNN models and processing sources, focusing on computational resource utilization, delay, and energy consumption. We measure the latency, energy consumption, and memory usage of all the models using four processing sources through extensive experiments. We explain the challenges in such investigations and how we propose to overcome them. Our study highlights important insights, such as how mobile AI behaves in different applications (vision and non-vision) using CPU, GPU, and NNAPI. Finally, we propose a novel Gaussian process regression-based general predictive energy model based on DNN structures, computation resources, and processors, which can predict the energy for each complete application cycle irrespective of device configuration and application. This study provides crucial facts and an energy prediction mechanism to the AI research community to help bring energy efficiency to mobile AI applications.

CoMap: Proactive Provision for Crowdsourcing Map in Automotive Edge Computing

Feb 07, 2023

Abstract: Crowdsourcing data from connected and automated vehicles (CAVs) is a cost-efficient way to achieve high-definition maps with up-to-date transient road information. Achieving the map with deterministic latency performance is, however, challenging due to the unpredictable resource competition and distributional resource demands. In this paper, we propose CoMap, a new crowdsourcing high-definition (HD) map to minimize the monetary cost of network resource usage while satisfying the percentile requirement of end-to-end latency. We design a novel CROP algorithm to learn the resource demands of CAV offloading, optimize offloading decisions, and proactively allocate temporal network resources in a fully distributed manner. In particular, we create a prediction model to estimate the uncertainty of resource demands based on Bayesian neural networks and develop a utilization balancing scheme to resolve the imbalanced resource utilization in individual infrastructures. We evaluate the performance of CoMap with extensive simulations in an automotive edge computing network simulator. The results show that CoMap reduces average resource usage by up to 80.4% compared to existing solutions.

Metamobility: Connecting Future Mobility with Metaverse

Jan 17, 2023

Abstract: A metaverse is a perpetual, immersive, and shared digital universe that is linked to, but extends beyond, physical reality, and this emerging technology is attracting enormous attention from different industries. In this article, we define the first holistic realization of the metaverse in the mobility domain, coined as "metamobility". We present our vision of what metamobility will be and describe its basic architecture. We also propose two use cases, tactile live maps and meta-empowered advanced driver-assistance systems (ADAS), to demonstrate how metamobility will benefit and reshape future mobility systems. Each use case is discussed from the perspective of the technology evolution, future vision, and critical research challenges, respectively. Finally, we identify multiple concrete open research issues for the development and deployment of metamobility.

Factored Conditional Filtering: Tracking States and Estimating Parameters in High-Dimensional Spaces

Jun 05, 2022

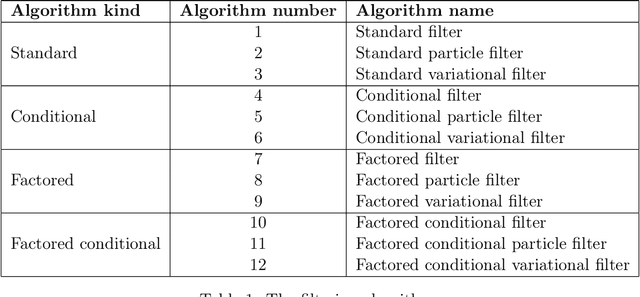

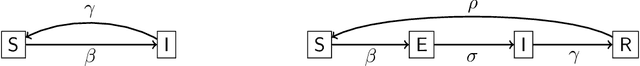

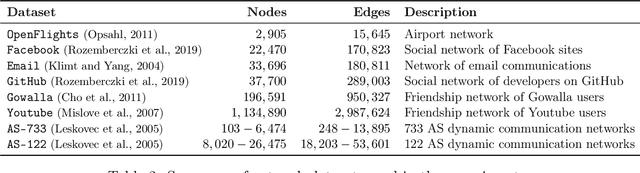

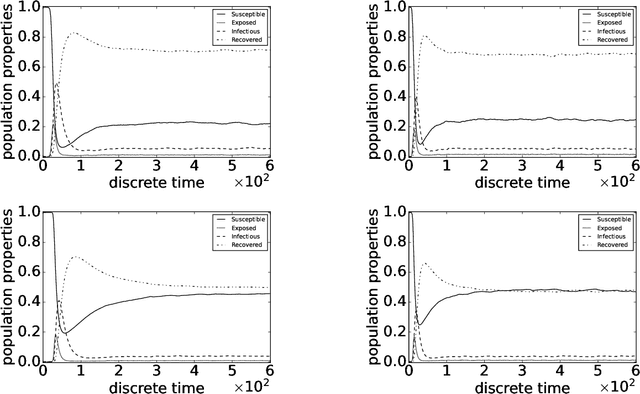

Abstract: This paper introduces the factored conditional filter, a new filtering algorithm for simultaneously tracking states and estimating parameters in high-dimensional state spaces. The conditional nature of the algorithm is used to estimate parameters and the factored nature is used to decompose the state space into low-dimensional subspaces in such a way that filtering on these subspaces gives distributions whose product is a good approximation to the distribution on the entire state space. The conditions for successful application of the algorithm are that observations be available at the subspace level and that the transition model can be factored into local transition models that are approximately confined to the subspaces; these conditions are widely satisfied in computer science, engineering, and geophysical filtering applications. We give experimental results on tracking epidemics and estimating parameters in large contact networks that show the effectiveness of our approach.

Cold-start Playlist Recommendation with Multitask Learning

Jan 18, 2019

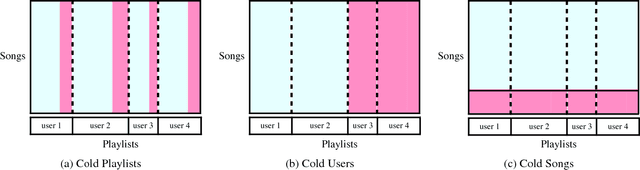

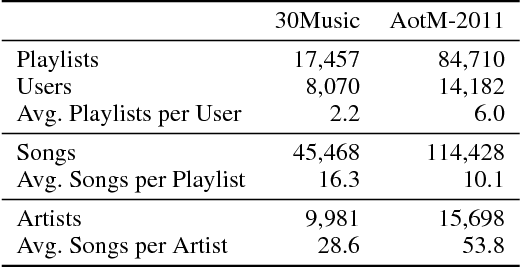

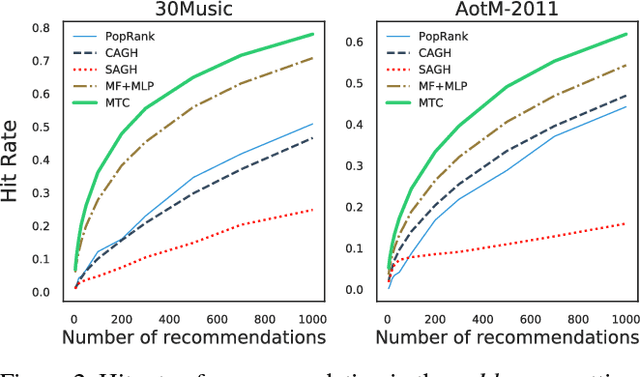

Abstract: Playlist recommendation involves producing a set of songs that a user might enjoy. We investigate this problem in three cold-start scenarios: (i) cold playlists, where we recommend songs to form new personalised playlists for an existing user; (ii) cold users, where we recommend songs to form new playlists for a new user; and (iii) cold songs, where we recommend newly released songs to extend users' existing playlists. We propose a flexible multitask learning method to deal with all three settings. The method learns from user-curated playlists, and encourages songs in a playlist to be ranked higher than those that are not by minimising a bipartite ranking loss. Inspired by an equivalence between bipartite ranking and binary classification, we show how one can efficiently approximate an optimal solution of the multitask learning objective by minimising a classification loss. Empirical results on two real playlist datasets show the proposed approach has good performance for cold-start playlist recommendation.
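As a rough illustration of a bipartite ranking loss, the sketch below averages a logistic surrogate over every pair made of one in-playlist song score and one out-of-playlist song score. The abstract does not state which surrogate the paper uses, so the logistic form, the function name `bipartite_ranking_loss`, and the example scores are all assumptions.

```python
import math
from typing import List


def bipartite_ranking_loss(pos_scores: List[float], neg_scores: List[float]) -> float:
    """Mean logistic loss over all (in-playlist, out-of-playlist) score pairs.

    The loss is small when every in-playlist song scores well above every
    out-of-playlist song, and grows as pairs become mis-ordered.
    """
    pairs = [(p, n) for p in pos_scores for n in neg_scores]
    return sum(math.log1p(math.exp(-(p - n))) for p, n in pairs) / len(pairs)


# Hypothetical model scores for songs in and not in a playlist.
good = bipartite_ranking_loss([2.0, 1.5], [0.0, -1.0])  # positives ranked above negatives
bad = bipartite_ranking_loss([0.0, -1.0], [2.0, 1.5])   # positives ranked below negatives
print(good < bad)
```

Computing the loss pair by pair costs time proportional to the product of the two set sizes, which is one reason a cheaper classification-style objective, as the abstract mentions, is attractive.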

Revisiting revisits in trajectory recommendation

Aug 17, 2017

Abstract: Trajectory recommendation is the problem of recommending a sequence of places in a city for a tourist to visit. It is strongly desirable for the recommended sequence to avoid loops, as tourists typically would not wish to revisit the same location. Given some learned model that scores sequences, how can we then find the highest-scoring sequence that is loop-free? This paper studies this problem, with three contributions. First, we detail three distinct approaches to the problem -- graph-based heuristics, integer linear programming, and list extensions of the Viterbi algorithm -- and qualitatively summarise their strengths and weaknesses. Second, we explicate how two ostensibly different approaches to the list Viterbi algorithm are in fact fundamentally identical. Third, we conduct experiments on real-world trajectory recommendation datasets to identify the tradeoffs imposed by each of the three approaches. Overall, our results indicate that a greedy graph-based heuristic offers excellent performance and runtime, leading us to recommend its use for removing loops at prediction time.
