Haoxin Wang

MamBEV: Enabling State Space Models to Learn Birds-Eye-View Representations

Mar 18, 2025

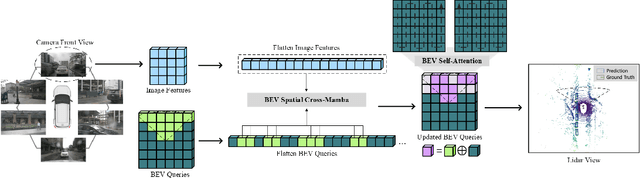

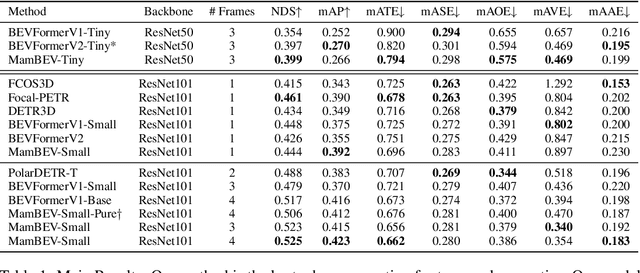

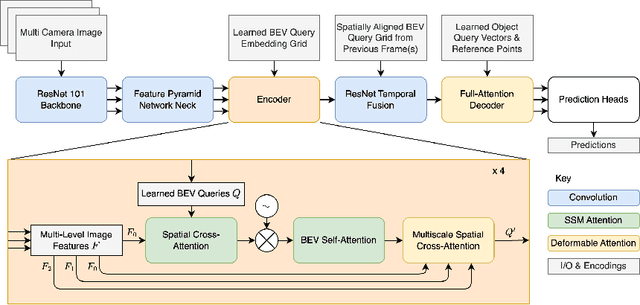

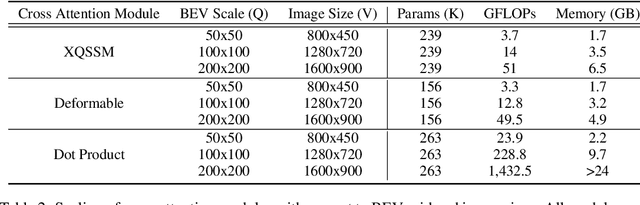

Abstract: 3D visual perception tasks, such as 3D detection from multi-camera images, are essential components of autonomous driving and assistance systems. However, designing computationally efficient methods remains a significant challenge. In this paper, we propose a Mamba-based framework called MamBEV, which learns unified Bird's Eye View (BEV) representations using linear spatio-temporal SSM-based attention. This approach supports multiple 3D perception tasks with significantly improved computational and memory efficiency. Furthermore, we introduce SSM-based cross-attention, analogous to standard cross-attention, where BEV query representations can interact with relevant image features. Extensive experiments demonstrate MamBEV's promising performance across diverse visual perception metrics, highlighting its advantages in input scaling efficiency compared to existing benchmark models.
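To illustrate why state space models scale linearly with input length, here is a minimal sketch of a scalar discrete SSM recurrence. It is not MamBEV's architecture: the function name `ssm_scan` and the scalar parameters `a`, `b`, `c` are illustrative assumptions, and real Mamba-style layers use learned, input-dependent, multi-dimensional parameters.

```python
def ssm_scan(xs, a, b, c):
    """Run a scalar linear state space recurrence over a sequence.

    h_t = a * h_{t-1} + b * x_t
    y_t = c * h_t

    All parameters are hypothetical scalars chosen for illustration.
    """
    h = 0.0
    ys = []
    for x in xs:          # a single pass: cost grows linearly with len(xs)
        h = a * h + b * x
        ys.append(c * h)
    return ys


# An impulse at the first step decays geometrically through the hidden state.
outputs = ssm_scan([1.0, 0.0, 0.0, 0.0], a=0.5, b=1.0, c=1.0)
print(outputs)
```

The state `h` has a fixed size regardless of sequence length, which is the property that distinguishes this kind of scan from pairwise attention, whose cost grows quadratically with the number of tokens.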

GreenAuto: An Automated Platform for Sustainable AI Model Design on Edge Devices

Jan 25, 2025

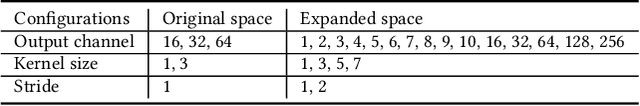

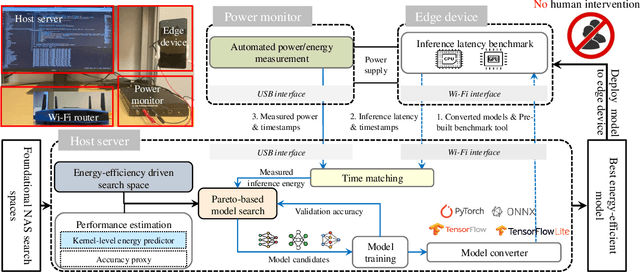

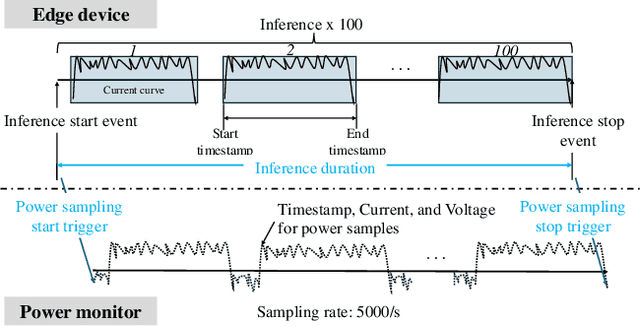

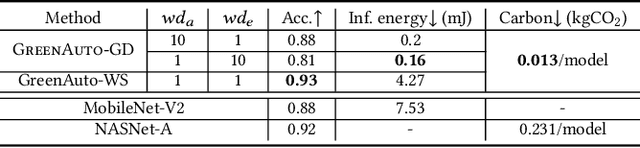

Abstract: We present GreenAuto, an end-to-end automated platform designed for sustainable AI model exploration, generation, deployment, and evaluation. GreenAuto employs a Pareto front-based search method within an expanded neural architecture search (NAS) space, guided by gradient descent to optimize model exploration. Pre-trained kernel-level energy predictors estimate energy consumption across all models, providing a global view that directs the search toward more sustainable solutions. By automating performance measurements and iteratively refining the search process, GreenAuto demonstrates the efficient identification of sustainable AI models without the need for human intervention.
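The abstract does not describe the search procedure in detail. As a minimal sketch of the Pareto-front idea alone, the following filters candidate models down to the non-dominated set. The two objectives (`error` and `energy_mj`, both minimized), the candidate values, and all names are hypothetical and are not taken from GreenAuto.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    error: float      # lower is better (hypothetical metric)
    energy_mj: float  # lower is better (hypothetical metric)


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a.error <= b.error and a.energy_mj <= b.energy_mj
    strictly_better = a.error < b.error or a.energy_mj < b.energy_mj
    return no_worse and strictly_better


def pareto_front(cands: list[Candidate]) -> list[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


candidates = [
    Candidate("A", 0.10, 50.0),
    Candidate("B", 0.12, 30.0),
    Candidate("C", 0.15, 40.0),  # worse than B on both objectives
    Candidate("D", 0.08, 90.0),
]
front = pareto_front(candidates)
print([c.name for c in front])
```

Candidate C is dropped because B beats it on both objectives; A, B, and D each represent a different trade-off between accuracy and energy, so none can be discarded without a preference between the two.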

Analyzing and Overcoming Local Optima in Complex Multi-Objective Optimization by Decomposition-Based Evolutionary Algorithms

Apr 12, 2024

Abstract: When addressing the challenge of complex multi-objective optimization problems, particularly those with non-convex and non-uniform Pareto fronts, Decomposition-based Multi-Objective Evolutionary Algorithms (MOEADs) often converge to local optima, thereby limiting solution diversity. Despite its significance, this issue has received limited theoretical exploration. Through a comprehensive geometric analysis, we identify that the traditional method of Reference Point (RP) selection fundamentally contributes to this challenge. In response, we introduce an innovative RP selection strategy, the Weight Vector-Guided and Gaussian-Hybrid method, designed to overcome the local optima issue. This approach employs a novel RP type that aligns with weight vector directions and integrates a Gaussian distribution to combine three distinct RP categories. Our research comprises two main experimental components: an ablation study involving 14 algorithms within the MOEADs framework, spanning from 2014 to 2022, to validate our theoretical framework, and a series of empirical tests to evaluate the effectiveness of our proposed method against both traditional and cutting-edge alternatives. Results demonstrate that our method achieves remarkable improvements in both population diversity and convergence.

Pre-insertion resistors temperature prediction based on improved WOA-SVR

Jan 07, 2024

Abstract: The pre-insertion resistors (PIR) within high-voltage circuit breakers are critical components that warm up by generating Joule heat when an electric current flows through them. Elevated temperature can lead to temporary closure failure and, in severe cases, the rupture of the PIR. To accurately predict the temperature of the PIR, this study combines finite element simulation techniques with Support Vector Regression (SVR) optimized by an Improved Whale Optimization Algorithm (IWOA). The IWOA includes Tent mapping, a convergence factor based on the sigmoid function, and the Ornstein-Uhlenbeck variation strategy. The IWOA-SVR model is compared with the SSA-SVR and WOA-SVR models. The results reveal that the prediction accuracies of the IWOA-SVR model were 90.2% and 81.5% (above 100$^\circ$C) in the 3$^\circ$C temperature deviation range and 96.3% and 93.4% (above 100$^\circ$C) in the 4$^\circ$C temperature deviation range, surpassing the performance of the comparative models. This research demonstrates that the proposed method can realize online monitoring of the PIR temperature, which can effectively prevent thermal faults in the PIR and provide a basis for the opening and closing of the circuit breaker within a short period.
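Tent mapping is commonly used to spread an optimizer's initial population more evenly than plain random sampling. The sketch below shows one way such an initialization could look. The breakpoint `a=0.7`, the seed `x0=0.37`, the search bounds, and the function names are all assumptions for illustration; the abstract does not give the paper's exact formulation.

```python
def tent_sequence(x0, n, a=0.7):
    """Generate n values in [0, 1] by iterating a skewed tent map.

    x_{k+1} = x_k / a              if x_k < a
            = (1 - x_k) / (1 - a)  otherwise

    The breakpoint a=0.7 is an assumed value, not taken from the paper.
    """
    xs = []
    x = x0
    for _ in range(n):
        x = x / a if x < a else (1.0 - x) / (1.0 - a)
        xs.append(x)
    return xs


def init_population(n_agents, lower, upper, x0=0.37):
    """Map the chaotic sequence onto a hypothetical search interval [lower, upper]."""
    return [lower + c * (upper - lower) for c in tent_sequence(x0, n_agents)]


# Hypothetical example: initial guesses for one SVR hyperparameter in [1, 100].
population = init_population(n_agents=8, lower=1.0, upper=100.0)
print(population)
```

A skewed breakpoint is used here because the symmetric map (`a=0.5`) collapses to zero under binary floating-point arithmetic after a few dozen iterations.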

Dance of Channel and Sequence: An Efficient Attention-Based Approach for Multivariate Time Series Forecasting

Dec 11, 2023

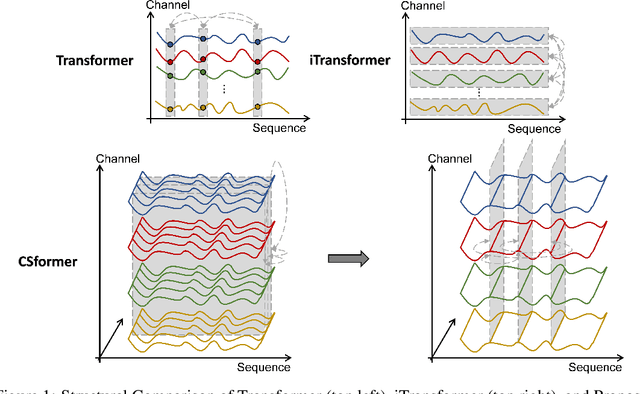

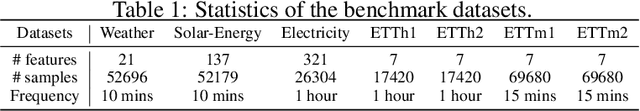

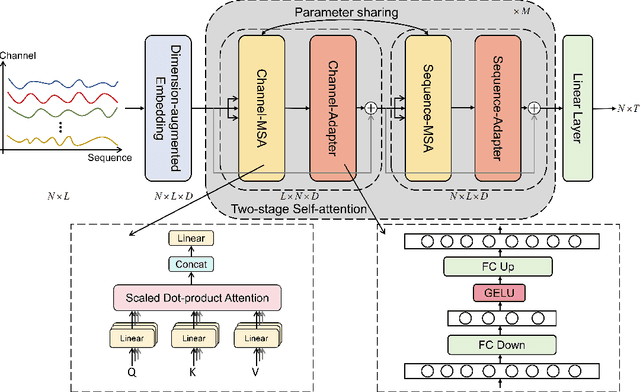

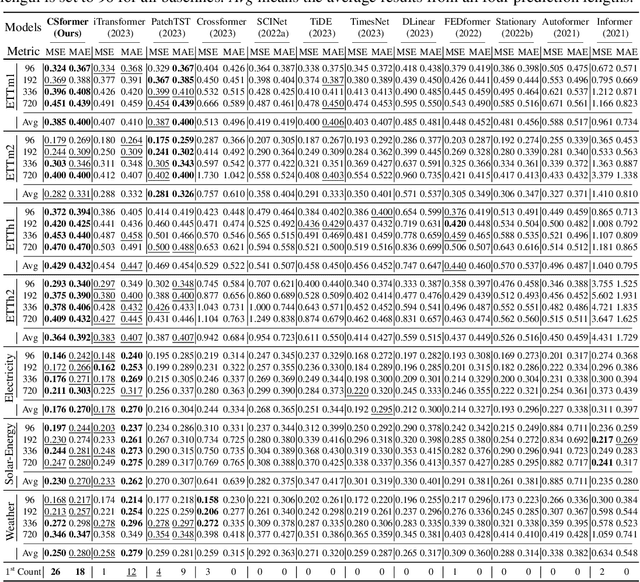

Abstract: In recent developments, predictive models for multivariate time series analysis have exhibited commendable performance through the adoption of the prevalent principle of channel independence. Nevertheless, it is imperative to acknowledge the intricate interplay among channels, which fundamentally influences the outcomes of multivariate predictions. Consequently, the notion of channel independence, while offering utility to a certain extent, becomes increasingly impractical, leading to information degradation. In response to this pressing concern, we present CSformer, an innovative framework characterized by a meticulously engineered two-stage self-attention mechanism. This mechanism is purposefully designed to enable the segregated extraction of sequence-specific and channel-specific information, while sharing parameters to promote synergy and mutual reinforcement between sequences and channels. Simultaneously, we introduce sequence adapters and channel adapters, ensuring the model's ability to discern salient features across various dimensions. Rigorous experimentation, spanning multiple real-world datasets, underscores the robustness of our approach, consistently establishing its position at the forefront of predictive performance across all datasets. This augmentation substantially enhances the capacity for feature extraction inherent to multivariate time series data, facilitating a more comprehensive exploitation of the available information.

DeepEn2023: Energy Datasets for Edge Artificial Intelligence

Nov 30, 2023

Abstract: Climate change poses one of the most significant challenges to humanity. As a result of these climatic changes, the frequency of weather, climate, and water-related disasters has multiplied fivefold over the past 50 years, resulting in over 2 million deaths and losses exceeding $3.64 trillion USD. Leveraging AI-powered technologies for sustainable development and combating climate change is a promising avenue. Numerous significant publications are dedicated to using AI to improve renewable energy forecasting, enhance waste management, and monitor environmental changes in real time. However, very few research studies focus on making AI itself environmentally sustainable. This oversight regarding the sustainability of AI within the field might be attributed to a mindset gap and the absence of comprehensive energy datasets. In addition, with the ubiquity of edge AI systems and applications, especially on-device learning, there is a pressing need to measure, analyze, and optimize their environmental sustainability, such as energy efficiency. To this end, in this paper, we propose large-scale energy datasets for edge AI, named DeepEn2023, covering a wide range of kernels, state-of-the-art deep neural network models, and popular edge AI applications. We anticipate that DeepEn2023 will improve transparency in sustainability in on-device deep learning across a range of edge AI systems and applications. For more information, including access to the dataset and code, please visit https://amai-gsu.github.io/DeepEn2023.

TimeSQL: Improving Multivariate Time Series Forecasting with Multi-Scale Patching and Smooth Quadratic Loss

Nov 19, 2023

Abstract: A time series is a special type of sequence data: a sequence of real-valued random variables collected at even intervals of time. Real-world multivariate time series are noisy and contain complicated local and global temporal dynamics, making it difficult to forecast the future series from historical observations. This work proposes a simple and effective framework, named TimeSQL, which leverages multi-scale patching and smooth quadratic loss (SQL) to tackle the above challenges. The multi-scale patching transforms the time series into two-dimensional patches with different length scales, facilitating the perception of both locality and long-term correlations in time series. SQL is derived from the rational quadratic kernel and can dynamically adjust the gradients to avoid overfitting to noise and outliers. Theoretical analysis demonstrates that, under mild conditions, the effect of noise on the model with SQL is always smaller than that with MSE. Based on the two modules, TimeSQL achieves new state-of-the-art performance on eight real-world benchmark datasets. Further ablation studies indicate that the key modules in TimeSQL can also enhance the results of other models for multivariate time series forecasting, serving as plug-and-play techniques.
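As a rough sketch of what multi-scale patching can look like, the following reshapes a one-dimensional series into two-dimensional patch arrays at several patch lengths. It assumes non-overlapping patches and drops any incomplete trailing patch; the function names and patch lengths are hypothetical, and TimeSQL's actual patching scheme may differ (for example, by using overlapping strides).

```python
def patch(series, patch_len):
    """Split a series into non-overlapping patches of length patch_len.

    Any trailing values that do not fill a whole patch are dropped
    (an assumption made for this sketch).
    """
    n_patches = len(series) // patch_len
    return [series[i * patch_len:(i + 1) * patch_len] for i in range(n_patches)]


def multi_scale_patches(series, patch_lens):
    """Return a dict mapping each patch length to its 2-D list of patches."""
    return {p: patch(series, p) for p in patch_lens}


series = list(range(12))
patches = multi_scale_patches(series, [2, 4, 6])
for length, rows in patches.items():
    print(length, rows)
```

Short patches expose local structure, while long patches let a downstream model see longer-range patterns within a single row.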

Poster: Real-Time Object Substitution for Mobile Diminished Reality with Edge Computing

Oct 23, 2023

Abstract: Diminished Reality (DR) is considered the conceptual counterpart to Augmented Reality (AR) and has recently gained increasing attention from both industry and academia. Unlike AR, which adds virtual objects to the real world, DR allows users to remove physical content from the real world. When combined with object replacement technology, it presents a further exciting avenue for exploration within the metaverse. Although a few studies have been conducted on the intersection of object substitution and DR, there is no high-quality, real-time object substitution architecture for mobile diminished reality. In this paper, we propose an end-to-end architecture to facilitate immersive and real-time scene construction for mobile devices with edge computing.

Unveiling Energy Efficiency in Deep Learning: Measurement, Prediction, and Scoring across Edge Devices

Oct 19, 2023

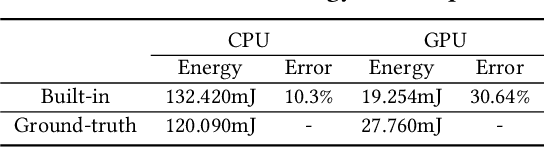

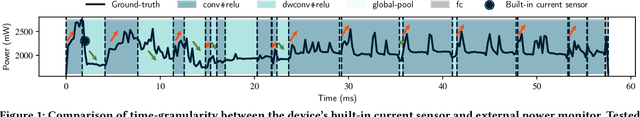

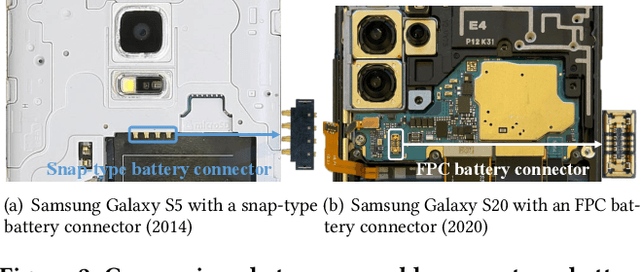

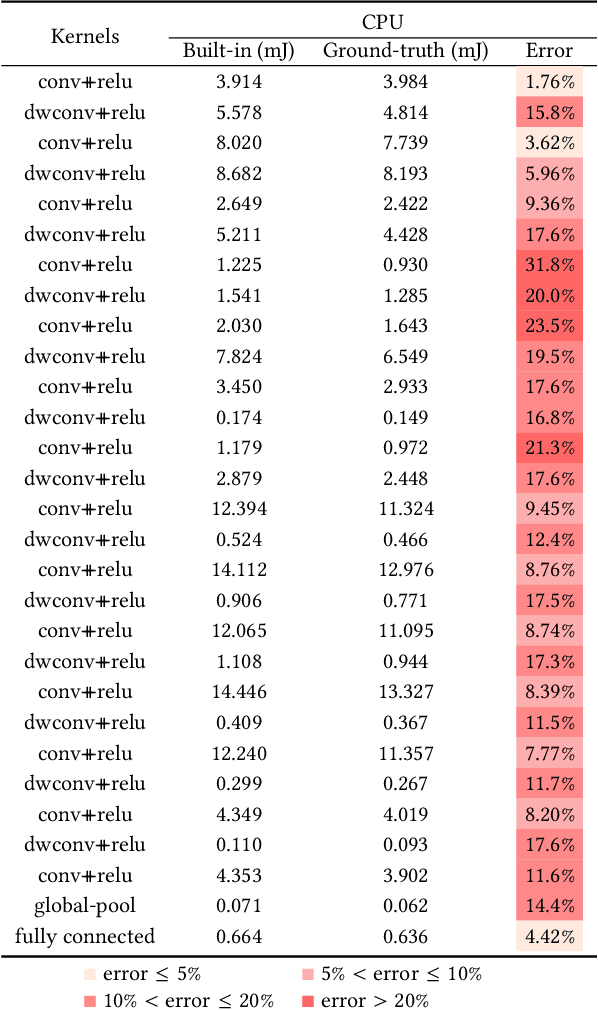

Abstract: Today, deep learning optimization is primarily driven by research focused on achieving high inference accuracy and reducing latency. However, the energy efficiency aspect is often overlooked, possibly due to the lack of a sustainability mindset in the field and the absence of a holistic energy dataset. In this paper, we conduct a threefold study, including energy measurement, prediction, and efficiency scoring, with the objective of fostering transparency in power and energy consumption within deep learning across various edge devices. Firstly, we present a detailed, first-of-its-kind measurement study that uncovers the energy consumption characteristics of on-device deep learning. This study results in the creation of three extensive energy datasets for edge devices, covering a wide range of kernels, state-of-the-art DNN models, and popular AI applications. Secondly, we design and implement the first kernel-level energy predictors for edge devices based on our kernel-level energy dataset. Evaluation results demonstrate the ability of our predictors to provide consistent and accurate energy estimations on unseen DNN models. Lastly, we introduce two scoring metrics, PCS and IECS, developed to convert complex power and energy consumption data of an edge device into a form that is easily understandable for edge device end-users. We hope our work can help shift the mindset of both end-users and the research community towards sustainability in edge computing, a principle that drives our research. Find data, code, and more up-to-date information at https://amai-gsu.github.io/DeepEn2023.

Confidence-based federated distillation for vision-based lane-centering

Jun 05, 2023

Abstract: A fundamental challenge of autonomous driving is maintaining the vehicle in the center of the lane by adjusting the steering angle. Recent advances leverage deep neural networks to predict steering decisions directly from images captured by the car cameras. Machine learning-based steering angle prediction needs to consider the vehicle's limitation in uploading large amounts of potentially private data for model training. Federated learning can address these constraints by enabling multiple vehicles to collaboratively train a global model without sharing their private data, but it is difficult to achieve good accuracy as the data distribution is often non-i.i.d. across the vehicles. This paper presents a new confidence-based federated distillation method to improve the performance of federated learning for steering angle prediction. Specifically, it proposes the novel use of entropy to determine the predictive confidence of each local model, and then selects the most confident local model as the teacher to guide the learning of the global model. A comprehensive evaluation of vision-based lane centering shows that the proposed approach can outperform FedAvg and FedDF by 11.3% and 9%, respectively.
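The entropy-based teacher selection described above can be sketched as follows. This sketch assumes each local model outputs a probability distribution over discretized steering-angle bins, which the abstract does not state; the vehicle names, the distributions, and the function names are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_teacher(predictions):
    """Return the id of the local model with the lowest-entropy prediction.

    predictions maps a model id to its output distribution over steering bins
    (an assumed output format). Lower entropy is treated as higher confidence.
    """
    return min(predictions, key=lambda model_id: entropy(predictions[model_id]))


# Hypothetical outputs from three local models on the same input image.
predictions = {
    "vehicle_1": [0.25, 0.25, 0.25, 0.25],  # maximally uncertain
    "vehicle_2": [0.70, 0.10, 0.10, 0.10],  # most peaked
    "vehicle_3": [0.40, 0.30, 0.20, 0.10],
}
teacher = select_teacher(predictions)
print(teacher)
```

In a distillation round, the selected teacher's output would then serve as the soft target for the global model on that sample. How the paper aggregates confidence across samples is not specified in the abstract.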
