Dachuan Li

MV-MOS: Multi-View Feature Fusion for 3D Moving Object Segmentation

Aug 20, 2024

Abstract: Effectively summarizing dense 3D point cloud data and extracting motion information of moving objects (moving object segmentation, MOS) is crucial to autonomous driving and robotics applications. Effectively utilizing motion and semantic features while avoiding information loss during 3D-to-2D projection remains a key challenge. In this paper, we propose a novel multi-view MOS model (MV-MOS) that fuses motion-semantic features from different 2D representations of point clouds. To effectively exploit complementary information, the motion branches of the proposed model combine motion features from both bird's eye view (BEV) and range view (RV) representations. In addition, a semantic branch is introduced to provide supplementary semantic features of moving objects. Finally, a Mamba module is utilized to fuse the semantic features with motion features and provide effective guidance for the motion branches. We validate the effectiveness of the proposed multi-branch fusion MOS framework via comprehensive experiments, and our proposed model outperforms existing state-of-the-art models on the SemanticKITTI benchmark.
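
The two 2D representations named in the abstract can be illustrated with a minimal sketch of how a single LiDAR point might be mapped into a range-view image and a BEV grid. This is not the MV-MOS implementation: the function names, image size, field-of-view angles, cell size, and grid extent are all assumed values chosen for illustration.

```python
import math

def range_view_pixel(x, y, z, width=2048, height=64,
                     fov_up_deg=3.0, fov_down_deg=-25.0):
    """Spherical projection of one point to (row, col, range).

    Image size and vertical field of view are assumed values, not taken
    from the paper.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("point at the sensor origin has no direction")
    yaw = math.atan2(y, x)        # horizontal angle
    pitch = math.asin(z / r)      # vertical angle
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    u = 0.5 * (1.0 - yaw / math.pi) * width               # column coordinate
    v = (fov_up - pitch) / (fov_up - fov_down) * height   # row coordinate
    col = min(width - 1, max(0, int(u)))
    row = min(height - 1, max(0, int(v)))
    return row, col, r

def bev_cell(x, y, cell_m=0.5, extent_m=50.0):
    """Top-down grid index of one point, or None if it lies outside the grid.

    Cell size and extent are assumed values.
    """
    if abs(x) >= extent_m or abs(y) >= extent_m:
        return None
    return int((x + extent_m) // cell_m), int((y + extent_m) // cell_m)
```

The range view discards the point's absolute planar position and the BEV discards its height, which is one way to see why the two projections carry complementary information.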

Runtime Safety Assurance for Learning-enabled Control of Autonomous Driving Vehicles

Sep 28, 2021

Abstract: Providing safety guarantees for Autonomous Vehicle (AV) systems with machine-learning-based controllers remains a challenging issue. In this work, we propose Simplex-Drive, a framework that can achieve runtime safety assurance for machine-learning-enabled controllers of AVs. The proposed Simplex-Drive consists of an unverified Deep Reinforcement Learning (DRL)-based advanced controller (AC) that achieves desirable performance in complex scenarios, a Velocity-Obstacle (VO)-based baseline safe controller (BC) with provable safety guarantees, and a verified mode management unit that monitors the operation status and switches the control authority between AC and BC based on safety-related conditions. We provide a formal correctness proof of Simplex-Drive and conduct a lane-changing case study in dense traffic scenarios. The simulation experiment results demonstrate that Simplex-Drive can always ensure operation safety without sacrificing control performance, even if the DRL policy may lead to deviations from the safe status.
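
The switching pattern described in the abstract can be sketched roughly as below. This is a generic Simplex-style illustration, not the Simplex-Drive implementation: the `State` fields, the time-to-collision check, and the 2-second threshold are hypothetical stand-ins for the verified safety conditions in the paper.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class State:
    gap_m: float              # distance to the nearest obstacle (hypothetical field)
    closing_speed_mps: float  # positive when the gap is shrinking (hypothetical field)

def is_safe(state: State, min_ttc_s: float = 2.0) -> bool:
    """Assumed safety condition: time-to-collision stays above a threshold."""
    if state.closing_speed_mps <= 0.0:
        return True  # the gap is not shrinking
    return state.gap_m / state.closing_speed_mps >= min_ttc_s

def simplex_step(state: State,
                 advanced: Callable[[State], float],
                 baseline: Callable[[State], float],
                 min_ttc_s: float = 2.0) -> Tuple[str, float]:
    """Give control to the advanced controller (AC) while the safety
    condition holds; otherwise hand over to the baseline controller (BC)."""
    if is_safe(state, min_ttc_s):
        return "AC", advanced(state)
    return "BC", baseline(state)
```

For example, with `advanced = lambda s: 1.0` and `baseline = lambda s: -3.0`, a state with a 50 m gap closing at 5 m/s keeps the AC in control, while a 5 m gap at the same closing speed hands control to the BC.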

Unit-Modulus Wireless Federated Learning Via Penalty Alternating Minimization

Aug 31, 2021

Abstract: Wireless federated learning (FL) is an emerging machine learning paradigm that trains a global parametric model from distributed datasets via wireless communications. This paper proposes a unit-modulus wireless FL (UMWFL) framework, which simultaneously uploads local model parameters and computes global model parameters via optimized phase shifting. The proposed framework avoids sophisticated baseband signal processing, leading to both low communication delays and implementation costs. A training loss bound is derived and a penalty alternating minimization (PAM) algorithm is proposed to minimize the nonconvex nonsmooth loss bound. Experimental results in the Car Learning to Act (CARLA) platform show that the proposed UMWFL framework with PAM algorithm achieves smaller training losses and testing errors than those of the benchmark scheme.

Capture Uncertainties in Deep Neural Networks for Safe Operation of Autonomous Driving Vehicles

Aug 11, 2021

Abstract: Uncertainties in Deep Neural Network (DNN)-based perception and vehicle motion pose challenges to the development of safe autonomous driving vehicles. In this paper, we propose a safe motion planning framework featuring the quantification and propagation of DNN-based perception uncertainties and motion uncertainties. Contributions of this work are twofold: (1) A Bayesian Deep Neural Network model which detects 3D objects and quantitatively captures the associated aleatoric and epistemic uncertainties of DNNs; (2) An uncertainty-aware motion planning algorithm (PU-RRT) that accounts for uncertainties in object detection and the ego-vehicle's motion. The proposed approaches are validated via simulated complex scenarios built in CARLA. Experimental results show that the proposed motion planning scheme can cope with uncertainties of DNN-based perception and vehicle motion, and improve the operational safety of autonomous vehicles while still achieving desirable efficiency.

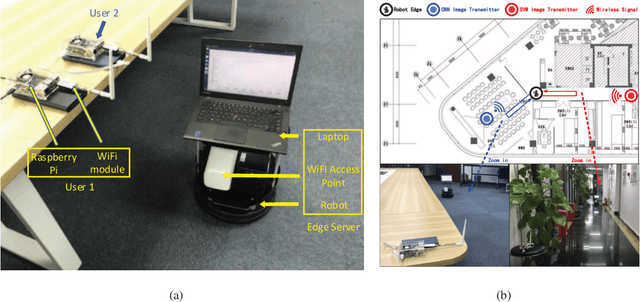

Learning Centric Wireless Resource Allocation for Edge Computing: Algorithm and Experiment

Oct 29, 2020

Abstract: Edge intelligence is an emerging network architecture that integrates sensing, communication, and computing components and supports various machine learning applications. A fundamental communication question in this setting is how to allocate the limited wireless resources (such as time and energy) to the simultaneous model training of heterogeneous learning tasks. Existing methods ignore two important facts: 1) different models have heterogeneous demands on training data; 2) there is a mismatch between the simulated environment and the real-world environment. As a result, they could lead to low learning performance in practice. This paper proposes the learning centric wireless resource allocation (LCWRA) scheme that maximizes the worst learning performance of multiple classification tasks. Analysis shows that the optimal transmission time has an inverse power relationship with respect to the classification error. Finally, both simulation and experimental results are provided to verify the performance of the proposed LCWRA scheme and its robustness in real implementation.
