Chongyang Zhang

ADPretrain: Advancing Industrial Anomaly Detection via Anomaly Representation Pretraining

Nov 07, 2025

Abstract: The current mainstream and state-of-the-art anomaly detection (AD) methods are largely built on feature networks pretrained on ImageNet. However, whether the pretraining is supervised or self-supervised, the pretraining process on ImageNet does not match the goal of anomaly detection (i.e., pretraining on natural images doesn't aim to distinguish between normal and abnormal). Moreover, natural images and industrial image data in AD scenarios typically exhibit a distribution shift. These two issues can cause ImageNet-pretrained features to be suboptimal for AD tasks. To further promote the development of the AD field, pretrained representations designed specifically for AD tasks are much needed and highly valuable. To this end, we propose a novel AD representation learning framework specially designed for learning robust and discriminative pretrained representations for industrial anomaly detection. Specifically, staying close to the goal of anomaly detection (i.e., focusing on discrepancies between normals and anomalies), we propose angle- and norm-oriented contrastive losses to maximize the angle size and norm difference between normal and abnormal features simultaneously. To avoid the distribution shift from natural images to AD images, our pretraining is performed on a large-scale AD dataset, RealIAD. To further alleviate the potential shift between pretraining data and downstream AD datasets, we learn the pretrained AD representations based on a class-generalizable representation, residual features. For evaluation, based on five embedding-based AD methods, we simply replace their original features with our pretrained representations. Extensive experiments on five AD datasets and five backbones consistently show the superiority of our pretrained features. The code is available at https://github.com/xcyao00/ADPretrain.
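
The abstract does not give the form of the angle- and norm-oriented losses, so the following is only a minimal sketch of the general idea, assuming a simple hinge formulation. The function names, the margins `angle_margin` and `norm_margin`, and the hinge form itself are hypothetical and are not taken from the paper or its repository.

```python
import math

def norm(v):
    """Euclidean norm of a feature vector."""
    return math.sqrt(sum(x * x for x in v))

def angle_between(u, v):
    """Angle in radians between two non-zero feature vectors."""
    cos = sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp for numerical safety

def angle_norm_penalty(normal, abnormal,
                       angle_margin=math.pi / 2, norm_margin=1.0):
    """Hypothetical hinge penalty (an assumption, not the paper's loss).

    It is zero once the pair is separated by at least `angle_margin`
    in angle and at least `norm_margin` in norm, and positive otherwise.
    """
    angle_term = max(0.0, angle_margin - angle_between(normal, abnormal))
    norm_term = max(0.0, norm_margin - abs(norm(abnormal) - norm(normal)))
    return angle_term + norm_term
```

Under these assumptions, minimizing the penalty pushes a normal/abnormal pair apart in both direction and magnitude, which is the behaviour the abstract describes qualitatively.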

ResAD: A Simple Framework for Class Generalizable Anomaly Detection

Oct 26, 2024

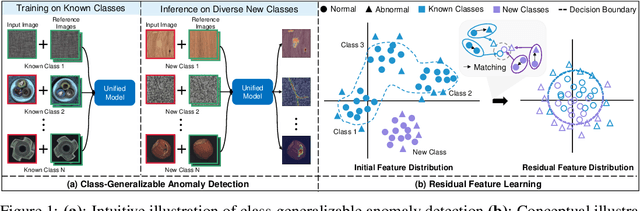

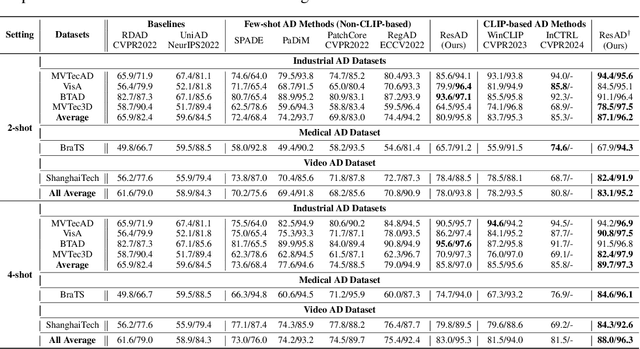

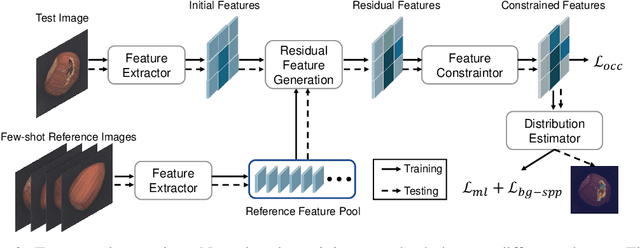

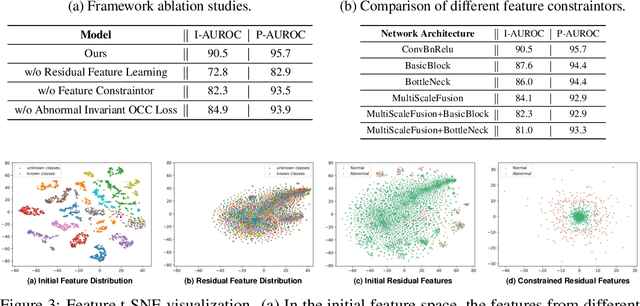

Abstract: This paper explores the problem of class-generalizable anomaly detection, where the objective is to train one unified AD model that can generalize to detect anomalies in diverse classes from different domains without any retraining or fine-tuning on the target data. Because normal feature representations vary significantly across classes, the widely studied one-for-one AD models are poorly class-generalizable (i.e., performance drops dramatically when they are used for new classes). In this work, we propose a simple but effective framework (called ResAD) that can be directly applied to detect anomalies in new classes. Our main insight is to learn the residual feature distribution rather than the initial feature distribution. In this way, we can significantly reduce feature variations. Even in new classes, the distribution of normal residual features would not shift markedly from the learned distribution. Therefore, the learned model can be directly adapted to new classes. ResAD consists of three components: (1) a Feature Converter that converts initial features into residual features; (2) a simple and shallow Feature Constraintor that constrains normal residual features into a spatial hypersphere to further reduce feature variations and maintain consistency in feature scales among different classes; (3) a Feature Distribution Estimator that estimates the normal residual feature distribution, so that anomalies can be recognized as out-of-distribution. Despite its simplicity, ResAD can achieve remarkable anomaly detection results when directly used in new classes. The code is available at https://github.com/xcyao00/ResAD.
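
To illustrate why residual features vary less across classes than initial features, here is a minimal sketch. It assumes the residual is formed by subtracting the nearest normal reference feature of the same class; the abstract does not state how the Feature Converter computes residuals, so this choice and the function names are assumptions for illustration only.

```python
import math

def nearest_reference(feature, references):
    """Return the normal reference feature closest to `feature` (Euclidean)."""
    return min(references, key=lambda r: math.dist(feature, r))

def residual_feature(feature, references):
    """Assumed residual: the feature minus its nearest normal reference."""
    ref = nearest_reference(feature, references)
    return [f - r for f, r in zip(feature, ref)]

# Two classes whose initial features live in very different regions.
refs_class_a = [[10.0, 10.0], [10.5, 9.5]]
refs_class_b = [[-5.0, 0.0]]

res_a = residual_feature([10.1, 10.0], refs_class_a)
res_b = residual_feature([-5.0, 0.2], refs_class_b)
```

In this toy setting the initial features of the two classes are far apart, while both residuals are small vectors near the origin, so a single model of the normal residual distribution could plausibly cover both classes.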

Hierarchical Gaussian Mixture Normalizing Flow Modeling for Unified Anomaly Detection

Mar 20, 2024

Abstract: Unified anomaly detection (AD) is one of the most challenging settings in anomaly detection, where one unified model is trained with normal samples from multiple classes with the objective of detecting anomalies in these classes. For such a challenging task, popular normalizing flow (NF) based AD methods may fall into a "homogeneous mapping" issue, where the NF-based AD models are biased to generate similar latent representations for both normal and abnormal features, and thereby lead to a high miss rate for anomalies. In this paper, we propose a novel Hierarchical Gaussian mixture normalizing flow modeling method for accomplishing unified Anomaly Detection, which we call HGAD. Our HGAD consists of two key components: inter-class Gaussian mixture modeling and intra-class mixed class centers learning. Compared to the previous NF-based AD methods, the hierarchical Gaussian mixture modeling approach can bring stronger representation capability to the latent space of normalizing flows, so that even a complex multi-class distribution can be well represented and learned in the latent space. In this way, we can avoid mapping different class distributions into the same single Gaussian prior, thus effectively avoiding or mitigating the "homogeneous mapping" issue. We further show that the more distinguishable the class centers are, the easier it is to avoid the bias issue. Thus, we further propose a mutual information maximization loss for better structuring the latent feature space. We evaluate our method on four real-world AD benchmarks, where we significantly improve on the previous NF-based AD methods and also outperform the SOTA unified AD methods.
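
The contrast between a single Gaussian prior and a mixture prior in the latent space can be sketched as follows. This is a generic isotropic Gaussian mixture log-density, not the HGAD implementation; the shared `sigma`, the class centers, and the uniform weights are assumptions chosen for illustration.

```python
import math

def log_gaussian(z, center, sigma=1.0):
    """Log-density of an isotropic Gaussian N(center, sigma^2 I) at z."""
    d = len(z)
    sq_dist = sum((a - b) ** 2 for a, b in zip(z, center))
    return -0.5 * sq_dist / sigma**2 - 0.5 * d * math.log(2 * math.pi * sigma**2)

def log_mixture(z, centers, weights, sigma=1.0):
    """Log-density of a Gaussian mixture, computed with log-sum-exp."""
    terms = [math.log(w) + log_gaussian(z, c, sigma)
             for c, w in zip(centers, weights)]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))

# A latent code from a class centered far from the origin.
z = [5.0, 5.0]
single_prior = log_gaussian(z, [0.0, 0.0])
mixture_prior = log_mixture(z, [[0.0, 0.0], [5.0, 5.0]], [0.5, 0.5])
```

With one center per class, a normal latent code from the second class scores well under the mixture but poorly under a single origin-centered Gaussian, which is why a single prior forces all classes to be squeezed into the same region.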

Focus the Discrepancy: Intra- and Inter-Correlation Learning for Image Anomaly Detection

Aug 06, 2023

Abstract: Humans recognize anomalies through two aspects: larger patch-wise representation discrepancies and weaker patch-to-normal-patch correlations. However, previous AD methods didn't sufficiently combine these two complementary aspects when designing AD models. To this end, we find that the Transformer can ideally satisfy the two aspects owing to its strength in the unified modeling of patch-wise representations and patch-to-patch correlations. In this paper, we propose a novel AD framework: FOcus-the-Discrepancy (FOD), which can simultaneously spot the patch-wise, intra- and inter-discrepancies of anomalies. The major characteristic of our method is that we renovate the self-attention maps in transformers into Intra-Inter-Correlation (I2Correlation). The I2Correlation contains a two-branch structure that first explicitly establishes intra- and inter-image correlations, and then fuses the features of the two branches to spotlight the abnormal patterns. To learn the intra- and inter-correlations adaptively, we propose RBF-kernel-based target-correlations as learning targets for self-supervised learning. Besides, we introduce an entropy constraint strategy to solve the mode collapse issue in optimization and further amplify the normal-abnormal distinguishability. Extensive experiments on three unsupervised real-world AD benchmarks show the superior performance of our approach. Code will be available at https://github.com/xcyao00/FOD.

Towards Diverse Temporal Grounding under Single Positive Labels

Mar 12, 2023

Abstract: Temporal grounding aims to retrieve moments of the described event within an untrimmed video by a language query. Typically, existing methods assume annotations are precise and unique, yet one query may describe multiple moments in many cases. Hence, simply taking it as a one-vs-one mapping task and striving to match single-label annotations will inevitably introduce false negatives during optimization. In this study, we reformulate this task as a one-vs-many optimization problem under the condition of single positive labels. The unlabeled moments are considered unobserved rather than negative, and we explore mining potential positive moments to assist in multiple moment retrieval. In this setting, we propose a novel Diverse Temporal Grounding framework, termed DTG-SPL, which mainly consists of a positive moment estimation (PME) module and a diverse moment regression (DMR) module. PME leverages semantic reconstruction information and an expected positive regularization to uncover potential positive moments in an online fashion. Under the supervision of these pseudo positives, DMR is able to localize, in parallel, diverse moments that meet the needs of different users. The entire framework allows for end-to-end optimization as well as fast inference. Extensive experiments on Charades-STA and ActivityNet Captions show that our method achieves superior performance in terms of both single-label and multi-label metrics.

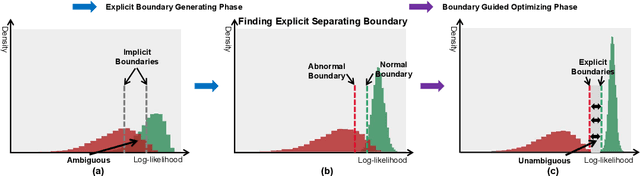

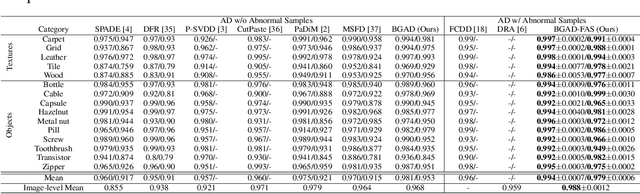

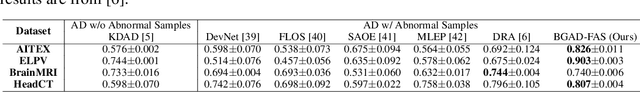

Explicit Boundary Guided Semi-Push-Pull Contrastive Learning for Better Anomaly Detection

Jul 04, 2022

Abstract: Most anomaly detection algorithms focus on modeling the distribution of normal samples and treating anomalies as outliers. However, the discriminative performance of the model may be insufficient due to the lack of knowledge about anomalies. Thus, anomalies should be exploited as much as possible. However, utilizing a few known anomalies during training may cause another issue: the model may be biased by those known anomalies and fail to generalize to unseen anomalies. In this paper, we aim to exploit a few existing anomalies with a carefully designed explicit boundary guided semi-push-pull learning strategy, which can enhance discriminability while mitigating the bias problem caused by insufficient known anomalies. Our model is based on two core designs: First, finding one explicit separating boundary as the guidance for further contrastive learning. Specifically, we employ a normalizing flow to learn the normal feature distribution, then find an explicit separating boundary close to the distribution edge. The obtained explicit and compact separating boundary relies only on the normal feature distribution, thus the bias problem caused by a few known anomalies can be mitigated. Second, learning more discriminative features under the guidance of the explicit separating boundary. A boundary guided semi-push-pull loss is developed to only pull the normal features together while pushing the abnormal features apart from the separating boundary beyond a certain margin region. In this way, our model can form a more explicit and discriminative decision boundary to achieve better results for known and also unseen anomalies, while also maintaining high training efficiency. Extensive experiments on the widely-used MVTecAD benchmark show that the proposed method achieves new state-of-the-art results, with 98.8% image-level AUROC and 99.4% pixel-level AUROC.
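
The semi-push-pull idea can be sketched with scalar scores. This sketch assumes each feature has a score (for example a log-likelihood from the normalizing flow) where higher means more normal, that normals are pulled above the boundary, and that anomalies are pushed below the boundary by at least a margin. The hinge form, the function name, and the parameters `boundary` and `margin` are assumptions; the abstract does not give the actual loss.

```python
def semi_push_pull(normal_scores, abnormal_scores, boundary, margin):
    """Hypothetical boundary-guided hinge loss (an assumption, not the paper's).

    Normal samples are penalized only when they fall below the boundary;
    abnormal samples are penalized only when they lie above
    `boundary - margin`, i.e. inside or beyond the margin region.
    """
    pull = sum(max(0.0, boundary - s) for s in normal_scores)
    push = sum(max(0.0, s - (boundary - margin)) for s in abnormal_scores)
    return pull + push

# One normal sample is below the boundary and one anomaly is inside the margin.
loss = semi_push_pull(
    normal_scores=[1.0, -0.5],
    abnormal_scores=[-3.0, -0.5],
    boundary=0.0,
    margin=1.0,
)
```

Samples that are already on the correct side contribute nothing, so under these assumptions the boundary is set by the normal distribution and the few known anomalies only act near it.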

Embracing Uncertainty: Decoupling and De-bias for Robust Temporal Grounding

Mar 31, 2021

Abstract: Temporal grounding aims to localize temporal boundaries within untrimmed videos by language queries, but it faces the challenge of two types of inevitable human uncertainties: query uncertainty and label uncertainty. The two uncertainties stem from human subjectivity, leading to limited generalization ability of temporal grounding. In this work, we propose a novel DeNet (Decoupling and De-bias) to embrace human uncertainty: Decoupling - We explicitly disentangle each query into a relation feature and a modified feature. The relation feature, which is mainly based on skeleton-like words (including nouns and verbs), aims to extract basic and consistent information in the presence of query uncertainty. Meanwhile, the modified feature, associated with style-like words (including adjectives, adverbs, etc.), represents the subjective information, and thus brings personalized predictions; De-bias - We propose a de-bias mechanism to generate diverse predictions, aiming to alleviate the bias caused by single-style annotations in the presence of label uncertainty. Moreover, we put forward new multi-label metrics to diversify the performance evaluation. Extensive experiments show that our approach is more effective and robust than state-of-the-art methods on the Charades-STA and ActivityNet Captions datasets.

Which to Match? Selecting Consistent GT-Proposal Assignment for Pedestrian Detection

Mar 18, 2021

Abstract: Accurate pedestrian classification and localization have received considerable attention due to their wide applications such as security monitoring, autonomous driving, etc. Although pedestrian detectors have made great progress in recent years, the fixed Intersection over Union (IoU) based assignment-regression manner still limits their performance. Two main factors are responsible for this: 1) the IoU threshold faces a dilemma in that a lower one will result in more false positives, while a higher one will filter out matched positives; 2) the IoU-based GT-Proposal assignment suffers from an inconsistent supervision problem in which spatially adjacent proposals with similar features are assigned to different ground-truth boxes, which means some very similar proposals may be forced to regress towards different targets, and this confuses the bounding-box regression when predicting locations. In this paper, we first raise the point that Regression Direction affects the performance of pedestrian detection. Consequently, we address the weakness of IoU by introducing a geometry-sensitive search algorithm as a new assignment and regression metric. Different from the previous IoU-based one-to-one assignment of one proposal to one ground-truth box, the proposed method attempts to seek a reasonable matching between the sets of proposals and ground-truth boxes. Specifically, we boost the MR-FPPI under R$_{75}$ by 8.8% on the Citypersons dataset. Furthermore, by incorporating this method as a metric into state-of-the-art pedestrian detectors, we show a consistent improvement.
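
The inconsistent supervision problem of IoU-based assignment can be reproduced with a small sketch. The code below implements the standard IoU for axis-aligned boxes and a plain highest-IoU assignment with a threshold; it illustrates the baseline the abstract criticizes, not the proposed search algorithm. The box coordinates and the 0.5 threshold are made-up values for illustration.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def assign(proposal, gt_boxes, threshold=0.5):
    """Index of the ground-truth box with the highest IoU, or None if the
    best IoU is below `threshold` (the proposal is then a negative)."""
    best = max(range(len(gt_boxes)), key=lambda i: iou(proposal, gt_boxes[i]))
    return best if iou(proposal, gt_boxes[best]) >= threshold else None

# Two overlapping pedestrians and two proposals shifted by only 2 pixels.
gts = [(0, 0, 10, 20), (8, 0, 18, 20)]
p1 = (3, 0, 13, 20)
p2 = (5, 0, 15, 20)
targets = (assign(p1, gts), assign(p2, gts))
```

Here the two nearly identical proposals receive different regression targets, which is the kind of conflicting supervision described in point 2) of the abstract.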

Where, What, Whether: Multi-modal Learning Meets Pedestrian Detection

Dec 20, 2020

Abstract: Pedestrian detection benefits greatly from deep convolutional neural networks (CNNs). However, it is inherently hard for CNNs to handle situations involving occlusion and scale variation. In this paper, we propose W$^3$Net, which attempts to address the above challenges by decomposing the pedestrian detection task into Where, What and Whether problems, targeting pedestrian localization, scale prediction and classification, respectively. Specifically, for a pedestrian instance, we formulate its feature in three steps. i) We generate a bird view map, which is naturally free from occlusion issues, and scan all points on it to look for suitable locations for each pedestrian instance. ii) Instead of utilizing pre-fixed anchors, we model the interdependency between depth and scale, aiming to generate depth-guided scales at different locations for better matching instances of different sizes. iii) We learn a latent vector shared by both the visual and corpus spaces, by which false positives with similar vertical structure but lacking human partial features are filtered out. We achieve state-of-the-art results on widely used datasets (Citypersons and Caltech). In particular, when evaluating on the heavy occlusion subset, our results reduce MR$^{-2}$ from 49.3% to 18.7% on Citypersons, and from 45.18% to 28.33% on Caltech.

Visual Relationship Detection with Relative Location Mining

Nov 02, 2019

Abstract: Visual relationship detection, a challenging task that aims to find and distinguish the interactions between object pairs in one image, has received much attention recently. In this work, we propose a novel visual relationship detection framework that deeply mines and utilizes the relative location of each object-pair in every stage of the procedure. In both stages, the relative location information of each object-pair is abstracted and encoded as an auxiliary feature to improve the distinguishing capability of object-pair proposing and predicate recognition, respectively. Moreover, a Gated Graph Neural Network (GGNN) is introduced to mine and measure the relevance of predicates using relative location. With the location-based GGNN, non-exclusive predicates with similar spatial positions can first be clustered and then smoothed to have close classification scores, so that top-$n$ recall can be increased further. Experiments on two widely used datasets, VRD and VG, show that, by deeply mining and exploiting relative location information, our proposed model significantly outperforms the current state-of-the-art.
