Explicit Boundary Guided Semi-Push-Pull Contrastive Learning for Better Anomaly Detection


Jul 04, 2022

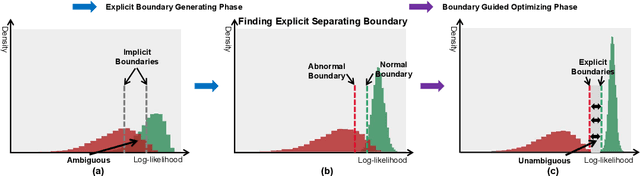

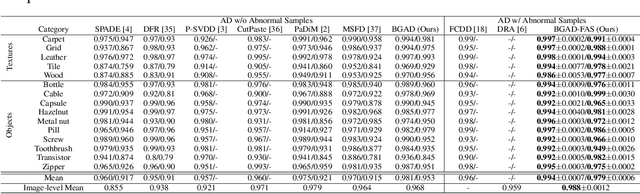

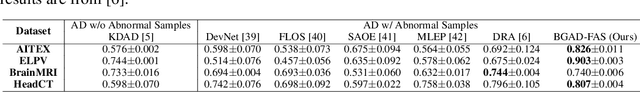

Most anomaly detection algorithms focus on modeling the distribution of normal samples and treat anomalies as outliers. However, the discriminative performance of such models may be insufficient because they lack knowledge about anomalies. Known anomalies should therefore be exploited whenever possible. Yet using only a few known anomalies during training raises another issue: the model may become biased toward those known anomalies and fail to generalize to unseen ones. In this paper, we aim to exploit a few existing anomalies with a carefully designed explicit boundary guided semi-push-pull learning strategy, which enhances discriminability while mitigating the bias problem caused by insufficient known anomalies. Our model is based on two core designs. First, we find an explicit separating boundary to guide further contrastive learning. Specifically, we employ a normalizing flow to learn the normal feature distribution and then find an explicit separating boundary close to the distribution edge. Because the obtained explicit and compact separating boundary relies only on the normal feature distribution, the bias problem caused by a few known anomalies can be mitigated. Second, we learn more discriminative features under the guidance of the explicit separating boundary. A boundary guided semi-push-pull loss is developed that pulls only the normal features together while pushing the abnormal features away from the separating boundary beyond a certain margin region. In this way, our model forms a more explicit and discriminative decision boundary that achieves better results for both known and unseen anomalies while maintaining high training efficiency. Extensive experiments on the widely used MVTecAD benchmark show that the proposed method achieves new state-of-the-art results, with 98.8% image-level AUROC and 99.4% pixel-level AUROC.
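The two designs described in the abstract can be illustrated with a small sketch. It is not the authors' implementation: it assumes each feature has already been scored with a log-likelihood by some normalizing flow, that the boundary is taken as a low quantile of the normal scores, and that the margin is a fixed offset below that boundary. The names `find_boundary`, `semi_push_pull_loss`, `beta`, and `margin` are hypothetical and chosen only for illustration.

```python
def find_boundary(normal_scores, beta=0.05):
    """Pick a boundary near the edge of the normal score distribution.

    Assumption: the boundary is the beta-quantile of the normal
    log-likelihoods, so it depends only on normal samples.
    """
    ordered = sorted(normal_scores)
    index = min(int(beta * len(ordered)), len(ordered) - 1)
    return ordered[index]


def semi_push_pull_loss(normal_scores, abnormal_scores, boundary, margin):
    """Hinge-style loss guided by an explicit boundary (illustrative only).

    Pull: normal scores are penalized only while they lie below the boundary.
    Push: abnormal scores are penalized only while they lie above
    (boundary - margin), i.e. inside or above the margin region.
    Scores that already satisfy their constraint contribute zero loss.
    """
    pull = sum(max(boundary - s, 0.0) for s in normal_scores)
    push = sum(max(s - (boundary - margin), 0.0) for s in abnormal_scores)
    count = len(normal_scores) + len(abnormal_scores)
    return (pull + push) / count if count else 0.0


if __name__ == "__main__":
    # Toy log-likelihood scores; real values would come from a trained flow.
    normal = [-1.0, -0.5, -0.2, -3.0]
    abnormal = [-1.5, -4.0]
    b = find_boundary(normal, beta=0.25)
    print("boundary:", b)
    print("loss:", semi_push_pull_loss(normal, abnormal, b, margin=1.0))
```

In this sketch the "semi" behaviour comes from the hinge terms: normal samples that are already above the boundary and abnormal samples that are already beyond the margin produce no gradient signal, so only the samples near the boundary drive learning.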
