Xincheng Yao

ADPretrain: Advancing Industrial Anomaly Detection via Anomaly Representation Pretraining

Nov 07, 2025

Abstract: The current mainstream and state-of-the-art anomaly detection (AD) methods are largely built on pretrained feature networks obtained from ImageNet pretraining. However, whether supervised or self-supervised, pretraining on ImageNet does not match the goal of anomaly detection (i.e., pretraining on natural images does not aim to distinguish between normal and abnormal samples). Moreover, natural images and the industrial images in AD scenarios typically exhibit a distribution shift. These two issues can make ImageNet-pretrained features suboptimal for AD tasks. To further promote the development of the AD field, pretrained representations designed specifically for AD tasks are urgently needed and highly valuable. To this end, we propose a novel AD representation learning framework designed to learn robust and discriminative pretrained representations for industrial anomaly detection. Specifically, staying close to the goal of anomaly detection (i.e., focusing on the discrepancies between normal and abnormal samples), we propose angle- and norm-oriented contrastive losses that simultaneously maximize the angle and the norm difference between normal and abnormal features. To avoid the distribution shift from natural images to AD images, our pretraining is performed on a large-scale AD dataset, RealIAD. To further alleviate the potential shift between the pretraining data and downstream AD datasets, we learn the pretrained AD representations on top of a class-generalizable representation, residual features. For evaluation, we take five embedding-based AD methods and simply replace their original features with our pretrained representations. Extensive experiments on five AD datasets with five backbones consistently show the superiority of our pretrained features. The code is available at https://github.com/xcyao00/ADPretrain.
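The angle and norm objectives can be illustrated with a toy NumPy sketch. It is not the paper's implementation, whose exact contrastive formulation is not given in the abstract. The hypothetical `residual_features` helper subtracts each feature's nearest normal reference feature; `angle_loss` is the mean cosine similarity between normal and abnormal residuals (minimizing it widens the angle between them); and `norm_loss` is a hinge asking abnormal residual norms to exceed normal ones by an assumed `margin`.

```python
import numpy as np


def residual_features(feats, reference):
    """Hypothetical helper: subtract each feature's nearest normal reference feature."""
    # Squared Euclidean distances between every feature and every reference, shape (n, m)
    d2 = ((feats[:, None, :] - reference[None, :, :]) ** 2).sum(axis=-1)
    return feats - reference[d2.argmin(axis=1)]


def angle_loss(normal, abnormal, eps=1e-8):
    """Toy angle term: mean cosine similarity over all normal/abnormal pairs (lower = larger angles)."""
    n = normal / (np.linalg.norm(normal, axis=1, keepdims=True) + eps)
    a = abnormal / (np.linalg.norm(abnormal, axis=1, keepdims=True) + eps)
    return float((n @ a.T).mean())


def norm_loss(normal, abnormal, margin=1.0):
    """Toy norm term: abnormal residual norms should exceed normal ones by `margin` (assumed hinge)."""
    norm_n = np.linalg.norm(normal, axis=1)
    norm_a = np.linalg.norm(abnormal, axis=1)
    gap = norm_a[None, :] - norm_n[:, None]  # every normal/abnormal pair
    return float(np.maximum(0.0, margin - gap).mean())


ref = np.array([[0.0, 0.0], [1.0, 0.0]])
print(residual_features(np.array([[1.0, 1.0]]), ref))              # [[0. 1.]]
print(angle_loss(np.array([[1.0, 0.0]]), np.array([[0.0, 1.0]])))  # 0.0 (orthogonal)
print(norm_loss(np.array([[0.1, 0.0]]), np.array([[3.0, 0.0]])))   # 0.0 (margin met)
```

Minimizing both terms together pushes abnormal residuals away from normal ones in direction and in magnitude, which is the intuition the abstract describes.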

HRVVS: A High-resolution Video Vasculature Segmentation Network via Hierarchical Autoregressive Residual Priors

Jul 30, 2025

Abstract: The segmentation of the hepatic vasculature in surgical videos holds substantial clinical significance in the context of hepatectomy procedures. However, owing to the lack of a suitable dataset and the inherently complex task characteristics, little research has been reported in this domain. To address this issue, we first introduce a high-quality, frame-by-frame annotated hepatic vasculature dataset containing 35 long hepatectomy videos and 11,442 high-resolution frames. On this basis, we propose a novel high-resolution video vasculature segmentation network, dubbed HRVVS. We embed a pretrained visual autoregressive modeling (VAR) model into different layers of the hierarchical encoder as prior information to reduce the information degradation caused by downsampling. In addition, we design a dynamic memory decoder on a multi-view segmentation network to minimize the transmission of redundant information while preserving more details between frames. Extensive experiments on surgical video datasets demonstrate that HRVVS significantly outperforms state-of-the-art methods. The source code and dataset will be made publicly available at https://github.com/scott-yjyang/HRVVS.

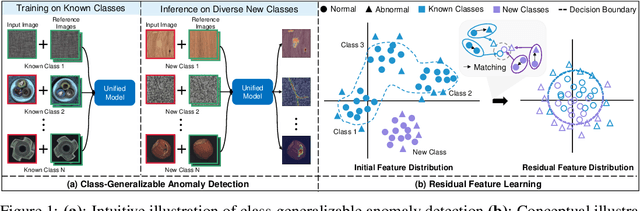

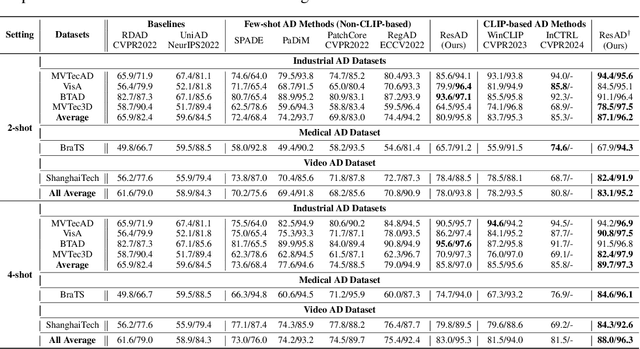

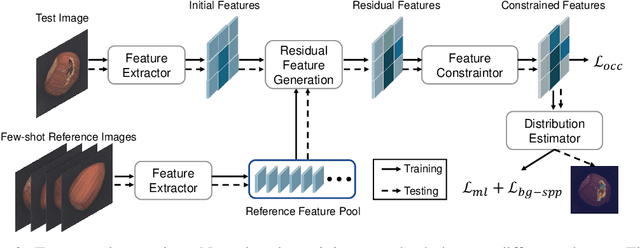

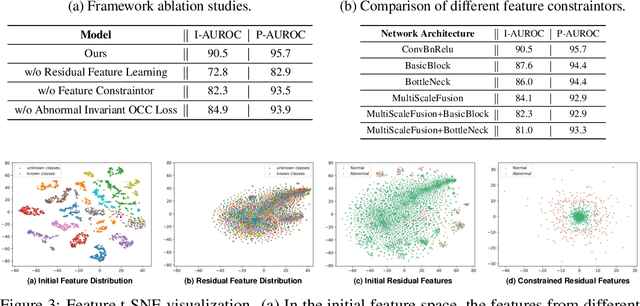

ResAD: A Simple Framework for Class Generalizable Anomaly Detection

Oct 26, 2024

Abstract: This paper explores the problem of class-generalizable anomaly detection, where the objective is to train one unified AD model that can generalize to detect anomalies in diverse classes from different domains without any retraining or fine-tuning on the target data. Because normal feature representations vary significantly across classes, the widely studied one-for-one AD models are poorly class-generalizable (i.e., their performance drops dramatically when they are applied to new classes). In this work, we propose a simple but effective framework, called ResAD, that can be directly applied to detect anomalies in new classes. Our main insight is to learn the residual feature distribution rather than the initial feature distribution, which significantly reduces feature variations. Even in new classes, the distribution of normal residual features does not shift remarkably from the learned distribution, so the learned model can be directly adapted to new classes. ResAD consists of three components: (1) a Feature Converter that converts initial features into residual features; (2) a simple and shallow Feature Constraintor that constrains normal residual features into a spatial hypersphere to further reduce feature variations and keep feature scales consistent across classes; and (3) a Feature Distribution Estimator that estimates the normal residual feature distribution, so that anomalies can be recognized as out-of-distribution. Despite its simplicity, ResAD achieves remarkable anomaly detection results when directly applied to new classes. The code is available at https://github.com/xcyao00/ResAD.
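The residual-feature idea can be sketched in NumPy under several simplifying assumptions. The Feature Converter is approximated by nearest-neighbour subtraction against normal reference features of the target class, the Feature Constraintor is omitted, and a single Gaussian with Mahalanobis scoring stands in for the Feature Distribution Estimator (the paper uses a learned estimator). `to_residual` and `GaussianEstimator` are hypothetical names.

```python
import numpy as np


def to_residual(feats, ref):
    """Stand-in Feature Converter: subtract the nearest normal reference feature."""
    d2 = ((feats[:, None, :] - ref[None, :, :]) ** 2).sum(axis=-1)
    return feats - ref[d2.argmin(axis=1)]


class GaussianEstimator:
    """Stand-in distribution estimator: one Gaussian fitted to normal residual features."""

    def fit(self, residuals, reg=1e-3):
        self.mean = residuals.mean(axis=0)
        # Small ridge term keeps the covariance invertible
        cov = np.cov(residuals, rowvar=False) + reg * np.eye(residuals.shape[1])
        self.precision = np.linalg.inv(cov)
        return self

    def score(self, residuals):
        """Squared Mahalanobis distance; larger values look more out-of-distribution."""
        diff = residuals - self.mean
        return np.einsum("ij,jk,ik->i", diff, self.precision, diff)


rng = np.random.default_rng(0)
# Training class lives around +5; fit on residuals against its own reference features.
train = rng.normal(5.0, 1.0, size=(500, 4))
est = GaussianEstimator().fit(to_residual(train[100:], train[:100]))

# Unseen class lives around -5: only reference features are needed, no retraining.
ref_new = rng.normal(-5.0, 1.0, size=(100, 4))
normal_new = rng.normal(-5.0, 1.0, size=(20, 4))
anomal_new = normal_new + 6.0  # crude synthetic "anomalies"
print(est.score(to_residual(normal_new, ref_new)).mean())
print(est.score(to_residual(anomal_new, ref_new)).mean())
```

Although the new class sits far from the training class in raw feature space, its normal residuals stay small, so the estimator fitted on the training class still separates its normal and anomalous samples.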

Hierarchical Gaussian Mixture Normalizing Flow Modeling for Unified Anomaly Detection

Mar 20, 2024

Abstract: Unified anomaly detection (AD) is one of the most challenging settings in anomaly detection: a single unified model is trained with normal samples from multiple classes, with the objective of detecting anomalies in all of these classes. For such a challenging task, popular normalizing flow (NF) based AD methods may fall into a "homogeneous mapping" issue, where the NF-based AD models are biased to generate similar latent representations for both normal and abnormal features, which leads to a high missing rate of anomalies. In this paper, we propose a novel Hierarchical Gaussian mixture normalizing flow modeling method for accomplishing unified Anomaly Detection, which we call HGAD. HGAD consists of two key components: inter-class Gaussian mixture modeling and intra-class mixed class centers learning. Compared to previous NF-based AD methods, the hierarchical Gaussian mixture modeling approach brings stronger representation capability to the latent space of normalizing flows, so that even complex multi-class distributions can be well represented and learned in the latent space. In this way, we avoid mapping different class distributions into the same single Gaussian prior, thus effectively avoiding or mitigating the "homogeneous mapping" issue. We further point out that the more distinguishable the class centers are, the more effectively the bias issue is avoided. We therefore propose a mutual information maximization loss to better structure the latent feature space. We evaluate our method on four real-world AD benchmarks, where it significantly improves on previous NF-based AD methods and also outperforms the SOTA unified AD methods.
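Scoring latent codes against a Gaussian mixture prior, rather than a single Gaussian, can be sketched as follows. Assumptions: latent vectors `z` are already produced by a normalizing flow (not shown), each mixture component is an isotropic unit-variance Gaussian centred on a class center, mixture weights are uniform by default, and the anomaly score is the negative log-likelihood. `gmm_log_likelihood` and `anomaly_score` are hypothetical helpers, not HGAD's code.

```python
import numpy as np


def gmm_log_likelihood(z, centers, log_weights=None):
    """log p(z) under a mixture of unit-variance Gaussians centred on `centers`."""
    k, d = centers.shape
    if log_weights is None:
        log_weights = np.full(k, -np.log(k))  # uniform mixture weights
    # log N(z; mu_k, I) plus log weight for every sample/component pair, shape (n, k)
    sq = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    a = -0.5 * sq - 0.5 * d * np.log(2 * np.pi) + log_weights[None, :]
    # Numerically stable log-sum-exp over the components
    m = a.max(axis=1, keepdims=True)
    return (m + np.log(np.exp(a - m).sum(axis=1, keepdims=True))).ravel()


def anomaly_score(z, centers):
    """Negative log-likelihood: higher means less likely under the mixture prior."""
    return -gmm_log_likelihood(z, centers)


centers = np.array([[-4.0, 0.0], [4.0, 0.0]])  # e.g. two class centers
z = np.array([[-4.0, 0.0], [4.0, 0.0], [0.0, 6.0]])
print(anomaly_score(z, centers))  # first two equal and small, third much larger
```

With a single center at the origin instead, the point `[0, 0]` lying between the two classes would become the most likely latent code, which illustrates why forcing several classes onto one Gaussian prior is problematic.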

Focus the Discrepancy: Intra- and Inter-Correlation Learning for Image Anomaly Detection

Aug 06, 2023

Abstract: Humans recognize anomalies through two aspects: larger patch-wise representation discrepancies and weaker patch-to-normal-patch correlations. However, previous AD methods have not sufficiently combined these two complementary aspects when designing AD models. We find that the Transformer can ideally satisfy both aspects, owing to its strength in the unified modeling of patch-wise representations and patch-to-patch correlations. In this paper, we propose a novel AD framework, FOcus-the-Discrepancy (FOD), which can simultaneously spot the patch-wise, intra- and inter-discrepancies of anomalies. The major characteristic of our method is that we renovate the self-attention maps in transformers into Intra-Inter-Correlation (I2Correlation). I2Correlation has a two-branch structure that first explicitly establishes intra- and inter-image correlations, and then fuses the features of the two branches to spotlight the abnormal patterns. To learn the intra- and inter-correlations adaptively, we propose RBF-kernel-based target correlations as learning targets for self-supervised learning. Besides, we introduce an entropy constraint strategy to solve the mode collapse issue in optimization and further amplify the normal-abnormal distinguishability. Extensive experiments on three unsupervised real-world AD benchmarks show the superior performance of our approach. Code will be available at https://github.com/xcyao00/FOD.
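One way to picture an RBF-kernel-based target correlation is as a row-normalized Gaussian kernel over patch-grid distances, compared against an attention map, together with the row entropy that an entropy constraint would act on. The grid layout, `sigma`, and the use of KL divergence as the matching criterion are assumptions for illustration; the paper's exact targets and losses may differ.

```python
import numpy as np


def rbf_target(h, w, sigma=1.0):
    """Row-normalized RBF kernel over an h x w patch grid (assumed target form)."""
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    pos = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)  # (h*w, 2)
    d2 = ((pos[:, None, :] - pos[None, :, :]) ** 2).sum(axis=-1)
    k = np.exp(-d2 / (2 * sigma ** 2))  # nearby patches get larger target correlation
    return k / k.sum(axis=1, keepdims=True)


def softmax(x):
    e = np.exp(x - x.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def row_kl(p, q, eps=1e-12):
    """Mean KL(p || q) over rows: one possible attention-to-target alignment loss."""
    return float((p * (np.log(p + eps) - np.log(q + eps))).sum(axis=1).mean())


def row_entropy(p, eps=1e-12):
    """Mean entropy of attention rows; an entropy term can discourage collapsed maps."""
    return float(-(p * np.log(p + eps)).sum(axis=1).mean())


target = rbf_target(4, 4, sigma=1.0)
attn = softmax(np.random.default_rng(0).normal(size=(16, 16)))  # stand-in attention map
print(row_kl(attn, target), row_entropy(attn))
```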

Normalized Blood Flow Index in Optical Coherence Tomography Angiography Provides a Sensitive Biomarker of Early Diabetic Retinopathy

Dec 22, 2022

Abstract: Purpose: To evaluate the sensitivity of normalized blood flow index (NBFI) for detecting early diabetic retinopathy (DR). Methods: Optical coherence tomography angiography (OCTA) images of 30 eyes from 20 healthy controls, 21 eyes of diabetic patients with no DR (NoDR), and 26 eyes from 22 patients with mild non-proliferative DR (NPDR) were analyzed in this study. The OCTA images were centered on the fovea and covered a 6 mm × 6 mm area. En face projections of the superficial vascular plexus (SVP) and the deep capillary plexus (DCP) were obtained for quantitative OCTA feature analysis. Three quantitative OCTA features were examined: blood vessel density (BVD), blood flow flux (BFF), and normalized blood flow index (NBFI). Each feature was calculated from both the SVP and the DCP, and its sensitivity for distinguishing the three study cohorts was evaluated. Results: The only quantitative feature capable of distinguishing between all three cohorts was NBFI in the DCP image. The comparative study revealed that both BVD and BFF were able to distinguish mild NPDR from the controls and from NoDR. However, neither BVD nor BFF was sensitive enough to separate NoDR from the healthy controls. Conclusion: NBFI has been demonstrated to be a sensitive biomarker of early DR, revealing retinal blood flow abnormalities better than traditional BVD and BFF. NBFI in the DCP was verified as the most sensitive biomarker, supporting the view that diabetes affects the DCP earlier than the SVP in DR.
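Of the three features, blood vessel density has a simple common definition: the fraction of the en face image area occupied by vessels after binarization. The sketch below assumes a fixed global threshold on a min-max normalized projection; the threshold value and the `blood_vessel_density` helper are hypothetical, and real pipelines may segment vessels differently. The abstract does not define BFF or NBFI precisely, so they are not reproduced here.

```python
import numpy as np


def blood_vessel_density(enface, threshold=0.5):
    """Fraction of en face pixels classified as vessel by an assumed global threshold."""
    img = np.asarray(enface, dtype=float)
    span = img.max() - img.min()
    if span == 0:
        return 0.0  # a flat image contains no detectable vessels
    norm = (img - img.min()) / span  # rescale intensities to [0, 1]
    return float((norm > threshold).mean())


toy = np.array([[0.0, 1.0], [1.0, 0.2]])
print(blood_vessel_density(toy))  # 0.5
```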

A portable widefield fundus camera with high dynamic range imaging capability

Dec 20, 2022

Abstract: Fundus photography is indispensable for the clinical detection and management of eye diseases. Limited image contrast and field of view (FOV) are common limitations of conventional fundus cameras, making it difficult to detect subtle abnormalities at the early stages of eye diseases. Further improvements in image contrast and FOV coverage are important for improving early disease detection and reliable treatment assessment. We report here a portable fundus camera with wide-FOV and high dynamic range (HDR) imaging capabilities. Miniaturized indirect ophthalmoscopy illumination was employed to achieve a portable design for nonmydriatic, widefield fundus photography. Orthogonal polarization control was used to eliminate illumination reflectance artifacts. With independent power controls, three fundus images were sequentially acquired and fused to achieve the HDR function for local image contrast enhancement. A 101° eye-angle (67° visual-angle) snapshot FOV was achieved for nonmydriatic fundus photography. The effective FOV can be readily expanded up to 190° eye-angle (134° visual-angle) with the aid of a fixation target, without the need for pharmacologic pupillary dilation. The effectiveness of HDR imaging was validated in both normal healthy and pathologic eyes, in comparison with a conventional fundus camera.
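Fusing several differently illuminated captures into one locally contrast-enhanced image can be sketched with a simplified, single-scale variant of exposure fusion: each output pixel is a weighted average of the captures, weighted by how close each capture's intensity is to mid-gray. This weighting rule, the `sigma` value, and the `fuse_exposures` name are assumptions; the abstract does not state which fusion algorithm the authors used.

```python
import numpy as np


def fuse_exposures(images, sigma=0.2, eps=1e-12):
    """Single-scale exposure fusion of grayscale images with intensities in [0, 1]."""
    stack = np.stack([np.asarray(im, dtype=float) for im in images])  # (n, H, W)
    # "Well-exposedness" weight: Gaussian around mid-gray 0.5 (assumed criterion)
    w = np.exp(-((stack - 0.5) ** 2) / (2 * sigma ** 2)) + eps
    w /= w.sum(axis=0, keepdims=True)  # per-pixel weights sum to 1
    return (w * stack).sum(axis=0)


# Three captures of the same two pixels at low, medium, and high illumination power
dark = np.array([[0.05, 0.45]])
mid = np.array([[0.20, 0.90]])
bright = np.array([[0.50, 1.00]])
fused = fuse_exposures([dark, mid, bright])
print(fused)
```

Each fused pixel leans toward whichever capture exposed that region best, so dim peripheral areas and bright central areas can both keep usable contrast.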

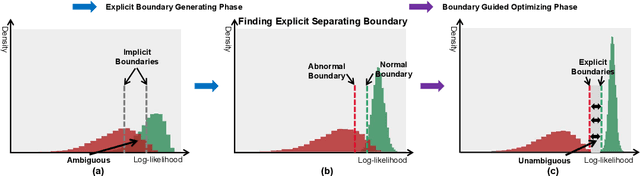

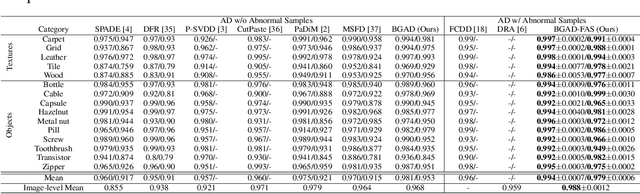

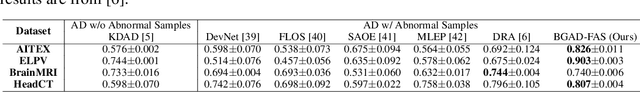

Explicit Boundary Guided Semi-Push-Pull Contrastive Learning for Better Anomaly Detection

Jul 04, 2022

Abstract: Most anomaly detection algorithms focus on modeling the distribution of normal samples and treating anomalies as outliers. However, the discriminative performance of such models may be insufficient due to the lack of knowledge about anomalies. Thus, anomalies should be exploited as much as possible. However, utilizing a few known anomalies during training raises another issue: the model may be biased by those known anomalies and fail to generalize to unseen anomalies. In this paper, we aim to exploit a few existing anomalies with a carefully designed explicit boundary guided semi-push-pull learning strategy, which can enhance discriminability while mitigating the bias problem caused by insufficient known anomalies. Our model is based on two core designs. First, we find one explicit separating boundary as the guidance for further contrastive learning. Specifically, we employ a normalizing flow to learn the normal feature distribution, then find an explicit separating boundary close to the edge of the distribution. The obtained explicit and compact separating boundary relies only on the normal feature distribution, so the bias problem caused by a few known anomalies can be mitigated. Second, we learn more discriminative features under the guidance of the explicit separating boundary. A boundary guided semi-push-pull loss is developed to only pull the normal features together while pushing the abnormal features apart from the separating boundary beyond a certain margin region. In this way, our model can form a more explicit and discriminative decision boundary to achieve better results for both known and unseen anomalies, while also maintaining high training efficiency. Extensive experiments on the widely used MVTecAD benchmark show that the proposed method achieves new state-of-the-art results, with 98.8% image-level AUROC and 99.4% pixel-level AUROC.
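A minimal sketch of a boundary-guided semi-push-pull objective on scalar anomaly scores. Assumptions: each sample already has a score such as its negative log-likelihood under the normalizing flow; the explicit boundary is taken as a high quantile of the normal scores (one way to place it close to the distribution edge); normal scores past the boundary are pulled back, and known anomalies are pushed beyond `boundary + margin`. The quantile level, `margin`, hinge form, and function names are hypothetical choices, not the paper's exact formulation.

```python
import numpy as np


def find_boundary(normal_scores, q=0.95):
    """Explicit boundary near the edge of the normal score distribution (assumed quantile rule)."""
    return float(np.quantile(np.asarray(normal_scores, dtype=float), q))


def semi_push_pull_loss(normal_scores, abnormal_scores, boundary, margin=1.0):
    """Pull normal scores inside the boundary; push known anomalies past boundary + margin."""
    normal = np.asarray(normal_scores, dtype=float)
    abnormal = np.asarray(abnormal_scores, dtype=float)
    # Pull term: only normal samples scoring beyond the boundary are penalized
    pull = np.maximum(0.0, normal - boundary).mean()
    # Push term: known anomalies still inside the margin region are penalized
    push = np.maximum(0.0, boundary + margin - abnormal).mean() if abnormal.size else 0.0
    return float(pull + push)


rng = np.random.default_rng(0)
normal = rng.normal(0.0, 1.0, size=1000)  # e.g. NLL scores of normal features
b = find_boundary(normal)
print(b, semi_push_pull_loss(normal, [b + 3.0, b + 0.2], b))
```

Because the boundary is computed from normal scores alone, the few known anomalies only enter through the push term and do not move the boundary itself.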

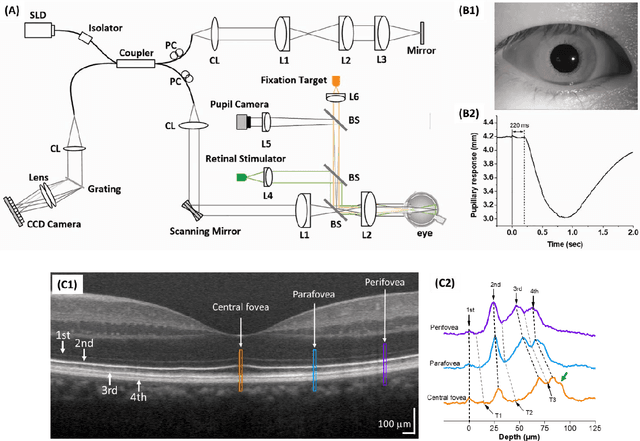

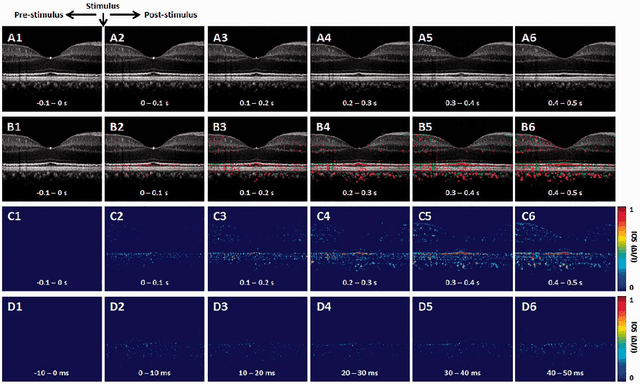

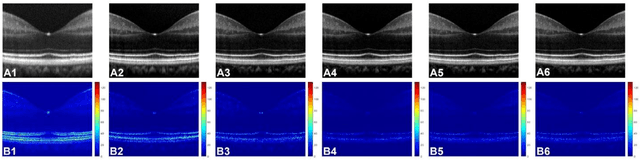

Functional Optical Coherence Tomography for Intrinsic Signal Optoretinography: Recent Developments and Deployment Challenges

Feb 18, 2022

Abstract: Intrinsic optical signal (IOS) imaging of the retina, also termed optoretinography (ORG), promises a noninvasive method for the objective assessment of retinal function. By providing an unparalleled capability to differentiate individual retinal layers, functional optical coherence tomography (OCT) has been actively investigated for intrinsic signal ORG measurements. However, clinical deployment of functional OCT for quantitative ORG is still challenging due to the lack of a standardized imaging protocol and the complexity of IOS sources and mechanisms. This article summarizes recent developments of functional OCT for ORG measurement, including OCT intensity- and phase-based IOS processing. Technical challenges and perspectives for quantitative IOS analysis and ORG interpretation are discussed.
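Phase-based IOS processing commonly converts the OCT phase change at a given depth over time into an axial displacement via Δz = λ₀·Δφ / (4π·n). The sketch below assumes complex-valued OCT samples at one fixed depth, an 840 nm center wavelength, and a tissue refractive index of 1.38; these values and the `phase_displacement` helper are illustrative assumptions, and sign conventions differ between systems.

```python
import numpy as np


def phase_displacement(signal, wavelength_m=840e-9, n=1.38):
    """Axial displacement (m) over time from complex OCT samples at one depth, relative to frame 0."""
    s = np.asarray(signal, dtype=complex)
    dphi = np.angle(s * np.conj(s[0]))  # wrapped phase change versus the first frame
    dphi = np.unwrap(dphi)              # remove 2*pi jumps along the time axis
    return wavelength_m * dphi / (4 * np.pi * n)


# Synthetic check: a layer moving 300 nm over 50 frames
true_dz = np.linspace(0.0, 300e-9, 50)
phase = 4 * np.pi * 1.38 * true_dz / 840e-9
recovered = phase_displacement(np.exp(1j * phase))
print(np.allclose(recovered, true_dz, atol=1e-12))  # True
```

In practice, ORG studies often reference the phase of one retinal layer to another to suppress bulk eye motion; that refinement is omitted here.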

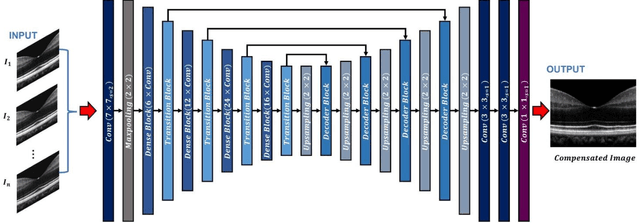

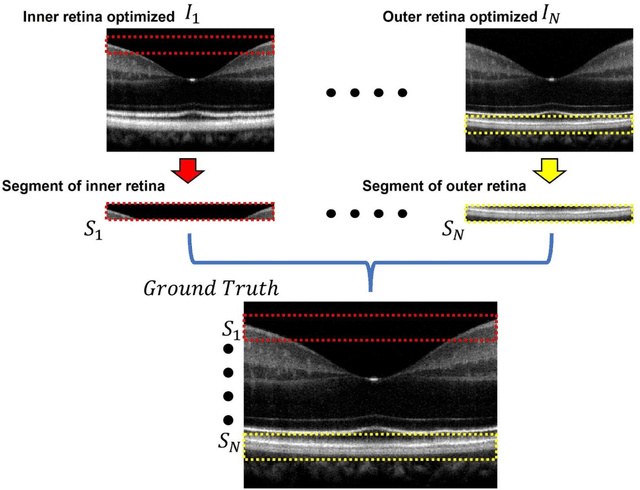

ADC-Net: An Open-Source Deep Learning Network for Automated Dispersion Compensation in Optical Coherence Tomography

Jan 29, 2022

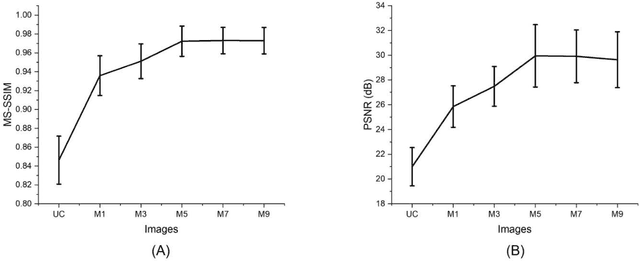

Abstract: Chromatic dispersion is a common problem that degrades the system resolution in optical coherence tomography (OCT). This study aims to develop a deep learning network for automated dispersion compensation (ADC-Net) in OCT. ADC-Net is based on a redesigned UNet architecture that employs an encoder-decoder pipeline. The input consists of partially compensated OCT B-scans, each optimized for individual retinal layers. The corresponding output is a fully compensated OCT B-scan with all retinal layers optimized. Two numerical metrics, i.e., peak signal-to-noise ratio (PSNR) and the structural similarity index computed at multiple scales (MS-SSIM), were used for objective assessment of ADC-Net performance. A comparative analysis of training models with single, three, five, seven, and nine input channels was performed. The five-input-channel implementation was found to be the optimal mode for ADC-Net training, achieving robust dispersion compensation in OCT.
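PSNR, one of the two evaluation metrics, follows the standard definition PSNR = 10·log10(MAX² / MSE). A minimal NumPy sketch, assuming images scaled to [0, 1] so that MAX = 1; MS-SSIM is more involved and is omitted. The hypothetical `stack_inputs` helper shows one way several partially compensated B-scans could be stacked as input channels; it is not taken from the ADC-Net code.

```python
import numpy as np


def psnr(reference, test, max_val=1.0):
    """Peak signal-to-noise ratio in dB."""
    ref = np.asarray(reference, dtype=float)
    tst = np.asarray(test, dtype=float)
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10 * np.log10(max_val ** 2 / mse))


def stack_inputs(bscans):
    """Hypothetical helper: stack C partially compensated B-scans (each H x W) into C x H x W."""
    return np.stack([np.asarray(b, dtype=float) for b in bscans], axis=0)


print(psnr(np.zeros((2, 2)), np.full((2, 2), 0.1)))  # approximately 20.0 (MSE = 0.01)
print(stack_inputs([np.zeros((3, 4))] * 5).shape)    # (5, 3, 4)
```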
