Charlotte Laclau

The quest for the GRAph Level autoEncoder (GRALE)

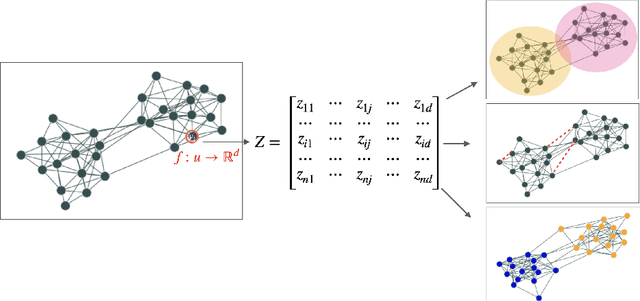

May 28, 2025

Abstract: Although graph-based learning has attracted a lot of attention, graph representation learning is still a challenging task whose resolution may impact key application fields such as chemistry or biology. To this end, we introduce GRALE, a novel graph autoencoder that encodes and decodes graphs of varying sizes into a shared embedding space. GRALE is trained using an Optimal Transport-inspired loss that compares the original and reconstructed graphs and leverages a differentiable node matching module, which is trained jointly with the encoder and decoder. The proposed attention-based architecture relies on Evoformer, the core component of AlphaFold, which we extend to support both graph encoding and decoding. We show, in numerical experiments on simulated and molecular data, that GRALE enables a highly general form of pre-training, applicable to a wide range of downstream tasks, from classification and regression to more complex tasks such as graph interpolation, editing, matching, and prediction.

Modeling Musical Genre Trajectories through Pathlet Learning

May 06, 2025

Abstract: The increasing availability of user data on music streaming platforms opens up new possibilities for analyzing music consumption. However, understanding the evolution of user preferences remains a complex challenge, particularly as their musical tastes change over time. This paper uses the dictionary learning paradigm to model user trajectories across different musical genres. We define a new framework that captures recurring patterns in genre trajectories, called pathlets, enabling the creation of comprehensible trajectory embeddings. We show that pathlet learning reveals relevant listening patterns that can be analyzed both qualitatively and quantitatively. This work improves our understanding of users' interactions with music and opens up avenues of research into user behavior and fostering diversity in recommender systems. A dataset of 2000 user histories tagged by genre over 17 months, supplied by Deezer (a leading music streaming company), is also released with the code.

Fair Text Classification via Transferable Representations

Mar 10, 2025

Abstract: Group fairness is a central research topic in text classification, where reaching fair treatment between sensitive groups (e.g., women and men) remains an open challenge. We propose an approach that extends the use of the Wasserstein Dependency Measure for learning unbiased neural text classifiers. Given the challenge of distinguishing fair from unfair information in a text encoder, we draw inspiration from adversarial training by inducing independence between representations learned for the target label and those for a sensitive attribute. We further show that Domain Adaptation can be efficiently leveraged to remove the need for access to the sensitive attributes in the dataset we cure. We provide both theoretical and empirical evidence that our approach is well-founded.

Histoires Morales: A French Dataset for Assessing Moral Alignment

Jan 28, 2025

Abstract: Aligning language models with human values is crucial, especially as they become more integrated into everyday life. While models are often adapted to user preferences, it is equally important to ensure they align with moral norms and behaviours in real-world social situations. Despite significant progress in languages like English and Chinese, French has seen little attention in this area, leaving a gap in understanding how LLMs handle moral reasoning in this language. To address this gap, we introduce Histoires Morales, a French dataset derived from Moral Stories, created through translation and subsequently refined with the assistance of native speakers to guarantee grammatical accuracy and adaptation to the French cultural context. We also rely on annotations of the moral values within the dataset to ensure their alignment with French norms. Histoires Morales covers a wide range of social situations, including differences in tipping practices, expressions of honesty in relationships, and responsibilities toward animals. To foster future research, we also conduct preliminary experiments on the alignment of multilingual models on French and English data and the robustness of the alignment. We find that while LLMs are generally aligned with human moral norms by default, they can be easily influenced with user-preference optimization for both moral and immoral data.

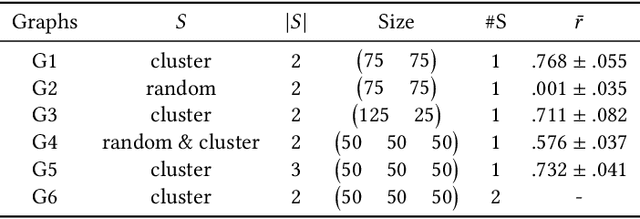

End-to-end Supervised Prediction of Arbitrary-size Graphs with Partially-Masked Fused Gromov-Wasserstein Matching

Feb 23, 2024

Abstract: We present a novel end-to-end deep learning-based approach for Supervised Graph Prediction (SGP). We introduce an original Optimal Transport (OT)-based loss, the Partially-Masked Fused Gromov-Wasserstein loss (PM-FGW), which makes it possible to directly leverage graph representations such as adjacency and feature matrices. PM-FGW exhibits all the desirable properties for SGP: it is node permutation invariant, sub-differentiable, and handles graphs of different sizes by comparing their padded representations as well as their masking vectors. Moreover, we present a flexible transformer-based architecture that easily adapts to different types of input data. In the experimental section, three different tasks (a novel and challenging synthetic dataset, image2graph, and two real-world tasks, image2map and fingerprint2molecule) showcase the efficiency and versatility of the approach compared to competitors.

An investigation of structures responsible for gender bias in BERT and DistilBERT

Jan 12, 2024

Abstract: In recent years, large Transformer-based Pre-trained Language Models (PLM) have changed the Natural Language Processing (NLP) landscape by pushing the performance boundaries of the state-of-the-art on a wide variety of tasks. However, this performance gain goes along with an increase in complexity, and as a result, the size of such models (up to billions of parameters) represents a constraint for their deployment on embedded devices or short-inference time tasks. To cope with this situation, compressed models emerged (e.g. DistilBERT), democratizing their usage in a growing number of applications that impact our daily lives. A crucial issue is the fairness of the predictions made by both PLMs and their distilled counterparts. In this paper, we propose an empirical exploration of this problem by formalizing two questions: (1) Can we identify the neural mechanism(s) responsible for gender bias in BERT (and by extension DistilBERT)? (2) Does distillation tend to accentuate or mitigate gender bias (e.g. is DistilBERT more prone to gender bias than its uncompressed version, BERT)? Our findings are the following: (I) one cannot identify a specific layer that produces bias; (II) every attention head uniformly encodes bias, except in the context of underrepresented classes with a high imbalance of the sensitive attribute; (III) this subset of heads is different as we re-fine-tune the network; (IV) bias is more homogeneously produced by the heads in the distilled model.

Fair Text Classification with Wasserstein Independence

Nov 21, 2023

Abstract: Group fairness is a central research topic in text classification, where reaching fair treatment between sensitive groups (e.g. women vs. men) remains an open challenge. This paper presents a novel method for mitigating biases in neural text classification, agnostic to the model architecture. Considering the difficulty of distinguishing fair from unfair information in a text encoder, we take inspiration from adversarial training to induce Wasserstein independence between representations learned to predict our target label and the ones learned to predict some sensitive attribute. Our approach provides two significant advantages. First, it does not require annotations of sensitive attributes in both testing and training data. This is more suitable for real-life scenarios compared to existing methods that require annotations of sensitive attributes at train time. Second, our approach exhibits a comparable or better fairness-accuracy trade-off compared to existing methods.

Understanding deep neural networks through the lens of their non-linearity

Oct 17, 2023

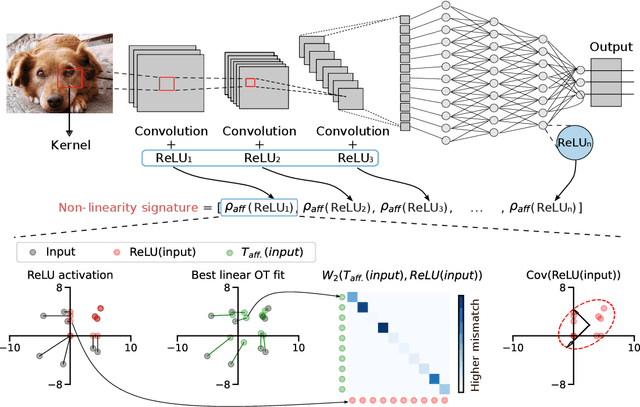

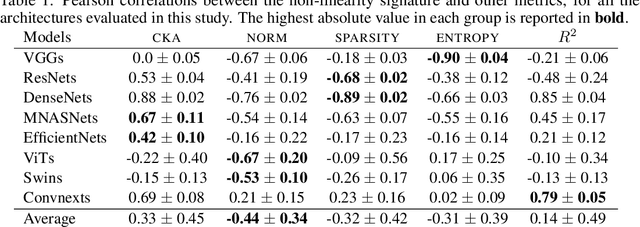

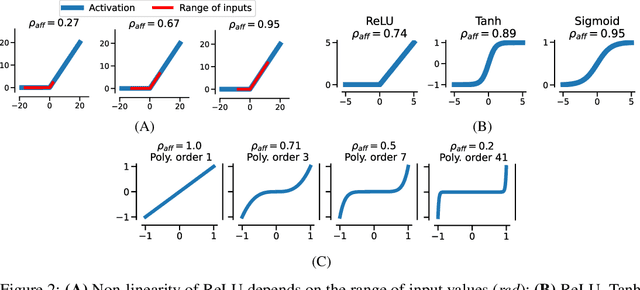

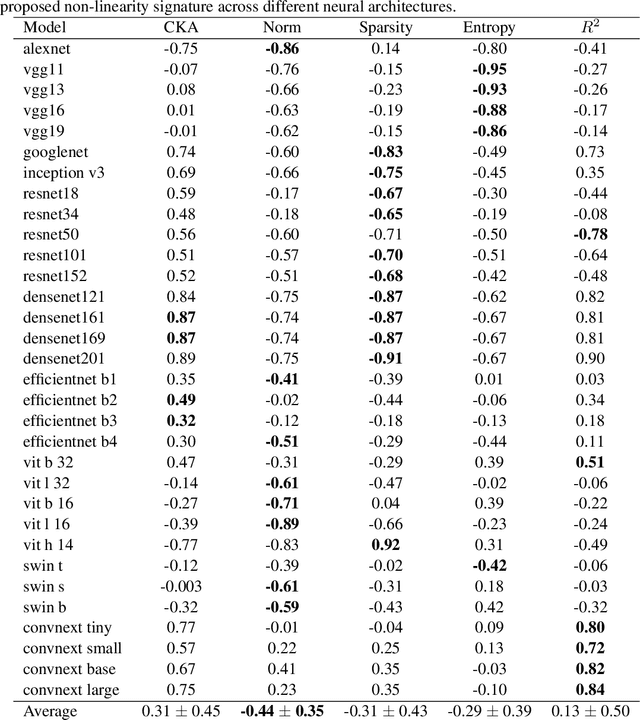

Abstract: The remarkable success of deep neural networks (DNN) is often attributed to their high expressive power and their ability to approximate functions of arbitrary complexity. Indeed, DNNs are highly non-linear models, and activation functions introduced into them are largely responsible for this. While many works studied the expressive power of DNNs through the lens of their approximation capabilities, quantifying the non-linearity of DNNs or of individual activation functions remains an open problem. In this paper, we propose the first theoretically sound solution to track non-linearity propagation in deep neural networks with a specific focus on computer vision applications. Our proposed affinity score allows us to gain insights into the inner workings of a wide range of different architectures and learning paradigms. We provide extensive experimental results that highlight the practical utility of the proposed affinity score and its potential for far-reaching applications.

Mixture of segmentation for heterogeneous functional data

Mar 19, 2023

Abstract: In this paper, we consider functional data with heterogeneity in time and in population. We propose a mixture model with segmentation of time to represent this heterogeneity while keeping the functional structure. The maximum likelihood estimator is considered and proved to be identifiable and consistent. In practice, an EM algorithm is used, combined with dynamic programming for the maximization step, to approximate the maximum likelihood estimator. The method is illustrated on a simulated dataset and applied to a real dataset of electricity consumption.
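As a rough illustration of how dynamic programming can find an optimal segmentation of time, the sketch below splits a one-dimensional sequence into `k` contiguous segments that minimize the within-segment sum of squared errors. This is a generic least-squares, piecewise-constant segmentation chosen for illustration; it is not the mixture model or the estimator from the paper, and the function names `make_segment_cost` and `best_segmentation` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def make_segment_cost(y: Sequence[float]) -> Callable[[int, int], float]:
    """Return cost(i, j): squared error of y[i:j] around its own mean."""
    n = len(y)
    s1 = [0.0] * (n + 1)  # prefix sums of values
    s2 = [0.0] * (n + 1)  # prefix sums of squared values
    for idx, v in enumerate(y):
        s1[idx + 1] = s1[idx] + v
        s2[idx + 1] = s2[idx] + v * v

    def cost(i: int, j: int) -> float:
        total = s1[j] - s1[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    return cost


def best_segmentation(
    y: Sequence[float], k: int
) -> Tuple[float, List[Tuple[int, int]]]:
    """Split y into k contiguous segments with minimal total squared error.

    Returns the minimal cost and the segments as half-open (start, end) pairs.
    """
    n = len(y)
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= len(y)")
    cost = make_segment_cost(y)
    inf = float("inf")
    # best[s][j]: minimal cost of splitting y[:j] into s segments.
    best = [[inf] * (n + 1) for _ in range(k + 1)]
    # back[s][j]: start index of the last segment in that optimal split.
    back = [[0] * (n + 1) for _ in range(k + 1)]
    best[0][0] = 0.0
    for s in range(1, k + 1):
        for j in range(s, n + 1):
            for i in range(s - 1, j):
                candidate = best[s - 1][i] + cost(i, j)
                if candidate < best[s][j]:
                    best[s][j] = candidate
                    back[s][j] = i
    # Walk the back-pointers from the end to recover the segments.
    segments: List[Tuple[int, int]] = []
    j = n
    for s in range(k, 0, -1):
        i = back[s][j]
        segments.append((i, j))
        j = i
    segments.reverse()
    return best[k][n], segments


if __name__ == "__main__":
    total_cost, segments = best_segmentation([1, 1, 1, 5, 5, 5, 9, 9], 3)
    print(total_cost, segments)
```

The triple loop costs O(k n²) time. In an EM setting, a step of this kind would presumably be run on responsibility-weighted costs rather than plain squared errors, but the abstract does not give those details.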

A Survey on Fairness for Machine Learning on Graphs

May 11, 2022

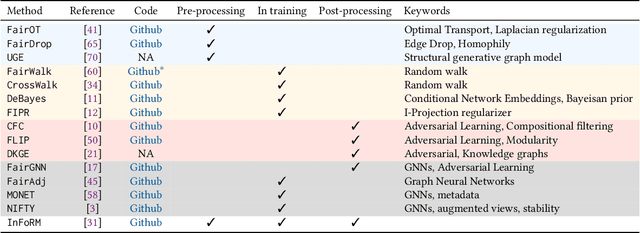

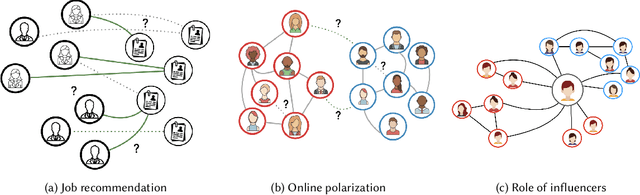

Abstract: Nowadays, the analysis of complex phenomena modeled by graphs plays a crucial role in many real-world application domains where decisions can have a strong societal impact. However, numerous studies and papers have recently revealed that machine learning models could lead to potential disparate treatment between individuals and unfair outcomes. In that context, algorithmic contributions for graph mining are not spared by the problem of fairness and present some specific challenges related to the intrinsic nature of graphs: (1) graph data is non-IID, which may invalidate many existing studies in fair machine learning, (2) suitable metric definitions are needed to assess the different types of fairness with relational data, and (3) it is algorithmically difficult to find a good trade-off between model accuracy and fairness. This survey is the first one dedicated to fairness for relational data. It aims to present a comprehensive review of state-of-the-art techniques in fairness on graph mining and identify the open challenges and future trends. In particular, we start by presenting several sensitive application domains and the associated graph mining tasks, with a focus on edge prediction and node classification in the sequel. We also recall the different metrics proposed to evaluate potential bias at different levels of the graph mining process; then we provide a comprehensive overview of recent contributions in the domain of fair machine learning for graphs, which we classify into pre-processing, in-processing and post-processing models. We also propose to describe existing graph data, synthetic and real-world benchmarks. Finally, we present in detail five potential promising directions to advance research in studying algorithmic fairness on graphs.
