Oliver Struckmeier

Understanding deep neural networks through the lens of their non-linearity

Oct 17, 2023

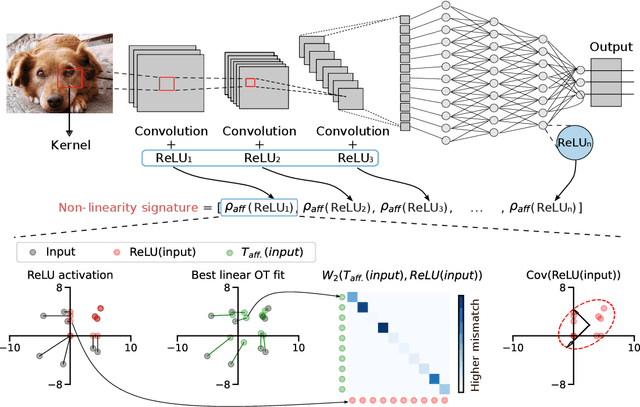

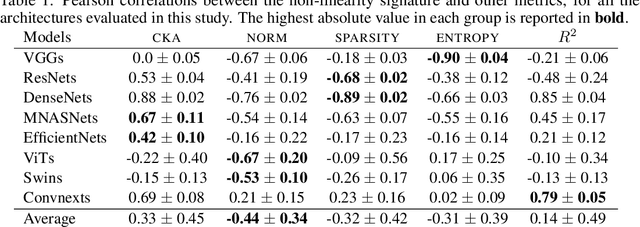

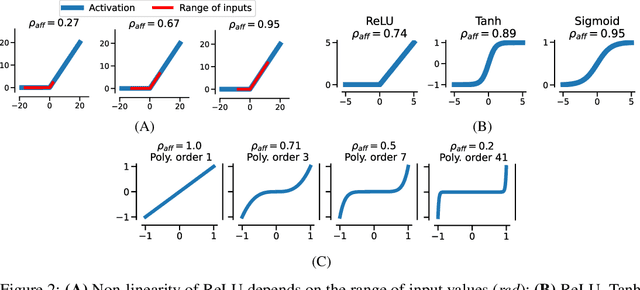

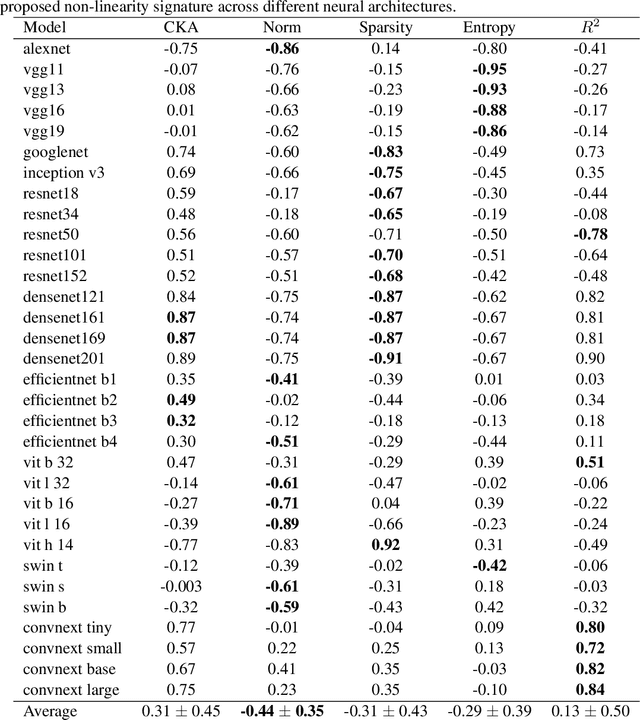

Abstract: The remarkable success of deep neural networks (DNNs) is often attributed to their high expressive power and their ability to approximate functions of arbitrary complexity. Indeed, DNNs are highly non-linear models, and the activation functions introduced into them are largely responsible for this. While many works have studied the expressive power of DNNs through the lens of their approximation capabilities, quantifying the non-linearity of DNNs or of individual activation functions remains an open problem. In this paper, we propose the first theoretically sound solution to track non-linearity propagation in deep neural networks with a specific focus on computer vision applications. Our proposed affinity score allows us to gain insights into the inner workings of a wide range of different architectures and learning paradigms. We provide extensive experimental results that highlight the practical utility of the proposed affinity score and its potential for far-reaching applications.

Beyond invariant representation learning: linearly alignable latent spaces for efficient closed-form domain adaptation

May 12, 2023

Abstract: Optimal transport (OT) is a powerful geometric tool used to compare and align probability measures following the least effort principle. Among many successful applications of OT in machine learning (ML), domain adaptation (DA) -- a field of study where the goal is to transfer a classifier from one labelled domain to another similar, yet different unlabelled or scarcely labelled domain -- has historically been among the most investigated ones. This success is due to the ability of OT to provide both a meaningful discrepancy measure to assess the similarity of two domains' distributions and a mapping that can project source domain data onto the target one. In this paper, we propose a fundamentally new OT-based approach to DA that uses the closed-form solution of the OT problem given by an affine mapping and learns an embedding space for which this solution is optimal and computationally less complex. We show that our approach works in both homogeneous and heterogeneous DA settings and outperforms or is on par with other well-known baselines based on both traditional OT and OT in incomparable spaces. Furthermore, we show that our proposed method vastly reduces computational complexity.
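To make the "closed-form solution given by an affine mapping" concrete, here is a minimal sketch of the simplest such case: for two one-dimensional Gaussians under squared Euclidean cost, the optimal transport map is T(x) = m_t + (s_t / s_s)(x - m_s). This is an illustration only, not the paper's method; the function name `fit_affine_ot_1d` and the sample values are hypothetical, and the learned embedding space described in the abstract is not modelled.

```python
import math
import statistics


def fit_affine_ot_1d(source, target):
    """Return (scale, shift) of the affine map T(x) = scale * x + shift.

    Assumes both sample sets are well described by 1D Gaussians, in which
    case the optimal transport map under squared Euclidean cost is
    T(x) = m_t + (s_t / s_s) * (x - m_s).
    """
    m_s, m_t = statistics.fmean(source), statistics.fmean(target)
    s_s, s_t = statistics.pstdev(source), statistics.pstdev(target)
    if s_s == 0.0:
        raise ValueError("source samples must not all be identical")
    scale = s_t / s_s
    shift = m_t - scale * m_s
    return scale, shift


# Hypothetical toy data: the target is the source stretched and shifted.
source = [0.0, 1.0, 2.0, 3.0, 4.0]
target = [10.0, 12.0, 14.0, 16.0, 18.0]

scale, shift = fit_affine_ot_1d(source, target)
mapped = [scale * x + shift for x in source]
```

Because the map is affine, fitting it only requires first and second moments, which is where the computational saving over solving a full OT problem comes from in this simplified setting.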

Domain Curiosity: Learning Efficient Data Collection Strategies for Domain Adaptation

Mar 12, 2021

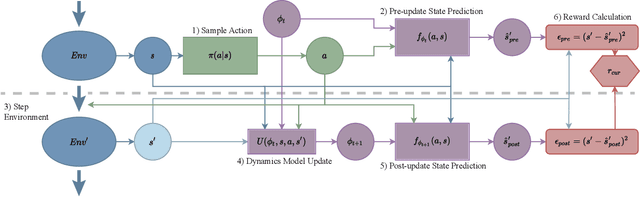

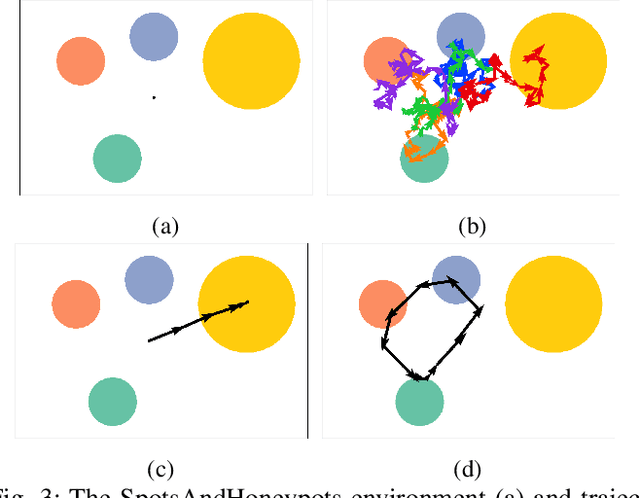

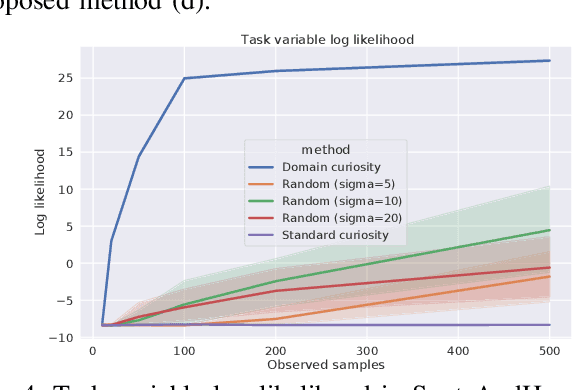

Abstract: Domain adaptation is a common problem in robotics, with applications such as transferring policies from simulation to the real world and lifelong learning. Performing such adaptation, however, requires informative data about the environment to be available during the adaptation. In this paper, we present domain curiosity -- a method of training exploratory policies that are explicitly optimized to provide data that allows a model to learn about the unknown aspects of the environment. In contrast to most curiosity methods, our approach explicitly rewards learning, which makes it robust to environment noise without sacrificing its ability to learn. We evaluate the proposed method by comparing how much a model can learn about environment dynamics given data collected by the proposed approach, compared to standard curious and random policies. The evaluation is performed using a toy environment, two simulated robot setups, and a real-world haptic exploration task. The results show that the proposed method allows data-efficient and accurate estimation of dynamics.

Unsupervised Learning of slow features for Data Efficient Regression

Dec 11, 2020

Abstract: Research in computational neuroscience suggests that the human brain's unparalleled data efficiency is a result of highly efficient mechanisms to extract and organize slowly changing high-level features from continuous sensory inputs. In this paper, we apply this slowness principle to a state-of-the-art representation learning method with the goal of performing data-efficient learning of downstream regression tasks. To this end, we propose the slow variational autoencoder (S-VAE), an extension to the $\beta$-VAE which applies a temporal similarity constraint to the latent representations. We empirically compare our method to the $\beta$-VAE and the Temporal Difference VAE (TD-VAE), a state-of-the-art method for next frame prediction in latent space with temporal abstraction. We evaluate the three methods on their data efficiency on downstream tasks using a synthetic 2D ball tracking dataset, a dataset from a reinforcement learning environment and a dataset generated using the DeepMind Lab environment. In all tasks, the proposed method outperformed the baselines with both densely and, especially, sparsely labeled data. The S-VAE achieved similar or better performance compared to the baselines with $20\%$ to $93\%$ less data.

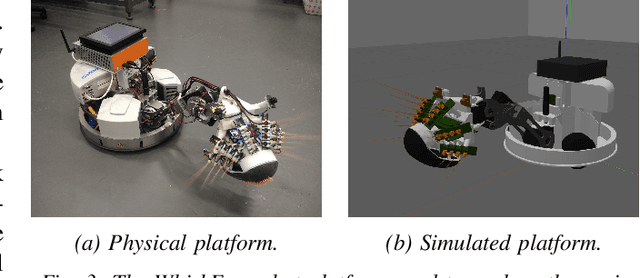

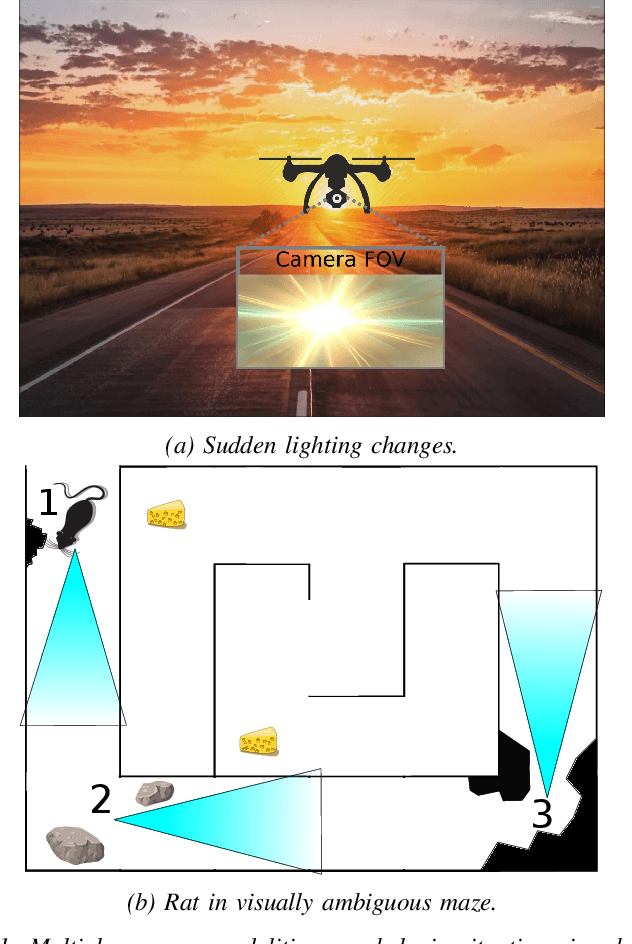

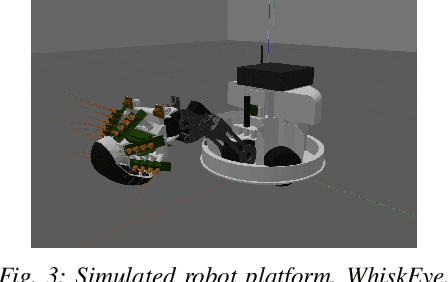

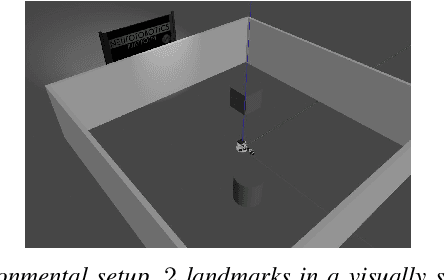

MuPNet: Multi-modal Predictive Coding Network for Place Recognition by Unsupervised Learning of Joint Visuo-Tactile Latent Representations

Sep 16, 2019

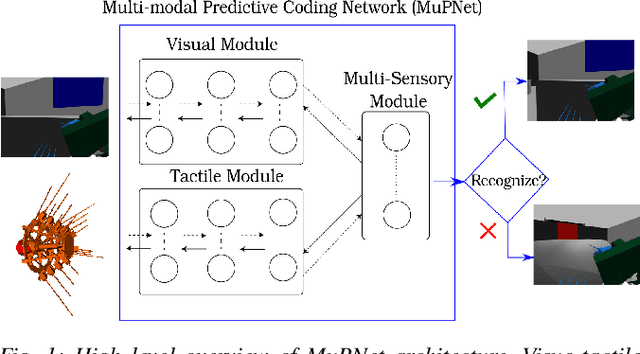

Abstract: Extracting and binding salient information from different sensory modalities to determine common features in the environment is a significant challenge in robotics. Here we present MuPNet (Multi-modal Predictive Coding Network), a biologically plausible network architecture for extracting joint latent features from visuo-tactile sensory data gathered from a biomimetic mobile robot. In this study, we evaluate MuPNet applied to place recognition as a simulated biomimetic robot platform explores visually aliased environments. The F1 scores demonstrate that its performance is equivalent to that of prior hand-crafted sensory feature extraction techniques under controlled conditions, with significant improvement when operating in novel environments.

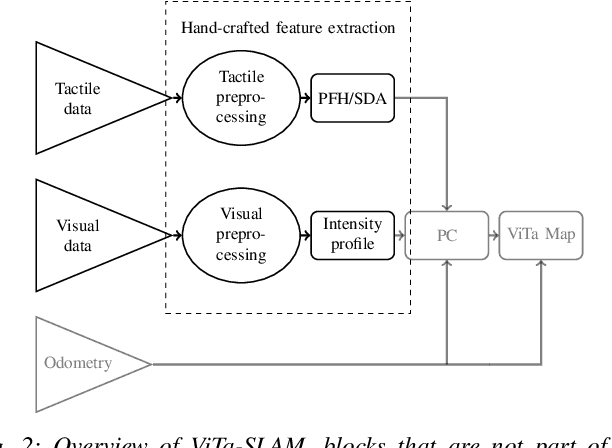

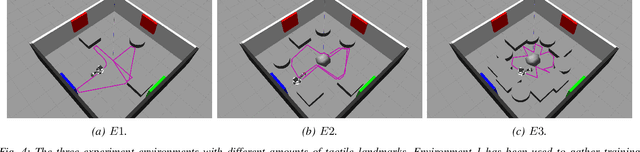

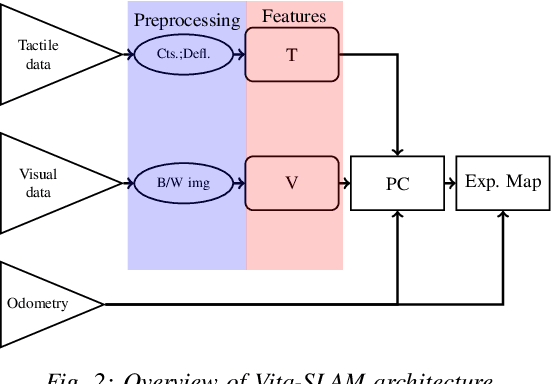

ViTa-SLAM: A Bio-inspired Visuo-Tactile SLAM for Navigation while Interacting with Aliased Environments

Jun 26, 2019

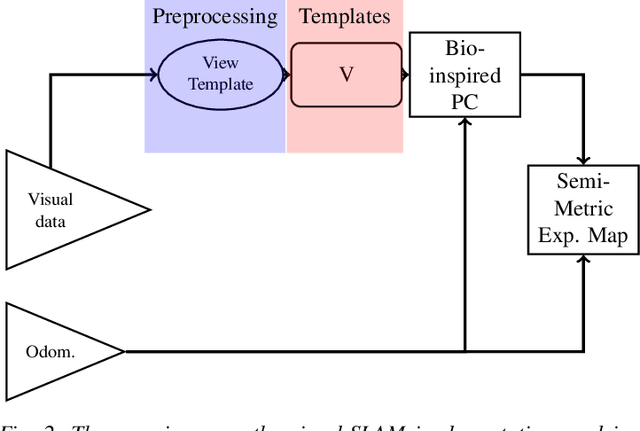

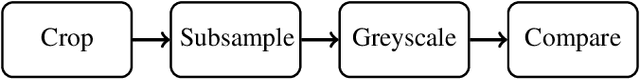

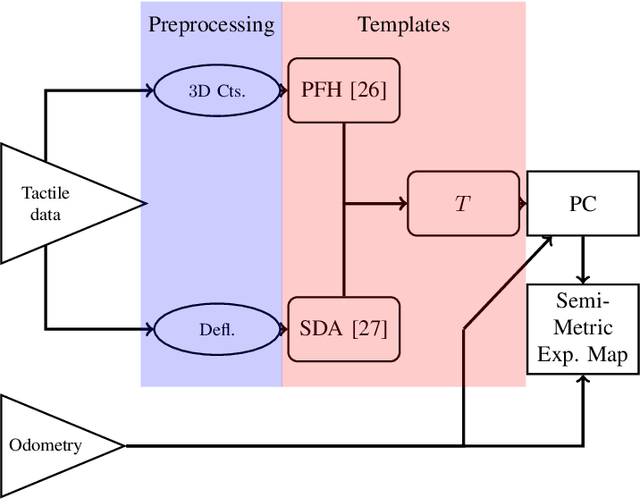

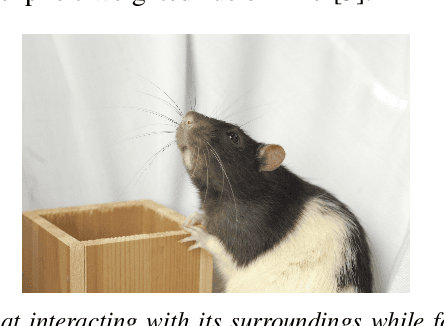

Abstract: RatSLAM is a rat hippocampus-inspired visual Simultaneous Localization and Mapping (SLAM) framework capable of generating semi-metric topological representations of indoor and outdoor environments. Whisker-RatSLAM is a 6D extension of RatSLAM and primarily focuses on object recognition by generating point clouds of objects based on whisking information. This paper introduces a novel extension to both prior works, referred to as ViTa-SLAM, that harnesses both vision and tactile information to perform SLAM. This not only allows the robot to interact naturally with the environment whilst navigating, as is normally seen in nature, but also provides a mechanism to fuse non-unique tactile and unique visual data. Compared to the former works, our approach can handle ambiguous scenes in which one sensor alone is not capable of identifying false-positive loop-closures.

LeagueAI: Improving object detector performance and flexibility through automatically generated training data and domain randomization

May 28, 2019

Abstract: In this technical report I present my method for automatic synthetic dataset generation for object detection and demonstrate it on the video game League of Legends. This report furthermore serves as a handbook on how to automatically generate datasets and as an introduction to the dataset generation part of the LeagueAI framework. The LeagueAI framework is a software framework that provides detailed information about the game League of Legends based on the same input a human player would have, namely vision. The framework allows researchers and enthusiasts to develop their own intelligent agents or to extract detailed information about the state of the game. A major problem in machine vision applications is usually the laborious work of gathering large amounts of hand-labeled data. Thus, a crucial part of the vision pipeline of the LeagueAI framework, the dataset generation, is presented in this report. The method involves extracting raw image data from the game's 3D models and combining them with the game background to create game-like synthetic images and to generate the corresponding labels automatically. In an experiment I compared a model trained on synthetic data to a model trained on hand-labeled data and a model trained on a combined dataset. The model trained on the synthetic data showed higher detection precision on more classes and more reliable tracking performance of the player character. The model trained on the combined dataset did not perform better because of the different formats of the older hand-labeled dataset and the synthetic data.
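The compositing step described in the abstract (placing an object cutout on a background and deriving its label automatically) can be sketched roughly as follows. This is a toy illustration, not the LeagueAI implementation: images are plain nested lists of pixel values, the names `paste_sprite` and `make_sample` are hypothetical, and the normalized center-format label (class, x_center, y_center, width, height) is an assumed convention rather than one stated in the report.

```python
import random


def paste_sprite(background, sprite, mask, top, left):
    """Return a copy of background with sprite pixels copied in where mask is 1."""
    image = [row[:] for row in background]
    for r, sprite_row in enumerate(sprite):
        for c, pixel in enumerate(sprite_row):
            if mask[r][c]:
                image[top + r][left + c] = pixel
    return image


def make_sample(background, sprite, mask, class_id, rng):
    """Place the sprite at a random position and derive its bounding-box label."""
    bg_h, bg_w = len(background), len(background[0])
    sp_h, sp_w = len(sprite), len(sprite[0])
    # randint is inclusive on both ends, so the sprite always fits.
    top = rng.randint(0, bg_h - sp_h)
    left = rng.randint(0, bg_w - sp_w)
    image = paste_sprite(background, sprite, mask, top, left)
    # Assumed label format: class id plus box center and size, normalized to [0, 1].
    label = (
        class_id,
        (left + sp_w / 2) / bg_w,
        (top + sp_h / 2) / bg_h,
        sp_w / bg_w,
        sp_h / bg_h,
    )
    return image, label


# Hypothetical toy data: an 8x8 background of zeros and a 2x3 sprite of nines
# whose mask leaves one corner pixel transparent.
background = [[0] * 8 for _ in range(8)]
sprite = [[9, 9, 9], [9, 9, 9]]
mask = [[1, 1, 1], [1, 1, 0]]

rng = random.Random(0)
image, label = make_sample(background, sprite, mask, class_id=3, rng=rng)
```

Because the placement is chosen by the generator, the label is known exactly and no hand annotation is needed, which is the point the abstract makes about avoiding manual labeling.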

ViTa-SLAM: Biologically-Inspired Visuo-Tactile SLAM

May 14, 2019

Abstract: In this work, we propose a novel, bio-inspired multi-sensory SLAM approach called ViTa-SLAM. Compared to other multi-sensory SLAM variants, this approach allows for seamless multi-sensory information fusion whilst naturally interacting with the environment. The algorithm is empirically evaluated in a simulated setting using a biomimetic robot platform called the WhiskEye. Our results show promising performance enhancements over existing bio-inspired SLAM approaches in terms of loop-closure detection.
