Arkady Zgonnikov

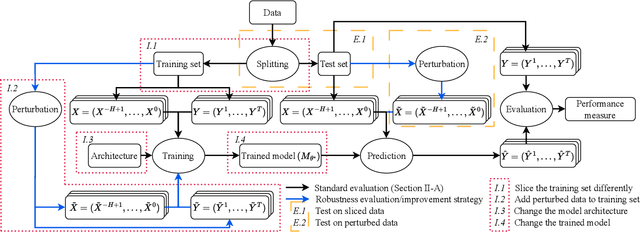

STEP: Structured Training and Evaluation Platform for benchmarking trajectory prediction models

Sep 18, 2025

Abstract:While trajectory prediction plays a critical role in enabling safe and effective path-planning in automated vehicles, standardized practices for evaluating such models remain underdeveloped. Recent efforts have aimed to unify dataset formats and model interfaces for easier comparisons, yet existing frameworks often fall short in supporting heterogeneous traffic scenarios, joint prediction models, or user documentation. In this work, we introduce STEP, a new benchmarking framework that addresses these limitations by providing a unified interface for multiple datasets, enforcing consistent training and evaluation conditions, and supporting a wide range of prediction models. We demonstrate the capabilities of STEP in a number of experiments which reveal 1) the limitations of widely-used testing procedures, 2) the importance of joint modeling of agents for better predictions of interactions, and 3) the vulnerability of current state-of-the-art models against both distribution shifts and targeted attacks by adversarial agents. With STEP, we aim to shift the focus from the "leaderboard" approach to deeper insights about model behavior and generalization in complex multi-agent settings.

Towards Human-Centric Evaluation of Interaction-Aware Automated Vehicle Controllers: A Framework and Case Study

Aug 07, 2025

Abstract:As automated vehicles (AVs) increasingly integrate into mixed-traffic environments, evaluating their interaction with human-driven vehicles (HDVs) becomes critical. In most research focused on developing new AV control algorithms (controllers), the performance of these algorithms is assessed solely based on performance metrics such as collision avoidance or lane-keeping efficiency, while largely overlooking the human-centred dimensions of interaction with HDVs. This paper proposes a structured evaluation framework that addresses this gap by incorporating metrics grounded in the human-robot interaction literature. The framework spans four key domains: a) interaction effect, b) interaction perception, c) interaction effort, and d) interaction ability. These domains capture both the performance of the AV and its impact on human drivers around it. To demonstrate the utility of the framework, we apply it to a case study evaluating how a state-of-the-art AV controller interacts with human drivers in a merging scenario in a driving simulator. Measuring HDV-HDV interactions as a baseline, this study included one representative metric per domain: a) perceived safety, b) subjective ratings, specifically how participants perceived the other vehicle's driving behaviour (e.g., aggressiveness or predictability), c) driver workload, and d) merging success. The results showed that incorporating metrics covering all four domains in the evaluation of AV controllers can illuminate critical differences in driver experience when interacting with AVs. This highlights the need for a more comprehensive evaluation approach. Our framework offers researchers, developers, and policymakers a systematic method for assessing AV behaviour beyond technical performance, fostering the development of AVs that are not only functionally capable but also understandable, acceptable, and safe from a human perspective.

Feasible Action Space Reduction for Quantifying Causal Responsibility in Continuous Spatial Interactions

May 23, 2025

Abstract:Understanding the causal influence of one agent on another agent is crucial for safely deploying artificially intelligent systems such as automated vehicles and mobile robots into human-inhabited environments. Existing models of causal responsibility deal with simplified abstractions of scenarios with discrete actions, thus limiting real-world use when understanding responsibility in spatial interactions. Based on the assumption that spatially interacting agents are embedded in a scene and must follow an action at each instant, Feasible Action-Space Reduction (FeAR) was proposed as a metric for causal responsibility in a grid-world setting with discrete actions. Since real-world interactions involve continuous action spaces, this paper proposes a formulation of the FeAR metric for measuring causal responsibility in space-continuous interactions. We illustrate the utility of the metric in prototypical space-sharing conflicts, and showcase its applications for analysing backward-looking responsibility and in estimating forward-looking responsibility to guide agent decision making. Our results highlight the potential of the FeAR metric for designing and engineering artificial agents, as well as for assessing the responsibility of agents around humans.

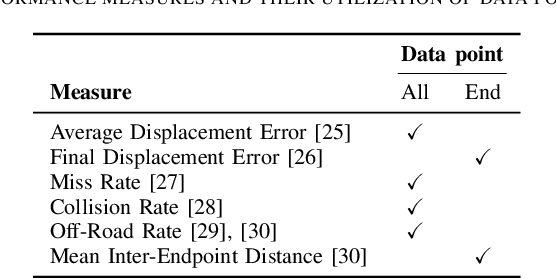

Realistic Adversarial Attacks for Robustness Evaluation of Trajectory Prediction Models via Future State Perturbation

May 09, 2025

Abstract:Trajectory prediction is a key element of autonomous vehicle systems, enabling them to anticipate and react to the movements of other road users. Evaluating the robustness of prediction models against adversarial attacks is essential to ensure their reliability in real-world traffic. However, current approaches tend to focus on perturbing the past positions of surrounding agents, which can generate unrealistic scenarios and overlook critical vulnerabilities. This limitation may result in overly optimistic assessments of model performance in real-world conditions. In this work, we demonstrate that perturbing not just past but also future states of adversarial agents can uncover previously undetected weaknesses and thereby provide a more rigorous evaluation of model robustness. Our novel approach incorporates dynamic constraints and preserves tactical behaviors, enabling more effective and realistic adversarial attacks. We introduce new performance measures to assess the realism and impact of these adversarial trajectories. Testing our method on a state-of-the-art prediction model revealed significant increases in prediction errors and collision rates under adversarial conditions. Qualitative analysis further showed that our attacks can expose critical weaknesses, such as the inability of the model to detect potential collisions in what appear to be safe predictions. These results underscore the need for more comprehensive adversarial testing to better evaluate and improve the reliability of trajectory prediction models for autonomous vehicles.

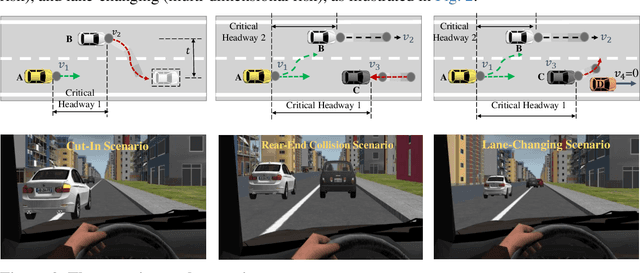

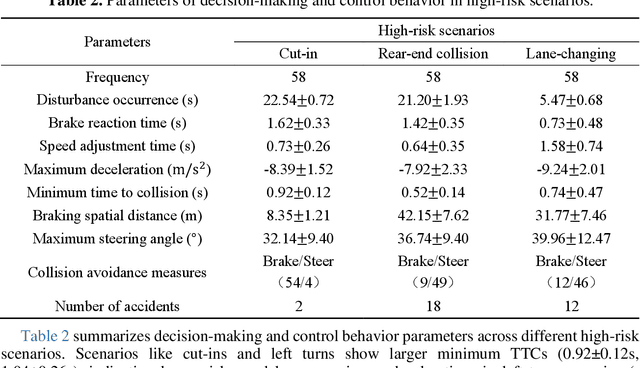

Understanding Driver Cognition and Decision-Making Behaviors in High-Risk Scenarios: A Drift Diffusion Perspective

Mar 16, 2025

Abstract:Ensuring safe interactions between autonomous vehicles (AVs) and human drivers in mixed traffic systems remains a major challenge, particularly in complex, high-risk scenarios. This paper presents a cognition-decision framework that integrates individual variability and commonalities in driver behavior to quantify risk cognition and model dynamic decision-making. First, a risk sensitivity model based on a multivariate Gaussian distribution is developed to characterize individual differences in risk cognition. Then, a cognitive decision-making model based on the drift diffusion model (DDM) is introduced to capture common decision-making mechanisms in high-risk environments. The DDM dynamically adjusts decision thresholds by integrating initial bias, drift rate, and boundary parameters, adapting to variations in speed, relative distance, and risk sensitivity to reflect diverse driving styles and risk preferences. By simulating high-risk scenarios with lateral, longitudinal, and multidimensional risk sources in a driving simulator, the proposed model accurately predicts cognitive responses and decision behaviors during emergency maneuvers. Specifically, by incorporating driver-specific risk sensitivity, the model enables dynamic adjustments of key DDM parameters, allowing for personalized decision-making representations in diverse scenarios. Comparative analysis with IDM, Gipps, and MOBIL demonstrates that DDM more precisely captures human cognitive processes and adaptive decision-making in high-risk scenarios. These findings provide a theoretical basis for modeling human driving behavior and offer critical insights for enhancing AV-human interaction in real-world traffic environments.

Hybrid Human-Machine Perception via Adaptive LiDAR for Advanced Driver Assistance Systems

Feb 24, 2025

Abstract:Accurate environmental perception is critical for advanced driver assistance systems (ADAS). Light detection and ranging (LiDAR) systems play a crucial role in ADAS; they can reliably detect obstacles and help ensure traffic safety. Existing research on LiDAR sensing has demonstrated that adapting the LiDAR's resolution and range based on environmental characteristics can improve machine perception. However, current adaptive LiDAR approaches for ADAS have not explored the possibility of combining the perception abilities of the vehicle and the human driver, which can potentially further enhance the detection performance. In this paper, we propose a novel system that adapts LiDAR characteristics to the human driver's visual perception to enhance LiDAR sensing outside the driver's field of view. We develop a proof-of-concept prototype of the system in the virtual environment CARLA. Our system integrates real-time data on the driver's gaze to identify regions in the environment that the driver is monitoring. This allows the system to optimize LiDAR resources by dynamically increasing the LiDAR's range and resolution in peripheral areas that the driver may not be attending to. Our simulations show that this gaze-aware LiDAR enhances detection performance compared to a baseline standalone LiDAR, particularly in challenging environmental conditions like fog. Our hybrid human-machine sensing approach potentially offers improved safety and situational awareness in real-time driving scenarios for ADAS applications.

Safe Spot: Perceived safety of dominant and submissive appearances of quadruped robots in human-robot interactions

Mar 08, 2024

Abstract:Unprecedented possibilities of quadruped robots have driven much research on the technical aspects of these robots. However, the social perception and acceptability of quadruped robots so far remain poorly understood. This work investigates whether the way we design quadruped robots' behaviors can affect people's perception of safety in interactions with these robots. We designed and tested a dominant and submissive personality for the quadruped robot (Boston Dynamics Spot). These were tested in two different walking scenarios (head-on and crossing interactions) in a 2x2 within-subjects study. We collected both behavioral data and subjective reports on participants' perception of the interaction. The results highlight that participants perceived the submissive robot as safer compared to the dominant one. The behavioral dynamics of interactions did not change depending on the robot's appearance. Participants' previous in-person experience with the robot was associated with lower subjective safety ratings but did not correlate with the interaction dynamics. Our findings have implications for the design of quadruped robots and contribute to the body of knowledge on the social perception of non-humanoid robots. We call for a stronger standing of felt experiences in human-robot interaction research.

ARMCHAIR: integrated inverse reinforcement learning and model predictive control for human-robot collaboration

Feb 29, 2024

Abstract:One of the key issues in human-robot collaboration is the development of computational models that allow robots to predict and adapt to human behavior. Much progress has been achieved in developing such models, as well as control techniques that address the autonomy problems of motion planning and decision-making in robotics. However, the integration of computational models of human behavior with such control techniques still poses a major challenge, resulting in a bottleneck for efficient collaborative human-robot teams. In this context, we present a novel architecture for human-robot collaboration: Adaptive Robot Motion for Collaboration with Humans using Adversarial Inverse Reinforcement learning (ARMCHAIR). Our solution leverages adversarial inverse reinforcement learning and model predictive control to compute optimal trajectories and decisions for a mobile multi-robot system that collaborates with a human in an exploration task. During the mission, ARMCHAIR operates without human intervention, autonomously identifying the necessity to support and acting accordingly. Our approach also explicitly addresses the network connectivity requirement of the human-robot team. Extensive simulation-based evaluations demonstrate that ARMCHAIR allows a group of robots to safely support a simulated human in an exploration scenario, preventing collisions and network disconnections, and improving the overall performance of the task.

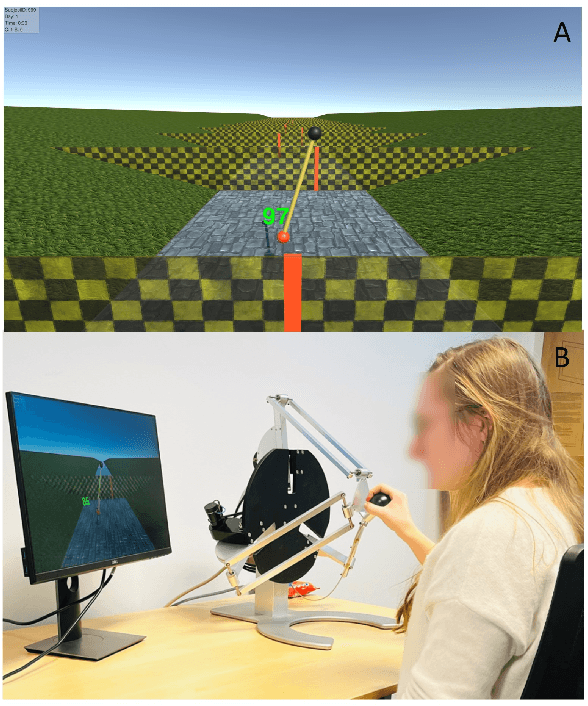

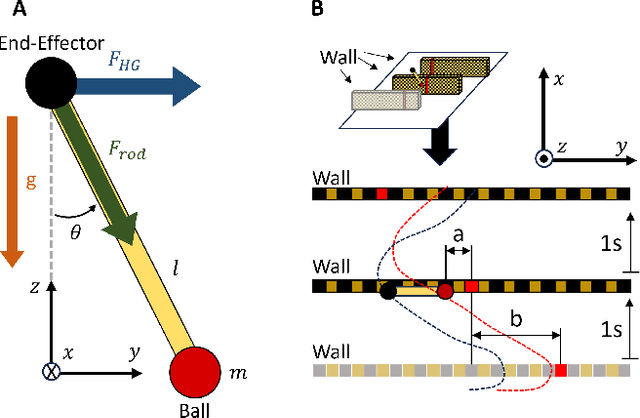

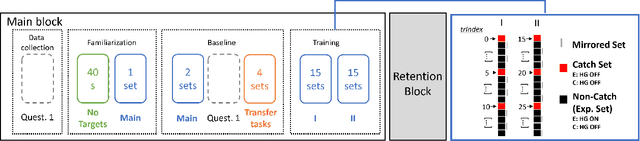

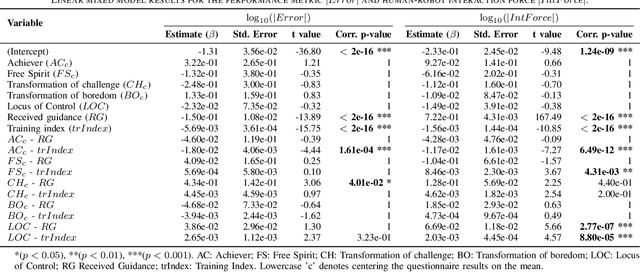

The Effect of Haptic Guidance during Robotic-assisted Motor Training is Modulated by Personality Traits

Feb 09, 2024

Abstract:The provision of robotic assistance during motor training has proven to be effective in enhancing motor learning in some healthy trainee groups as well as patients. Personalizing such robotic assistance can help further improve motor (re)learning outcomes and cater better to the trainee's needs and desires. However, the development of personalized haptic assistance is hindered by the lack of understanding of the link between the trainee's personality and the effects of haptic guidance during human-robot interaction. To address this gap, we ran an experiment with 42 healthy participants who trained with a robotic device to control a virtual pendulum to hit incoming targets either with or without haptic guidance. We found that certain personal traits affected how users adapt and interact with the guidance during training. In particular, those participants with an 'Achiever gaming style' performed better and applied lower interaction forces to the robotic device than the average participant as the training progressed. Conversely, participants with the 'Free spirit game style' increased the interaction force in the course of training. We also found an interaction between some personal characteristics and haptic guidance. Specifically, participants with a higher 'Transformation of challenge' trait exhibited poorer performance during training while receiving haptic guidance compared to an average participant receiving haptic guidance. Furthermore, individuals with an external Locus of Control tended to increase their interaction force with the device, deviating from the pattern observed in an average participant under the same guidance. These findings suggest that individual characteristics may play a crucial role in the effectiveness of haptic guidance training strategies.

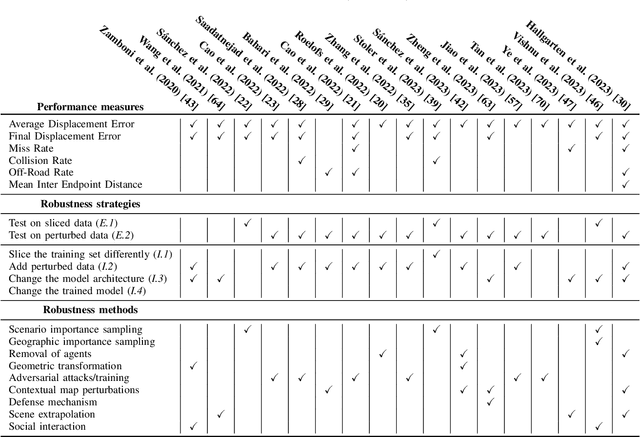

A survey on robustness in trajectory prediction for autonomous vehicles

Feb 08, 2024

Abstract:Autonomous vehicles rely on accurate trajectory prediction to inform decision-making processes related to navigation and collision avoidance. However, current trajectory prediction models show signs of overfitting, which may lead to unsafe or suboptimal behavior. To address these challenges, this paper presents a comprehensive framework that categorizes and assesses the definitions and strategies used in the literature on evaluating and improving the robustness of trajectory prediction models. This involves a detailed exploration of various approaches, including data slicing methods, perturbation techniques, model architecture changes, and post-training adjustments. In the literature, we see many promising methods for increasing robustness, which are necessary for safe and reliable autonomous driving.
