Marcos Ricardo Omena de Albuquerque Maximo

ARMCHAIR: integrated inverse reinforcement learning and model predictive control for human-robot collaboration

Feb 29, 2024

Abstract: One of the key issues in human-robot collaboration is the development of computational models that allow robots to predict and adapt to human behavior. Much progress has been achieved in developing such models, as well as control techniques that address the autonomy problems of motion planning and decision-making in robotics. However, the integration of computational models of human behavior with such control techniques still poses a major challenge, resulting in a bottleneck for efficient collaborative human-robot teams. In this context, we present a novel architecture for human-robot collaboration: Adaptive Robot Motion for Collaboration with Humans using Adversarial Inverse Reinforcement learning (ARMCHAIR). Our solution leverages adversarial inverse reinforcement learning and model predictive control to compute optimal trajectories and decisions for a mobile multi-robot system that collaborates with a human in an exploration task. During the mission, ARMCHAIR operates without human intervention, autonomously identifying the necessity to support and acting accordingly. Our approach also explicitly addresses the network connectivity requirement of the human-robot team. Extensive simulation-based evaluations demonstrate that ARMCHAIR allows a group of robots to safely support a simulated human in an exploration scenario, preventing collisions and network disconnections, and improving the overall performance of the task.

A Mixed-Integer Approach for Motion Planning of Nonholonomic Robots under Visible Light Communication Constraints

Jun 27, 2023

Abstract: This work addresses the problem of motion planning for a group of nonholonomic robots under Visible Light Communication (VLC) connectivity requirements. In particular, we consider an inspection task performed by a Robot Chain Control System (RCCS), where a leader must visit relevant regions of an environment while the remaining robots operate as relays, maintaining the connectivity between the leader and a base station. We leverage Mixed-Integer Linear Programming (MILP) to design a trajectory planner that can coordinate the RCCS, minimizing time and control effort while also handling the issues of directed Line-Of-Sight (LOS), connectivity over directed networks, and the nonlinearity of the robots' dynamics. The efficacy of the proposal is demonstrated with realistic simulations in the Gazebo environment using the Turtlebot3 robot platform.

Real-time motion planning and decision-making for a group of differential drive robots under connectivity constraints using robust MPC and mixed-integer programming

May 31, 2022

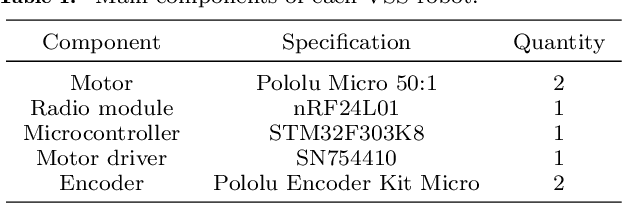

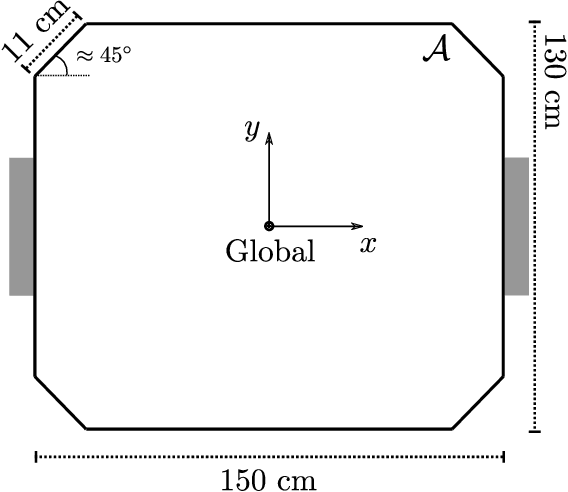

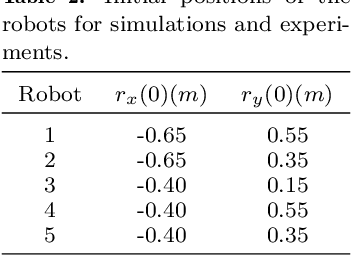

Abstract: This work is concerned with the problem of planning trajectories and assigning tasks for a Multi-Agent System (MAS) comprised of differential drive robots. We propose a multirate hierarchical control structure that employs a planner based on robust Model Predictive Control (MPC) with mixed-integer programming (MIP) encoding. The planner computes trajectories and assigns tasks for each element of the group in real-time, while also guaranteeing that the communication network of the MAS remains robustly connected at all times. Additionally, we provide a data-based methodology to estimate the disturbance sets required by the robust MPC formulation. The results are demonstrated with experiments in two obstacle-filled scenarios.
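To make the connectivity requirement concrete, the following is a minimal sketch of checking whether a group of robots forms a connected communication network. It is not the paper's MIP encoding: it assumes a simple disk model in which two robots can communicate when their distance is at most a common range, and the names `is_connected`, `positions`, and `comm_range` are hypothetical.

```python
import math
from collections import deque


def is_connected(positions, comm_range):
    """Return True if the range-limited communication graph is connected.

    Assumption (not from the abstract): two robots are linked whenever
    their Euclidean distance is at most `comm_range` (disk model).
    """
    n = len(positions)
    if n == 0:
        return True  # an empty team is trivially connected

    # Breadth-first search starting from robot 0.
    visited = {0}
    queue = deque([0])
    while queue:
        i = queue.popleft()
        for j in range(n):
            if j in visited:
                continue
            if math.dist(positions[i], positions[j]) <= comm_range:
                visited.add(j)
                queue.append(j)

    # Connected if and only if every robot was reached.
    return len(visited) == n
```

A planner that enforces connectivity would need such a condition to hold at every step of the planned horizon, with extra margin when disturbances are accounted for; the check above only verifies a single snapshot of positions.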
