Maria Luce Lupetti

The Unbearable Lightness of Prompting: A Critical Reflection on the Environmental Impact of genAI use in Design Education

Jan 27, 2025

Abstract:Design educators are finding ways to support students in skillfully using GenAI tools in their practices while encouraging the critical scrutiny of the ethical and social issues around these technologies. However, the issue of environmental sustainability remains unaddressed. There is a lack of both resources to grasp the environmental costs of genAI in education and a lack of shared practices for engaging with the issue. This paper critically reflects on the energy costs of using genAI in design education, using a workshop held in 2023 with 49 students as a motivating example. Through this reflection, we develop a set of five alternative stances, with related actions, that support the conscious use of genAI in design education. The work contributes to the field of design and HCI by bringing together ways for educators to reflect on their practices, informing the future development of educational programs around genAI.

(Un)making AI Magic: a Design Taxonomy

Mar 22, 2024

Abstract:This paper examines the role that enchantment plays in the design of AI things by constructing a taxonomy of design approaches that increase or decrease the perception of magic and enchantment. We start from the design discourse surrounding recent developments in AI technologies, highlighting specific interaction qualities such as algorithmic uncertainties and errors and articulating relations to the rhetoric of magic and supernatural thinking. Through analyzing and reflecting upon 52 students' design projects from two editions of a Master course in design and AI, we identify seven design principles and unpack the effects of each in terms of enchantment and disenchantment. We conclude by articulating ways in which this taxonomy can be approached and appropriated by design/HCI practitioners, especially to support exploration and reflexivity.

Safe Spot: Perceived safety of dominant and submissive appearances of quadruped robots in human-robot interactions

Mar 08, 2024

Abstract:Unprecedented possibilities of quadruped robots have driven much research on the technical aspects of these robots. However, the social perception and acceptability of quadruped robots so far remain poorly understood. This work investigates whether the way we design quadruped robots' behaviors can affect people's perception of safety in interactions with these robots. We designed and tested a dominant and submissive personality for the quadruped robot (Boston Dynamics Spot). These were tested in two different walking scenarios (head-on and crossing interactions) in a 2x2 within-subjects study. We collected both behavioral data and subjective reports on participants' perception of the interaction. The results highlight that participants perceived the submissive robot as safer compared to the dominant one. The behavioral dynamics of interactions did not change depending on the robot's appearance. Participants' previous in-person experience with the robot was associated with lower subjective safety ratings but did not correlate with the interaction dynamics. Our findings have implications for the design of quadruped robots and contribute to the body of knowledge on the social perception of non-humanoid robots. We call for a stronger standing of felt experiences in human-robot interaction research.

Grasping AI: experiential exercises for designers

Oct 02, 2023

Abstract:Artificial intelligence (AI) and machine learning (ML) are increasingly integrated into the functioning of physical and digital products, creating unprecedented opportunities for interaction and functionality. However, there is a challenge for designers to ideate within this creative landscape, balancing the possibilities of technology with human interactional concerns. We investigate techniques for exploring and reflecting on the interactional affordances, the unique relational possibilities, and the wider social implications of AI systems. We introduced into an interaction design course (n=100) nine 'AI exercises' that draw on more than human design, responsible AI, and speculative enactment to create experiential engagements around AI interaction design. We find that exercises around metaphors and enactments make questions of training and learning, privacy and consent, autonomy and agency more tangible, and thereby help students be more reflective and responsible on how to design with AI and its complex properties in both their design process and outcomes.

Meaningful human control over AI systems: beyond talking the talk

Nov 25, 2021

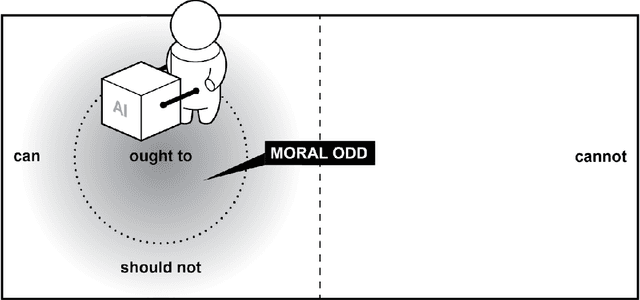

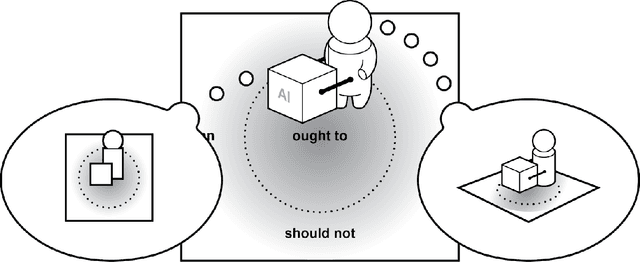

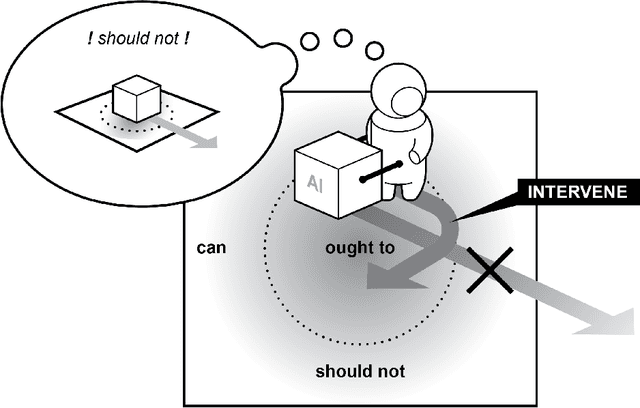

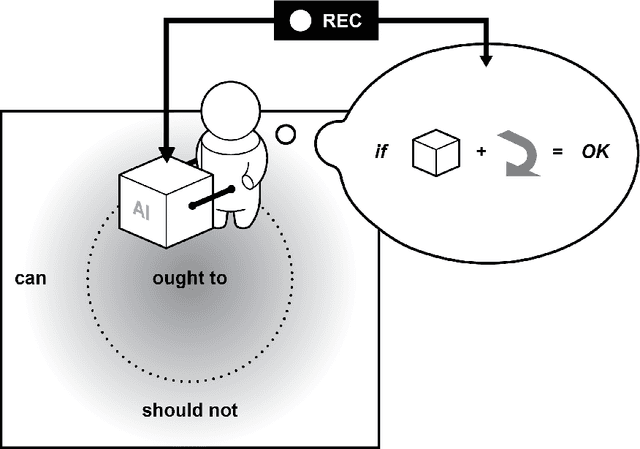

Abstract:The concept of meaningful human control has been proposed to address responsibility gaps and mitigate them by establishing conditions that enable a proper attribution of responsibility for humans (e.g., users, designers and developers, manufacturers, legislators). However, the relevant discussions around meaningful human control have so far not resulted in clear requirements for researchers, designers, and engineers. As a result, there is no consensus on how to assess whether a designed AI system is under meaningful human control, making the practical development of AI-based systems that remain under meaningful human control challenging. In this paper, we address the gap between philosophical theory and engineering practice by identifying four actionable properties which AI-based systems must have to be under meaningful human control. First, a system in which humans and AI algorithms interact should have an explicitly defined domain of morally loaded situations within which the system ought to operate. Second, humans and AI agents within the system should have appropriate and mutually compatible representations. Third, responsibility attributed to a human should be commensurate with that human's ability and authority to control the system. Fourth, there should be explicit links between the actions of the AI agents and actions of humans who are aware of their moral responsibility. We argue these four properties are necessary for AI systems under meaningful human control, and provide possible directions to incorporate them into practice. We illustrate these properties with two use cases, automated vehicle and AI-based hiring. We believe these four properties will support practically-minded professionals to take concrete steps toward designing and engineering for AI systems that facilitate meaningful human control and responsibility.
