Dave Murray-Rust

Towards Meaningful Transparency in Civic AI Systems

Oct 09, 2025

Abstract:Artificial intelligence has become a part of the provision of governmental services, from making decisions about benefits to issuing fines for parking violations. However, AI systems rarely live up to the promise of neutral optimisation, creating biased or incorrect outputs and reducing the agency of both citizens and civic workers to shape the way decisions are made. Transparency is a principle that can both help subjects understand decisions made about them and shape the processes behind those decisions. However, transparency as practiced around AI systems tends to focus on the production of technical objects that represent algorithmic aspects of decision making. These are often difficult for publics to understand, do not connect to potential for action, and do not give insight into the wider socio-material context of decision making. In this paper, we build on existing approaches that take a human-centric view on AI transparency, combined with a socio-technical systems view, to develop the concept of meaningful transparency for civic AI systems: transparencies that allow publics to engage with AI systems that affect their lives, connecting understanding with potential for action.

The Unbearable Lightness of Prompting: A Critical Reflection on the Environmental Impact of genAI use in Design Education

Jan 27, 2025

Abstract:Design educators are finding ways to support students in skillfully using GenAI tools in their practices while encouraging the critical scrutiny of the ethical and social issues around these technologies. However, the issue of environmental sustainability remains unaddressed. There is a lack of both resources to grasp the environmental costs of genAI in education and a lack of shared practices for engaging with the issue. This paper critically reflects on the energy costs of using genAI in design education, using a workshop held in 2023 with 49 students as a motivating example. Through this reflection, we develop a set of five alternative stances, with related actions, that support the conscious use of genAI in design education. The work contributes to the field of design and HCI by bringing together ways for educators to reflect on their practices, informing the future development of educational programs around genAI.

(Un)making AI Magic: a Design Taxonomy

Mar 22, 2024

Abstract:This paper examines the role that enchantment plays in the design of AI things by constructing a taxonomy of design approaches that increase or decrease the perception of magic and enchantment. We start from the design discourse surrounding recent developments in AI technologies, highlighting specific interaction qualities such as algorithmic uncertainties and errors and articulating relations to the rhetoric of magic and supernatural thinking. Through analyzing and reflecting upon 52 students' design projects from two editions of a Master course in design and AI, we identify seven design principles and unpack the effects of each in terms of enchantment and disenchantment. We conclude by articulating ways in which this taxonomy can be approached and appropriated by design/HCI practitioners, especially to support exploration and reflexivity.

Unpacking Human-AI interactions: From interaction primitives to a design space

Jan 10, 2024

Abstract:This paper aims to develop a semi-formal design space for Human-AI interactions, by building a set of interaction primitives which specify the communication between users and AI systems during their interaction. We show how these primitives can be combined into a set of interaction patterns which can provide an abstract specification for exchanging messages between humans and AI/ML models to carry out purposeful interactions. The motivation behind this is twofold: firstly, to provide a compact generalisation of existing practices, that highlights the similarities and differences between systems in terms of their interaction behaviours; and secondly, to support the creation of new systems, in particular by opening the space of possibilities for interactions with models. We present a short literature review on frameworks, guidelines and taxonomies related to the design and implementation of HAI interactions, including human-in-the-loop, explainable AI, as well as hybrid intelligence and collaborative learning approaches. From the literature review, we define a vocabulary for describing information exchanges in terms of providing and requesting particular model-specific data types. Based on this vocabulary, a message passing model for interactions between humans and models is presented, which we demonstrate can account for existing systems and approaches. Finally, we build this into design patterns as mid-level constructs that capture common interactional structures. We discuss how this approach can be used towards a design space for Human-AI interactions that creates new possibilities for designs as well as keeping track of implementation issues and concerns.

Grasping AI: experiential exercises for designers

Oct 02, 2023

Abstract:Artificial intelligence (AI) and machine learning (ML) are increasingly integrated into the functioning of physical and digital products, creating unprecedented opportunities for interaction and functionality. However, there is a challenge for designers to ideate within this creative landscape, balancing the possibilities of technology with human interactional concerns. We investigate techniques for exploring and reflecting on the interactional affordances, the unique relational possibilities, and the wider social implications of AI systems. We introduced into an interaction design course (n=100) nine 'AI exercises' that draw on more than human design, responsible AI, and speculative enactment to create experiential engagements around AI interaction design. We find that exercises around metaphors and enactments make questions of training and learning, privacy and consent, autonomy and agency more tangible, and thereby help students be more reflective and responsible on how to design with AI and its complex properties in both their design process and outcomes.

Towards a multi-stakeholder value-based assessment framework for algorithmic systems

May 09, 2022

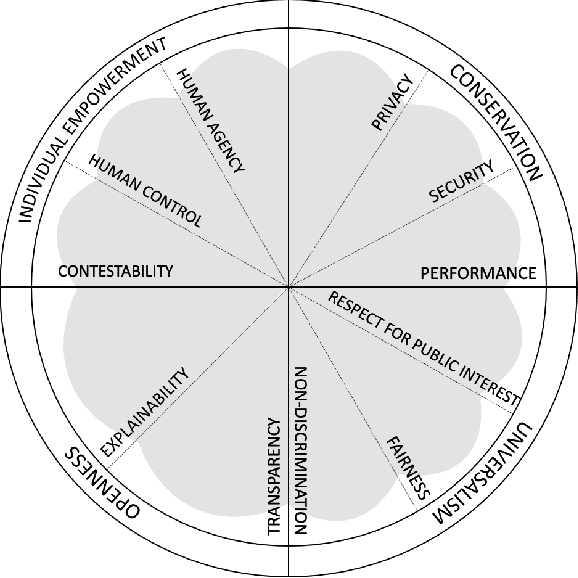

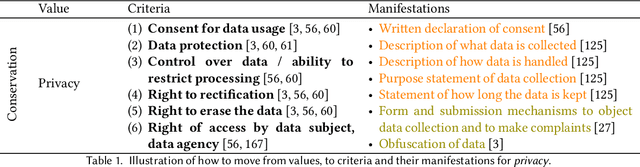

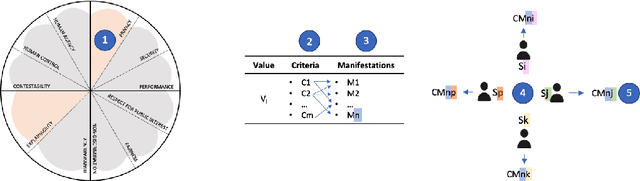

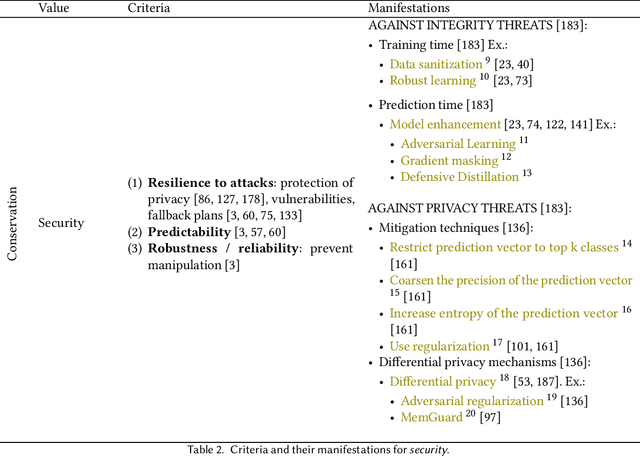

Abstract:In an effort to regulate Machine Learning-driven (ML) systems, current auditing processes mostly focus on detecting harmful algorithmic biases. While these strategies have proven to be impactful, some values outlined in documents dealing with ethics in ML-driven systems are still underrepresented in auditing processes. Such unaddressed values mainly deal with contextual factors that cannot be easily quantified. In this paper, we develop a value-based assessment framework that is not limited to bias auditing and that covers prominent ethical principles for algorithmic systems. Our framework presents a circular arrangement of values with two bipolar dimensions that make common motivations and potential tensions explicit. In order to operationalize these high-level principles, values are then broken down into specific criteria and their manifestations. However, some of these value-specific criteria are mutually exclusive and require negotiation. As opposed to some other auditing frameworks that merely rely on ML researchers' and practitioners' input, we argue that it is necessary to include stakeholders that present diverse standpoints to systematically negotiate and consolidate value and criteria tensions. To that end, we map stakeholders with different insight needs, and assign tailored means for communicating value manifestations to them. We, therefore, contribute to current ML auditing practices with an assessment framework that visualizes closeness and tensions between values and we give guidelines on how to operationalize them, while opening up the evaluation and deliberation process to a wide range of stakeholders.

Experiential AI

Aug 06, 2019

Abstract:Experiential AI is proposed as a new research agenda in which artists and scientists come together to dispel the mystery of algorithms and make their mechanisms vividly apparent. It addresses the challenge of finding novel ways of opening up the field of artificial intelligence to greater transparency and collaboration between human and machine. The hypothesis is that art can mediate between computer code and human comprehension to overcome the limitations of explanations in and for AI systems. Artists can make the boundaries of systems visible and offer novel ways to make the reasoning of AI transparent and decipherable. Beyond this, artistic practice can explore new configurations of humans and algorithms, mapping the terrain of inter-agencies between people and machines. This helps to viscerally understand the complex causal chains in environments with AI components, including questions about what data to collect or who to collect it about, how the algorithms are chosen, commissioned and configured or how humans are conditioned by their participation in algorithmic processes.

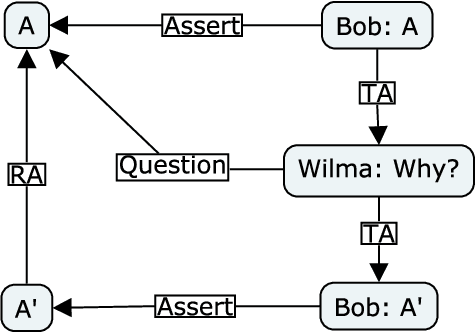

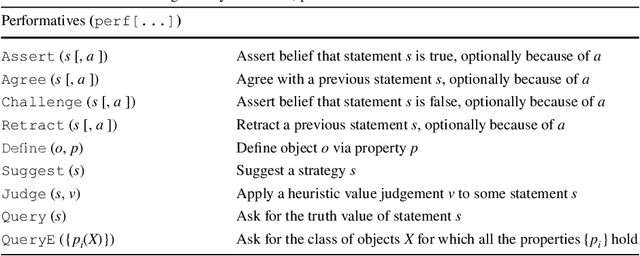

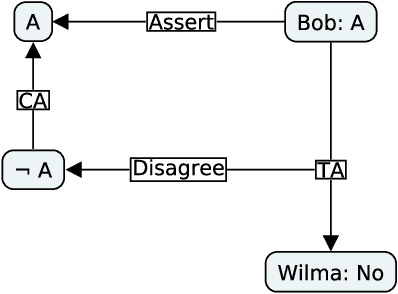

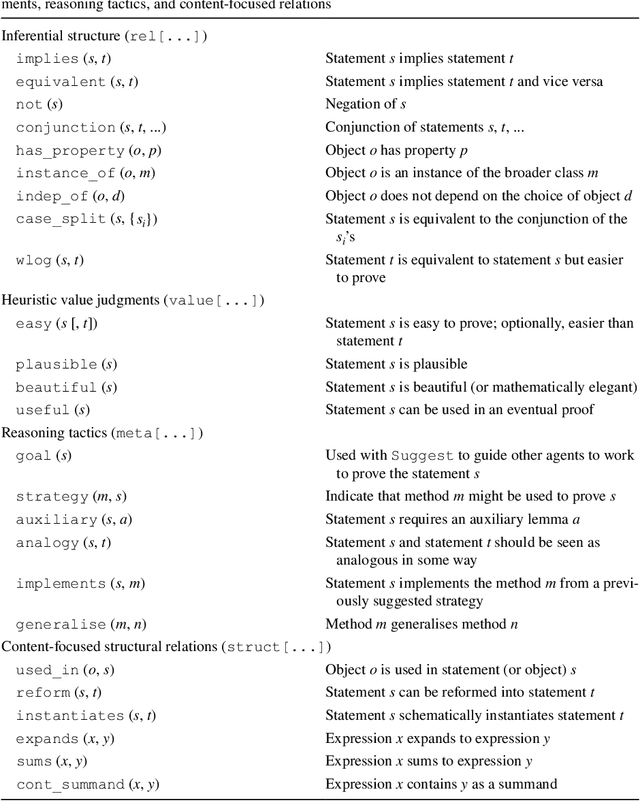

Argumentation theory for mathematical argument

Jul 15, 2018

Abstract:To adequately model mathematical arguments the analyst must be able to represent the mathematical objects under discussion and the relationships between them, as well as inferences drawn about these objects and relationships as the discourse unfolds. We introduce a framework with these properties, which has been used to analyse mathematical dialogues and expository texts. The framework can recover salient elements of discourse at, and within, the sentence level, as well as the way mathematical content connects to form larger argumentative structures. We show how the framework might be used to support computational reasoning, and argue that it provides a more natural way to examine the process of proving theorems than do Lamport's structured proofs.
