Evgeni Aizenberg

Meaningful human control over AI systems: beyond talking the talk

Nov 25, 2021

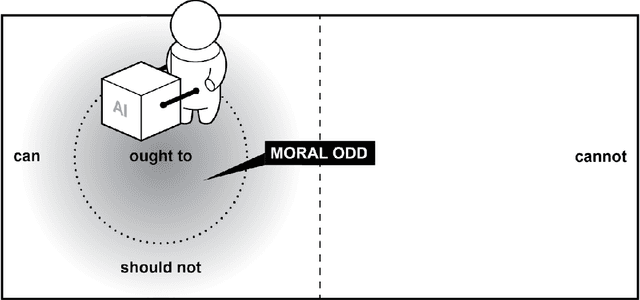

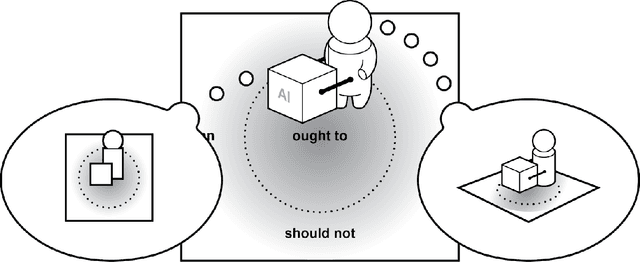

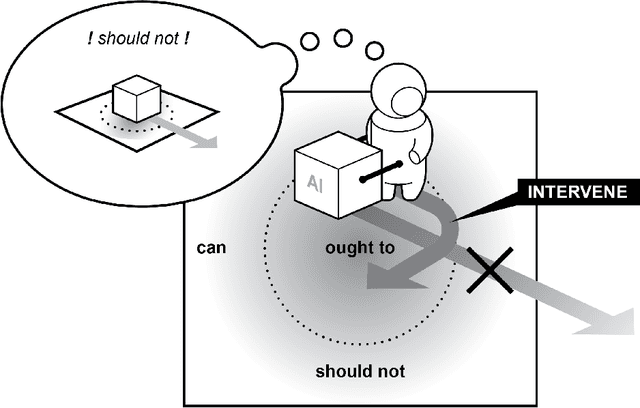

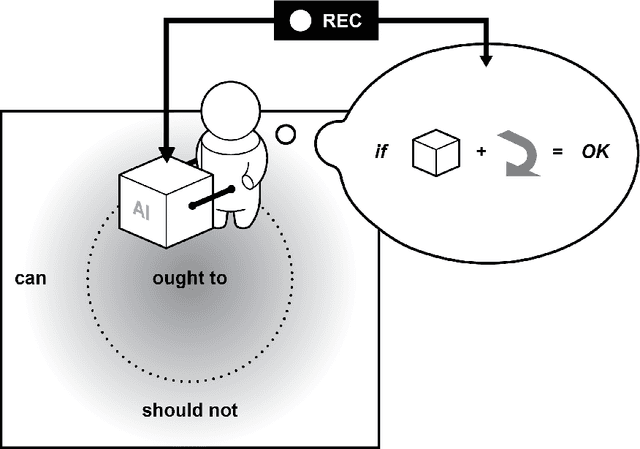

Abstract: The concept of meaningful human control has been proposed to address responsibility gaps and mitigate them by establishing conditions that enable a proper attribution of responsibility for humans (e.g., users, designers and developers, manufacturers, legislators). However, the relevant discussions around meaningful human control have so far not resulted in clear requirements for researchers, designers, and engineers. As a result, there is no consensus on how to assess whether a designed AI system is under meaningful human control, making the practical development of AI-based systems that remain under meaningful human control challenging. In this paper, we address the gap between philosophical theory and engineering practice by identifying four actionable properties which AI-based systems must have to be under meaningful human control. First, a system in which humans and AI algorithms interact should have an explicitly defined domain of morally loaded situations within which the system ought to operate. Second, humans and AI agents within the system should have appropriate and mutually compatible representations. Third, responsibility attributed to a human should be commensurate with that human's ability and authority to control the system. Fourth, there should be explicit links between the actions of the AI agents and actions of humans who are aware of their moral responsibility. We argue these four properties are necessary for AI systems under meaningful human control, and provide possible directions to incorporate them into practice. We illustrate these properties with two use cases, automated vehicle and AI-based hiring. We believe these four properties will support practically-minded professionals to take concrete steps toward designing and engineering for AI systems that facilitate meaningful human control and responsibility.

Designing for Human Rights in AI

May 11, 2020

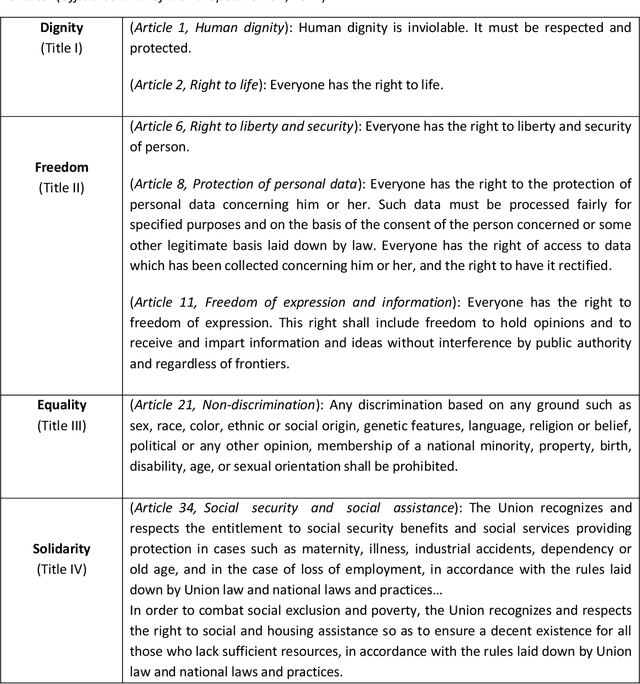

Abstract: In the age of big data, companies and governments are increasingly using algorithms to inform hiring decisions, employee management, policing, credit scoring, insurance pricing, and many more aspects of our lives. AI systems can help us make evidence-driven, efficient decisions, but can also confront us with unjustified, discriminatory decisions wrongly assumed to be accurate because they are made automatically and quantitatively. It is becoming evident that these technological developments are consequential to people's fundamental human rights. Despite increasing attention to these urgent challenges in recent years, technical solutions to these complex socio-ethical problems are often developed without empirical study of societal context and the critical input of societal stakeholders who are impacted by the technology. On the other hand, calls for more ethically- and socially-aware AI often fail to provide answers for how to proceed beyond stressing the importance of transparency, explainability, and fairness. Bridging these socio-technical gaps and the deep divide between abstract value language and design requirements is essential to facilitate nuanced, context-dependent design choices that will support moral and social values. In this paper, we bridge this divide through the framework of Design for Values, drawing on methodologies of Value Sensitive Design and Participatory Design to present a roadmap for proactively engaging societal stakeholders to translate fundamental human rights into context-dependent design requirements through a structured, inclusive, and transparent process.
