Alfredo Kalaitzis

University of Sheffield

HighRes-net: Recursive Fusion for Multi-Frame Super-Resolution of Satellite Imagery

Feb 15, 2020

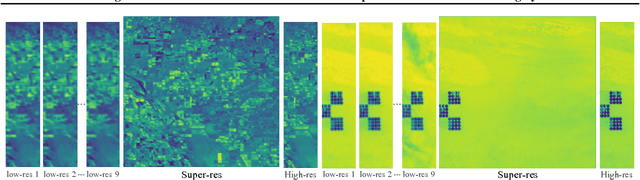

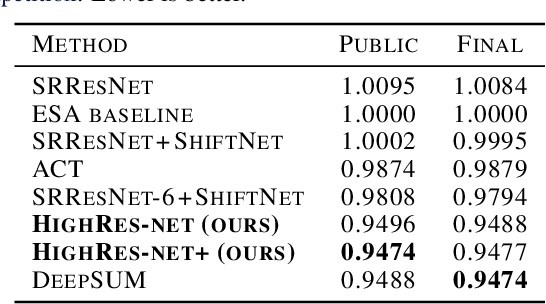

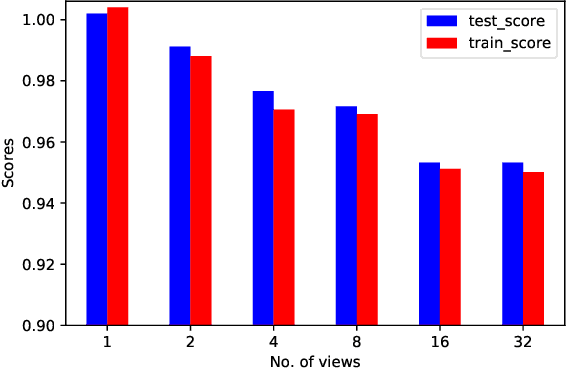

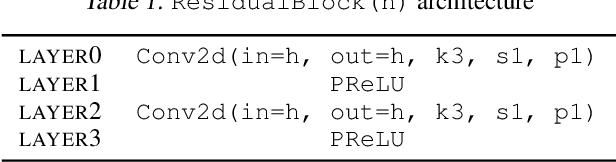

Abstract: Generative deep learning has sparked a new wave of Super-Resolution (SR) algorithms that enhance single images with impressive aesthetic results, albeit with imaginary details. Multi-frame Super-Resolution (MFSR) offers a more grounded approach to the ill-posed problem, by conditioning on multiple low-resolution views. This is important for satellite monitoring of human impact on the planet, from deforestation to human rights violations, which depends on reliable imagery. To this end, we present HighRes-net, the first deep learning approach to MFSR that learns its sub-tasks in an end-to-end fashion: (i) co-registration, (ii) fusion, (iii) up-sampling, and (iv) registration-at-the-loss. Co-registration of low-resolution views is learned implicitly through a reference-frame channel, with no explicit registration mechanism. We learn a global fusion operator that is applied recursively on an arbitrary number of low-resolution pairs. We introduce a registered loss, by learning to align the SR output to the ground truth through ShiftNet. We show that by learning deep representations of multiple views, we can super-resolve low-resolution signals and enhance Earth Observation data at scale. Our approach recently topped the European Space Agency's MFSR competition on real-world satellite imagery.
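To illustrate what "applied recursively on pairs" could look like in practice, here is a minimal sketch, not the HighRes-net implementation. The function names `fuse_pair` and `recursive_fuse` are hypothetical, simple averaging stands in for the learned fusion operator, and carrying an unpaired view forward is an assumption made only to handle odd counts.

```python
import numpy as np

def fuse_pair(a, b):
    # Placeholder for a learned fusion operator (assumption: plain averaging).
    return 0.5 * (a + b)

def recursive_fuse(views):
    """Fuse a list of same-shaped arrays by repeatedly fusing adjacent pairs."""
    views = list(views)
    if not views:
        raise ValueError("need at least one view")
    while len(views) > 1:
        # Fuse views (0,1), (2,3), ... so the list roughly halves each round.
        fused = [fuse_pair(views[i], views[i + 1])
                 for i in range(0, len(views) - 1, 2)]
        if len(views) % 2 == 1:
            # Assumption: an unpaired last view is carried to the next round.
            fused.append(views[-1])
        views = fused
    return views[0]
```

With this scheme, the same operator is reused at every level, so the number of fusion rounds grows logarithmically with the number of views.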

Single-Frame Super-Resolution of Solar Magnetograms: Investigating Physics-Based Metrics & Losses

Nov 04, 2019

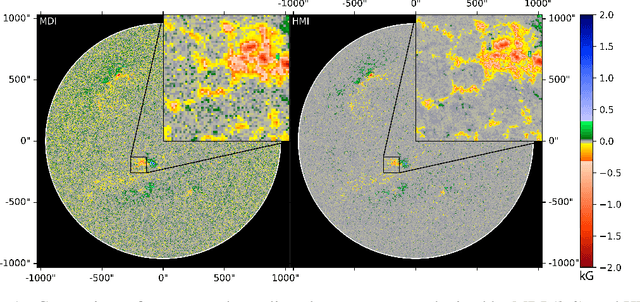

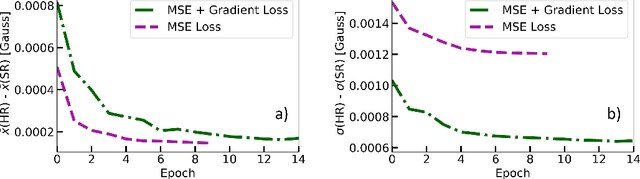

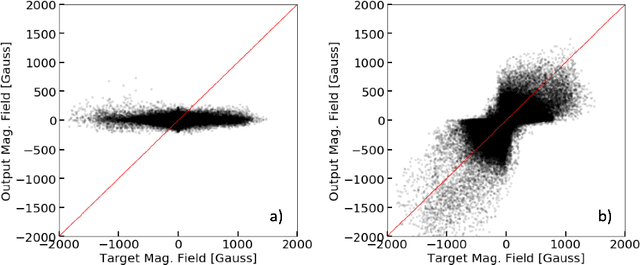

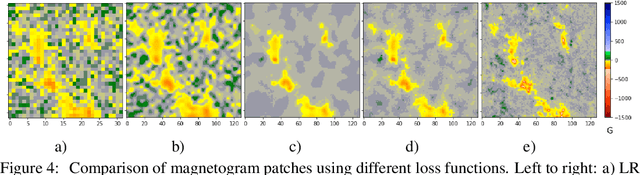

Abstract: Breakthroughs in our understanding of physical phenomena have traditionally followed improvements in instrumentation. Studies of the magnetic field of the Sun, and its influence on the solar dynamo and space weather events, have benefited from improvements in resolution and measurement frequency of new instruments. However, in order to fully understand the solar cycle, high-quality data across time-scales longer than the typical lifespan of a solar instrument are required. At the moment, discrepancies between measurement surveys prevent the combined use of all available data. In this work, we show that machine learning can help bridge the gap between measurement surveys by learning to **super-resolve** low-resolution magnetic field images and **translate** between characteristics of contemporary instruments in orbit. We also introduce the notion of physics-based metrics and losses for super-resolution to preserve underlying physics and constrain the solution space of possible super-resolution outputs.

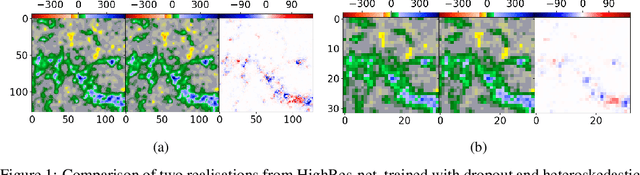

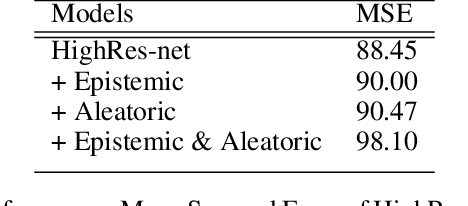

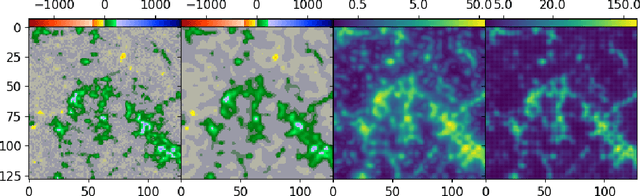

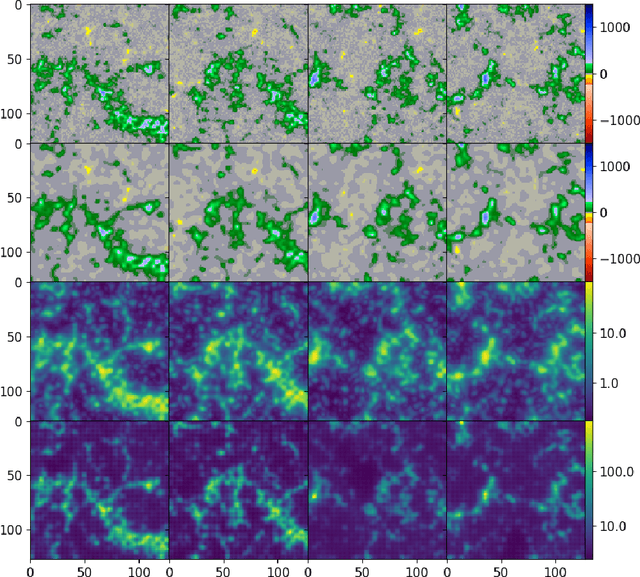

Probabilistic Super-Resolution of Solar Magnetograms: Generating Many Explanations and Measuring Uncertainties

Nov 04, 2019

Abstract: Machine learning techniques have been successfully applied to super-resolution tasks on natural images, where visually pleasing results are sufficient. However, in many scientific domains this is not adequate, and estimates of errors and uncertainties are crucial. To address this issue, we propose a Bayesian framework that decomposes uncertainties into epistemic and aleatoric uncertainties. We test the validity of our approach by super-resolving images of the Sun's magnetic field and by generating maps measuring the range of possible high-resolution explanations compatible with a given low-resolution magnetogram.
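The abstract does not say how the decomposition is computed. One common way to split predictive uncertainty, shown here only as a hedged sketch, uses the law of total variance over several stochastic predictions (for example from an ensemble or repeated sampling). The function name `decompose_uncertainty` and its inputs are hypothetical.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split predictive variance per pixel using the law of total variance.

    means, variances: arrays of shape (n_samples, ...) holding, for each
    stochastic forward pass, the predicted mean and predicted noise variance.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    aleatoric = variances.mean(axis=0)   # expected data noise
    epistemic = means.var(axis=0)        # disagreement between samples
    return aleatoric, epistemic, aleatoric + epistemic
```

Under this convention, epistemic uncertainty shrinks as the sampled predictions agree, while aleatoric uncertainty reflects noise that more model capacity or data would not remove.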

Correlation of Auroral Dynamics and GNSS Scintillation with an Autoencoder

Oct 04, 2019

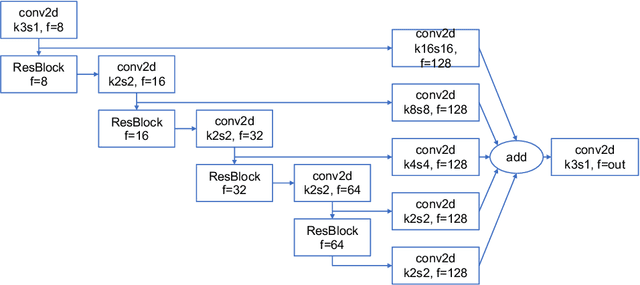

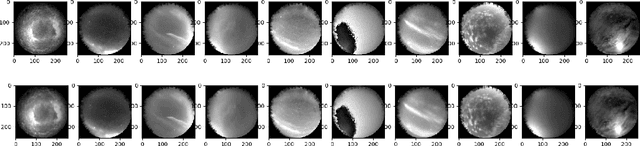

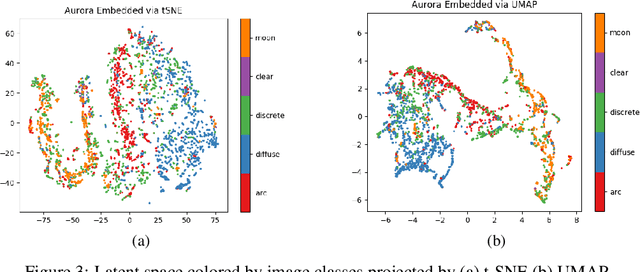

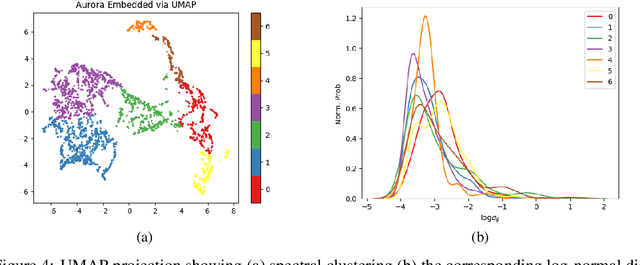

Abstract: High-energy particles originating from solar activity travel along the Earth's magnetic field and interact with the atmosphere around the higher latitudes. These interactions often manifest as aurora in the form of visible light in the Earth's ionosphere. These interactions also result in irregularities in the electron density, which cause disruptions in the amplitude and phase of the radio signals from the Global Navigation Satellite Systems (GNSS), known as 'scintillation'. In this paper we use a multi-scale residual autoencoder (Res-AE) to show the correlation between specific dynamic structures of the aurora and the magnitude of the GNSS phase scintillations ($\sigma_{\phi}$). Auroral images are encoded in a lower-dimensional feature space using the Res-AE, and the encodings are then clustered with t-SNE and UMAP. Both methods produce similar clusters, and specific clusters demonstrate greater correlations with observed phase scintillations. Our results suggest that specific dynamic structures of auroras are highly correlated with GNSS phase scintillations.

Prediction of GNSS Phase Scintillations: A Machine Learning Approach

Oct 03, 2019

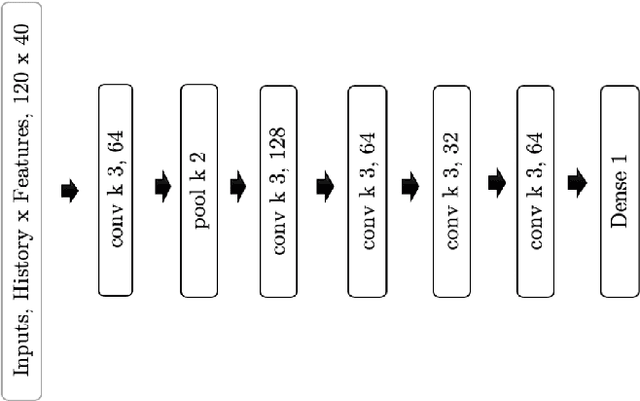

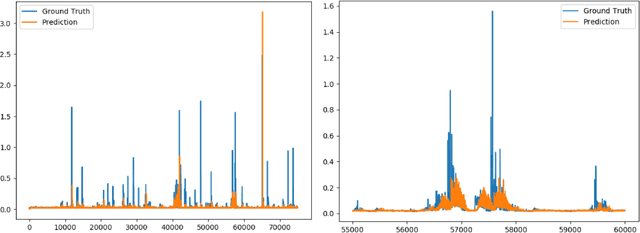

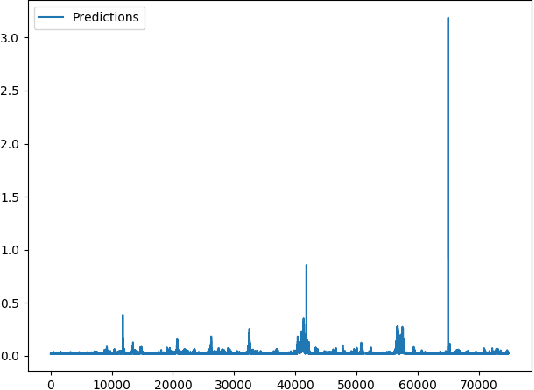

Abstract: A Global Navigation Satellite System (GNSS) uses a constellation of satellites around the Earth for accurate navigation, timing, and positioning. Natural phenomena like space weather introduce irregularities in the Earth's ionosphere, disrupting the propagation of the radio signals that GNSS relies upon. Such disruptions affect both the amplitude and the phase of the propagated waves. No physics-based model currently exists to predict the time and location of these disruptions with sufficient accuracy and at relevant scales. In this paper, we focus on predicting the phase fluctuations of GNSS radio waves, known as phase scintillations. We propose a novel architecture and loss function to predict, 1 hour in advance, the magnitude of phase scintillations within a time window of plus or minus 5 minutes, with state-of-the-art performance.

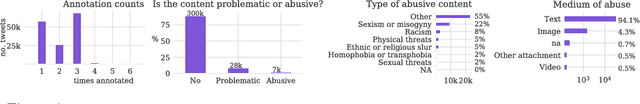

A large-scale crowdsourced analysis of abuse against women journalists and politicians on Twitter

Jan 31, 2019

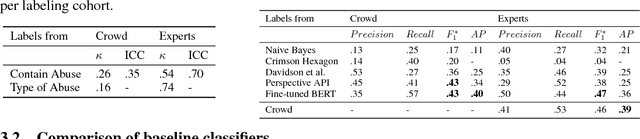

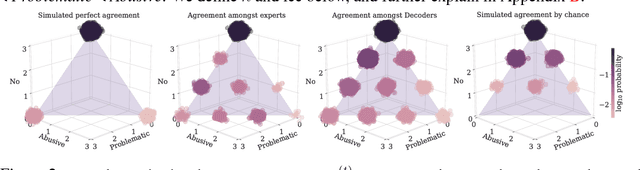

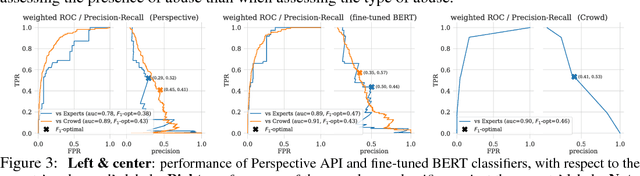

Abstract: We report the first, to the best of our knowledge, hand-in-hand collaboration between human rights activists and machine learners, leveraging crowd-sourcing to study online abuse against women on Twitter. On the technical front, we carefully curate an unbiased yet low-variance dataset of labeled tweets, analyze it to account for the variability of abuse perception, and establish baselines, preparing it for release to community research efforts. On the social impact front, this study provides the technical backbone for a media campaign aimed at raising awareness among the public and decision-makers, and at elevating the standards expected from social media companies.

Flexible sampling of discrete data correlations without the marginal distributions

Nov 14, 2013

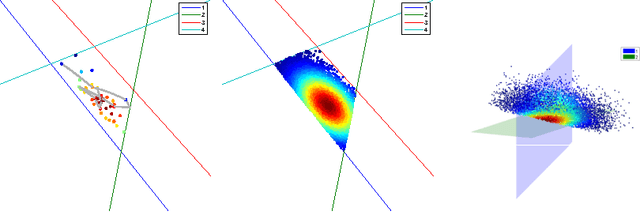

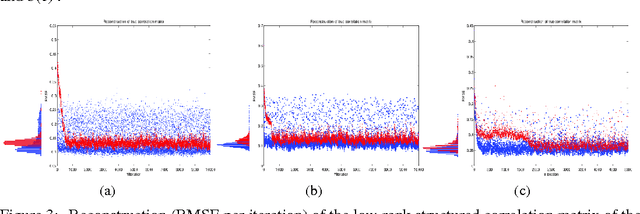

Abstract: Learning the joint dependence of discrete variables is a fundamental problem in machine learning, with many applications including prediction, clustering and dimensionality reduction. More recently, the framework of copula modeling has gained popularity due to its modular parametrization of joint distributions. Among other properties, copulas provide a recipe for combining flexible models for univariate marginal distributions with parametric families suitable for potentially high-dimensional dependence structures. More radically, the extended rank likelihood approach of Hoff (2007) bypasses learning marginal models completely when such information is ancillary to the learning task at hand, as in, e.g., standard dimensionality reduction problems or copula parameter estimation. The main idea is to represent data by their observable rank statistics, ignoring any other information from the marginals. Inference is typically done in a Bayesian framework with Gaussian copulas, and it is complicated by the fact that this implies sampling within a space where the number of constraints increases quadratically with the number of data points. The result is slow mixing when using off-the-shelf Gibbs sampling. We present an efficient algorithm based on recent advances in constrained Hamiltonian Markov chain Monte Carlo that is simple to implement and does not require paying for a quadratic cost in sample size.
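The idea of representing data by rank statistics can be illustrated with a small sketch: ranks are unchanged by any strictly increasing transformation of a variable, which is why they carry no information about the marginal. The helper name `dense_ranks` is hypothetical, and this shows only the rank representation, not the sampling algorithm from the paper.

```python
import numpy as np

def dense_ranks(x):
    """Map each value to its rank among the distinct values (ties share a rank)."""
    x = np.asarray(x)
    _, inverse = np.unique(x, return_inverse=True)
    return inverse

# Discrete observations with ties, and a strictly increasing transform of them.
x = np.array([3, 1, 1, 2])
ranks_x = dense_ranks(x)
ranks_fx = dense_ranks(np.exp(x.astype(float)))
```

Here `ranks_x` and `ranks_fx` coincide, so any model fitted to the ranks alone is indifferent to how the marginal values are scaled.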

Residual Component Analysis: Generalising PCA for more flexible inference in linear-Gaussian models

Jun 18, 2012

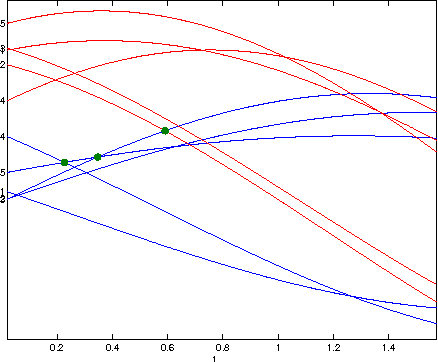

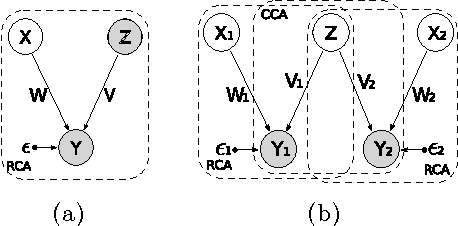

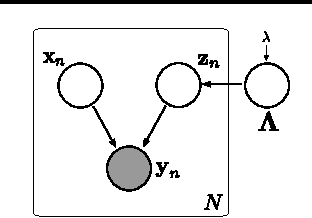

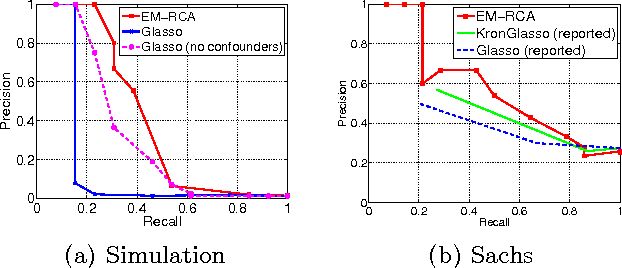

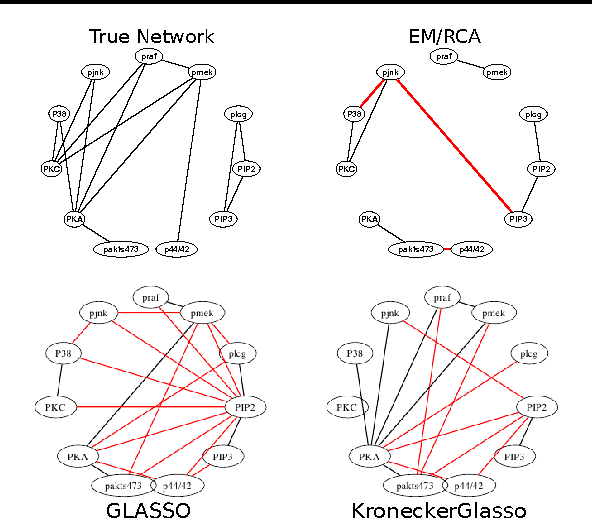

Abstract: Probabilistic principal component analysis (PPCA) seeks a low-dimensional representation of a data set in the presence of independent spherical Gaussian noise. The maximum likelihood solution for the model is an eigenvalue problem on the sample covariance matrix. In this paper we consider the situation where the data variance is already partially explained by other factors, for example sparse conditional dependencies between the covariates, or temporal correlations leaving some residual variance. We decompose the residual variance into its components through a generalised eigenvalue problem, which we call residual component analysis (RCA). We explore a range of new algorithms that arise from the framework, including one that factorises the covariance of a Gaussian density into a low-rank and a sparse-inverse component. We illustrate the ideas on the recovery of a protein-signaling network, a gene expression time-series data set and the recovery of the human skeleton from motion capture 3-D cloud data.
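As background for the eigenvalue problem mentioned above, the following sketch computes the standard closed-form maximum likelihood solution for plain PPCA (not RCA) from the sample covariance. The function name `ppca_ml` is hypothetical, and the code assumes the latent dimension `q` is smaller than the data dimension.

```python
import numpy as np

def ppca_ml(X, q):
    """Closed-form ML estimate of PPCA loadings W and noise variance sigma2."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # centre the data
    S = Xc.T @ Xc / X.shape[0]                   # sample covariance
    eigvals, eigvecs = np.linalg.eigh(S)         # ascending eigenvalues
    order = np.argsort(eigvals)[::-1]            # sort descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    sigma2 = eigvals[q:].mean()                  # average discarded variance
    scale = np.sqrt(np.maximum(eigvals[:q] - sigma2, 0.0))
    W = eigvecs[:, :q] * scale                   # loadings, up to rotation
    return W, sigma2
```

The implied model covariance `W @ W.T + sigma2 * np.eye(d)` matches the sample covariance in the top `q` eigen-directions and is spherical elsewhere.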
