Carl Shneider

Multiscale Dynamics Group, Center for Mathematics and Computer Science

Hardware Aware Evolutionary Neural Architecture Search using Representation Similarity Metric

Nov 07, 2023

Abstract:Hardware-aware Neural Architecture Search (HW-NAS) is a technique used to automatically design the architecture of a neural network for a specific task and target hardware. However, evaluating the performance of candidate architectures is a key challenge in HW-NAS, as it requires significant computational resources. To address this challenge, we propose an efficient hardware-aware evolution-based NAS approach called HW-EvRSNAS. Our approach re-frames the neural architecture search problem as finding an architecture with performance similar to that of a reference model for a target hardware, while adhering to a cost constraint for that hardware. This is achieved through a representation similarity metric known as Representation Mutual Information (RMI), employed as a proxy performance evaluator. It measures the mutual information between the hidden layer representations of a reference model and those of sampled architectures using a single training batch. We also use a penalty term that penalizes the search process in proportion to how far an architecture's hardware cost is from the desired hardware cost threshold. This significantly reduces search time compared to the literature, with speedups of up to 8000x, resulting in lower CO2 emissions. The proposed approach is evaluated on two different search spaces while using lower computational resources. Furthermore, our approach is thoroughly examined on six different edge devices under various hardware cost constraints.
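
As a rough illustration of the penalty idea described in this abstract, the Python sketch below scores a candidate architecture as a proxy similarity value minus a penalty that grows with the distance between its hardware cost and the target threshold. The function name `penalized_score`, the `penalty_weight` parameter, and the linear, threshold-normalized form of the penalty are all assumptions made for illustration; the abstract does not state the exact formula, and the similarity value here merely stands in for an RMI score.

```python
def penalized_score(similarity, hw_cost, cost_threshold, penalty_weight=1.0):
    """Hypothetical fitness: proxy similarity minus a cost-distance penalty.

    `similarity` stands in for a proxy score such as RMI (assumed to be
    "higher is better"); the penalty form is an illustrative assumption.
    """
    if cost_threshold <= 0:
        raise ValueError("cost_threshold must be positive")
    # Relative distance of the candidate's cost from the desired threshold.
    distance = abs(hw_cost - cost_threshold) / cost_threshold
    return similarity - penalty_weight * distance


# Example: rank made-up candidates given as (similarity, hardware cost) pairs.
candidates = {"arch_a": (0.92, 14.0), "arch_b": (0.88, 10.5), "arch_c": (0.95, 25.0)}
best = max(candidates, key=lambda name: penalized_score(*candidates[name], cost_threshold=10.0))
```

With these made-up numbers, the candidate closest to the cost threshold wins even though another candidate has a higher raw similarity, which is the trade-off the penalty term is meant to express.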

Impact of Disentanglement on Pruning Neural Networks

Jul 19, 2023

Abstract:Deploying deep learning neural networks on edge devices to accomplish task-specific objectives in the real world requires a reduction in their memory footprint, power consumption, and latency. This can be realized via efficient model compression. Disentangled latent representations produced by variational autoencoder (VAE) networks are a promising approach for achieving model compression because they mainly retain task-specific information, discarding information that is irrelevant to the task at hand. We make use of the Beta-VAE framework combined with a standard criterion for pruning to investigate the impact of forcing the network to learn disentangled representations on the pruning process for the task of classification. In particular, we perform experiments on the MNIST and CIFAR10 datasets, examine disentanglement challenges, and propose a path forward for future work.
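
For readers unfamiliar with the Beta-VAE framework mentioned in this abstract, the following NumPy sketch shows the usual form of its objective: a reconstruction term plus a KL divergence term weighted by a factor beta. The squared-error reconstruction term, the diagonal Gaussian posterior, the default `beta=4.0`, and the function name are assumptions chosen for illustration, not details taken from the paper.

```python
import numpy as np


def beta_vae_loss(x, x_recon, mu, logvar, beta=4.0):
    """Illustrative Beta-VAE objective, averaged over a batch.

    x, x_recon: arrays of shape (batch, features).
    mu, logvar: mean and log-variance of a diagonal Gaussian posterior,
    each of shape (batch, latent_dim).
    """
    # Reconstruction error per sample (squared error is an assumption).
    recon = np.sum((x - x_recon) ** 2, axis=1)
    # KL divergence between N(mu, exp(logvar)) and the standard normal prior.
    kl = 0.5 * np.sum(mu ** 2 + np.exp(logvar) - logvar - 1.0, axis=1)
    # beta > 1 puts extra pressure on the latent code to match the prior.
    return float(np.mean(recon + beta * kl))
```

Setting `beta=1.0` recovers the standard VAE objective; larger values trade reconstruction quality for a more constrained latent representation.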

A Survey on Deep Learning-Based Monocular Spacecraft Pose Estimation: Current State, Limitations and Prospects

May 17, 2023

Abstract:Estimating the pose of an uncooperative spacecraft is an important computer vision problem for enabling the deployment of automatic vision-based systems in orbit, with applications ranging from on-orbit servicing to space debris removal. Following the general trend in computer vision, more and more works have been focusing on leveraging Deep Learning (DL) methods to address this problem. However, despite promising research-stage results, major challenges still prevent the use of such methods in real-life missions. In particular, the deployment of such computation-intensive algorithms is still under-investigated, while the performance drop when training on synthetic images and testing on real images remains to be mitigated. The primary goal of this survey is to describe the current DL-based methods for spacecraft pose estimation in a comprehensive manner. The secondary goal is to help define the limitations towards the effective deployment of DL-based spacecraft pose estimation solutions for reliable autonomous vision-based applications. To this end, the survey first summarises the existing algorithms according to two approaches: hybrid modular pipelines and direct end-to-end regression methods. A comparison of algorithms is presented not only in terms of pose accuracy but also with a focus on network architectures and model sizes, keeping potential deployment in mind. Then, current monocular spacecraft pose estimation datasets used to train and test these methods are discussed. The data generation methods (simulators and testbeds), the domain gap and performance drop between synthetically generated and lab- or space-collected images, and potential solutions are also discussed. Finally, the paper presents open research questions and future directions in the field, drawing parallels with other computer vision applications.

A Machine-Learning-Ready Dataset Prepared from the Solar and Heliospheric Observatory Mission

Aug 04, 2021

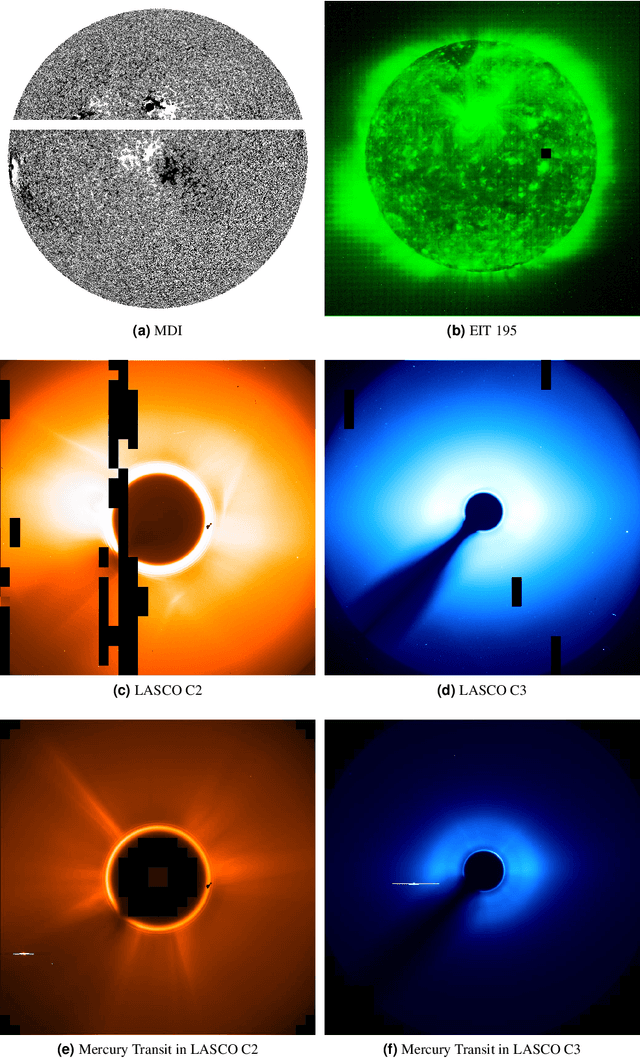

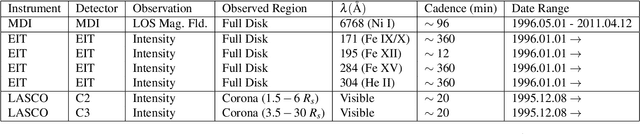

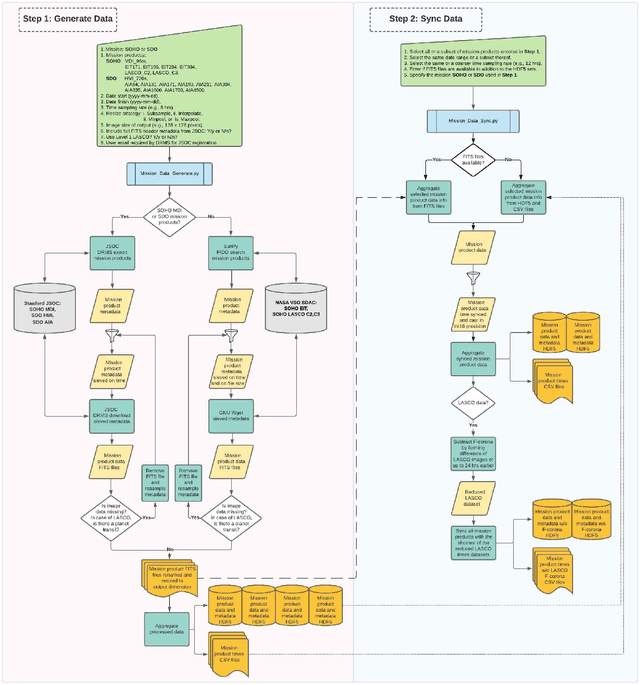

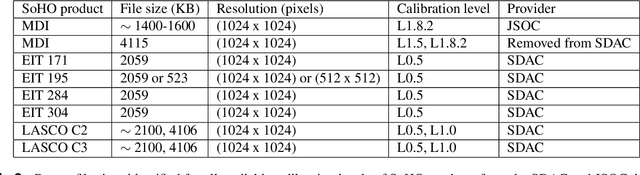

Abstract:We present a Python tool to generate a standard dataset from solar images that allows for user-defined selection criteria and a range of pre-processing steps. Our Python tool works with all image products from both the Solar and Heliospheric Observatory (SoHO) and Solar Dynamics Observatory (SDO) missions. We discuss a dataset produced from the SoHO mission's multi-spectral images that is free of missing or corrupt data and of planetary transits in coronagraph images, and is temporally synced, making it ready for input to a machine learning system. Machine-learning-ready images are a valuable resource for the community because they can be used, for example, for forecasting space weather parameters. We illustrate the use of this data with a 3-5 day-ahead forecast of the north-south component of the interplanetary magnetic field (IMF) observed at Lagrange point one (L1). For this use case, we apply a deep convolutional neural network (CNN) to a subset of the full SoHO dataset and compare with baseline results from a Gaussian Naive Bayes classifier.

Ideas for Improving the Field of Machine Learning: Summarizing Discussion from the NeurIPS 2019 Retrospectives Workshop

Jul 21, 2020

Abstract:This report documents ideas for improving the field of machine learning, which arose from discussions at the ML Retrospectives workshop at NeurIPS 2019. The goal of the report is to disseminate these ideas more broadly, and in turn encourage continuing discussion about how the field could improve along these axes. We focus on topics that were most discussed at the workshop: incentives for encouraging alternate forms of scholarship, re-structuring the review process, participation from academia and industry, and how we might better train computer scientists as scientists. Videos from the workshop can be accessed at https://slideslive.com/neurips/west-114-115-retrospectives-a-venue-for-selfreflection-in-ml-research

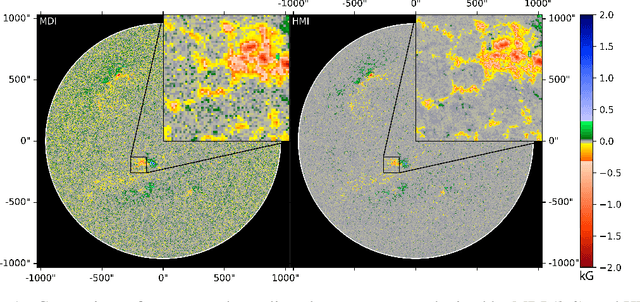

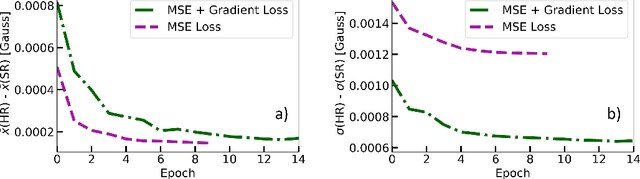

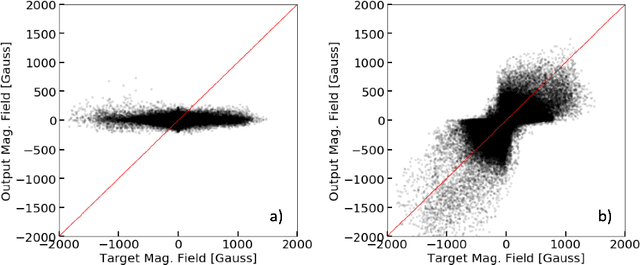

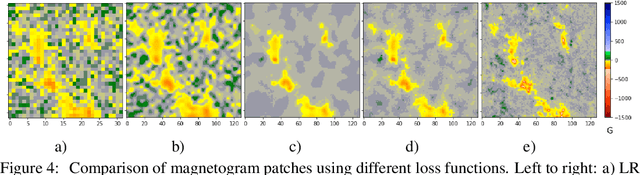

Single-Frame Super-Resolution of Solar Magnetograms: Investigating Physics-Based Metrics & Losses

Nov 04, 2019

Abstract:Breakthroughs in our understanding of physical phenomena have traditionally followed improvements in instrumentation. Studies of the magnetic field of the Sun, and its influence on the solar dynamo and space weather events, have benefited from improvements in resolution and measurement frequency of new instruments. However, in order to fully understand the solar cycle, high-quality data across time-scales longer than the typical lifespan of a solar instrument are required. At the moment, discrepancies between measurement surveys prevent the combined use of all available data. In this work, we show that machine learning can help bridge the gap between measurement surveys by learning to **super-resolve** low-resolution magnetic field images and **translate** between characteristics of contemporary instruments in orbit. We also introduce the notion of physics-based metrics and losses for super-resolution to preserve underlying physics and constrain the solution space of possible super-resolution outputs.

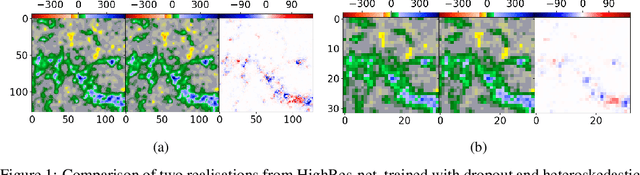

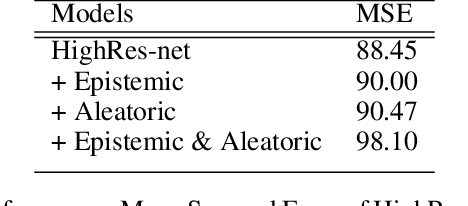

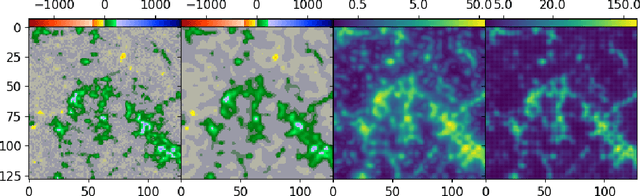

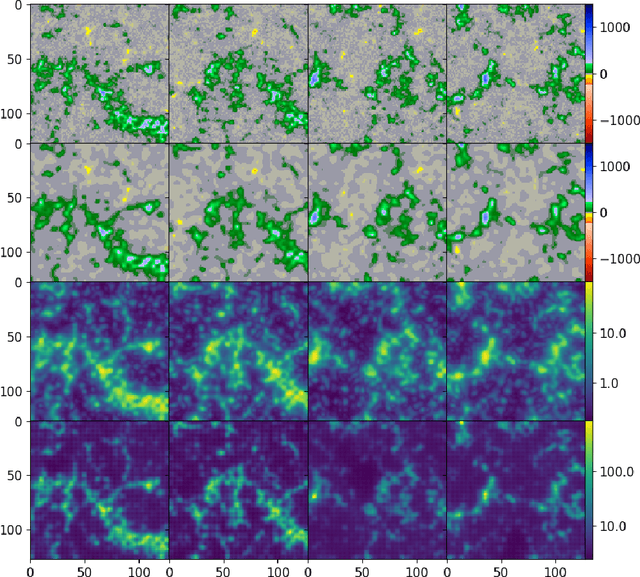

Probabilistic Super-Resolution of Solar Magnetograms: Generating Many Explanations and Measuring Uncertainties

Nov 04, 2019

Abstract:Machine learning techniques have been successfully applied to super-resolution tasks on natural images, where visually pleasing results are sufficient. However, in many scientific domains this is not adequate, and estimates of errors and uncertainties are crucial. To address this issue, we propose a Bayesian framework that decomposes uncertainties into epistemic and aleatoric uncertainties. We test the validity of our approach by super-resolving images of the Sun's magnetic field and by generating maps measuring the range of possible high-resolution explanations compatible with a given low-resolution magnetogram.
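
One common way to split predictive uncertainty into epistemic and aleatoric parts, sketched below in Python, uses the law of total variance over several stochastic predictions (for example, samples from an approximate posterior or ensemble members). This is a generic illustration and not necessarily the framework used in the paper; the function name and the assumption that each prediction supplies both a mean and a variance per pixel are hypothetical.

```python
import numpy as np


def decompose_uncertainty(means, variances):
    """Split predictive variance using the law of total variance.

    means, variances: arrays of shape (n_samples, ...) holding the
    predicted mean and predicted variance from each stochastic pass.
    Returns (epistemic, aleatoric), each with the per-pixel shape.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    # Aleatoric: average of the noise variance each prediction reports.
    aleatoric = variances.mean(axis=0)
    # Epistemic: disagreement between the predicted means.
    epistemic = means.var(axis=0)
    return epistemic, aleatoric
```

The sum of the two returned maps gives the total predictive variance per pixel under this decomposition.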
