Enrico Camporeale

CIRES, University of Colorado, Boulder, CO, USA, NOAA, Space Weather Prediction Center, Boulder, CO, USA

A Machine-Learning-Ready Dataset Prepared from the Solar and Heliospheric Observatory Mission

Aug 04, 2021

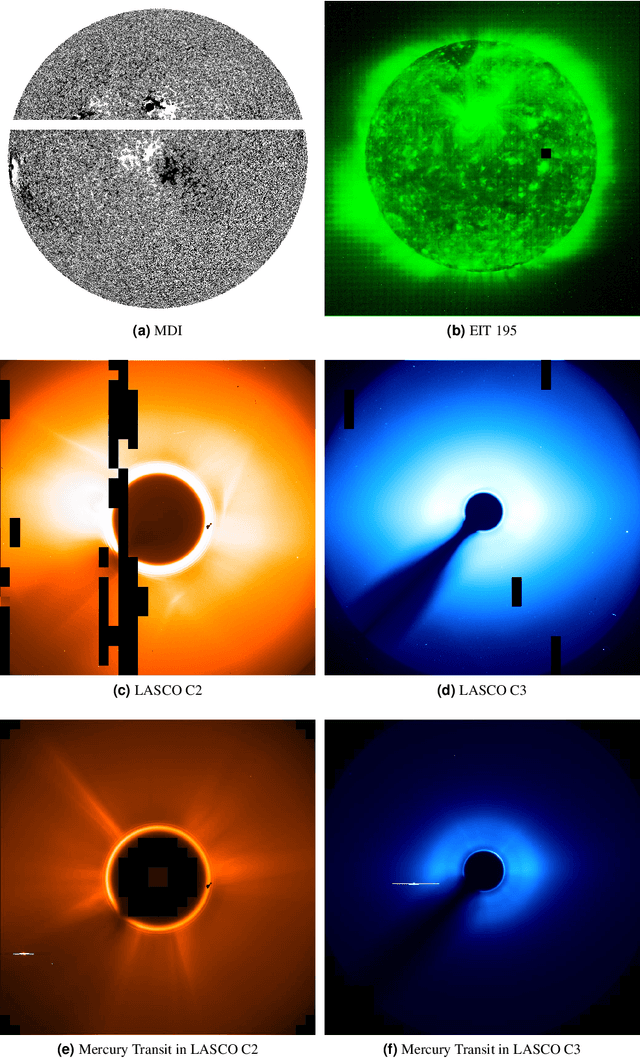

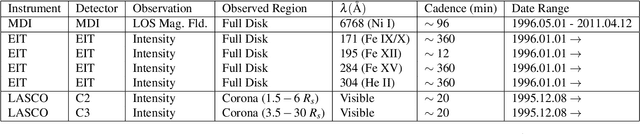

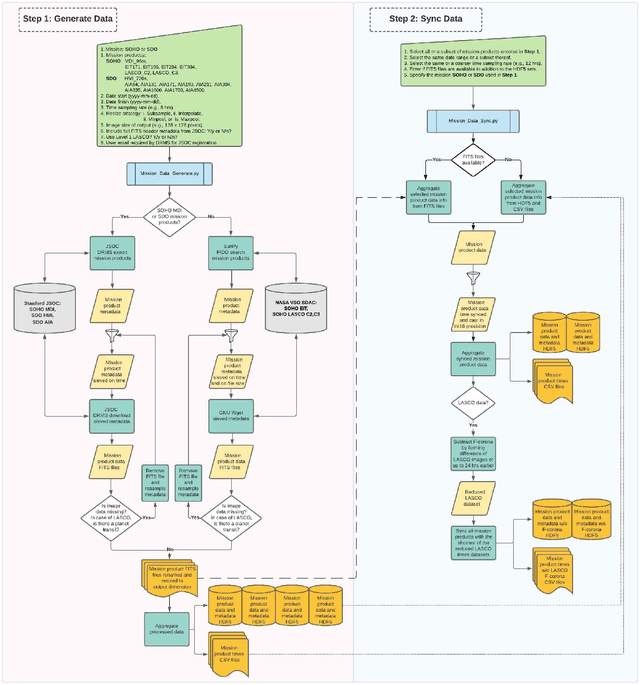

Abstract: We present a Python tool to generate a standard dataset from solar images that allows for user-defined selection criteria and a range of pre-processing steps. Our Python tool works with all image products from both the Solar and Heliospheric Observatory (SoHO) and Solar Dynamics Observatory (SDO) missions. We discuss a dataset produced from the SoHO mission's multi-spectral images that is free of missing or corrupt data as well as planetary transits in coronagraph images, and is temporally synced, making it ready for input to a machine learning system. Machine-learning-ready images are a valuable resource for the community because they can be used, for example, for forecasting space weather parameters. We illustrate the use of this data with a 3-5 day-ahead forecast of the north-south component of the interplanetary magnetic field (IMF) observed at Lagrange point one (L1). For this use case, we apply a deep convolutional neural network (CNN) to a subset of the full SoHO dataset and compare with baseline results from a Gaussian Naive Bayes classifier.

Next generation particle precipitation: Mesoscale prediction through machine learning (a case study and framework for progress)

Nov 19, 2020

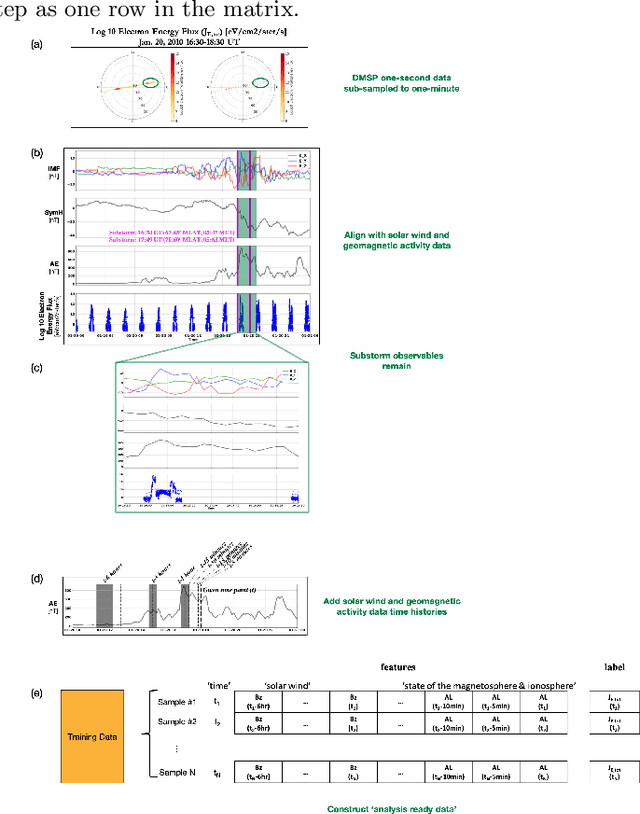

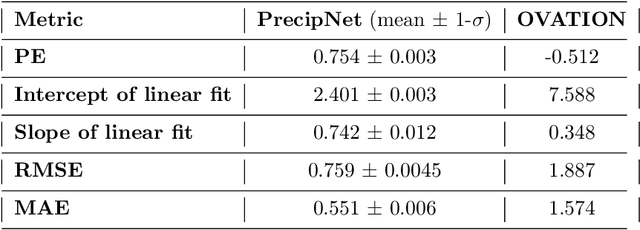

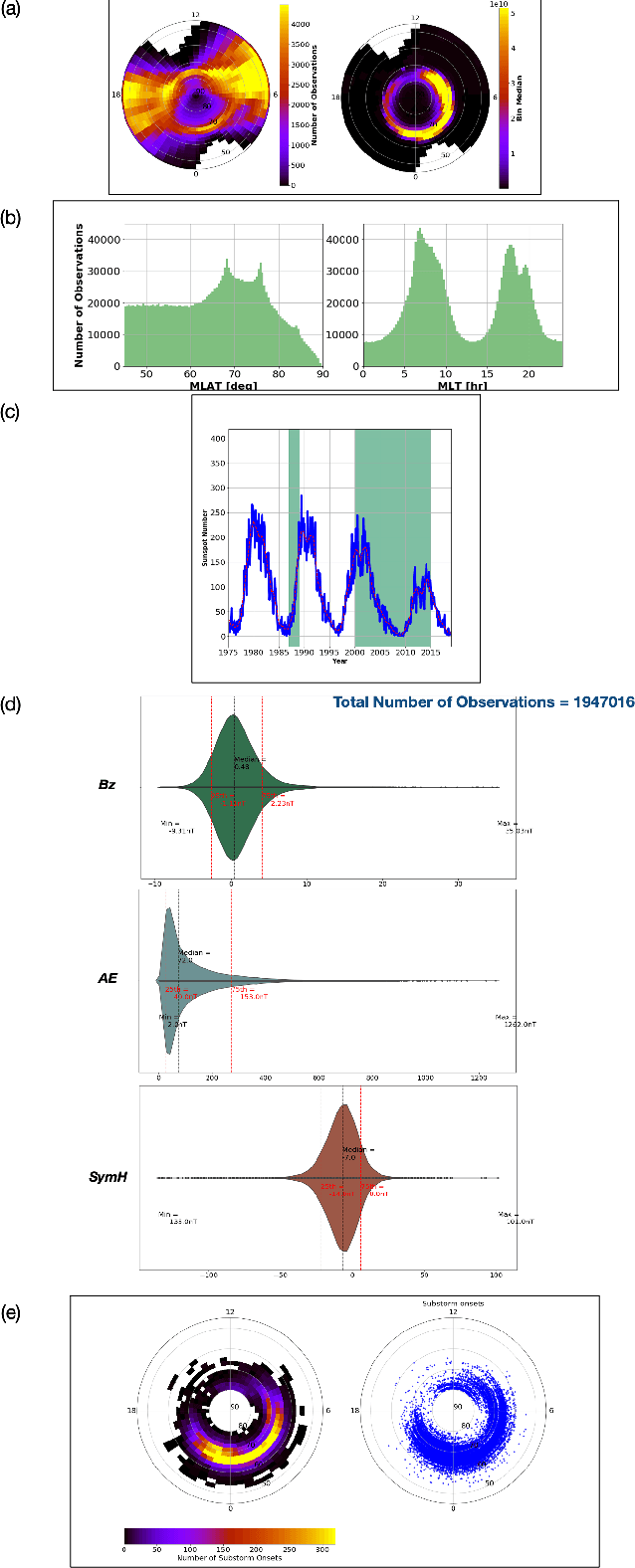

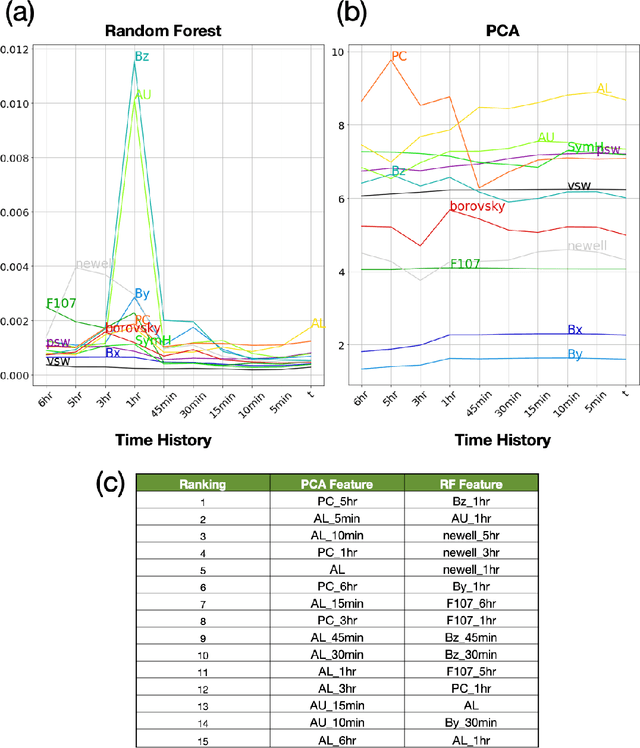

Abstract: We advance the modeling capability of electron particle precipitation from the magnetosphere to the ionosphere through a new database and use of machine learning tools to gain utility from those data. We have compiled, curated, analyzed, and made available a new and more capable database of particle precipitation data that includes 51 satellite years of Defense Meteorological Satellite Program (DMSP) observations temporally aligned with solar wind and geomagnetic activity data. The new total electron energy flux particle precipitation nowcast model, a neural network called PrecipNet, takes advantage of increased expressive power afforded by machine learning approaches to appropriately utilize diverse information from the solar wind and geomagnetic activity and, importantly, their time histories. With a more capable representation of the organizing parameters and the target electron energy flux observations, PrecipNet achieves a >50% reduction in errors from a current state-of-the-art model (OVATION Prime), better captures the dynamic changes of the auroral flux, and provides evidence that it can capably reconstruct mesoscale phenomena. We create and apply a new framework for space weather model evaluation that consolidates previous guidance from across the solar-terrestrial research community. The research approach and results are representative of the 'new frontier' of space weather research at the intersection of traditional and data science-driven discovery and provide a foundation for future efforts.

Machine Learning in Heliophysics and Space Weather Forecasting: A White Paper of Findings and Recommendations

Jun 22, 2020

Abstract: The authors of this white paper met on 16-17 January 2020 at the New Jersey Institute of Technology, Newark, NJ, for a 2-day workshop that brought together a group of heliophysicists, data providers, expert modelers, and computer/data scientists. Their objective was to discuss critical developments and prospects of the application of machine and/or deep learning techniques for data analysis, modeling and forecasting in Heliophysics, and to shape a strategy for further developments in the field. The workshop combined a set of plenary sessions featuring invited introductory talks interleaved with a set of open discussion sessions. The outcome of the discussion is encapsulated in this white paper that also features a top-level list of recommendations agreed by participants.

Estimation of Accurate and Calibrated Uncertainties in Deterministic models

Mar 11, 2020

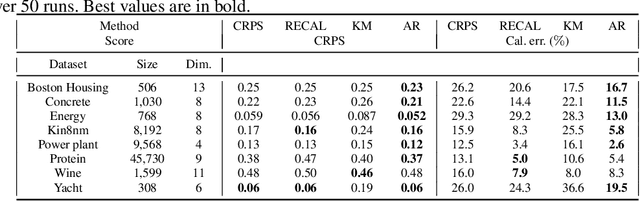

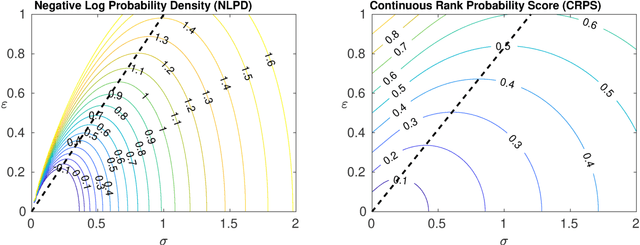

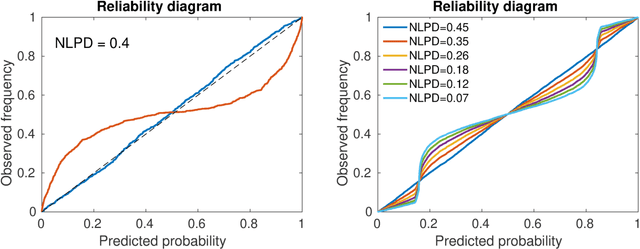

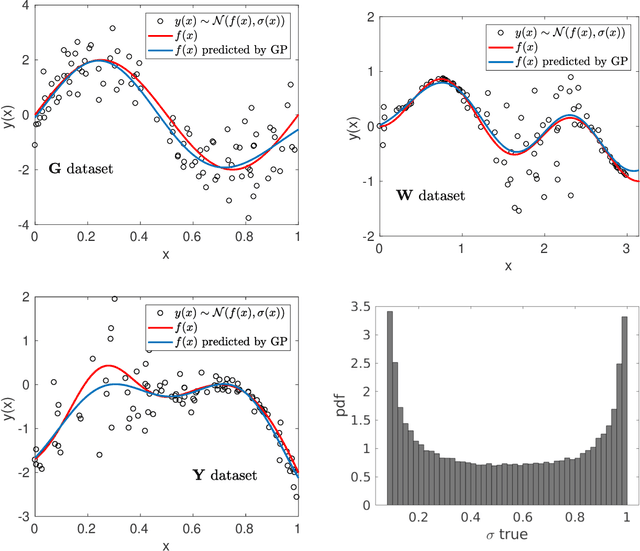

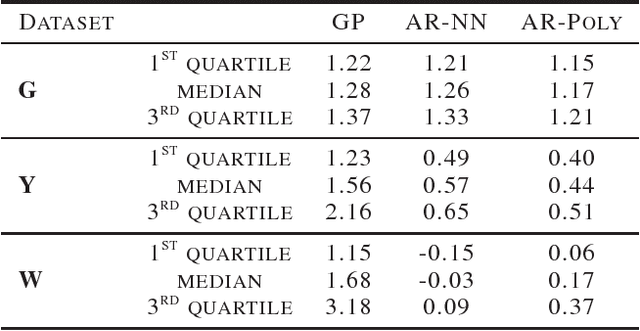

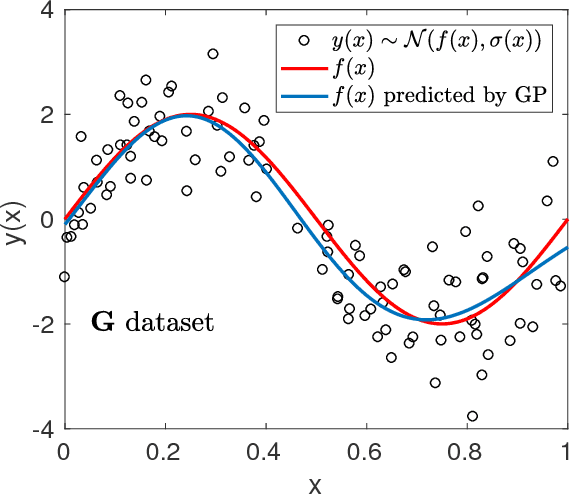

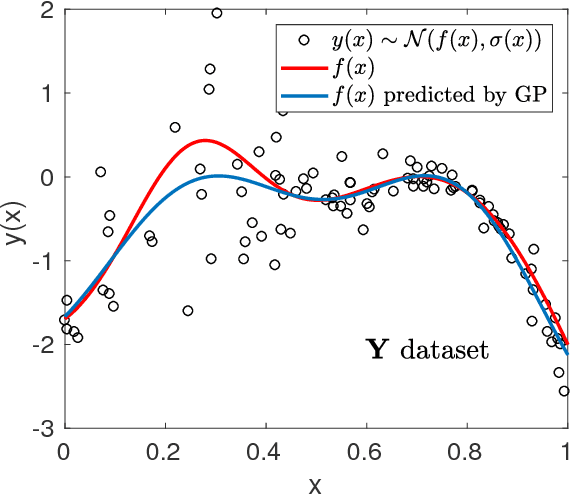

Abstract: In this paper we focus on the problem of assigning uncertainties to single-point predictions generated by a deterministic model that outputs a continuous variable. This problem applies to any state-of-the-art physics or engineering model whose computational cost does not readily allow running ensembles and estimating the uncertainty associated with single-point predictions. Essentially, we devise a method to easily transform a deterministic prediction into a probabilistic one. We show that to do so, one has to compromise between the accuracy and the reliability (calibration) of such a probabilistic model. Hence, we introduce a cost function that encodes their trade-off. We use the Continuous Ranked Probability Score to measure accuracy and we derive an analytic formula for the reliability, in the case of forecasts of continuous scalar variables expressed in terms of Gaussian distributions. The new Accuracy-Reliability cost function is then used to estimate the input-dependent variance, given a black-box mean function, by solving a two-objective optimization problem. The simple philosophy behind this strategy is that predictions based on the estimated variances should not only be accurate, but also reliable (i.e. statistically consistent with observations). Conversely, early works based on the minimization of classical cost functions, such as the negative log probability density, cannot simultaneously enforce both accuracy and reliability. We show several examples both with synthetic data, where the underlying hidden noise can accurately be recovered, and with large real-world datasets.
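As an illustration of the accuracy term mentioned in the abstract, the Continuous Ranked Probability Score has a well-known closed form when the forecast is a Gaussian with mean `mu` and standard deviation `sigma`. The sketch below is not taken from the paper: the function name `gaussian_crps` and its argument names are assumptions made for this example, and the reliability term of the Accuracy-Reliability cost function is not reproduced here.

```python
import math


def gaussian_crps(mu, sigma, y):
    """CRPS of a Gaussian forecast N(mu, sigma^2) against observation y.

    Uses the standard closed form
        CRPS = sigma * [ z * (2*Phi(z) - 1) + 2*phi(z) - 1/sqrt(pi) ],
    with z = (y - mu) / sigma, phi the standard normal pdf and Phi its cdf.
    Lower values indicate a more accurate probabilistic forecast.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (y - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))


if __name__ == "__main__":
    # Hypothetical numbers: a deterministic prediction of 1.0, an observed
    # value of 1.3, and three candidate standard deviations.
    for sigma in (0.05, 0.3, 3.0):
        print(sigma, gaussian_crps(1.0, sigma, 1.3))
```

For a fixed prediction error, the score is large both when `sigma` is far too small and when it is far too large, which is why it can serve as the accuracy objective when estimating an input-dependent variance.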

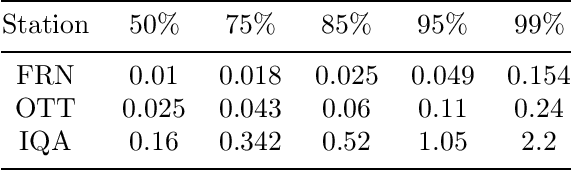

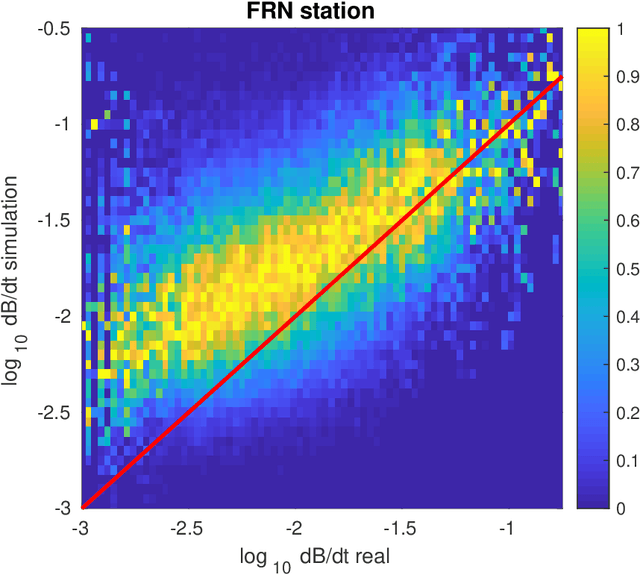

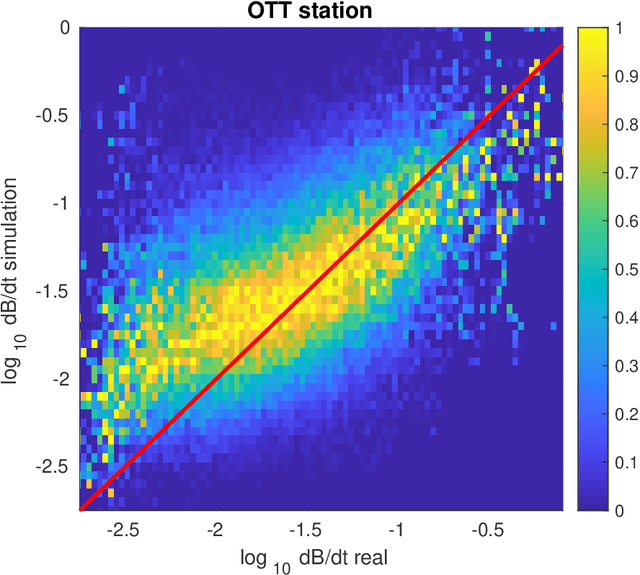

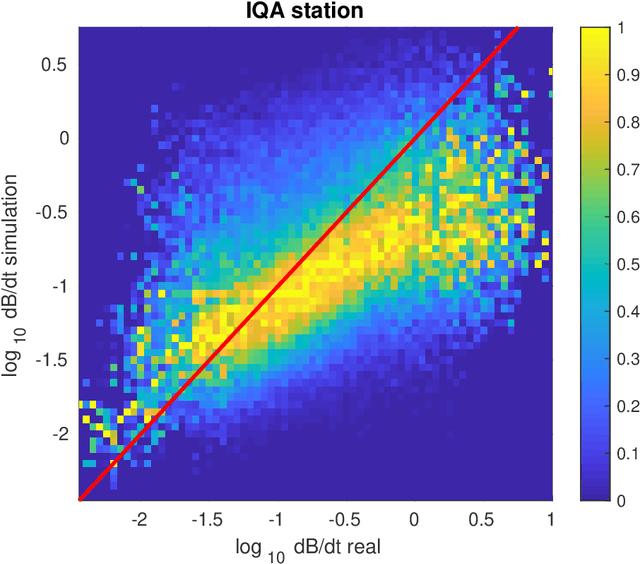

A gray-box model for a probabilistic estimate of regional ground magnetic perturbations: Enhancing the NOAA operational Geospace model with machine learning

Dec 02, 2019

Abstract: We present a novel algorithm that predicts the probability that the time derivative of the horizontal component of the ground magnetic field $dB/dt$ exceeds a specified threshold at a given location. This quantity provides important information that is physically relevant to Geomagnetically Induced Currents (GIC), which are electric currents induced by sudden changes of the Earth's magnetic field due to Space Weather events. The model follows a 'gray-box' approach by combining the output of a physics-based model with a machine learning approach. Specifically, we use the University of Michigan's Geospace model, which is operational at the NOAA Space Weather Prediction Center, with a boosted ensemble of classification trees. We discuss in detail the issue of combining a large dataset of ground-based measurements ($\sim$ 20 years) with a limited set of simulation runs ($\sim$ 2 years) by developing a surrogate model for the years in which simulation runs are not available. We also discuss the problem of re-calibrating the output of the decision tree to obtain reliable probabilities. The performance of the model is assessed by typical metrics for probabilistic forecasts: Probability of Detection and False Detection, True Skill Score, Heidke Skill Score, and Receiver Operating Characteristic curve.
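The skill metrics listed in the abstract are usually computed from a 2x2 contingency table obtained by thresholding the forecast probability. The following is a minimal sketch using the standard textbook definitions; the function name `skill_scores`, the argument names, and the counts in the usage lines are hypothetical and are not taken from the paper.

```python
def skill_scores(hits, false_alarms, misses, correct_negatives):
    """Return POD, POFD, TSS and HSS from a 2x2 contingency table.

    hits              : event forecast and observed
    false_alarms      : event forecast but not observed
    misses            : event observed but not forecast
    correct_negatives : event neither forecast nor observed
    """
    a, b, c, d = hits, false_alarms, misses, correct_negatives
    if a + c == 0 or b + d == 0:
        raise ValueError("need at least one observed event and one non-event")
    pod = a / (a + c)    # Probability of Detection
    pofd = b / (b + d)   # Probability of False Detection
    tss = pod - pofd     # True Skill Score
    # Heidke Skill Score: accuracy relative to random chance.
    # The denominator is zero only for degenerate tables, which the
    # check above already excludes unless every forecast is identical.
    hss_den = (a + c) * (c + d) + (a + b) * (b + d)
    hss = 2.0 * (a * d - b * c) / hss_den if hss_den else 0.0
    return {"POD": pod, "POFD": pofd, "TSS": tss, "HSS": hss}


if __name__ == "__main__":
    # Hypothetical counts for one station and one dB/dt threshold.
    print(skill_scores(hits=40, false_alarms=10, misses=20, correct_negatives=130))
```

Sweeping the probability threshold and plotting POD against POFD for each resulting table traces out the Receiver Operating Characteristic curve.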

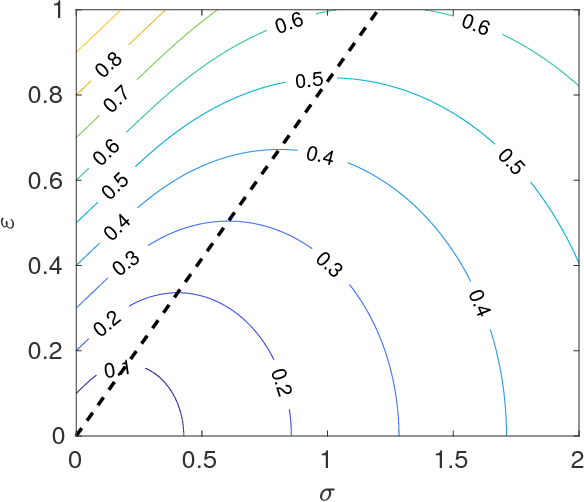

Accuracy-Reliability Cost Function for Empirical Variance Estimation

Mar 12, 2018

Abstract: In this paper we focus on the problem of assigning uncertainties to single-point predictions. We introduce a cost function that encodes the trade-off between accuracy and reliability in probabilistic forecasts. We derive an analytic formula for the case of forecasts of continuous scalar variables expressed in terms of Gaussian distributions. The Accuracy-Reliability cost function can be used to empirically estimate the variance in heteroskedastic regression problems (input-dependent noise), by solving a two-objective optimization problem. The simple philosophy behind this strategy is that predictions based on the estimated variances should be both accurate and reliable (i.e. statistically consistent with observations). We show several examples with synthetic data, where the underlying hidden noise function can be accurately recovered, both in one- and multi-dimensional problems. The method is implemented in practice with a neural network and, in the one-dimensional case, with a simple polynomial fit.
