Z. Huang

Graph Cut-guided Maximal Coding Rate Reduction for Learning Image Embedding and Clustering

Dec 25, 2024

Abstract: In the era of pre-trained models, the image clustering task is usually addressed in two stages: a) producing features from pre-trained vision models; and b) finding clusters from the pre-trained features. However, these two stages are often considered separately or learned by different paradigms, leading to suboptimal clustering performance. In this paper, we propose a unified framework, termed graph Cut-guided Maximal Coding Rate Reduction (CgMCR$^2$), for jointly learning the structured embeddings and the clustering. Specifically, we integrate an efficient clustering module into the principled framework for learning structured representations: the clustering module provides partition information to guide the cluster-wise compression, and the learned embeddings are in turn aligned to desired geometric structures, which helps yield more accurate partitions. We conduct extensive experiments on both standard and out-of-domain image datasets, and the experimental results validate the effectiveness of our approach.

* 24 pages, 9 figures, accepted in ACCV2024
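
For orientation, the coding rate reduction that the framework's name refers to can be sketched in a few lines of NumPy. This is a minimal sketch of the standard MCR$^2$ objective evaluated with hard cluster labels, not the paper's graph cut-guided method; the function names, the `eps` default, and the toy data are assumptions made for illustration.

```python
import numpy as np

def coding_rate(Z, eps=0.5):
    """Coding rate R(Z) = 1/2 * logdet(I + d / (n * eps^2) * Z Z^T) for Z of shape (d, n)."""
    d, n = Z.shape
    _, logdet = np.linalg.slogdet(np.eye(d) + (d / (n * eps**2)) * Z @ Z.T)
    return 0.5 * logdet

def rate_reduction(Z, labels, eps=0.5):
    """Delta R = R(Z) minus the size-weighted sum of per-cluster coding rates."""
    d, n = Z.shape
    labels = np.asarray(labels)
    compressed = 0.0
    for k in np.unique(labels):
        Zk = Z[:, labels == k]          # columns assigned to cluster k
        nk = Zk.shape[1]
        compressed += (nk / n) * coding_rate(Zk, eps)
    return coding_rate(Z, eps) - compressed

# Toy example (hypothetical data): two clusters lying on orthogonal directions.
Z = np.array([[1.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 1.0]])
delta_good = rate_reduction(Z, [0, 0, 1, 1])   # partition matches the structure
delta_none = rate_reduction(Z, [0, 0, 0, 0])   # single cluster: no reduction
```

A partition that matches the geometric structure of the embeddings gives a positive rate reduction, while placing every sample in one cluster gives zero, which is why partition information can guide the compression term.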

Study of Robust Direction Finding Based on Joint Sparse Representation

May 27, 2024

Abstract: Standard Direction of Arrival (DOA) estimation methods are typically derived based on the Gaussian noise assumption, making them highly sensitive to outliers. Therefore, in the presence of impulsive noise, the performance of these methods may significantly deteriorate. In this paper, we model impulsive noise as Gaussian noise mixed with sparse outliers. By exploiting their statistical differences, we propose a novel DOA estimation method based on sparse signal recovery (SSR). Furthermore, to address the issue of grid mismatch, we utilize an alternating optimization approach that relies on the estimated outlier matrix and the on-grid DOA estimates to obtain the off-grid DOA estimates. Simulation results demonstrate that the proposed method exhibits robustness against large outliers.
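
The noise model named in the abstract, Gaussian noise mixed with sparse outliers, can be illustrated with a short simulation. This is only a sketch of that decomposition under assumed parameters; the function name, the outlier probability, the scales, and the array dimensions are all hypothetical and are not taken from the paper, and the sketch does not implement the proposed DOA estimator.

```python
import numpy as np

def impulsive_noise(shape, sigma=0.1, outlier_prob=0.05, outlier_scale=10.0, seed=0):
    """Return (noise, gaussian, outliers) with noise = gaussian + outliers."""
    rng = np.random.default_rng(seed)
    # Dense, low-variance complex Gaussian component.
    gaussian = sigma * (rng.standard_normal(shape)
                        + 1j * rng.standard_normal(shape)) / np.sqrt(2)
    # Sparse, high-magnitude component: nonzero only where the mask is True.
    mask = rng.random(shape) < outlier_prob
    outliers = np.zeros(shape, dtype=complex)
    count = int(mask.sum())
    outliers[mask] = outlier_scale * (rng.standard_normal(count)
                                      + 1j * rng.standard_normal(count)) / np.sqrt(2)
    return gaussian + outliers, gaussian, outliers

# Hypothetical setting: 8 sensors, 200 snapshots.
noise, gaussian, outliers = impulsive_noise((8, 200))
outlier_fraction = np.count_nonzero(outliers) / outliers.size
```

Because the outlier matrix is mostly zero while the Gaussian part is dense and small, the two components differ statistically, which is the property a sparse-recovery formulation can exploit.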

Accurate and confident prediction of electron beam longitudinal properties using spectral virtual diagnostics

Sep 27, 2020

Abstract: Longitudinal phase space (LPS) provides critical information about electron beam dynamics for various scientific applications. For example, it can give insight into the high-brightness X-ray radiation from a free electron laser. Existing diagnostics are invasive and often cannot operate at the required resolution. In this work, we present a machine learning-based Virtual Diagnostic (VD) tool to accurately predict the LPS for every shot using spectral information collected non-destructively from the radiation of a relativistic electron beam. We demonstrate the tool's accuracy for three different case studies with experimental or simulated data. For each case, we introduce a method to increase the confidence in the VD tool. We anticipate that the spectral VD will improve the setup and understanding of experimental configurations at DOE's user facilities, as well as data sorting and analysis. The spectral VD can provide confident knowledge of the longitudinal bunch properties at the next generation of high-repetition-rate linear accelerators while reducing the load on data storage, readout, and streaming requirements.

A gray-box model for a probabilistic estimate of regional ground magnetic perturbations: Enhancing the NOAA operational Geospace model with machine learning

Dec 02, 2019

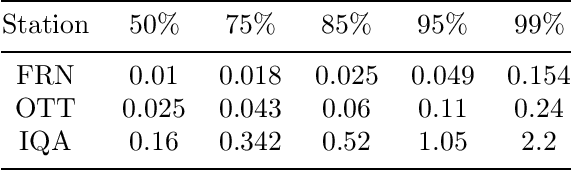

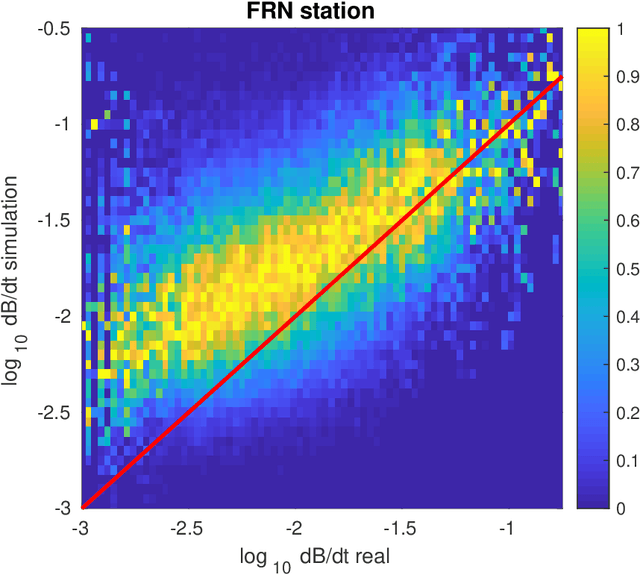

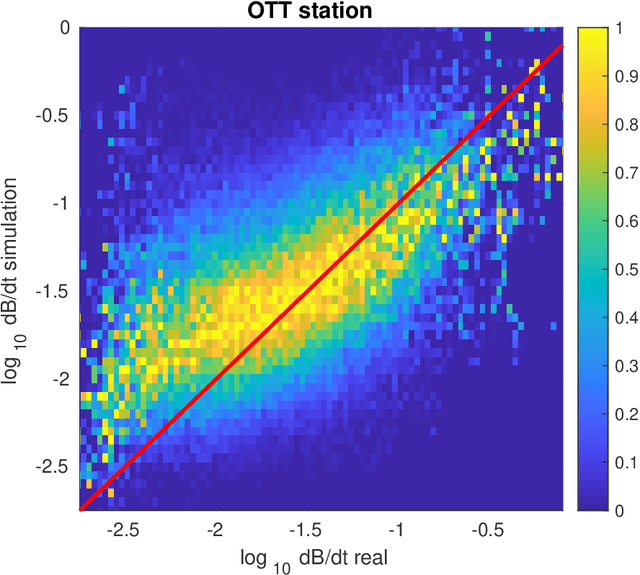

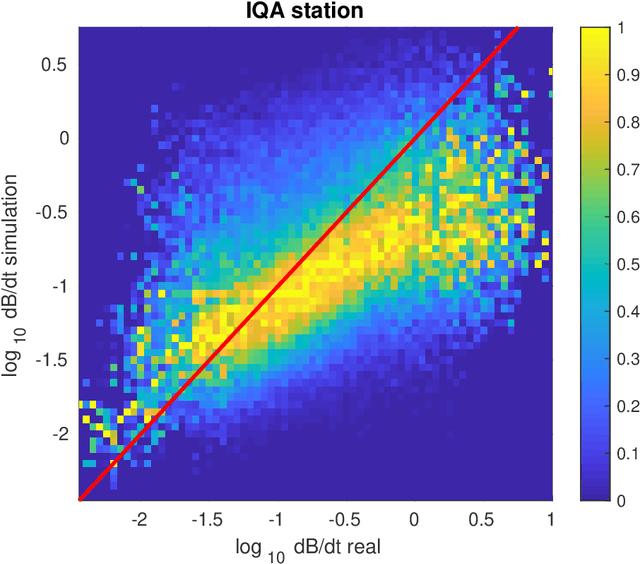

Abstract: We present a novel algorithm that predicts the probability that the time derivative of the horizontal component of the ground magnetic field, $dB/dt$, exceeds a specified threshold at a given location. This quantity provides important information that is physically relevant to Geomagnetically Induced Currents (GIC), which are electric currents induced by sudden changes of the Earth's magnetic field due to Space Weather events. The model follows a 'gray-box' approach by combining the output of a physics-based model with a machine learning approach. Specifically, we use the University of Michigan's Geospace model, which is operational at the NOAA Space Weather Prediction Center, with a boosted ensemble of classification trees. We discuss in detail the issue of combining a large dataset of ground-based measurements ($\sim$ 20 years) with a limited set of simulation runs ($\sim$ 2 years) by developing a surrogate model for the years in which simulation runs are not available. We also discuss the problem of re-calibrating the output of the decision tree to obtain reliable probabilities. The performance of the model is assessed by typical metrics for probabilistic forecasts: Probability of Detection and False Detection, True Skill Score, Heidke Skill Score, and Receiver Operating Characteristic curve.
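
The threshold-based skill metrics listed in the abstract have standard definitions in terms of a 2x2 contingency table, which can be sketched as follows. The function name and the example counts are hypothetical and are not results from the paper; the sketch assumes the forecast probabilities have already been converted to yes/no predictions at some threshold.

```python
def forecast_scores(tp, fn, fp, tn):
    """Skill metrics from a 2x2 contingency table of binary forecasts.

    tp: hits, fn: misses, fp: false alarms, tn: correct rejections.
    """
    pod = tp / (tp + fn)            # Probability of Detection
    pofd = fp / (fp + tn)           # Probability of False Detection
    tss = pod - pofd                # True Skill Score
    # Heidke Skill Score: accuracy relative to random chance.
    hss = 2.0 * (tp * tn - fp * fn) / (
        (tp + fn) * (fn + tn) + (tp + fp) * (fp + tn)
    )
    return {"POD": pod, "POFD": pofd, "TSS": tss, "HSS": hss}

# Hypothetical counts for illustration only.
scores = forecast_scores(tp=30, fn=10, fp=20, tn=140)
```

Sweeping the decision threshold and plotting POD against POFD at each value traces out the Receiver Operating Characteristic curve.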
