Aleix Martinez

Supervision-free Vision-Language Alignment

Jan 08, 2025

Abstract:Vision-language models (VLMs) have demonstrated remarkable potential in integrating visual and linguistic information, but their performance is often constrained by the need for extensive, high-quality image-text training data. Curation of these image-text pairs is both time-consuming and computationally expensive. To address this challenge, we introduce SVP (Supervision-free Visual Projection), a novel framework that enhances vision-language alignment without relying on curated data or preference annotation. SVP leverages self-captioning and a pre-trained grounding model as a feedback mechanism to elicit latent information in VLMs. We evaluate our approach across six key areas: captioning, referring, visual question answering, multitasking, hallucination control, and object recall. Results demonstrate significant improvements, including a 14% average improvement in captioning tasks, up to 12% increase in object recall, and substantial reduction in hallucination rates. Notably, a small VLM using SVP achieves hallucination reductions comparable to a model five times larger, while a VLM with initially poor referring capabilities more than doubles its performance, approaching parity with a model twice its size.

Structural Similarity: When to Use Deep Generative Models on Imbalanced Image Dataset Augmentation

Mar 08, 2023

Abstract:Improving performance on an imbalanced training set is one of the main challenges in Machine Learning today. One way to augment and thus re-balance an image dataset is to use existing deep generative models, such as class-conditional Generative Adversarial Networks (cGAN) or Diffusion Models, to synthesize images for each tail class. Our experiments on imbalanced image dataset classification show that the validation accuracy improvement from such a re-balancing method is related to the image similarity between different classes. Thus, to quantify this image dataset class similarity, we propose a measurement called Super-Sub Class Structural Similarity (SSIM-supSubCls) based on Structural Similarity (SSIM). A deep generative model data augmentation classification (GM-augCls) pipeline is also provided to verify that this metric correlates with the accuracy enhancement. We further quantify the relationship between them, discovering that the accuracy improvement decays exponentially with respect to SSIM-supSubCls values.

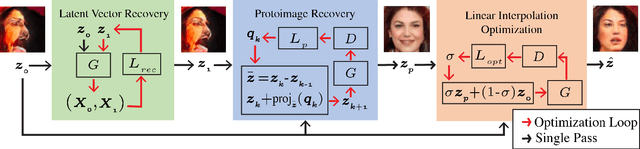

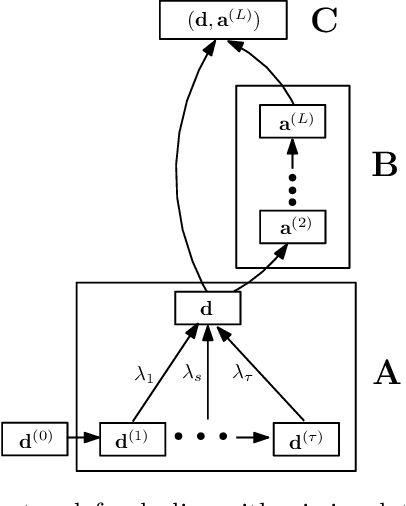

Near Perfect GAN Inversion

Feb 23, 2022

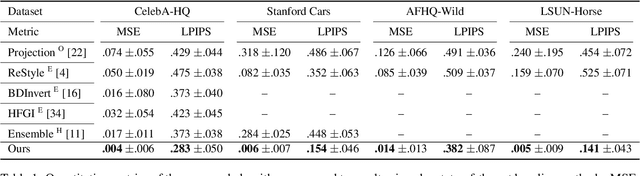

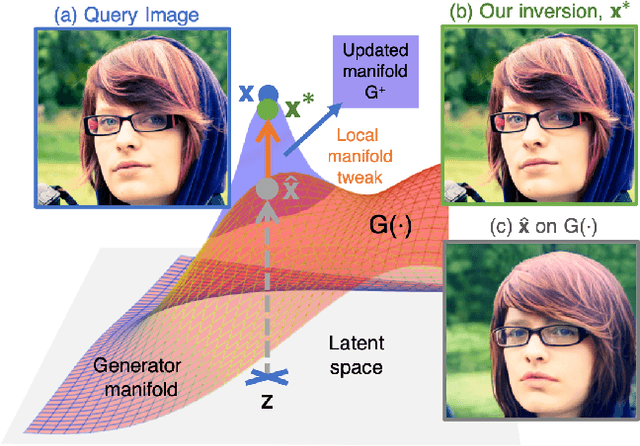

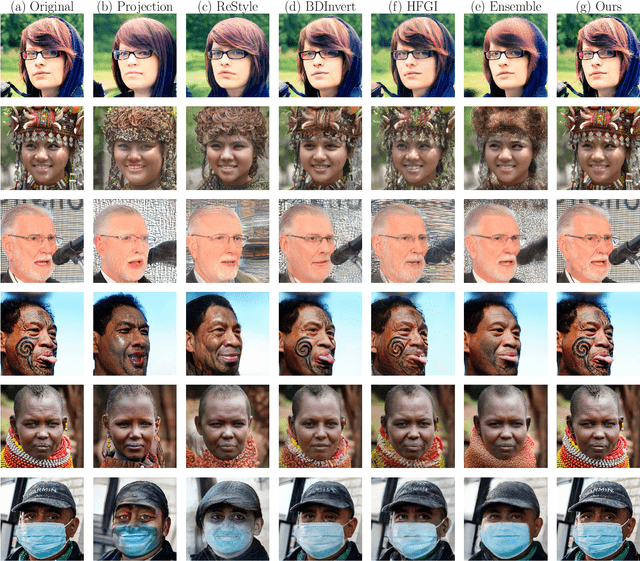

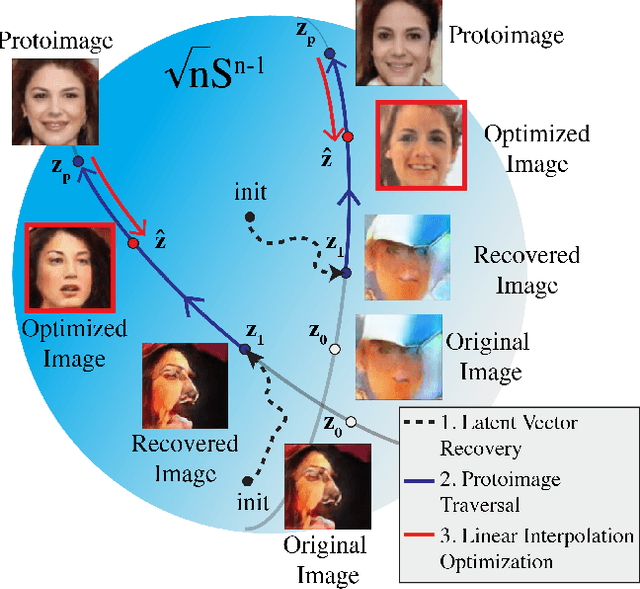

Abstract:To edit a real photo using Generative Adversarial Networks (GANs), we need a GAN inversion algorithm to identify the latent vector that perfectly reproduces it. Unfortunately, whereas existing inversion algorithms can synthesize images similar to real photos, they cannot generate the identical clones needed in most applications. Here, we derive an algorithm that achieves near perfect reconstructions of photos. Rather than relying on encoder- or optimization-based methods to find an inverse mapping on a fixed generator $G(\cdot)$, we derive an approach to locally adjust $G(\cdot)$ to more optimally represent the photos we wish to synthesize. This is done by locally tweaking the learned mapping $G(\cdot)$ s.t. $\| {\bf x} - G({\bf z}) \|<\epsilon$, with ${\bf x}$ the photo we wish to reproduce, ${\bf z}$ the latent vector, $\|\cdot\|$ an appropriate metric, and $\epsilon > 0$ a small scalar. We show that this approach can not only produce synthetic images that are indistinguishable from the real photos we wish to replicate, but that these images are readily editable. We demonstrate the effectiveness of the derived algorithm on a variety of datasets including human faces, animals, and cars, and discuss its importance for diversity and inclusion.

When do GANs replicate? On the choice of dataset size

Feb 23, 2022

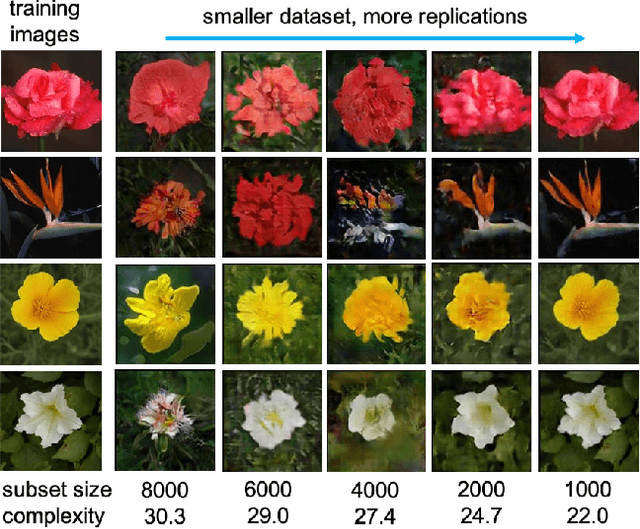

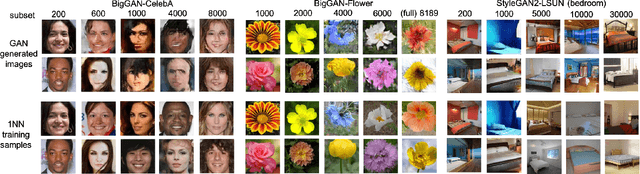

Abstract:Do GANs replicate training images? Previous studies have shown that GANs do not seem to replicate training data without significant change in the training procedure. This has led to a line of research on the exact conditions needed for GANs to overfit to the training data. Although a number of factors have been theoretically or empirically identified, the effect of dataset size and complexity on GAN replication is still unknown. With empirical evidence from BigGAN and StyleGAN2, on the datasets CelebA, Flower and LSUN-bedroom, we show that dataset size and complexity play an important role in GAN replication and in the perceptual quality of the generated images. We further quantify this relationship, discovering that replication percentage decays exponentially with respect to dataset size and complexity, with a shared decaying factor across GAN-dataset combinations. Meanwhile, the perceptual image quality follows a U-shape trend w.r.t. dataset size. This finding leads to a practical tool for one-shot estimation of the minimal dataset size needed to prevent GAN replication, which can be used to guide dataset construction and selection.
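
The exponential relationship described in this abstract can be illustrated with a small curve fit. The sketch below is not the authors' tool: it assumes a single-variable form `r(n) = a * exp(-b * n)` for replication percentage `r` against dataset size `n`, ignores dataset complexity, and uses synthetic numbers invented purely for illustration. The function names `fit_exponential_decay` and `min_size_for_target` are hypothetical.

```python
import numpy as np

def fit_exponential_decay(x, y):
    """Fit y = a * exp(-b * x) by least squares on log(y); returns (a, b)."""
    # log(y) = log(a) - b * x is linear in x, so a degree-1 polyfit suffices.
    slope, intercept = np.polyfit(x, np.log(y), 1)
    return np.exp(intercept), -slope

def min_size_for_target(a, b, target):
    """Smallest x at which the fitted curve falls to `target`."""
    # Solve a * exp(-b * x) = target for x.
    return np.log(a / target) / b

# Synthetic data (an assumption, not results from the paper):
# dataset sizes in arbitrary units and a noiseless decaying replication rate.
sizes = np.array([1.0, 2.0, 4.0, 8.0, 16.0])
replication = 80.0 * np.exp(-0.3 * sizes)

a, b = fit_exponential_decay(sizes, replication)
n_min = min_size_for_target(a, b, target=1.0)
```

With real measurements the fit would be noisy, and a weighted or nonlinear fit might be preferable to the log-linear one used here.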

Rayleigh EigenDirections (REDs): GAN latent space traversals for multidimensional features

Jan 25, 2022

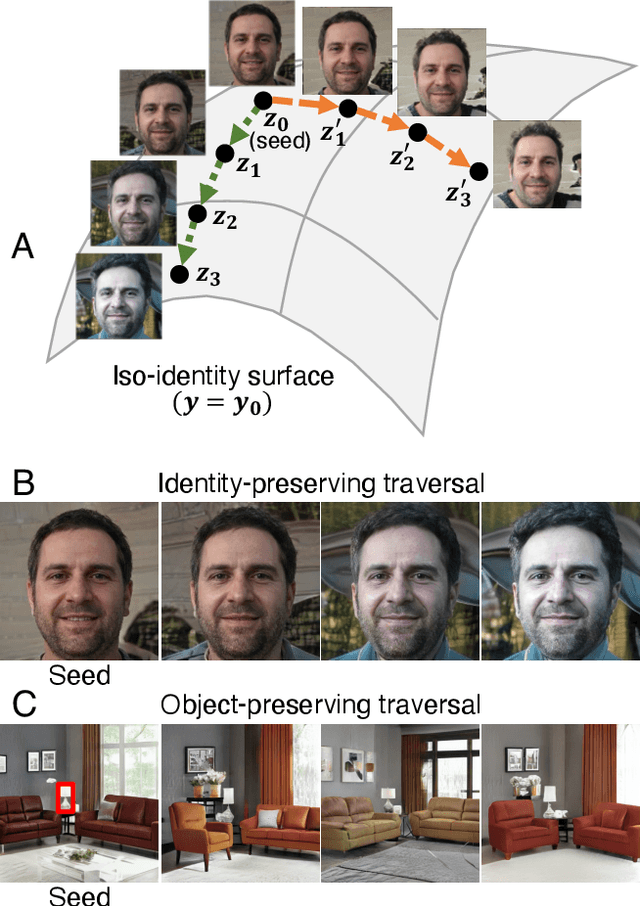

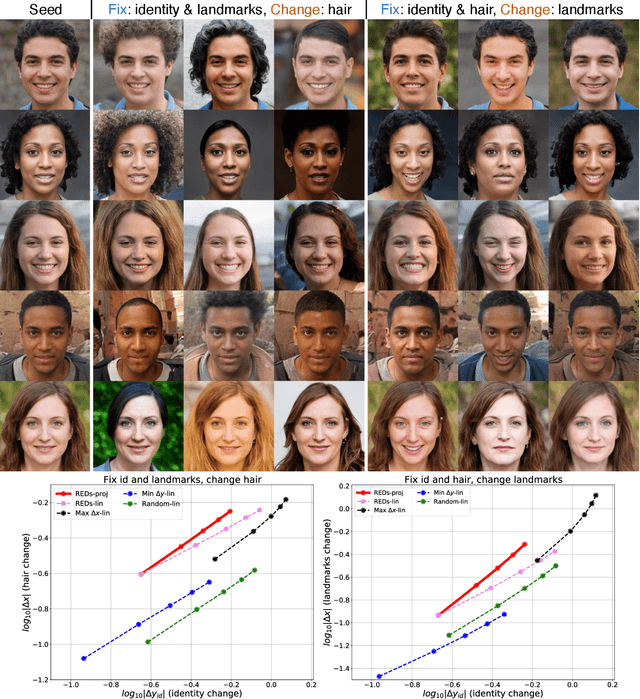

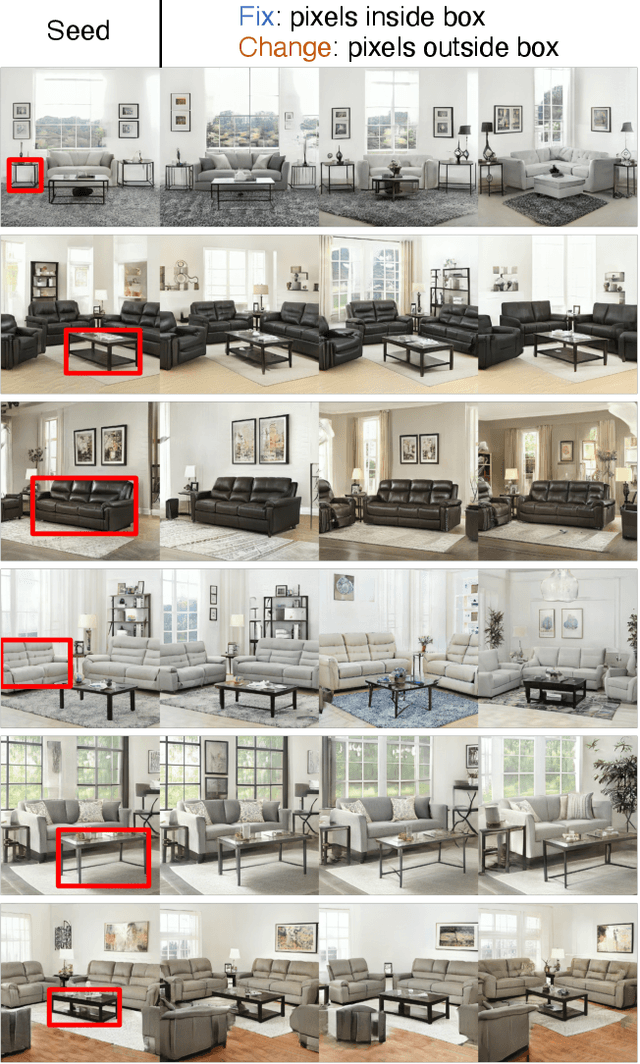

Abstract:We present a method for finding paths in a deep generative model's latent space that can maximally vary one set of image features while holding others constant. Crucially, unlike past traversal approaches, ours can manipulate multidimensional features of an image such as facial identity and pixels within a specified region. Our method is principled and conceptually simple: optimal traversal directions are chosen by maximizing differential changes to one feature set such that changes to another set are negligible. We show that this problem is nearly equivalent to one of Rayleigh quotient maximization, and provide a closed-form solution to it based on solving a generalized eigenvalue equation. We use repeated computations of the corresponding optimal directions, which we call Rayleigh EigenDirections (REDs), to generate appropriately curved paths in latent space. We empirically evaluate our method using StyleGAN2 on two image domains: faces and living rooms. We show that our method is capable of controlling various multidimensional features out of the scope of previous latent space traversal methods: face identity, spatial frequency bands, pixels within a region, and the appearance and position of an object. Our work suggests that a wealth of opportunities lies in the local analysis of the geometry and semantics of latent spaces.
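
To make the Rayleigh quotient idea concrete, here is a minimal NumPy sketch under stated assumptions. It is not the paper's implementation. It assumes that local changes to the two feature sets are described by Jacobians `J_f` (features to vary) and `J_g` (features to hold fixed) with respect to the latent vector, and that a direction `d` is chosen to maximize `||J_f d||^2 / (||J_g d||^2 + eps * ||d||^2)`. The regularizer `eps`, the random Jacobians, and the function names are all hypothetical.

```python
import numpy as np

def top_generalized_eigvec(A, B):
    """Maximize (d^T A d) / (d^T B d) for symmetric A and positive-definite B."""
    L = np.linalg.cholesky(B)          # B = L L^T
    L_inv = np.linalg.inv(L)
    C = L_inv @ A @ L_inv.T            # reduces A d = lam B d to C y = lam y
    C = (C + C.T) / 2                  # symmetrize against round-off
    eigvals, eigvecs = np.linalg.eigh(C)   # eigenvalues in ascending order
    d = L_inv.T @ eigvecs[:, -1]       # map the top eigenvector back
    return d / np.linalg.norm(d), eigvals[-1]

def rayleigh_direction(J_f, J_g, eps=1e-3):
    """Direction that changes f the most per unit change in g (assumed form)."""
    A = J_f.T @ J_f
    B = J_g.T @ J_g + eps * np.eye(J_g.shape[1])  # eps keeps B invertible
    return top_generalized_eigvec(A, B)

def quotient(J_f, J_g, d, eps=1e-3):
    """Value of the assumed Rayleigh quotient at direction d."""
    return (d @ J_f.T @ J_f @ d) / (d @ J_g.T @ J_g @ d + eps * (d @ d))

# Random stand-in Jacobians for an 8-dimensional latent space.
rng = np.random.default_rng(0)
J_f = rng.standard_normal((5, 8))
J_g = rng.standard_normal((3, 8))
d, lam = rayleigh_direction(J_f, J_g)
```

Repeating this computation at each new latent point and taking a small step along `d` would trace out a curved path, in the spirit of the repeated computations the abstract describes.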

Diamond in the rough: Improving image realism by traversing the GAN latent space

Apr 12, 2021

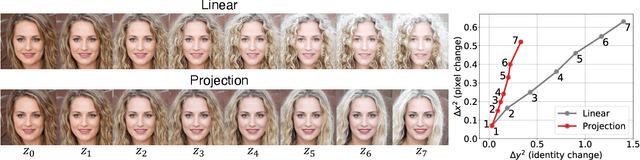

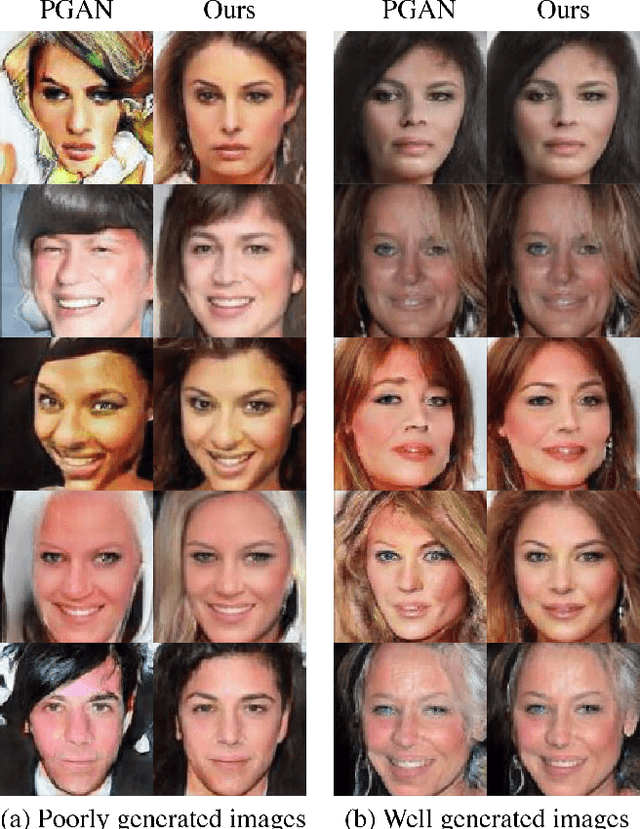

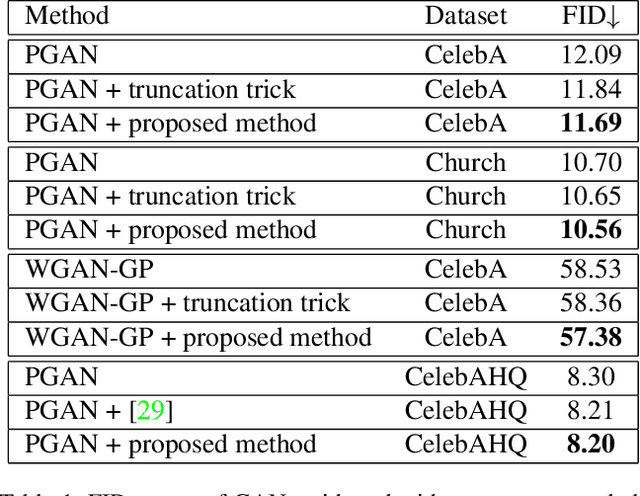

Abstract:In just a few years, the photo-realism of images synthesized by Generative Adversarial Networks (GANs) has gone from somewhat reasonable to almost perfect largely by increasing the complexity of the networks, e.g., adding layers, intermediate latent spaces, style-transfer parameters, etc. This trajectory has led many of the state-of-the-art GANs to be inaccessibly large, disengaging many without large computational resources. Recognizing this, we explore a method for squeezing additional performance from existing, low-complexity GANs. Formally, we present an unsupervised method to find a direction in the latent space that aligns with improved photo-realism. Our approach leaves the network unchanged while enhancing the fidelity of the generated image. We use a simple generator inversion to find the direction in the latent space that results in the smallest change in the image space. Leveraging the learned structure of the latent space, we find moving in this direction corrects many image artifacts and brings the image into greater realism. We verify our findings qualitatively and quantitatively, showing an improvement in Frechet Inception Distance (FID) exists along our trajectory which surpasses the original GAN and other approaches including a supervised method. We expand further and provide an optimization method to automatically select latent vectors along the path that balance the variation and realism of samples. We apply our method to several diverse datasets and three architectures of varying complexity to illustrate the generalizability of our approach. By expanding the utility of low-complexity and existing networks, we hope to encourage the democratization of GANs.

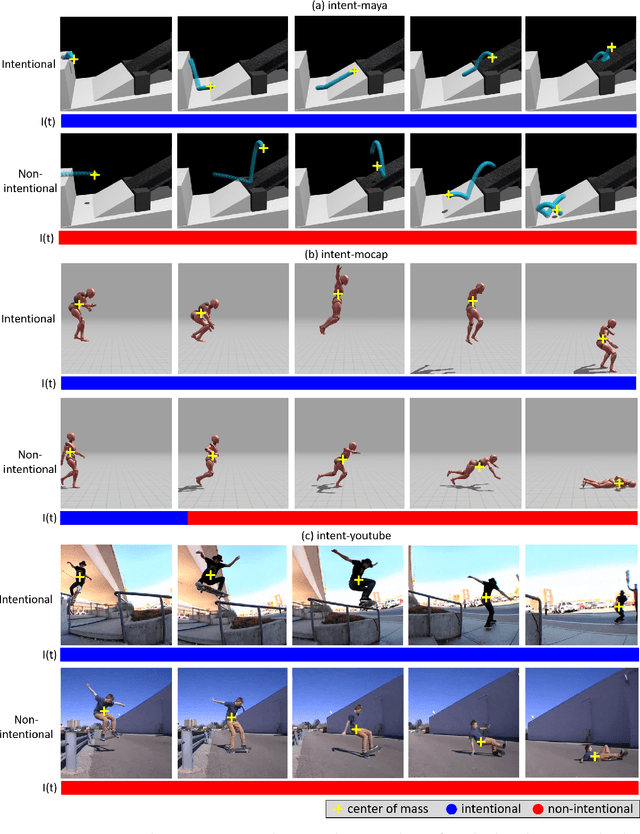

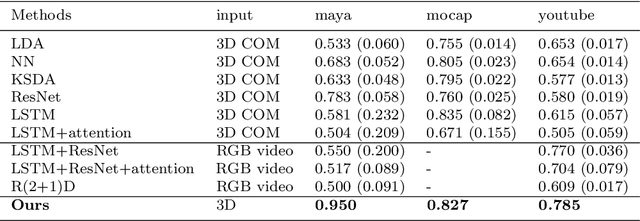

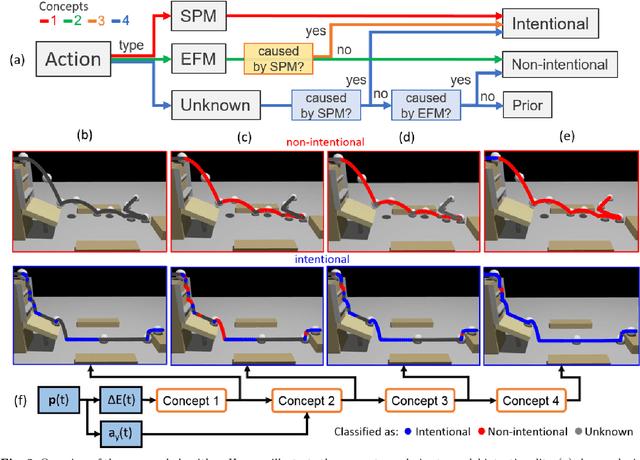

Adding Knowledge to Unsupervised Algorithms for the Recognition of Intent

Nov 12, 2020

Abstract:The performance of computer vision algorithms is near or superior to that of humans in visual problems including object recognition (especially those of fine-grained categories), segmentation, and 3D object reconstruction from 2D views. Humans are, however, capable of higher-level image analyses. A clear example, involving theory of mind, is our ability to determine whether a perceived behavior or action was performed intentionally or not. In this paper, we derive an algorithm that can infer whether the behavior of an agent in a scene is intentional or unintentional based on its 3D kinematics, using the knowledge of self-propelled motion, Newtonian motion and their relationship. We show how the addition of this basic knowledge leads to a simple, unsupervised algorithm. To test the derived algorithm, we constructed three dedicated datasets ranging from abstract geometric animation to realistic videos of agents performing intentional and non-intentional actions. Experiments on these datasets show that our algorithm can recognize whether an action is intentional or not, even without training data. The performance is quantitatively comparable to various supervised baselines, with qualitatively sensible intentionality segmentation.

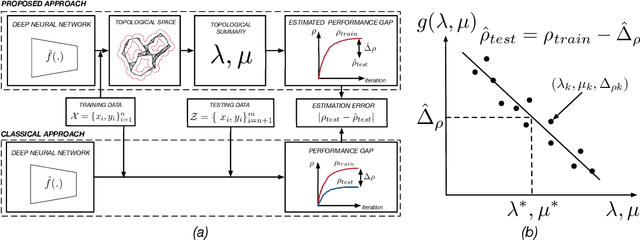

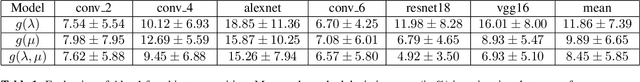

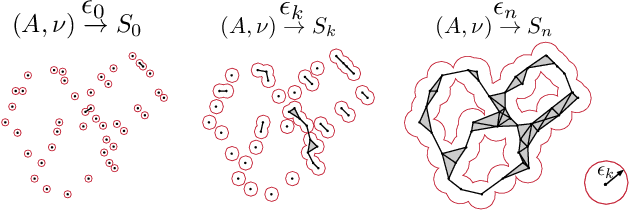

Computing the Testing Error without a Testing Set

May 01, 2020

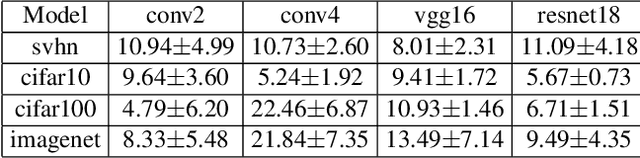

Abstract:Deep Neural Networks (DNNs) have revolutionized computer vision. We now have DNNs that achieve top (performance) results in many problems, including object recognition, facial expression analysis, and semantic segmentation, to name but a few. The design of the DNNs that achieve top results is, however, non-trivial and mostly done by trial-and-error. That is, typically, researchers will derive many DNN architectures (i.e., topologies) and then test them on multiple datasets. However, there are no guarantees that the selected DNN will perform well in the real world. One can use a testing set to estimate the performance gap between the training and testing sets, but avoiding overfitting-to-the-testing-data is almost impossible. Using a sequestered testing dataset may address this problem, but this requires a constant update of the dataset, a very expensive venture. Here, we derive an algorithm to estimate the performance gap between training and testing that does not require any testing dataset. Specifically, we derive a number of persistent topology measures that identify when a DNN is learning to generalize to unseen samples. This allows us to compute the DNN's testing error on unseen samples, even when we do not have access to them. We provide extensive experimental validation on multiple networks and datasets to demonstrate the feasibility of the proposed approach.

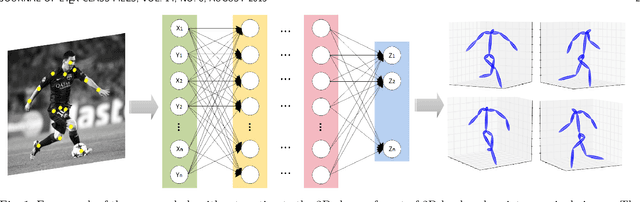

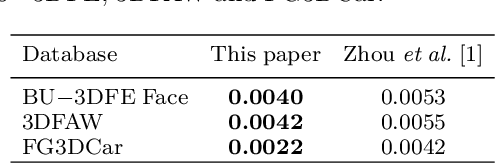

A Simple, Fast and Highly-Accurate Algorithm to Recover 3D Shape from 2D Landmarks on a Single Image

Sep 28, 2016

Abstract:Three-dimensional shape reconstruction of 2D landmark points on a single image is a hallmark of human vision, but is a task that has proven difficult for computer vision algorithms. We define a feed-forward deep neural network algorithm that can reconstruct 3D shapes from 2D landmark points almost perfectly (i.e., with extremely small reconstruction errors), even when these 2D landmarks are from a single image. Our experimental results show an improvement of up to two-fold over state-of-the-art computer vision algorithms; 3D shape reconstruction of human faces is given at a reconstruction error < .004, cars at .0022, human bodies at .022, and highly-deformable flags at an error of .0004. Our algorithm was also a top performer at the 2016 3D Face Alignment in the Wild Challenge competition (done in conjunction with the European Conference on Computer Vision, ECCV) that required the reconstruction of 3D face shape from a single image. The derived algorithm can be trained in a couple of hours and testing runs at more than 1,000 frames/s on an i7 desktop. We also present an innovative data augmentation approach that allows us to train the system efficiently with a small number of samples. The system is also robust to noise (e.g., imprecise landmark points) and missing data (e.g., occluded or undetected landmark points).
