A. Murat Tekalp

Padé Neurons for Efficient Neural Models

Jan 07, 2026

Abstract: Neural networks commonly employ the McCulloch-Pitts neuron model, which is a linear model followed by a point-wise non-linear activation. Various researchers have already advanced inherently non-linear neuron models, such as quadratic neurons, generalized operational neurons, generative neurons, and super neurons, which offer stronger non-linearity compared to point-wise activation functions. In this paper, we introduce a novel and better non-linear neuron model called Padé neurons (Paons), inspired by Padé approximants. Paons offer several advantages, such as diversity of non-linearity, since each Paon learns a different non-linear function of its inputs, and layer efficiency, since Paons provide stronger non-linearity in far fewer layers compared to piecewise linear approximation. Furthermore, Paons include all previously proposed neuron models as special cases, so any neuron model in any network can be replaced by Paons. We note that there has been a proposal to employ the Padé approximation as a generalized point-wise activation function, which is fundamentally different from our model. To validate the efficacy of Paons, in our experiments, we replace classic neurons in some well-known neural image super-resolution, compression, and classification models based on the ResNet architecture with Paons. Our comprehensive experimental results and analyses demonstrate that neural models built with Paons provide performance better than or equal to that of their classic counterparts with a smaller number of layers. The PyTorch implementation code for Paon is open-sourced at https://github.com/onur-keles/Paon.
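To make the idea of a rational (Padé-style) neuron concrete, here is a minimal sketch. It is not the implementation from the repository above. It assumes a neuron of the form P(x)/Q(x), where P and Q are weighted sums of element-wise powers of the input, and it assumes the denominator is kept at or above 1 with an absolute value to avoid poles. The function name `pade_neuron` and the weight layout are hypothetical.

```python
def pade_neuron(x, num_weights, den_weights, bias=0.0):
    """Rational neuron sketch: P(x) / Q(x) for an input vector x.

    num_weights[i - 1] and den_weights[j - 1] are weight vectors applied to
    the element-wise powers x**i and x**j (hypothetical layout).
    """
    def weighted_power_sum(weights):
        total = 0.0
        for power, w in enumerate(weights, start=1):
            total += sum(wk * (xk ** power) for wk, xk in zip(w, x))
        return total

    numerator = bias + weighted_power_sum(num_weights)
    # Assumption: 1 + |.| keeps the denominator >= 1, so no poles occur.
    denominator = 1.0 + abs(weighted_power_sum(den_weights))
    return numerator / denominator


x = [0.5, -1.0]
# Degree-1 numerator, empty denominator: reduces to a linear neuron w.x + b.
linear_out = pade_neuron(x, num_weights=[[2.0, 1.0]], den_weights=[], bias=0.1)
# Degree-2 numerator, degree-1 denominator: a genuinely rational response.
rational_out = pade_neuron(
    x,
    num_weights=[[2.0, 1.0], [0.5, 0.5]],
    den_weights=[[1.0, 1.0]],
    bias=0.1,
)
```

The first call illustrates the special-case property mentioned in the abstract: with a first-degree numerator and no denominator terms, the sketch collapses to the classic linear neuron.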

Edit2Restore: Few-Shot Image Restoration via Parameter-Efficient Adaptation of Pre-trained Editing Models

Jan 06, 2026

Abstract: Image restoration has traditionally required training specialized models on thousands of paired examples per degradation type. We challenge this paradigm by demonstrating that powerful pre-trained text-conditioned image editing models can be efficiently adapted for multiple restoration tasks through parameter-efficient fine-tuning with remarkably few examples. Our approach fine-tunes LoRA adapters on FLUX.1 Kontext, a state-of-the-art 12B parameter flow matching model for image-to-image translation, using only 16-128 paired images per task, guided by simple text prompts that specify the restoration operation. Unlike existing methods that train specialized restoration networks from scratch with thousands of samples, we leverage the rich visual priors already encoded in large-scale pre-trained editing models, dramatically reducing data requirements while maintaining high perceptual quality. A single unified LoRA adapter, conditioned on task-specific text prompts, effectively handles multiple degradations including denoising, deraining, and dehazing. Through comprehensive ablation studies, we analyze: (i) the impact of training set size on restoration quality, (ii) trade-offs between task-specific versus unified multi-task adapters, (iii) the role of text encoder fine-tuning, and (iv) zero-shot baseline performance. While our method prioritizes perceptual quality over pixel-perfect reconstruction metrics like PSNR/SSIM, our results demonstrate that pre-trained image editing models, when properly adapted, offer a compelling and data-efficient alternative to traditional image restoration approaches, opening new avenues for few-shot, prompt-guided image enhancement. The code to reproduce our results is available at: https://github.com/makinyilmaz/Edit2Restore
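As a rough illustration of why LoRA adapters are parameter-efficient, the sketch below applies the standard low-rank update `W x + scale * B (A x)` to a frozen weight matrix and counts the trainable parameters. The layer sizes and rank are hypothetical and are not taken from FLUX.1 Kontext or from the repository above.

```python
def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(x, W, A, B, scale=1.0):
    """Frozen weight W plus a trainable low-rank update B @ A."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


def lora_trainable_params(d_in, d_out, rank):
    """A is rank x d_in and B is d_out x rank."""
    return rank * (d_in + d_out)


W = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2x2 weight (toy example)
A = [[1.0, 1.0]]               # rank-1 down-projection
B = [[0.5], [0.0]]             # rank-1 up-projection
y = lora_forward([1.0, 2.0], W, A, B)

# Hypothetical layer size: a full update would train d_in * d_out weights.
full = 3072 * 3072
low_rank = lora_trainable_params(3072, 3072, rank=16)
```

For this hypothetical 3072 x 3072 layer, the rank-16 adapter trains roughly 1% of the weights that a full update would.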

Content-Adaptive Inference for State-of-the-art Learned Video Compression

Oct 08, 2025

Abstract: While the BD-rate performance of recent learned video codec models in both low-delay and random-access modes exceeds that of the respective modes of traditional codecs on average over common benchmarks, the performance improvements for individual videos with complex/large motions are much smaller than those for scenes with simple motion. This is related to the inability of a learned encoder model to generalize to motion vector ranges that have not been seen in the training set, which causes loss of performance in the coding of flow fields as well as in frame prediction and coding. As a remedy, we propose a generic (model-agnostic) framework to control the scale of motion vectors in a scene during inference (encoding) to approximately match the range of motion vectors in the test and training videos by adaptively downsampling frames. This results in down-scaled motion vectors enabling: i) better flow estimation and, hence, frame prediction, and ii) more efficient flow compression. We show that the proposed framework for content-adaptive inference improves the BD-rate performance of the already state-of-the-art low-delay video codec DCVC-FM by up to 41% on individual videos without any model fine-tuning. We present ablation studies to show that measures of motion and scene complexity can be used to predict the effectiveness of the proposed framework.
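The core observation is that downsampling a frame by a factor s also shrinks its motion vectors by s. The sketch below shows one simple way to pick a factor so that the scaled motion falls within the range seen in training. The selection rule, the candidate factors, and the name `choose_downsampling_factor` are assumptions for illustration; the abstract does not specify how the framework makes this decision.

```python
def choose_downsampling_factor(max_motion_px, train_max_motion_px, factors=(1, 2, 4)):
    """Return the smallest factor whose scaled motion fits the training range.

    max_motion_px: largest motion magnitude measured in the test video.
    train_max_motion_px: largest motion magnitude seen during training.
    Falls back to the largest candidate when no factor is sufficient.
    """
    for factor in sorted(factors):
        if max_motion_px / factor <= train_max_motion_px:
            return factor
    return max(factors)


# Hypothetical measurements, in pixels per frame.
small_motion = choose_downsampling_factor(20.0, 32.0)
large_motion = choose_downsampling_factor(100.0, 32.0)
```

Preferring the smallest sufficient factor reflects the trade-off that downsampling discards spatial detail, so it should be applied only as much as the motion range requires.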

DiMoSR: Feature Modulation via Multi-Branch Dilated Convolutions for Efficient Image Super-Resolution

May 27, 2025

Abstract: Balancing reconstruction quality versus model efficiency remains a critical challenge in lightweight single image super-resolution (SISR). Despite the prevalence of attention mechanisms in recent state-of-the-art SISR approaches that primarily emphasize or suppress feature maps, alternative architectural paradigms warrant further exploration. This paper introduces DiMoSR (Dilated Modulation Super-Resolution), a novel architecture that enhances feature representation through modulation to complement attention in lightweight SISR networks. The proposed approach leverages multi-branch dilated convolutions to capture rich contextual information over a wider receptive field while maintaining computational efficiency. Experimental results demonstrate that DiMoSR outperforms state-of-the-art lightweight methods across diverse benchmark datasets, achieving superior PSNR and SSIM metrics with comparable or reduced computational complexity. Through comprehensive ablation studies, this work not only validates the effectiveness of DiMoSR but also provides critical insights into the interplay between attention mechanisms and feature modulation to guide future research in efficient network design. The code and model weights to reproduce our results are available at: https://github.com/makinyilmaz/DiMoSR
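The efficiency argument for dilated convolutions can be checked with simple arithmetic: a k x k kernel with dilation d covers d(k - 1) + 1 pixels per side while keeping the same number of weights. The sketch below tabulates this for three branches. The dilation rates and channel count are hypothetical and are not taken from the DiMoSR repository.

```python
def effective_kernel_size(kernel_size, dilation):
    """Side length of the area covered by a dilated kernel."""
    return dilation * (kernel_size - 1) + 1


def conv_weight_count(kernel_size, channels_in, channels_out):
    """Weights in a standard 2D convolution (bias ignored); dilation-independent."""
    return kernel_size * kernel_size * channels_in * channels_out


# Hypothetical branch configuration: 3x3 kernels with dilations 1, 2, 3.
dilations = [1, 2, 3]
coverage = [effective_kernel_size(3, d) for d in dilations]
weights_per_branch = conv_weight_count(3, channels_in=32, channels_out=32)
```

Here the three branches cover 3, 5, and 7 pixels per side, yet each uses the same number of weights as a plain 3 x 3 convolution.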

Exploring Sparsity for Parameter Efficient Fine Tuning Using Wavelets

May 18, 2025

Abstract: Efficiently adapting large foundation models is critical, especially with tight compute and memory budgets. Parameter-Efficient Fine-Tuning (PEFT) methods such as LoRA offer limited granularity and effectiveness in few-parameter regimes. We propose Wavelet Fine-Tuning (WaveFT), a novel PEFT method that learns highly sparse updates in the wavelet domain of residual matrices. WaveFT allows precise control of trainable parameters, offering fine-grained capacity adjustment and excelling with remarkably low parameter counts, potentially far fewer than LoRA's minimum, which is ideal for extreme parameter-efficient scenarios. In order to demonstrate the effect of the wavelet transform, we compare WaveFT with a special case, called SHiRA, that entails applying sparse updates directly in the weight domain. Evaluated on personalized text-to-image generation using Stable Diffusion XL as baseline, WaveFT significantly outperforms LoRA and other PEFT methods, especially at low parameter counts, achieving superior subject fidelity, prompt alignment, and image diversity.
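To see how a sparse update in the wavelet domain differs from a sparse update in the weight domain, consider the sketch below. It assumes a single-level 2D Haar transform with the four subbands stored in the quadrants of the coefficient matrix. The wavelet family, number of levels, and coefficient layout used by WaveFT are not stated in the abstract, so all of these are illustrative assumptions.

```python
def inverse_haar_2d(coeffs):
    """Single-level inverse 2D Haar transform of an N x N matrix (N even).

    Quadrants hold the approximation and the three detail subbands.
    """
    n = len(coeffs)
    half = n // 2
    out = [[0.0] * n for _ in range(n)]
    for i in range(half):
        for j in range(half):
            a = coeffs[i][j]                # approximation
            h = coeffs[i][j + half]         # detail across columns
            v = coeffs[i + half][j]         # detail across rows
            d = coeffs[i + half][j + half]  # diagonal detail
            out[2 * i][2 * j] = (a + h + v + d) / 2
            out[2 * i][2 * j + 1] = (a - h + v - d) / 2
            out[2 * i + 1][2 * j] = (a + h - v - d) / 2
            out[2 * i + 1][2 * j + 1] = (a - h - v + d) / 2
    return out


# One trainable coefficient in the wavelet domain of a 4 x 4 update.
sparse_coeffs = [[0.0] * 4 for _ in range(4)]
sparse_coeffs[0][0] = 2.0
delta_w = inverse_haar_2d(sparse_coeffs)
touched = sum(1 for row in delta_w for value in row if value != 0.0)
```

In this toy case a single trainable coefficient changes a 2 x 2 block of the weight update, whereas a single trainable entry in the weight domain (the SHiRA special case) changes exactly one weight.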

FG-DFPN: Flow Guided Deformable Frame Prediction Network

Mar 14, 2025

Abstract: Video frame prediction remains a fundamental challenge in computer vision with direct implications for autonomous systems, video compression, and media synthesis. We present FG-DFPN, a novel architecture that harnesses the synergy between optical flow estimation and deformable convolutions to model complex spatio-temporal dynamics. By guiding deformable sampling with motion cues, our approach addresses the limitations of fixed-kernel networks when handling diverse motion patterns. The multi-scale design enables FG-DFPN to simultaneously capture global scene transformations and local object movements with remarkable precision. Our experiments demonstrate that FG-DFPN achieves state-of-the-art performance on eight diverse MPEG test sequences, outperforming existing methods by 1 dB PSNR while maintaining competitive inference speeds. The integration of motion cues with adaptive geometric transformations makes FG-DFPN a promising solution for next-generation video processing systems that require high-fidelity temporal predictions. The model and instructions to reproduce our results will be released at: https://github.com/KUIS-AI-Tekalp-Research-Group/frame-prediction

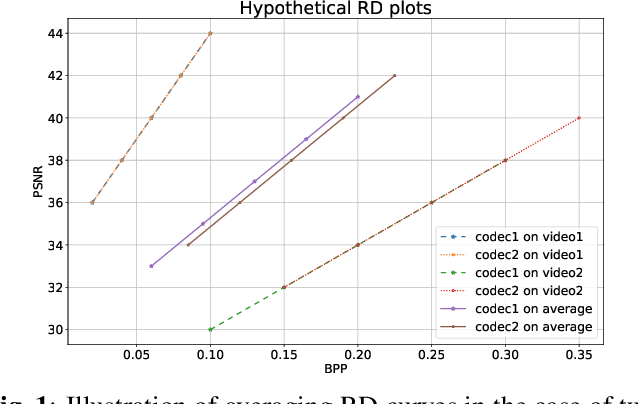

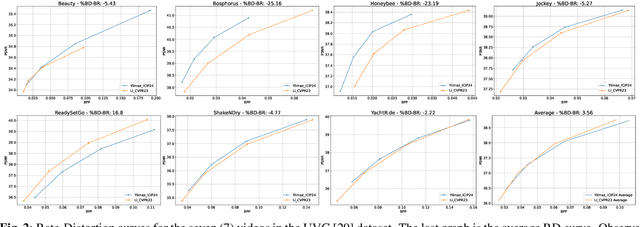

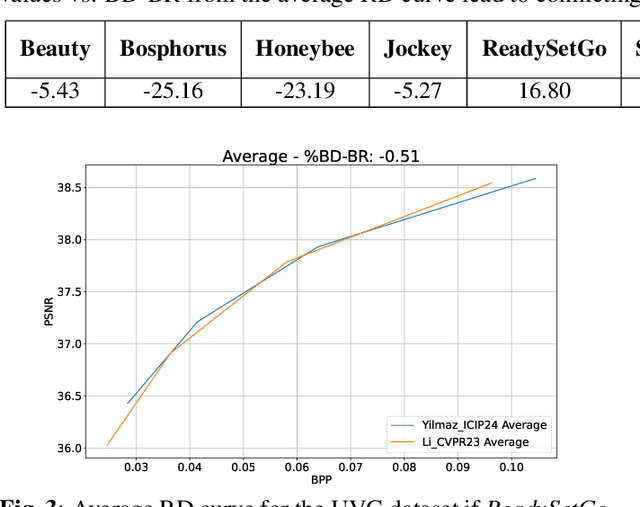

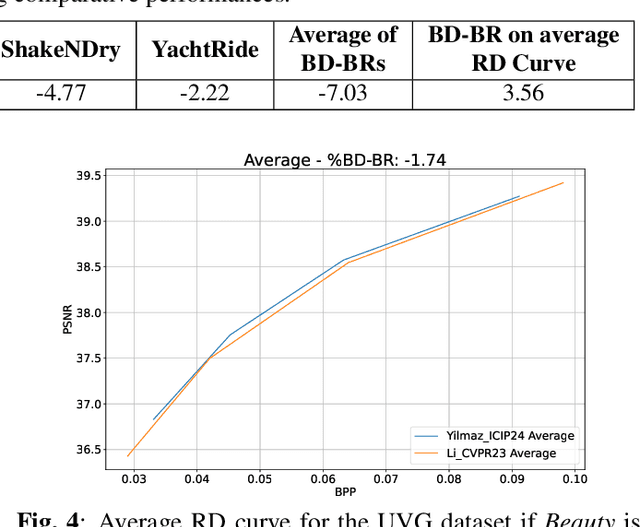

On the Computation of BD-Rate over a Set of Videos for Fair Assessment of Performance of Learned Video Codecs

Sep 13, 2024

Abstract: The Bjøntegaard Delta (BD) measure is widely employed to evaluate and quantify the variations in the rate-distortion (RD) performance across different codecs. Many researchers report the average BD value over multiple videos within a dataset for different codecs. We claim that the current practice in the learned video compression community of computing the average BD value over a dataset based on the average RD curve of multiple videos can lead to misleading conclusions. We show, both by analysis of a simplistic case of linear RD curves and by experimental results with two recent learned video codecs, that averaging RD curves can cause a single video to disproportionately influence the average BD value, especially when the operating bitrate ranges of different codecs do not exactly match. Instead, we advocate calculating the BD measure on a per-video basis, as commonly done by the traditional video compression community, followed by averaging the individual BD values over videos, to provide a fair comparison of learned video codecs. Our experimental results demonstrate that the comparison of two recent learned video codecs is affected by how we evaluate the average BD measure.
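The difference between the two averaging practices can be reproduced with a small sketch. It assumes a simplified BD-rate that uses piecewise-linear interpolation of log-bitrate over PSNR (the usual calculation fits a cubic polynomial or a similar smooth curve instead), and the RD points are made-up numbers chosen only to illustrate the effect, not results from the paper.

```python
import math


def interpolate(x, xs, ys):
    """Piecewise-linear interpolation of y at x; xs must be strictly increasing."""
    for k in range(len(xs) - 1):
        if xs[k] <= x <= xs[k + 1]:
            t = (x - xs[k]) / (xs[k + 1] - xs[k])
            return ys[k] + t * (ys[k + 1] - ys[k])
    raise ValueError("x lies outside the curve")


def bd_rate(anchor, test, samples=200):
    """Average bitrate change (%) of `test` vs `anchor` at equal PSNR.

    Each curve is a list of (bitrate, psnr) points sorted by increasing PSNR.
    """
    a_q = [q for _, q in anchor]
    a_r = [math.log(r) for r, _ in anchor]
    t_q = [q for _, q in test]
    t_r = [math.log(r) for r, _ in test]
    lo, hi = max(a_q[0], t_q[0]), min(a_q[-1], t_q[-1])
    step = (hi - lo) / samples
    total = 0.0
    for k in range(samples):
        q = lo + (k + 0.5) * step  # midpoint sampling of the shared PSNR range
        total += interpolate(q, t_q, t_r) - interpolate(q, a_q, a_r)
    return (math.exp(total / samples) - 1.0) * 100.0


def average_curve(curves):
    """Point-wise average of several RD curves with the same number of points."""
    n = len(curves)
    return [
        (sum(c[i][0] for c in curves) / n, sum(c[i][1] for c in curves) / n)
        for i in range(len(curves[0]))
    ]


# Synthetic data: video 1 is high-bitrate, video 2 is low-bitrate.
anchor_videos = [[(1000, 30), (2000, 33), (4000, 36)], [(100, 38), (200, 40), (400, 42)]]
test_videos = [[(800, 30), (1600, 33), (3200, 36)], [(110, 38), (220, 40), (440, 42)]]

per_video = [bd_rate(a, t) for a, t in zip(anchor_videos, test_videos)]
mean_of_bd = sum(per_video) / len(per_video)
bd_of_mean_curve = bd_rate(average_curve(anchor_videos), average_curve(test_videos))
```

With this synthetic data, the test codec saves 20% on the first video and costs 10% more on the second, so the per-video mean is -5%. The BD value computed from the averaged RD curves is about -17.3%, because the high-bitrate video dominates the averaged bitrates.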

A New Multi-Picture Architecture for Learned Video Deinterlacing and Demosaicing with Parallel Deformable Convolution and Self-Attention Blocks

Apr 19, 2024

Abstract: Despite the fact that real-world video deinterlacing and demosaicing are well-suited to supervised learning from synthetically degraded data because the degradation models are known and fixed, learned video deinterlacing and demosaicing have received much less attention compared to denoising and super-resolution tasks. We propose a new multi-picture architecture for video deinterlacing or demosaicing by aligning multiple supporting pictures with missing data to a reference picture to be reconstructed, benefiting from both local and global spatio-temporal correlations in the feature space using modified deformable convolution blocks and a novel residual efficient top-$k$ self-attention (kSA) block, respectively. Separate reconstruction blocks are used to estimate different types of missing data. Our extensive experimental results, on synthetic or real-world datasets, demonstrate that the proposed novel architecture provides superior results that significantly exceed the state-of-the-art for both tasks in terms of PSNR, SSIM, and perceptual quality. Ablation studies are provided to justify and show the benefit of each novel modification made to the deformable convolution and residual efficient kSA blocks. Code is available at: https://github.com/KUIS-AI-Tekalp-Research-Group/Video-Deinterlacing.
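The general idea behind top-k self-attention is that each query attends only to its k highest-scoring keys instead of all of them. The sketch below shows this generic mechanism with scaled dot-product scores. It is an assumption-laden illustration, not the residual efficient kSA block from the repository above, whose exact design is not described in the abstract.

```python
import math


def topk_attention(queries, keys, values, k):
    """For each query, apply softmax attention over its k best-matching keys only."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, key)) / math.sqrt(dim) for key in keys
        ]
        # Indices of the k largest scores; all other keys are ignored.
        keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
        top = max(scores[i] for i in keep)
        weights = {i: math.exp(scores[i] - top) for i in keep}
        norm = sum(weights.values())
        outputs.append(
            [
                sum(weights[i] * values[i][c] for i in keep) / norm
                for c in range(len(values[0]))
            ]
        )
    return outputs


queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
hard = topk_attention(queries, keys, values, k=1)
dense = topk_attention(queries, keys, values, k=3)
```

Setting k to the number of keys recovers ordinary softmax attention, while k = 1 simply copies the value of the best-matching key.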

Training Transformer Models by Wavelet Losses Improves Quantitative and Visual Performance in Single Image Super-Resolution

Apr 17, 2024

Abstract: Transformer-based models have achieved remarkable results in low-level vision tasks including image super-resolution (SR). However, early Transformer-based approaches that rely on self-attention within non-overlapping windows encounter challenges in acquiring global information. To activate more input pixels globally, hybrid attention models have been proposed. Moreover, training by solely minimizing pixel-wise RGB losses, such as L1, has been found inadequate for capturing essential high-frequency details. This paper presents two contributions: i) We introduce convolutional non-local sparse attention (NLSA) blocks to extend the hybrid transformer architecture in order to further enhance its receptive field. ii) We employ wavelet losses to train Transformer models to improve quantitative and subjective performance. While wavelet losses have been explored previously, showing their power in training Transformer-based SR models is novel. Our experimental results demonstrate that the proposed model provides state-of-the-art PSNR results as well as superior visual performance across various benchmark datasets.
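A wavelet loss compares images subband by subband rather than pixel by pixel, which lets high-frequency errors be weighted explicitly. The sketch below assumes a single-level 2D Haar decomposition and an L1 penalty per subband. The wavelet family, number of levels, and subband weights used in the paper are not given in the abstract, so the choices here are hypothetical.

```python
def haar_subbands(img):
    """Single-level 2D Haar decomposition of an image with even height and width."""
    bands = {"approx": [], "detail_cols": [], "detail_rows": [], "detail_diag": []}
    for i in range(0, len(img), 2):
        for j in range(0, len(img[0]), 2):
            x00, x01 = img[i][j], img[i][j + 1]
            x10, x11 = img[i + 1][j], img[i + 1][j + 1]
            bands["approx"].append((x00 + x01 + x10 + x11) / 2)
            bands["detail_cols"].append((x00 - x01 + x10 - x11) / 2)
            bands["detail_rows"].append((x00 + x01 - x10 - x11) / 2)
            bands["detail_diag"].append((x00 - x01 - x10 + x11) / 2)
    return bands


def wavelet_l1_loss(pred, target, weights=None):
    """Weighted sum of mean absolute errors over the four Haar subbands."""
    if weights is None:
        weights = {name: 1.0 for name in ("approx", "detail_cols", "detail_rows", "detail_diag")}
    p, t = haar_subbands(pred), haar_subbands(target)
    loss = 0.0
    for name, w in weights.items():
        diffs = [abs(a - b) for a, b in zip(p[name], t[name])]
        loss += w * sum(diffs) / len(diffs)
    return loss


target = [[1.0, 0.0], [0.0, 1.0]]   # fine checkerboard texture
blurred = [[0.5, 0.5], [0.5, 0.5]]  # same mean, texture lost
loss = wavelet_l1_loss(blurred, target)
```

In this toy case the blurred prediction matches the target exactly in the approximation subband, so the entire loss comes from the diagonal detail subband, where the lost texture lives.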

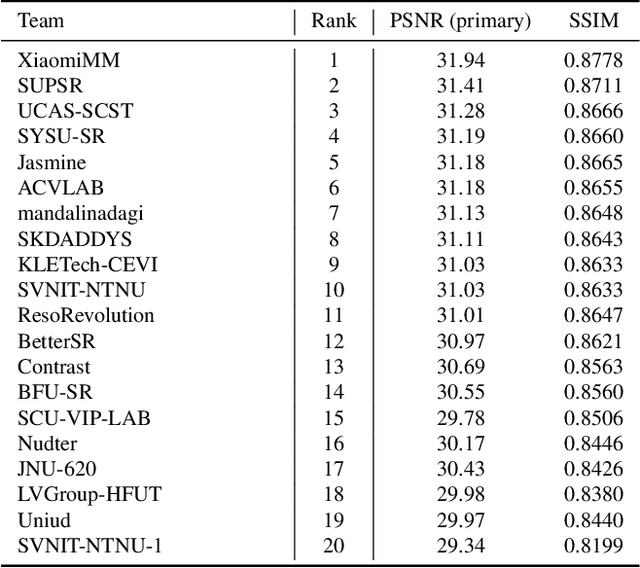

NTIRE 2024 Challenge on Image Super-Resolution (×4): Methods and Results

Apr 15, 2024

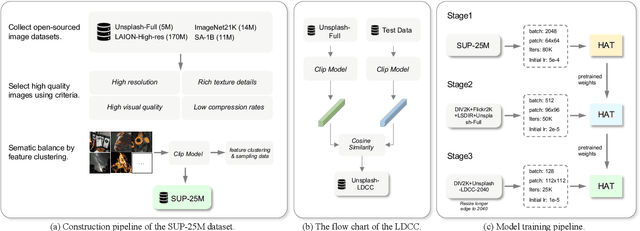

Abstract: This paper reviews the NTIRE 2024 challenge on image super-resolution (×4), highlighting the solutions proposed and the outcomes obtained. The challenge involves generating corresponding high-resolution (HR) images, magnified by a factor of four, from low-resolution (LR) inputs using prior information. The LR images originate from bicubic downsampling degradation. The aim of the challenge is to obtain designs/solutions with the most advanced SR performance, with no constraints on computational resources (e.g., model size and FLOPs) or training data. The track of this challenge assesses performance with the PSNR metric on the DIV2K testing dataset. The competition attracted 199 registrants, with 20 teams submitting valid entries. This collective endeavour not only pushes the boundaries of performance in single-image SR but also offers a comprehensive overview of current trends in this field.
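For reference, PSNR is defined as 10 log10(MAX^2 / MSE), where MAX is the peak pixel value and MSE is the mean squared error between the reference and the restored image. The sketch below computes it for 8-bit grayscale images stored as nested lists. Evaluation details specific to the challenge, such as border cropping or colour-channel handling, are not stated in the abstract and are not modelled here.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    ref = [p for row in reference for p in row]
    out = [p for row in restored for p in row]
    mse = sum((a - b) ** 2 for a, b in zip(ref, out)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [[100, 100], [100, 100]]
restored = [[110, 110], [110, 110]]  # every pixel off by 10, so MSE = 100
value = psnr(reference, restored)
```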
