A. Enis Cetin

Wildfire Detection Via Transfer Learning: A Survey

Jun 21, 2023

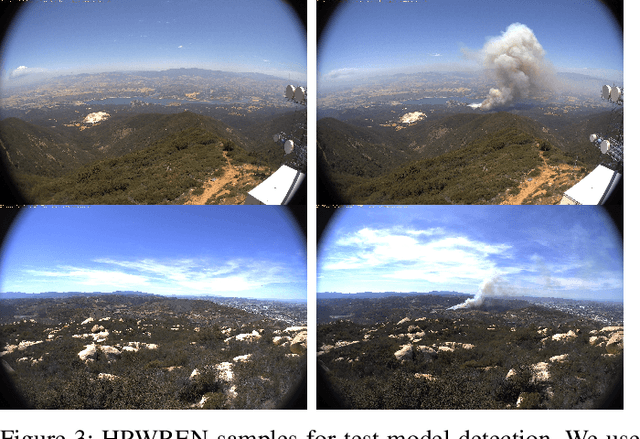

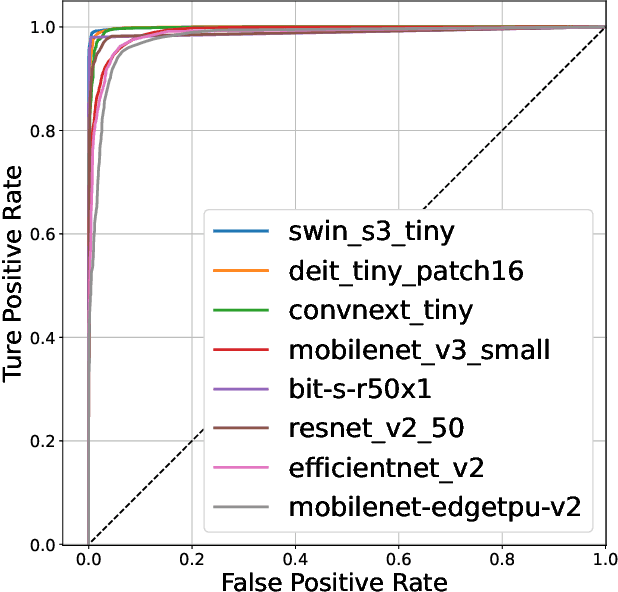

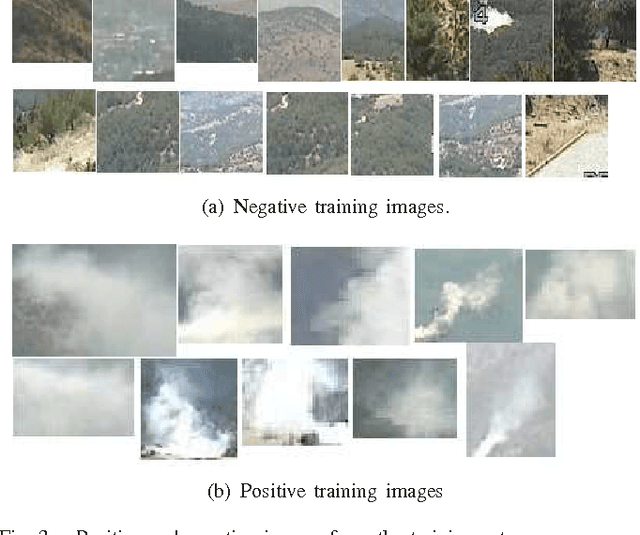

Abstract: This paper surveys publicly available neural network models for detecting wildfires with regular visible-range cameras placed on hilltops or forest lookout towers. The neural network models are pre-trained on ImageNet-1K and fine-tuned on a custom wildfire dataset. The performance of these models is evaluated on a diverse set of wildfire images, and the survey provides useful information for those interested in using transfer learning for wildfire detection. Swin Transformer-tiny has the highest AUC value, but ConvNext-tiny detects all the wildfire events and has the lowest false alarm rate in our dataset.

Robust Principal Component Analysis Using a Novel Kernel Related with the L1-Norm

May 25, 2021

Abstract: We consider a family of vector dot products that can be implemented using sign changes and addition operations only. The dot products are energy-efficient as they avoid the multiplication operation entirely. Moreover, the dot products induce the $\ell_1$-norm, thus providing robustness to impulsive noise. First, we analytically prove that the dot products yield symmetric, positive semi-definite generalized covariance matrices, thus enabling principal component analysis (PCA). Moreover, the generalized covariance matrices can be constructed in an Energy Efficient (EEF) manner due to the multiplication-free property of the underlying vector products. We present image reconstruction examples in which our EEF PCA method results in the highest peak signal-to-noise ratios compared to the ordinary $\ell_2$-PCA and the recursive $\ell_1$-PCA.

Energy Efficient Hadamard Neural Networks

May 14, 2018

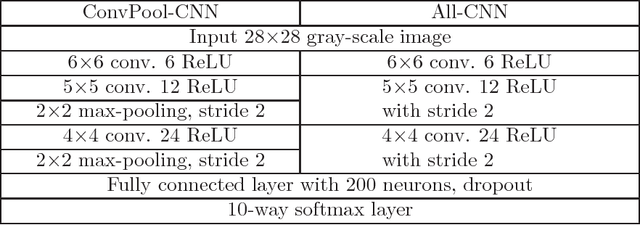

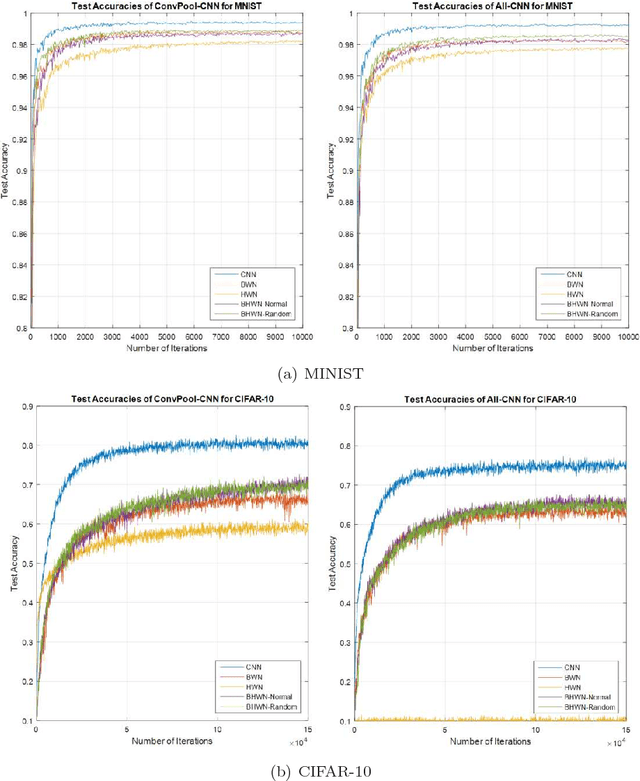

Abstract: Deep learning has made significant improvements in many image processing tasks in recent years, such as image classification, object recognition and object detection. Convolutional neural networks (CNNs), a popular deep learning architecture designed to process data in multiple-array form, have shown great success in almost all detection and recognition problems and computer vision tasks. However, the number of parameters in a CNN is so high that computers require more energy and larger memory. To solve this problem, we propose a novel energy-efficient model, the Binary Weight and Hadamard-transformed Image Network (BWHIN), which is a combination of a Binary Weight Network (BWN) and a Hadamard-transformed Image Network (HIN). It is observed that energy efficiency is achieved with a slight sacrifice in classification accuracy. Our novel ensemble model outperforms the other energy-efficient networks.

Energy Saving Additive Neural Network

Feb 09, 2017

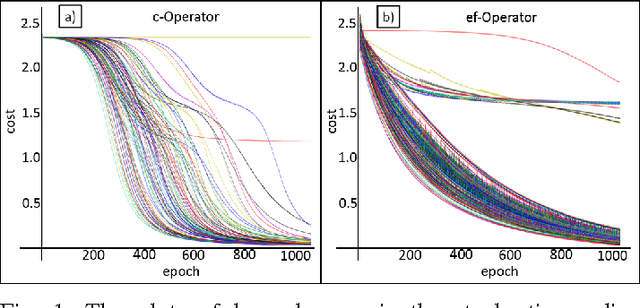

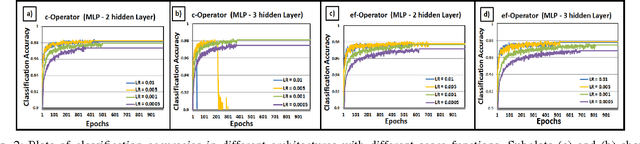

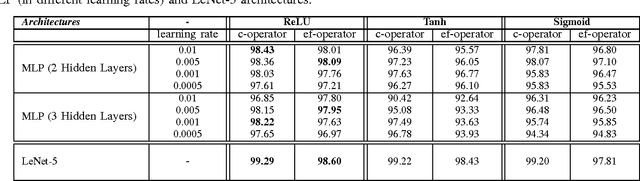

Abstract: In recent years, machine learning techniques based on neural networks for mobile computing have become increasingly popular. Classical multi-layer neural networks require matrix multiplications at each stage. Multiplication is not an energy-efficient operation, and consequently it drains the battery of the mobile device. In this paper, we propose a new energy-efficient neural network with the universal approximation property over the space of Lebesgue integrable functions. This network, called the additive neural network, is very suitable for mobile computing. The neural structure is based on a novel vector product definition, called the ef-operator, that permits a multiplier-free implementation. In the ef-operation, the "product" of two real numbers is defined as the sum of their absolute values, with the sign determined by the sign of the product of the numbers. This "product" is used to construct a vector product in $R^N$. The vector product induces the $l_1$ norm. The proposed additive neural network successfully solves the XOR problem. The experiments on the MNIST dataset show that the classification performance of the proposed additive neural networks is very similar to that of the corresponding multi-layer perceptron and convolutional neural networks (LeNet).
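The ef-operation described in this abstract can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `sign`, `ef`, `ef_dot`, and `l1_norm` are hypothetical, and the handling of a zero operand (treating sign(0) as 0, so the result is 0) is an assumption that the abstract does not spell out.

```python
def sign(x):
    """Return -1, 0, or +1 using comparisons only (no multiplication)."""
    return (x > 0) - (x < 0)


def ef(a, b):
    """ef-'product' of two reals: |a| + |b|, carrying the sign that a*b would have."""
    s_a, s_b = sign(a), sign(b)
    if s_a == 0 or s_b == 0:
        # Assumption: sign(0) = 0, so a zero operand yields 0.
        return 0.0
    magnitude = abs(a) + abs(b)
    # Equal signs give a positive result, opposite signs a negative one.
    return magnitude if s_a == s_b else -magnitude


def ef_dot(x, y):
    """Multiplication-free vector 'product': sum of element-wise ef results."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(ef(a, b) for a, b in zip(x, y))


def l1_norm(x):
    """Ordinary l1 norm, used here only to check the induced-norm relation."""
    return sum(abs(v) for v in x)
```

Under these assumptions, `ef_dot(x, x)` equals twice the $l_1$ norm of `x`, which is one way to see why the vector product is said to induce the $l_1$ norm.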

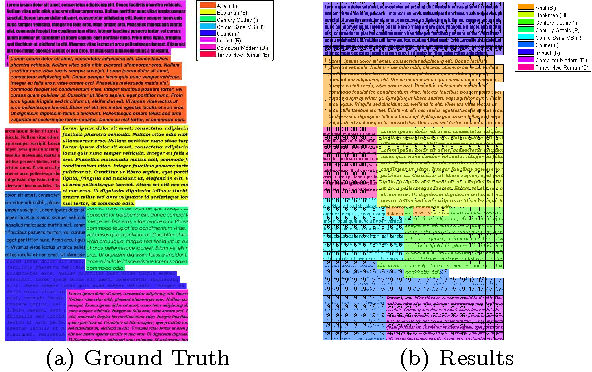

Phase and TV Based Convex Sets for Blind Deconvolution of Microscopic Images

Mar 16, 2015

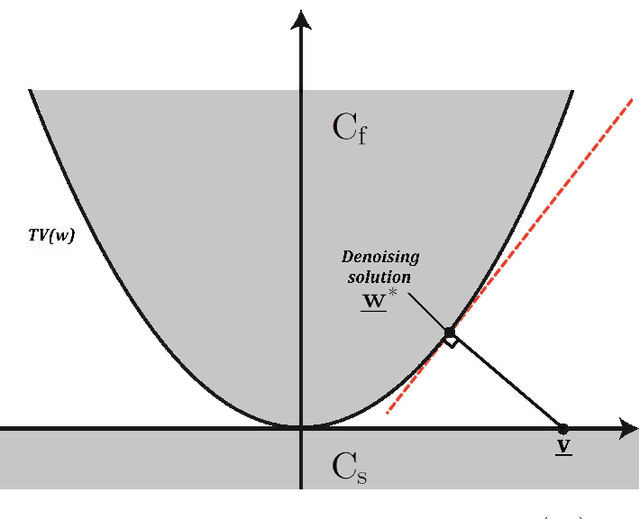

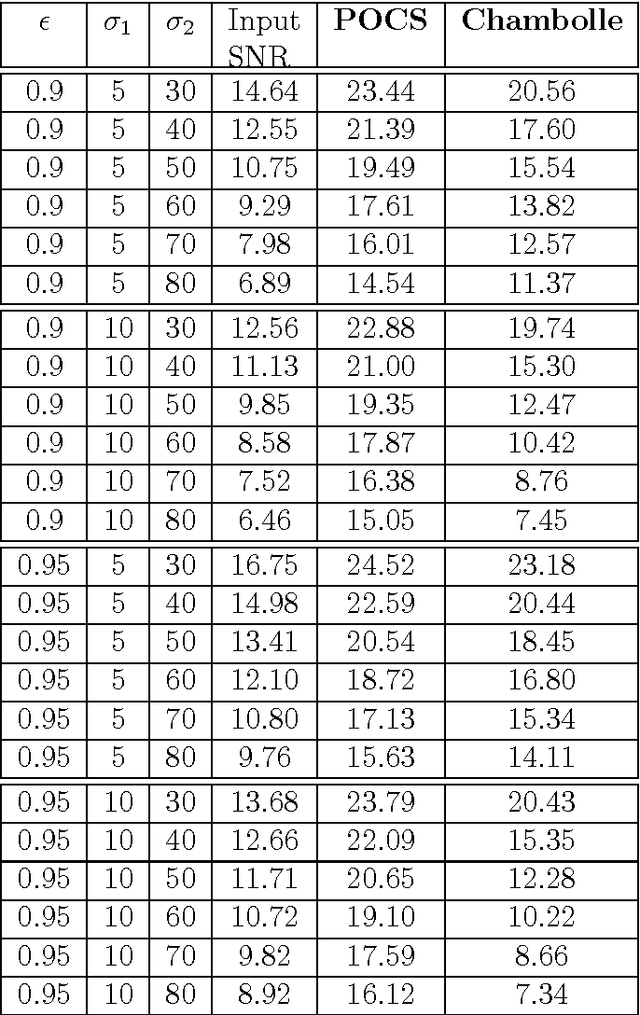

Abstract: In this article, two closed and convex sets for the blind deconvolution problem are proposed. Most blurring functions in microscopy are symmetric with respect to the origin. Therefore, they do not modify the phase of the Fourier transform (FT) of the original image. As a result, the blurred image and the original image have the same FT phase. Therefore, the set of images with a prescribed FT phase can be used as a constraint set in blind deconvolution problems. Another convex set that can be used during the image reconstruction process is the epigraph set of the Total Variation (TV) function. This set does not need a prescribed upper bound on the total variation of the image. The upper bound is automatically adjusted according to the current image of the restoration process. Both of these closed and convex sets can be used as a part of any blind deconvolution algorithm. Simulation examples are presented.
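The phase-preservation claim can be checked numerically on a small 1-D example. The sketch below is an illustration under stated assumptions, not the method of the paper: it uses a circular convolution and a plain DFT, the signal and kernels are made up, and the symmetric kernel is chosen so that its DFT is real and strictly positive (a real symmetric kernel has a real DFT, and a negative value would flip the phase by π). The helper names are hypothetical.

```python
import cmath
import math


def dft(x):
    """Plain O(n^2) discrete Fourier transform of a real or complex sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def circular_convolve(x, h):
    """Circular convolution of two equal-length sequences."""
    n = len(x)
    return [sum(x[m] * h[(t - m) % n] for m in range(n)) for t in range(n)]


def max_phase_mismatch(x, y, eps=1e-9):
    """Largest distance between the unit phasors of DFT(x) and DFT(y).

    Bins whose magnitude is below eps are skipped because their phase is undefined.
    """
    worst = 0.0
    for X, Y in zip(dft(x), dft(y)):
        if abs(X) > eps and abs(Y) > eps:
            worst = max(worst, abs(X / abs(X) - Y / abs(Y)))
    return worst


# Made-up test signal and kernels (assumptions for illustration only).
signal = [1.0, 2.0, 0.0, -1.0, 3.0, 1.0, 0.0, 2.0]
# Symmetric about the origin: h[n] == h[-n mod 8]; its DFT is 0.6 + 0.4*cos(pi*k/4) > 0.
symmetric_kernel = [0.6, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]
# Not symmetric: its DFT has a non-zero phase, so it changes the FT phase of the signal.
asymmetric_kernel = [0.6, 0.4, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

blurred_symmetric = circular_convolve(signal, symmetric_kernel)
blurred_asymmetric = circular_convolve(signal, asymmetric_kernel)
```

Comparing `max_phase_mismatch(signal, blurred_symmetric)` with `max_phase_mismatch(signal, blurred_asymmetric)` shows the difference: the first is at the level of rounding error, while the second is clearly non-zero.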

Cosine Similarity Measure According to a Convex Cost Function

Oct 22, 2014

Abstract: In this paper, we describe a new vector similarity measure associated with a convex cost function. Given two vectors, we determine the surface normals of the convex function at the vectors. The angle between the two surface normals is the similarity measure. The convex cost function can be the negative entropy function, the total variation (TV) function, or the filtered variation function. The convex cost function need not be differentiable everywhere. In general, we need to compute the gradient of the cost function to compute the surface normals. If the gradient does not exist at a given vector, it is possible to use the subgradients, and the normal producing the smallest angle between the two vectors is used to compute the similarity measure.
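A minimal sketch of this idea for the negative entropy cost $f(x) = \sum_i x_i \ln x_i$ is given below. It assumes that the surface in question is the graph $y = f(x)$ in $R^{N+1}$, whose normal at $x$ is $[\nabla f(x)^T, -1]^T$; the abstract does not state this construction explicitly, so treat it as an assumption. All function names are hypothetical, and the inputs must have strictly positive entries.

```python
import math


def neg_entropy_grad(x):
    """Gradient of f(x) = sum_i x_i * ln(x_i); requires every x_i > 0."""
    return [math.log(v) + 1.0 for v in x]


def surface_normal(grad):
    """Normal to the graph y = f(x) in R^(N+1): the gradient with -1 appended."""
    return list(grad) + [-1.0]


def angle_between(u, v):
    """Angle in radians between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against rounding just outside the acos domain.
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cosine)


def convex_similarity_angle(x, y, grad=neg_entropy_grad):
    """Angle between the surface normals of the cost function at x and at y."""
    return angle_between(surface_normal(grad(x)), surface_normal(grad(y)))
```

A smaller angle means the two vectors are more similar with respect to the chosen cost function. Passing a different `grad` callable (for example, a subgradient of another convex cost) reuses the same machinery.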

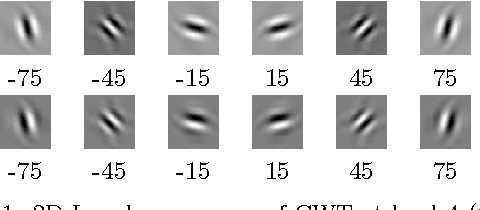

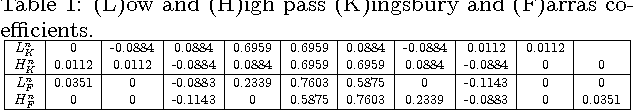

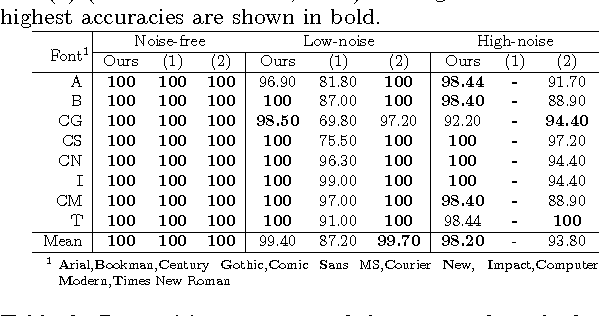

Classifying Fonts and Calligraphy Styles Using Complex Wavelet Transform

Jul 09, 2014

Abstract: Recognizing fonts has become an important task in document analysis, due to the increasing number of available digital documents in different fonts and emphases. A generic font-recognition system independent of language, script and content is desirable for processing various types of documents. At the same time, categorizing calligraphy styles in handwritten manuscripts is important for palaeographic analysis, but has not been studied sufficiently in the literature. We address the font-recognition problem as analysis and categorization of textures. We extract features using complex wavelet transform and use support vector machines for classification. Extensive experimental evaluations on different datasets in four languages and comparisons with state-of-the-art studies show that our proposed method achieves higher recognition accuracy while being computationally simpler. Furthermore, on a new dataset generated from Ottoman manuscripts, we show that the proposed method can also be used for categorizing Ottoman calligraphy with high accuracy.

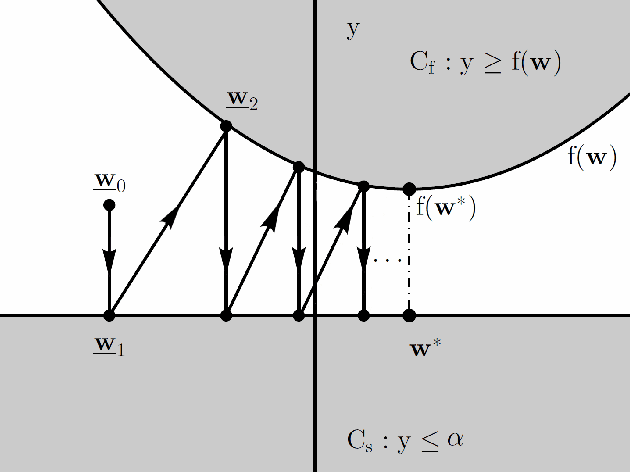

Denosing Using Wavelets and Projections onto the L1-Ball

Jun 10, 2014

Abstract: Both wavelet denoising and denoising methods using the concept of sparsity are based on soft-thresholding. In sparsity-based denoising methods, it is assumed that the original signal is sparse in some transform domain such as the wavelet domain, and the wavelet subsignals of the noisy signal are projected onto L1-balls to reduce noise. In this lecture note, it is shown that the size of the L1-ball or, equivalently, the soft threshold value can be determined using linear algebra. The key step is an orthogonal projection onto the epigraph set of the L1-norm cost function.
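The link between soft-thresholding and projection onto an L1-ball can be illustrated as follows. This sketch uses the standard sort-based projection for a given ball radius; it does not implement the epigraph-based rule for choosing the ball size that the lecture note describes. The function names and the example radius are assumptions for illustration.

```python
def soft_threshold(v, t):
    """Shrink every entry of v toward zero by t; entries within [-t, t] become 0."""
    out = []
    for x in v:
        if x > t:
            out.append(x - t)
        elif x < -t:
            out.append(x + t)
        else:
            out.append(0.0)
    return out


def project_onto_l1_ball(v, radius):
    """Orthogonal projection of v onto {w : ||w||_1 <= radius}.

    Returns the projected vector and the equivalent soft threshold value.
    """
    if radius <= 0:
        raise ValueError("radius must be positive")
    if sum(abs(x) for x in v) <= radius:
        return list(v), 0.0  # already inside the ball, nothing to do
    magnitudes = sorted((abs(x) for x in v), reverse=True)
    running_sum = 0.0
    theta = 0.0
    for j, m in enumerate(magnitudes, start=1):
        running_sum += m
        candidate = (running_sum - radius) / j
        # Keep the candidate from the largest j whose entry survives thresholding.
        if m - candidate > 0:
            theta = candidate
    return soft_threshold(v, theta), theta
```

For example, projecting `[3.0, -1.0, 0.5]` onto the ball of radius `2.0` is the same as soft-thresholding with threshold `1.0`, which gives `[2.0, 0.0, 0.0]`.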

Signal Reconstruction Framework Based On Projections Onto Epigraph Set Of A Convex Cost Function (PESC)

Feb 10, 2014

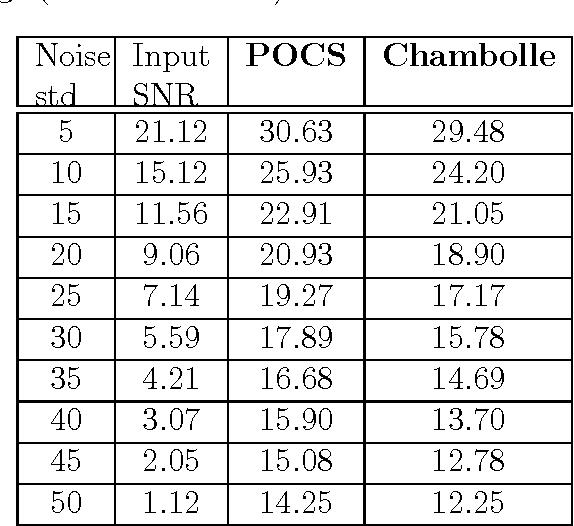

Abstract: A new signal processing framework based on making orthogonal Projections onto the Epigraph Set of a Convex cost function (PESC) is developed. In this way it is possible to solve convex optimization problems using the well-known Projections onto Convex Sets (POCS) approach. In this algorithm, the dimension of the minimization problem is lifted by one and a convex set corresponding to the epigraph of the cost function is defined. If the cost function is a convex function in $R^N$, the corresponding epigraph set is also a convex set in $R^{N+1}$. The PESC method provides globally optimal solutions for total-variation (TV), filtered variation (FV), $L_1$, $L_2$, and entropic cost function based convex optimization problems. In this article, the PESC-based denoising and compressive sensing algorithms are developed. Simulation examples are presented.
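The lifting step can be written out as follows. The symbols $w$, $y$, $v$, and $C_f$ are notation assumed here for illustration, and the particular lifted observation $[v^T \; 0]^T$ is an assumption about how a denoising problem might be posed (it presumes a non-negative cost $f$); the abstract itself does not give these formulas. For a convex cost function $f$ on $R^N$, the epigraph set in $R^{N+1}$ is

$$C_f = \left\{ [w^T \; y]^T \in R^{N+1} : y \ge f(w) \right\},$$

and a denoised estimate $w^\star$ of a noisy observation $v \in R^N$ could then be read off from the orthogonal projection of the lifted observation onto $C_f$:

$$[w^{\star T} \; y^\star]^T = \arg\min_{[w^T \; y]^T \in C_f} \left\| [v^T \; 0]^T - [w^T \; y]^T \right\|_2^2 .$$

Because $C_f$ is closed and convex whenever $f$ is a closed convex function, this projection is unique, which is what allows it to be used as one step of a POCS-type iteration.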

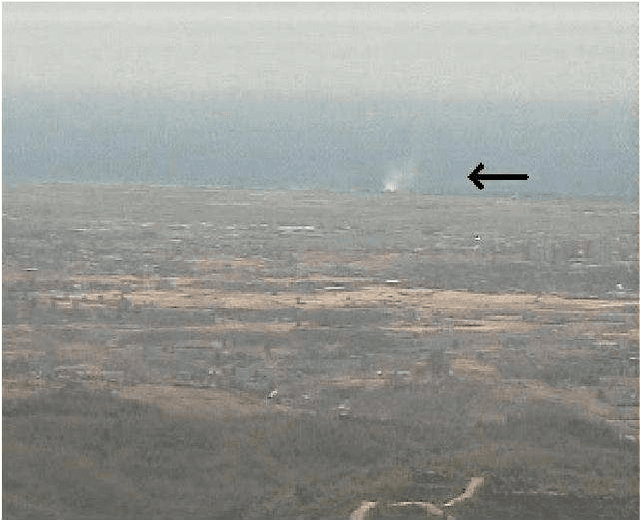

Online Adaptive Decision Fusion Framework Based on Entropic Projections onto Convex Sets with Application to Wildfire Detection in Video

Jan 25, 2011

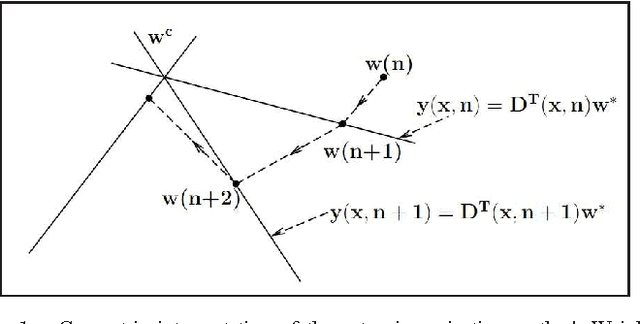

Abstract: In this paper, an Entropy functional based online Adaptive Decision Fusion (EADF) framework is developed for image analysis and computer vision applications. In this framework, it is assumed that the compound algorithm consists of several sub-algorithms, each of which yields its own decision as a real number centered around zero, representing the confidence level of that particular sub-algorithm. Decision values are linearly combined with weights that are updated online according to an active fusion method based on performing entropic projections onto convex sets describing the sub-algorithms. It is assumed that there is an oracle, usually a human operator, providing feedback to the decision fusion method. A video-based wildfire detection system is developed to evaluate the performance of the algorithm in handling problems where data arrives sequentially. In this case, the oracle is the security guard of the forest lookout tower, who verifies the decision of the combined algorithm. Simulation results are presented. The EADF framework is also tested with a standard dataset.
