Diaa Badawi

Block Walsh-Hadamard Transform Based Binary Layers in Deep Neural Networks

Jan 28, 2022

Abstract: Convolution has been the core operation of modern deep neural networks. It is well known that convolutions can be implemented in the Fourier transform domain. In this paper, we propose to use the binary block Walsh-Hadamard transform (WHT) instead of the Fourier transform. We use WHT-based binary layers to replace some of the regular convolution layers in deep neural networks. We utilize both one-dimensional (1-D) and two-dimensional (2-D) binary WHTs in this paper. In both 1-D and 2-D layers, we compute the binary WHT of the input feature map and denoise the WHT domain coefficients using a nonlinearity obtained by combining soft-thresholding with the tanh function. After denoising, we compute the inverse WHT. We use 1D-WHT layers to replace the $1\times 1$ convolutional layers, and 2D-WHT layers can replace the $3\times 3$ convolution layers and Squeeze-and-Excite layers. 2D-WHT layers with trainable weights can also be inserted before the Global Average Pooling (GAP) layers to assist the dense layers. In this way, we can reduce the number of trainable parameters significantly with a slight decrease in accuracy. In this paper, we incorporate the WHT layers into MobileNet-V2, MobileNet-V3-Large, and ResNet to reduce the number of parameters significantly with negligible accuracy loss. Moreover, according to our speed test, the 2D-FWHT layer runs about 24 times as fast as the regular $3\times 3$ convolution with 19.51\% less RAM usage in an NVIDIA Jetson Nano experiment.
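The transform, denoise, inverse-transform pipeline described in this abstract can be illustrated with a minimal 1-D sketch. The abstract does not give the exact nonlinearity, so the form `tanh(x) * max(|x| - t, 0)` used here is an assumption, and the names `fwht`, `smooth_threshold`, `wht_layer`, and `thresholds` are hypothetical. The block partitioning and the trainable weights mentioned in the abstract are omitted.

```python
import math


def fwht(values):
    """Unnormalized fast Walsh-Hadamard transform (butterfly form).

    Uses only additions and subtractions; the length must be a power of two.
    """
    out = list(values)
    n = len(out)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = out[i], out[i + h]
                out[i], out[i + h] = a + b, a - b
        h *= 2
    return out


def smooth_threshold(x, t):
    """Assumed tanh/soft-threshold combination: tanh(x) * max(|x| - t, 0)."""
    return math.tanh(x) * max(abs(x) - t, 0.0)


def wht_layer(values, thresholds):
    """Transform, denoise each coefficient, then apply the inverse transform."""
    coeffs = fwht(values)
    denoised = [smooth_threshold(c, t) for c, t in zip(coeffs, thresholds)]
    n = len(values)
    # The inverse WHT is the same butterfly followed by division by n.
    return [v / n for v in fwht(denoised)]
```

Because the Hadamard matrix contains only +1 and -1 entries, the forward transform needs no multiplications, which is the property that makes the layer "binary".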

Detecting Anomaly in Chemical Sensors via L1-Kernels based Principal Component Analysis

Jan 07, 2022

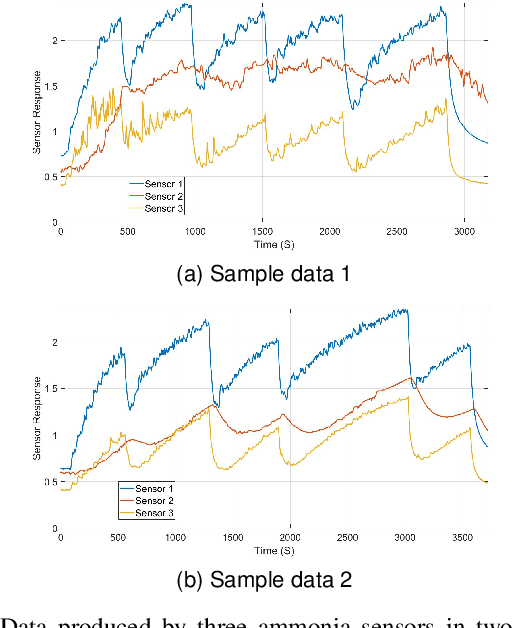

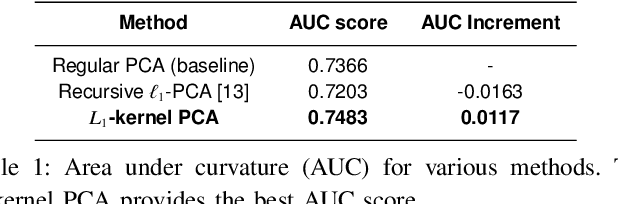

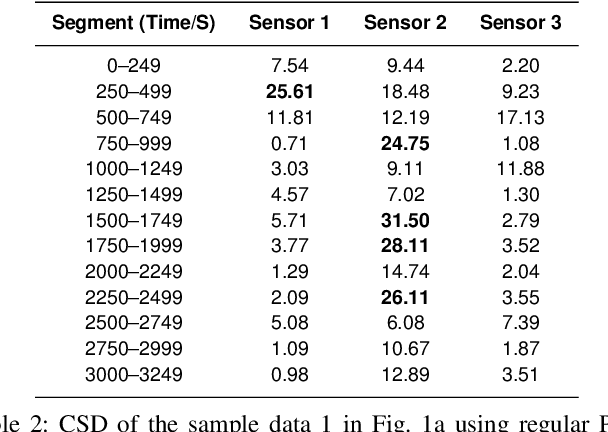

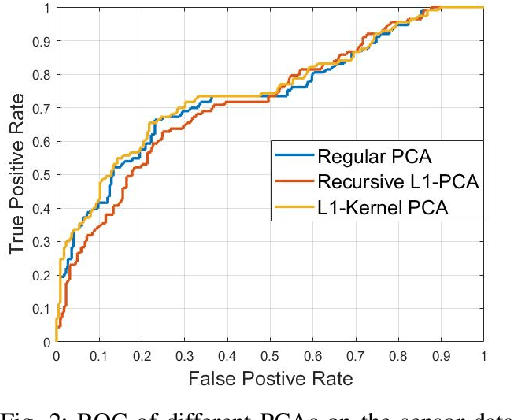

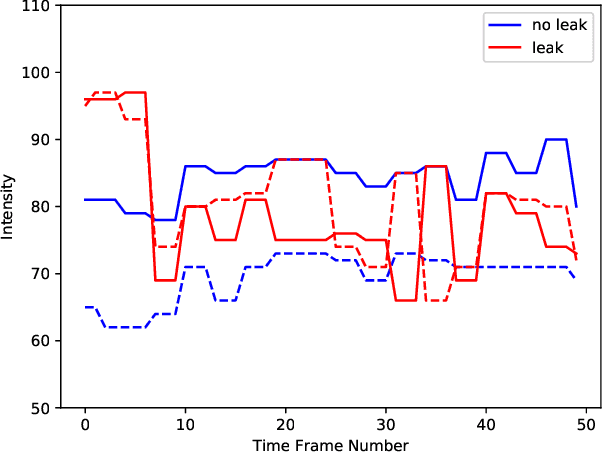

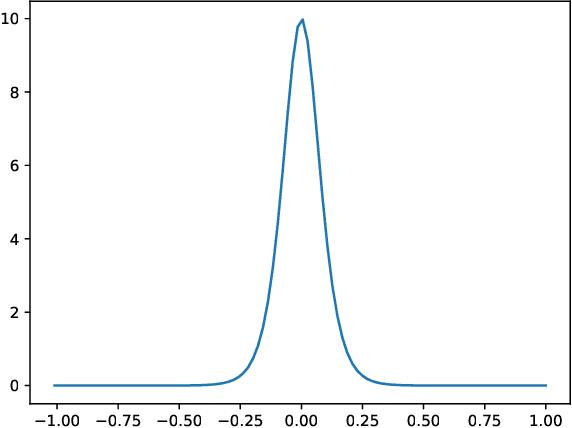

Abstract: We propose a kernel-PCA based method to detect anomalies in chemical sensors. We use temporal signals produced by chemical sensors to form vectors on which we perform Principal Component Analysis (PCA). We estimate the kernel-covariance matrix of the sensor data and compute the eigenvector corresponding to the largest eigenvalue of the covariance matrix. An anomaly can be detected by comparing the actual sensor data with the data reconstructed from the dominant eigenvector. In this paper, we introduce a new multiplication-free kernel, which is related to the l1-norm, for the anomaly detection task. The l1-kernel PCA is not only computationally efficient but also energy-efficient because it does not require any actual multiplications during the kernel covariance matrix computation. Our experimental results show that our kernel-PCA method achieves a higher area under the curve (AUC) score (0.7483) than the baseline regular PCA method (0.7366).
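The detection step, comparing a sensor vector with its reconstruction from the dominant eigenvector, can be sketched as follows. This is an illustration under stated assumptions: the eigenvector `v` is taken as given, the data are assumed to be centered already, the reconstruction uses an ordinary projection rather than the paper's kernel, and `reconstruction_error`, `is_anomalous`, and `threshold` are hypothetical names.

```python
import math


def reconstruction_error(x, v):
    """Euclidean distance between x and its projection onto the direction v."""
    norm = math.sqrt(sum(c * c for c in v))
    unit = [c / norm for c in v]
    coeff = sum(a * b for a, b in zip(x, unit))
    return math.sqrt(sum((a - coeff * b) ** 2 for a, b in zip(x, unit)))


def is_anomalous(x, v, threshold):
    """Flag x when the dominant eigenvector reconstructs it poorly."""
    return reconstruction_error(x, v) > threshold
```

A vector aligned with `v` yields an error near zero, while a vector orthogonal to `v` yields an error equal to its own length.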

Multiplication-Avoiding Variant of Power Iteration with Applications

Oct 22, 2021

Abstract: Power iteration is a fundamental algorithm in data analysis. It extracts the eigenvector corresponding to the largest eigenvalue of a given matrix. Applications include ranking algorithms, recommendation systems, and principal component analysis (PCA), among many others. In this paper, we introduce multiplication-avoiding power iteration (MAPI), which replaces the standard $\ell_2$-inner products that appear in the regular power iteration (RPI) with multiplication-free vector products, which are Mercer-type kernel operations related to the $\ell_1$ norm. Precisely, for an $n\times n$ matrix, MAPI requires $n$ multiplications per iteration, while RPI needs $n^2$ multiplications per iteration. Therefore, MAPI provides a significant reduction in the number of multiplication operations, which are known to be costly in terms of energy consumption. We provide applications of MAPI to PCA-based image reconstruction as well as to graph-based ranking algorithms. When compared to RPI, MAPI not only typically converges much faster, but also provides superior performance.
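For reference, regular power iteration can be sketched with a pluggable vector product, which marks the place where a multiplication-free product would be substituted. This is a hedged illustration, not the MAPI algorithm itself: the `product` parameter, the $\ell_2$ normalization step, and the fixed iteration count are assumptions of this sketch.

```python
import math


def dot(u, v):
    """Standard inner product; costs one multiplication per entry."""
    return sum(a * b for a, b in zip(u, v))


def power_iteration(matrix, product=dot, iterations=100):
    """Approximate the dominant eigenvector of a square matrix.

    Each iteration applies `product` between every row and the current
    vector, so swapping `product` changes the n*n per-iteration cost.
    """
    n = len(matrix)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        w = [product(row, v) for row in matrix]
        norm = math.sqrt(dot(w, w))
        if norm == 0.0:
            raise ValueError("iterate collapsed to the zero vector")
        v = [c / norm for c in w]
    return v
```

With the default `dot`, the $n$ row products account for the $n^2$ multiplications per iteration attributed to RPI in the abstract.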

Robust Principal Component Analysis Using a Novel Kernel Related with the L1-Norm

May 25, 2021

Abstract: We consider a family of vector dot products that can be implemented using sign changes and addition operations only. The dot products are energy-efficient as they avoid the multiplication operation entirely. Moreover, the dot products induce the $\ell_1$-norm, thus providing robustness to impulsive noise. First, we analytically prove that the dot products yield symmetric, positive semi-definite generalized covariance matrices, thus enabling principal component analysis (PCA). Moreover, the generalized covariance matrices can be constructed in an Energy Efficient (EEF) manner due to the multiplication-free property of the underlying vector products. We present image reconstruction examples in which our EEF PCA method results in the highest peak signal-to-noise ratios compared to the ordinary $\ell_2$-PCA and the recursive $\ell_1$-PCA.
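The abstract does not spell out the operators, so the following sketch uses one candidate with the stated properties as an assumption: the scalar operation $\mathrm{sign}(ab)\min(|a|,|b|)$, which needs only comparisons and sign changes and satisfies $x \odot x = \|x\|_1$ when summed over entries. The names `mf_scalar`, `mf_dot`, and `generalized_covariance` are hypothetical, and the covariance is left unnormalized because scaling does not change its eigenvectors.

```python
def mf_scalar(a, b):
    """sign(a*b) * min(|a|, |b|), computed without any multiplication."""
    if a == 0 or b == 0:
        return 0.0
    magnitude = min(abs(a), abs(b))
    return magnitude if (a > 0) == (b > 0) else -magnitude


def mf_dot(x, y):
    """Multiplication-free vector product; mf_dot(x, x) equals the l1 norm of x."""
    return sum(mf_scalar(a, b) for a, b in zip(x, y))


def generalized_covariance(samples):
    """Accumulate mf_scalar over all samples for every pair of coordinates."""
    dim = len(samples[0])
    cov = [[0.0] * dim for _ in range(dim)]
    for s in samples:
        for i in range(dim):
            for j in range(dim):
                cov[i][j] += mf_scalar(s[i], s[j])
    return cov
```

For example, `mf_dot([1, -2, 3], [1, -2, 3])` sums the absolute values of the entries, and the matrix returned by `generalized_covariance` is symmetric because `mf_scalar` is symmetric in its arguments.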

Detecting Gas Vapor Leaks Using Uncalibrated Sensors

Aug 20, 2019

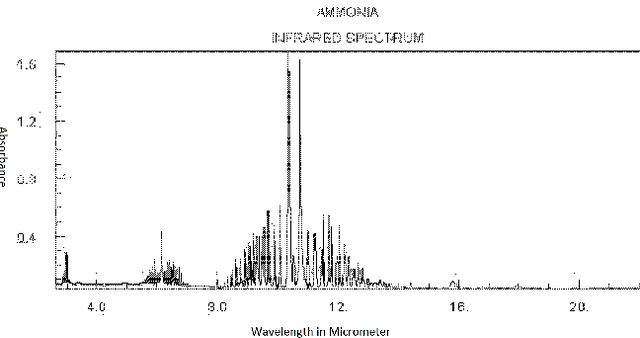

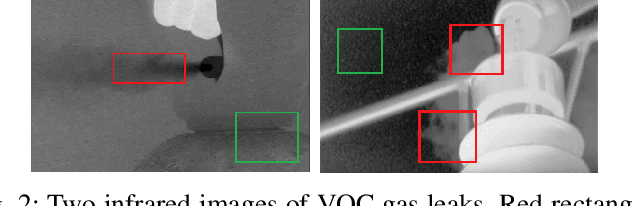

Abstract: Chemical and infra-red sensors generate distinct responses under similar conditions because of sensor drift, noise, or resolution errors. In this work, we use different time-series data sets obtained by infra-red and E-nose sensors in order to detect Volatile Organic Compounds (VOCs) and Ammonia vapor leaks. We process time-series sensor signals using deep neural networks (DNN). Three neural network algorithms are utilized for this purpose. First, additive neural networks (termed AddNet) are based on a multiplication-devoid operator and are consequently more energy-efficient than regular neural networks. The second algorithm uses generative adversarial neural networks to expose the classifying neural network to more realistic data points, helping the classifier network generalize better. Finally, we use conventional convolutional neural networks as a baseline method and compare their performance with the two aforementioned deep neural network algorithms in order to evaluate their effectiveness empirically.
