A. Enis Çetin

High Resolution Time-Frequency Generation with Generative Adversarial Networks

Jun 01, 2021

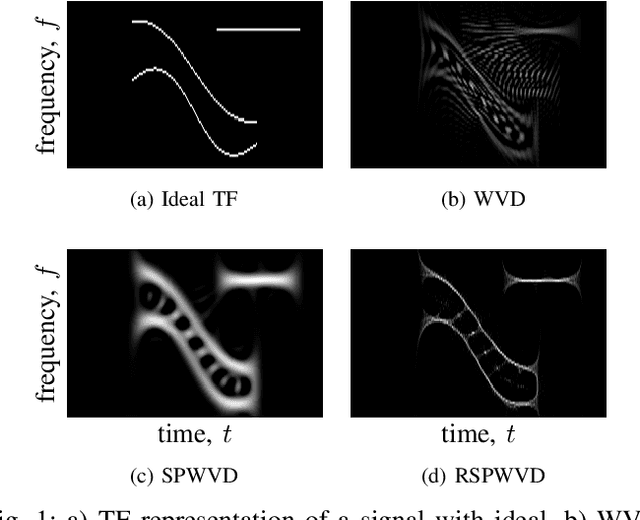

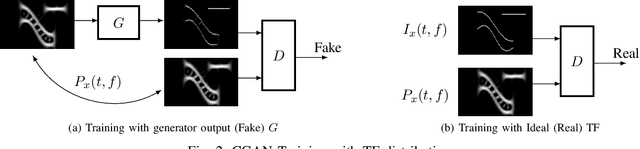

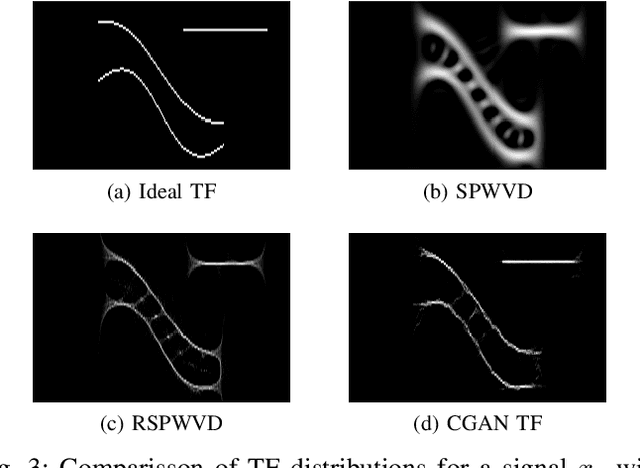

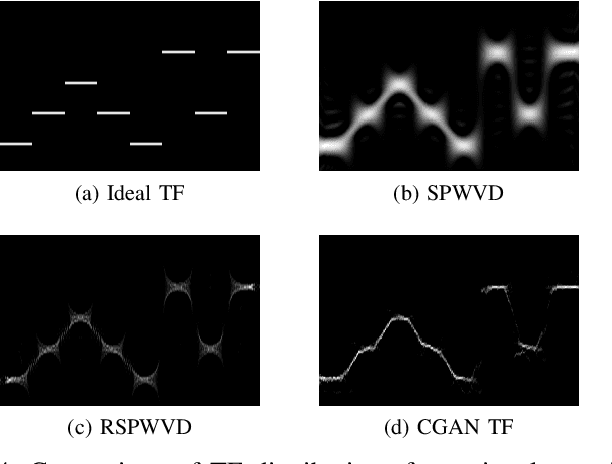

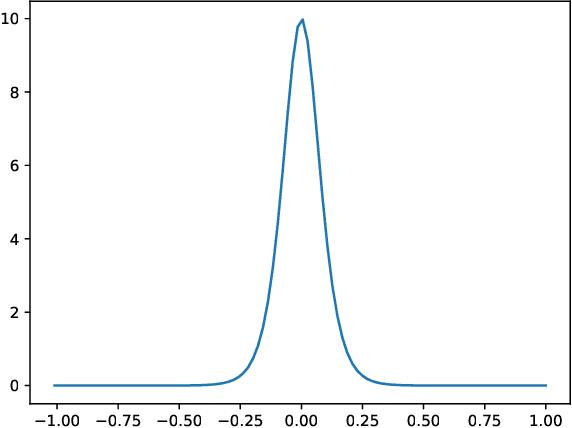

Abstract: Signal representation in the Time-Frequency (TF) domain is valuable in many applications, including radar imaging and inverse synthetic aperture radar. TF representation allows us to identify signal components or features in a mixed time and frequency plane. There are several well-known tools for this purpose, such as the Wigner-Ville Distribution (WVD), the Short-Time Fourier Transform (STFT), and their variants. The main requirement for a TF representation tool is to give a high-resolution view of the signal such that the signal components or features are identifiable. A commonly used method is the reassignment process, which reduces the cross-terms by artificially moving smoothed WVD values from their actual location to the center of gravity of that region. In this article, we propose a novel reassignment method using the Conditional Generative Adversarial Network (CGAN). We train a CGAN to perform the reassignment process. Through examples, it is shown that the method generates high-resolution TF representations which are better than those of the current reassignment methods.
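To make the TF vocabulary above concrete, here is a minimal NumPy sketch of a magnitude STFT of a linear chirp, followed by a per-frame energy "center of gravity" along the frequency axis. It is not the paper's CGAN method, nor a full reassignment algorithm (which moves values toward local centroids in both time and frequency). The function name `stft_magnitude`, the window length, the hop size, and the test signal are all illustrative assumptions.

```python
import numpy as np

def stft_magnitude(x, win_len=128, hop=32):
    """Magnitude STFT with a Hann window; rows are frequency bins, columns are frames."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop : i * hop + win_len] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1)).T

# Hypothetical test signal: a linear chirp whose instantaneous
# frequency is 50 + 300 * t Hz, sampled at 1 kHz for one second.
fs = 1000.0
t = np.arange(0, 1.0, 1.0 / fs)
x = np.cos(2 * np.pi * (50.0 * t + 150.0 * t**2))

S = stft_magnitude(x)
freqs = np.fft.rfftfreq(128, d=1.0 / fs)

# Ridge: the frequency bin with the largest magnitude in each frame.
ridge = freqs[np.argmax(S, axis=0)]

# Simplified "center of gravity" per frame: the energy-weighted mean frequency.
# This only illustrates the centroid idea for a single-component signal.
energy = S**2
centroid = (freqs[:, None] * energy).sum(axis=0) / energy.sum(axis=0)
```

For this single-component chirp, both `ridge` and `centroid` should rise with time and follow the instantaneous frequency. The STFT smears the energy over several bins around that ridge, and that blur is what reassignment-style methods try to sharpen.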

Detecting Gas Vapor Leaks Using Uncalibrated Sensors

Aug 20, 2019

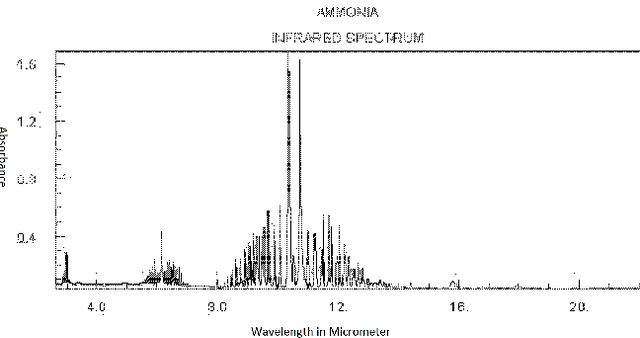

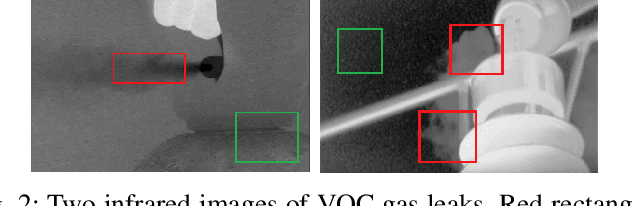

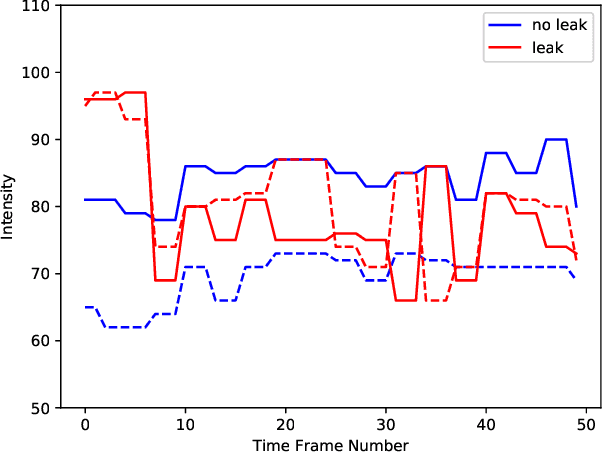

Abstract: Chemical and infra-red sensors generate distinct responses under similar conditions because of sensor drift, noise or resolution errors. In this work, we use different time-series data sets obtained by infra-red and E-nose sensors in order to detect Volatile Organic Compounds (VOCs) and Ammonia vapor leaks. We process time-series sensor signals using deep neural networks (DNN). Three neural network algorithms are utilized for this purpose. The first, additive neural networks (termed AddNet), is based on a multiplication-devoid operator and is consequently more energy-efficient than regular neural networks. The second algorithm uses generative adversarial neural networks to expose the classifying neural network to more realistic data points, which helps the classifier generalize better. Finally, we use conventional convolutional neural networks as a baseline method and compare their performance with the two aforementioned deep neural network algorithms in order to evaluate their effectiveness empirically.
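The abstract does not spell out the multiplication-devoid operator. As a hedged illustration, the sketch below assumes the form commonly given in the AddNet literature, where the product of two scalars is replaced by sign(a) · sign(b) · (|a| + |b|). The function name `mf_dot` is hypothetical.

```python
import numpy as np

def mf_dot(x, w):
    """Multiplication-free analogue of a dot product (assumed operator form).

    Each term x_i * w_i is replaced by sign(x_i) * sign(w_i) * (|x_i| + |w_i|).
    NumPy still multiplies here for convenience; in hardware the sign product
    reduces to a sign-bit operation, so only additions of magnitudes remain.
    """
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    sign = np.sign(x) * np.sign(w)
    return float(np.sum(sign * (np.abs(x) + np.abs(w))))

# Worked example: (+1)(1 + 3) + (-1)(2 + 4) = 4 - 6 = -2
result = mf_dot([1.0, -2.0], [3.0, 4.0])
```

Like an ordinary dot product, the result is positive when inputs and weights agree in sign and negative when they disagree, which is what lets such an operator stand in for the multiply-accumulate step of a neuron.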

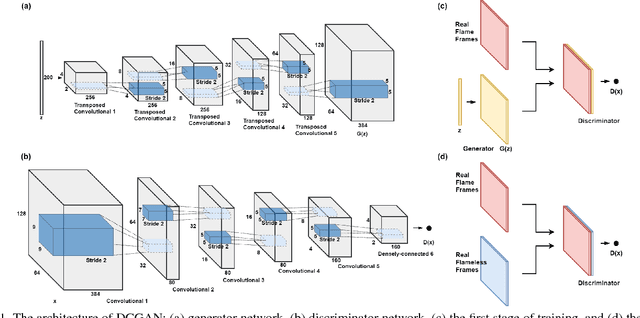

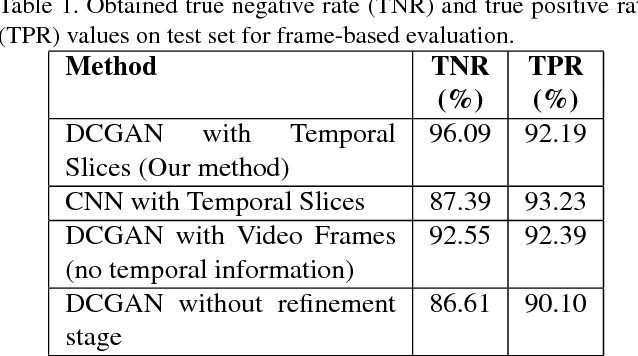

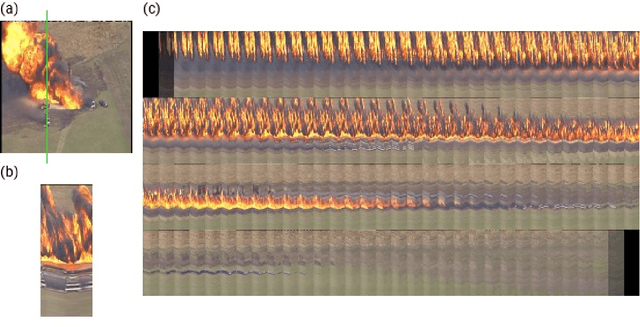

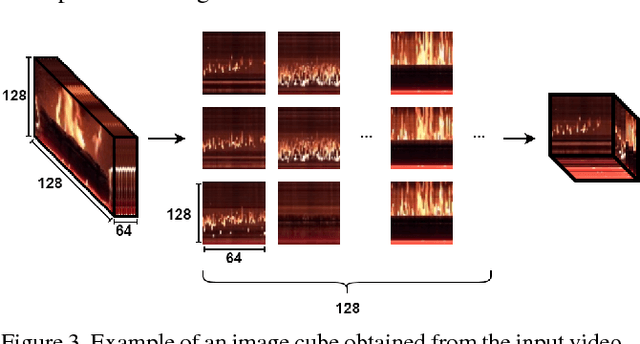

Deep Convolutional Generative Adversarial Networks Based Flame Detection in Video

Feb 05, 2019

Abstract: Real-time flame detection is crucial in video-based surveillance systems. We propose a vision-based method to detect flames using Deep Convolutional Generative Adversarial Neural Networks (DCGANs). Many existing supervised learning approaches using convolutional neural networks do not take temporal information into account and require a substantial amount of labeled data. In order to have a robust representation of sequences with and without flame, we propose a two-stage training of a DCGAN exploiting spatio-temporal flame evolution. Our training framework includes the regular training of a DCGAN with real spatio-temporal images, namely, temporal slice images, and noise vectors, and training the discriminator separately using the temporal flame images without the generator. Experimental results show that the proposed method effectively detects flame in video in real time with negligible false positive rates.
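The abstract does not describe how the temporal slice images are constructed. One common reading, assumed here, is that a fixed pixel column is taken from each frame of a video block and the columns are stacked side by side, so that one image axis is space and the other is time. The function name `temporal_slice` and the `(T, H, W)` grayscale layout are illustrative assumptions.

```python
import numpy as np

def temporal_slice(video, column):
    """Build a spatio-temporal image from one pixel column of every frame.

    video:  array of shape (T, H, W), a grayscale video block (assumed layout).
    column: index of the pixel column to extract from each frame.
    Returns an (H, T) image whose horizontal axis is time.
    """
    video = np.asarray(video)
    return video[:, :, column].T

# Tiny synthetic block: 2 frames of 3 x 4 pixels.
video = np.arange(2 * 3 * 4).reshape(2, 3, 4)
slice_img = temporal_slice(video, column=1)
```

In such an image, a flickering flame boundary would show up as an irregular texture along the time axis, while a static bright object would produce straight horizontal streaks. That temporal difference is the kind of cue a discriminator trained on these images could pick up.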
