Süleyman Aslan

Multimodal Video-based Apparent Personality Recognition Using Long Short-Term Memory and Convolutional Neural Networks

Nov 01, 2019

Abstract:Personality computing and affective computing, where the recognition of personality traits is essential, have gained increasing interest and attention in many research areas recently. We propose a novel approach to recognize the Big Five personality traits of people from videos. Personality and emotion affect the speaking style, facial expressions, body movements, and linguistic factors in social contexts, and they are affected by environmental elements. We develop a multimodal system to recognize apparent personality based on various modalities such as the face, environment, audio, and transcription features. We use modality-specific neural networks that learn to recognize the traits independently and we obtain a final prediction of apparent personality with a feature-level fusion of these networks. We employ pre-trained deep convolutional neural networks such as ResNet and VGGish networks to extract high-level features and Long Short-Term Memory networks to integrate temporal information. We train the large model consisting of modality-specific subnetworks using a two-stage training process. We first train the subnetworks separately and then fine-tune the overall model using these trained networks. We evaluate the proposed method using ChaLearn First Impressions V2 challenge dataset. Our approach obtains the best overall "mean accuracy" score, averaged over five personality traits, compared to the state-of-the-art.

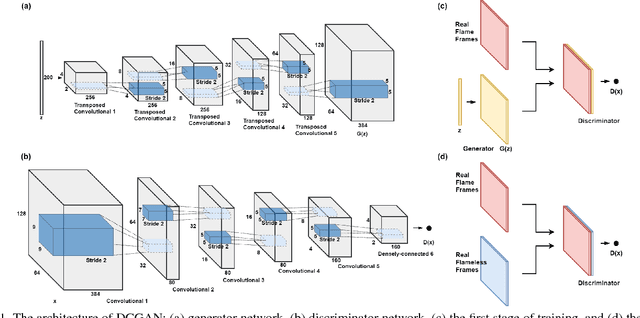

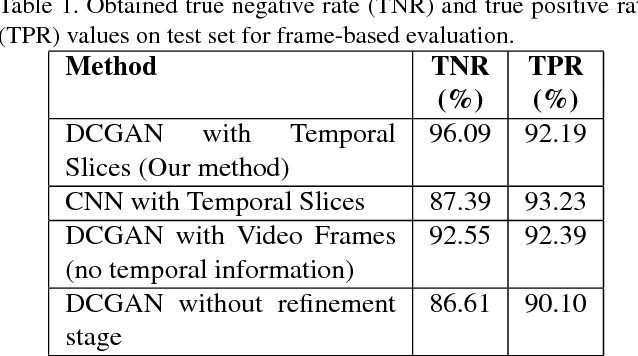

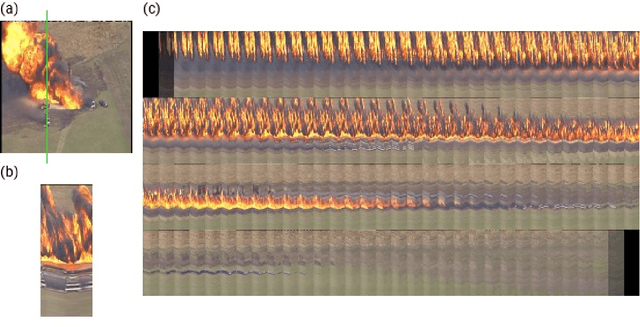

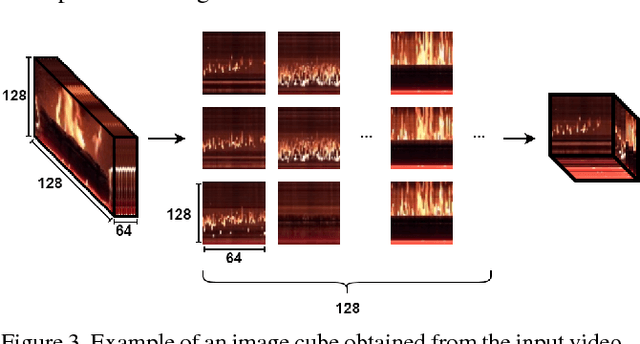

Deep Convolutional Generative Adversarial Networks Based Flame Detection in Video

Feb 05, 2019

Abstract:Real-time flame detection is crucial in video based surveillance systems. We propose a vision-based method to detect flames using Deep Convolutional Generative Adversarial Neural Networks (DCGANs). Many existing supervised learning approaches using convolutional neural networks do not take temporal information into account and require substantial amount of labeled data. In order to have a robust representation of sequences with and without flame, we propose a two-stage training of a DCGAN exploiting spatio-temporal flame evolution. Our training framework includes the regular training of a DCGAN with real spatio-temporal images, namely, temporal slice images, and noise vectors, and training the discriminator separately using the temporal flame images without the generator. Experimental results show that the proposed method effectively detects flame in video with negligible false positive rates in real-time.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge