Uğur Güdükbay

Personality Perception in Human Videos Altered by Motion Transfer Networks

Jan 26, 2024

Abstract: The successful portrayal of personality in digital characters improves communication and immersion. Current research focuses on expressing personality through modifying animations using heuristic rules or data-driven models. While studies suggest motion style highly influences the apparent personality, the role of appearance can be similarly essential. This work analyzes the influence of movement and appearance on the perceived personality of short videos altered by motion transfer networks. We label the personalities in conference video clips with a user study to determine the samples that best represent the Five-Factor model's high, neutral, and low traits. We alter these videos using the Thin-Plate Spline Motion Model, utilizing the selected samples as the source and driving inputs. We follow five different cases to study the influence of motion and appearance on personality perception. Our comparative study reveals that motion and appearance influence different factors: motion strongly affects perceived extraversion, and appearance helps convey agreeableness and neuroticism.

Visual Analysis of Large Multi-Field AMR Data on GPUs Using Interactive Volume Lines

Jun 20, 2023

Abstract: To visually compare ensembles of volumes, dynamic volume lines (DVLs) represent each ensemble member as a 1D polyline. To compute these, the volume cells are sorted on a space-filling curve and scaled by the ensemble's local variation. The resulting 1D plot can augment, or serve as an alternative to, a 3D volume visualization, free of visual clutter and occlusion. Interactively computing DVLs is challenging when the data is large and the volume grid is not structured or regular, as is often the case with computational fluid dynamics simulations. We extend DVLs to support large-scale, multi-field adaptive mesh refinement (AMR) data that can be explored interactively. Our GPU-based system updates the DVL representation whenever the data or the alpha transfer function changes. We demonstrate and evaluate our interactive prototype using large AMR volumes from astrophysics simulations.
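The ordering step described in this abstract can be illustrated with a small CPU-side sketch. It is not the authors' GPU implementation: it assumes a Morton (Z-order) curve as the space-filling curve, and it assumes that "local variation" means the standard deviation of a cell's value across ensemble members, used here to set each cell's width on the plot's x-axis. The names `morton3d` and `volume_lines` are hypothetical.

```python
from statistics import pstdev


def morton3d(x, y, z, bits=10):
    """Interleave the bits of integer cell coordinates into one Z-order key."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (3 * i)
        key |= ((y >> i) & 1) << (3 * i + 1)
        key |= ((z >> i) & 1) << (3 * i + 2)
    return key


def volume_lines(ensemble, eps=1e-6):
    """Turn an ensemble of volumes into 1D polylines.

    ensemble: list of members, each a dict mapping (x, y, z) -> scalar value.
    All members are assumed to share the same set of cells.
    Returns (x_positions, lines), where lines[m][i] is member m's value at the
    i-th cell along the curve and x_positions[i] is that cell's plot position.
    """
    # Sort the cells along the (assumed) Morton curve.
    cells = sorted(ensemble[0], key=lambda c: morton3d(*c))
    lines = [[member[c] for c in cells] for member in ensemble]

    # Assumed scaling: a cell is drawn wider where the members disagree more.
    widths = [pstdev([line[i] for line in lines]) + eps for i in range(len(cells))]

    x_positions = []
    total = 0.0
    for width in widths:
        total += width
        x_positions.append(total)
    return x_positions, lines
```

Storing each member as a dictionary keyed by cell coordinates keeps the sketch independent of a regular grid layout, although real AMR data would also need to account for cells at different refinement levels.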

Multimodal Video-based Apparent Personality Recognition Using Long Short-Term Memory and Convolutional Neural Networks

Nov 01, 2019

Abstract: Personality computing and affective computing, where the recognition of personality traits is essential, have recently gained increasing attention in many research areas. We propose a novel approach to recognize the Big Five personality traits of people from videos. Personality and emotion affect the speaking style, facial expressions, body movements, and linguistic factors in social contexts, and they are affected by environmental elements. We develop a multimodal system to recognize apparent personality based on various modalities such as the face, environment, audio, and transcription features. We use modality-specific neural networks that learn to recognize the traits independently, and we obtain a final prediction of apparent personality with a feature-level fusion of these networks. We employ pre-trained deep convolutional neural networks such as ResNet and VGGish networks to extract high-level features and Long Short-Term Memory networks to integrate temporal information. We train the large model consisting of modality-specific subnetworks using a two-stage training process. We first train the subnetworks separately and then fine-tune the overall model using these trained networks. We evaluate the proposed method using the ChaLearn First Impressions V2 challenge dataset. Our approach obtains the best overall "mean accuracy" score, averaged over five personality traits, compared to the state-of-the-art.
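As a rough illustration of feature-level fusion, the sketch below concatenates per-modality feature vectors and maps the result to five trait scores with a single linear layer and a sigmoid. This is a toy stand-in, not the paper's architecture: the ResNet, VGGish, and LSTM subnetworks are replaced by precomputed vectors, and the function names, weights, and feature sizes are all hypothetical. The `mean_accuracy` helper assumes the score is one minus the mean absolute error, averaged over traits; the abstract itself does not define it.

```python
import math

TRAITS = ["openness", "conscientiousness", "extraversion",
          "agreeableness", "neuroticism"]


def fuse_features(modality_features):
    """Feature-level fusion: concatenate modality vectors in a fixed (sorted) order."""
    fused = []
    for name in sorted(modality_features):
        fused.extend(modality_features[name])
    return fused


def predict_traits(fused, weights, biases):
    """Map the fused vector to one score in (0, 1) per trait (linear layer + sigmoid)."""
    scores = {}
    for trait, row, bias in zip(TRAITS, weights, biases):
        z = sum(w * f for w, f in zip(row, fused)) + bias
        scores[trait] = 1.0 / (1.0 + math.exp(-z))
    return scores


def mean_accuracy(predicted, truth):
    """Assumed metric: 1 - |prediction - label|, averaged over the five traits."""
    errors = [abs(predicted[t] - truth[t]) for t in TRAITS]
    return 1.0 - sum(errors) / len(errors)
```

In a two-stage setup like the one the abstract describes, the per-modality vectors would come from separately trained subnetworks, and the fusion layer would then be fine-tuned jointly with them.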

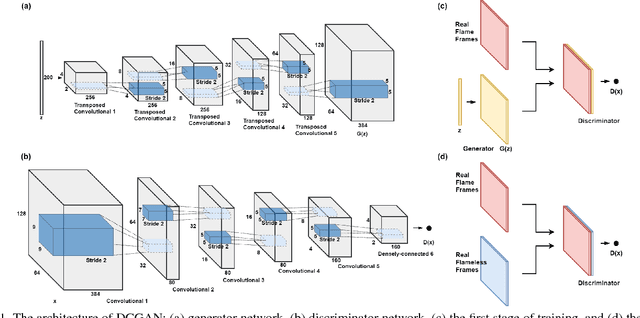

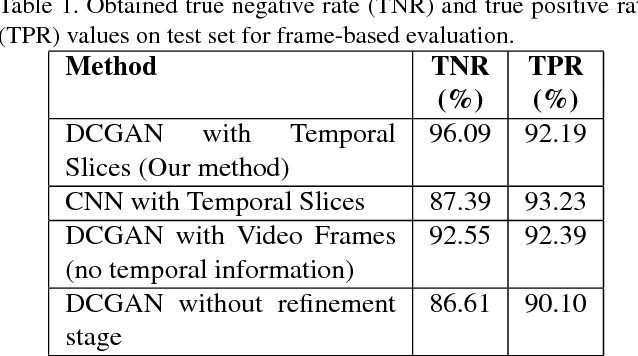

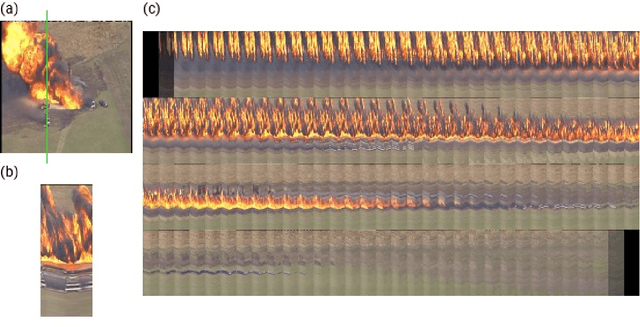

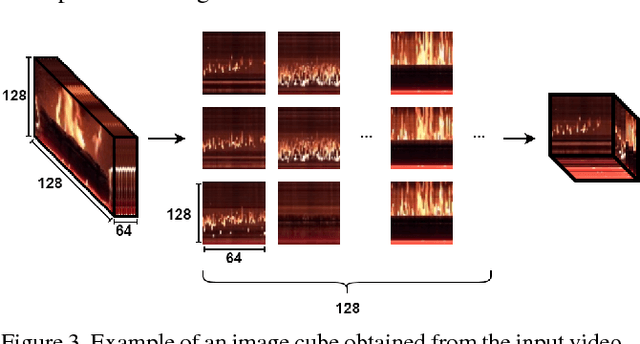

Deep Convolutional Generative Adversarial Networks Based Flame Detection in Video

Feb 05, 2019

Abstract: Real-time flame detection is crucial in video-based surveillance systems. We propose a vision-based method to detect flames using Deep Convolutional Generative Adversarial Neural Networks (DCGANs). Many existing supervised learning approaches using convolutional neural networks do not take temporal information into account and require a substantial amount of labeled data. In order to have a robust representation of sequences with and without flame, we propose a two-stage training of a DCGAN exploiting spatio-temporal flame evolution. Our training framework includes the regular training of a DCGAN with real spatio-temporal images, namely, temporal slice images, and noise vectors, and training the discriminator separately using the temporal flame images without the generator. Experimental results show that the proposed method effectively detects flame in video with negligible false positive rates in real-time.
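One plausible way to build a temporal slice image is sketched below: fix a row (or column) of pixels and stack it across consecutive frames, so that one image axis becomes time. The abstract does not specify how the slices are oriented or sampled, so the construction and the names `temporal_slice_row` and `temporal_slice_col` are assumptions for illustration only.

```python
def temporal_slice_row(frames, row):
    """Stack one fixed pixel row across frames.

    frames: list of grayscale frames, each a list of pixel rows.
    Returns an image whose t-th row is frames[t][row] (axes: time x width).
    """
    return [list(frame[row]) for frame in frames]


def temporal_slice_col(frames, col):
    """Stack one fixed pixel column across frames (axes: time x height)."""
    return [[pixel_row[col] for pixel_row in frame] for frame in frames]
```

A flickering flame would appear in such a slice as an irregular pattern along the time axis, which is the kind of spatio-temporal evolution a discriminator could learn to recognize.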
