Energy Saving Additive Neural Network


Feb 09, 2017

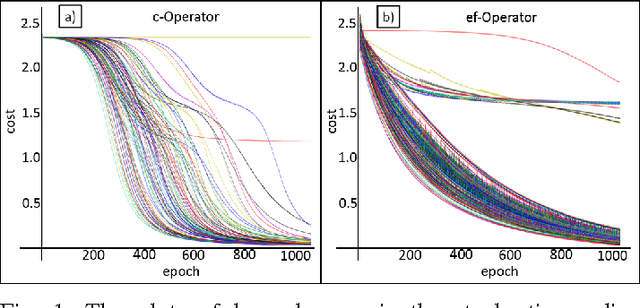

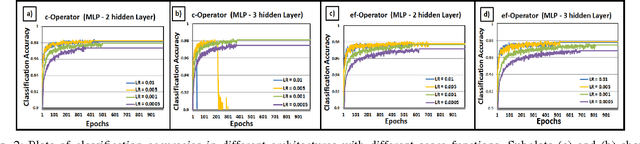

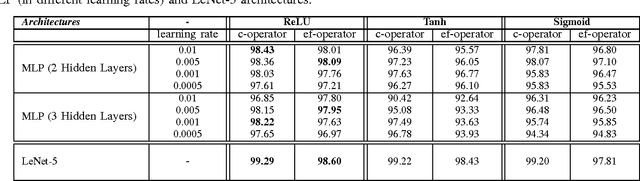

In recent years, machine learning techniques based on neural networks have become increasingly popular in mobile computing. Classical multi-layer neural networks require matrix multiplications at each stage. Multiplication is not an energy-efficient operation, and consequently it drains the battery of the mobile device. In this paper, we propose a new energy-efficient neural network with the universal approximation property over the space of Lebesgue integrable functions. This network, called an additive neural network, is well suited to mobile computing. The neural structure is based on a novel vector product definition, called the ef-operator, that permits a multiplier-free implementation. In the ef-operation, the "product" of two real numbers is defined as the sum of their absolute values, with the sign determined by the sign of the product of the numbers. This "product" is used to construct a vector product in $R^N$. The vector product induces the $l_1$ norm. The proposed additive neural network successfully solves the XOR problem. Experiments on the MNIST dataset show that the classification performance of the proposed additive neural networks is very similar to that of the corresponding multi-layer perceptron and convolutional neural networks (LeNet).
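The ef-operation described in the abstract can be illustrated with a minimal Python sketch. The function names (`sign`, `ef`, `ef_dot`, `l1_norm`) are hypothetical and do not come from the authors' code, and the sketch assumes that the sign of zero is zero, so the "product" vanishes when either operand is zero. The sign is combined here with an ordinary multiplication of values in {-1, 0, 1} purely for readability; a multiplier-free implementation would presumably combine sign bits instead.

```python
from typing import Sequence


def sign(x: float) -> int:
    """Return -1, 0, or 1 (assumption: the sign of zero is zero)."""
    return (x > 0) - (x < 0)


def ef(a: float, b: float) -> float:
    """ef 'product': sum of absolute values, signed like the ordinary product a*b."""
    return sign(a) * sign(b) * (abs(a) + abs(b))


def ef_dot(x: Sequence[float], y: Sequence[float]) -> float:
    """Vector 'product' built by summing the element-wise ef 'products'."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(ef(a, b) for a, b in zip(x, y))


def l1_norm(x: Sequence[float]) -> float:
    """Ordinary l1 norm: sum of absolute values."""
    return sum(abs(a) for a in x)


if __name__ == "__main__":
    x = [1.0, -2.0, 3.0]
    print(ef(2.0, -3.0))             # opposite signs give a negative result
    print(ef_dot(x, x), l1_norm(x))  # ef_dot(x, x) equals twice the l1 norm
```

Under these assumptions, taking the vector "product" of a vector with itself gives twice its $l_1$ norm, since each term contributes $2|x_i|$. This is one way to read the statement that the vector product induces the $l_1$ norm.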
