Zongren Zou

Learning and discovering multiple solutions using physics-informed neural networks with random initialization and deep ensemble

Mar 08, 2025

Abstract:We explore the capability of physics-informed neural networks (PINNs) to discover multiple solutions. Many real-world phenomena governed by nonlinear differential equations (DEs), such as fluid flow, exhibit multiple solutions under the same conditions, yet capturing this solution multiplicity remains a significant challenge. A key difficulty is giving appropriate initial conditions or initial guesses, to which the widely used time-marching schemes and Newton's iteration method are very sensitive in finding solutions for complex computational problems. While machine learning models, particularly PINNs, have shown promise in solving DEs, their ability to capture multiple solutions remains underexplored. In this work, we propose a simple and practical approach using PINNs to learn and discover multiple solutions. We first reveal that PINNs, when combined with random initialization and deep ensemble method -- originally developed for uncertainty quantification -- can effectively uncover multiple solutions to nonlinear ordinary and partial differential equations (ODEs/PDEs). Our approach highlights the critical role of initialization in shaping solution diversity, addressing an often-overlooked aspect of machine learning for scientific computing. Furthermore, we propose utilizing PINN-generated solutions as initial conditions or initial guesses for conventional numerical solvers to enhance accuracy and efficiency in capturing multiple solutions. Extensive numerical experiments, including the Allen-Cahn equation and cavity flow, where our approach successfully identifies both stable and unstable solutions, validate the effectiveness of our method. These findings establish a general and efficient framework for addressing solution multiplicity in nonlinear differential equations.
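As a rough illustration of why random initialization can expose solution multiplicity, the toy sketch below replaces PINN training with Newton's iteration on the scalar equation u - u^3 = 0, the spatially uniform steady states of an Allen-Cahn-type reaction term (u = ±1 stable, u = 0 unstable). This is not the method from the paper: the function names, the sampling interval [-2, 2], and the ensemble size are all assumptions chosen for the example.

```python
import random


def f(u):
    """Residual of the toy equation u - u^3 = 0."""
    return u - u**3


def df(u):
    """Derivative of the residual, used by Newton's iteration."""
    return 1.0 - 3.0 * u**2


def newton(u0, tol=1e-12, max_iter=200):
    """Run Newton's iteration from u0; return the root or None on failure."""
    u = u0
    for _ in range(max_iter):
        r = f(u)
        if abs(r) < tol:
            return u
        d = df(u)
        if d == 0.0:  # flat tangent: this ensemble member is discarded
            return None
        u -= r / d
    return None


def discover_roots(n_members=200, seed=0, digits=6):
    """Launch an 'ensemble' of Newton runs from random initial guesses
    and collect the distinct roots they converge to."""
    rng = random.Random(seed)
    found = set()
    for _ in range(n_members):
        root = newton(rng.uniform(-2.0, 2.0))  # hypothetical sampling range
        if root is not None:
            found.add(round(root, digits) + 0.0)  # "+ 0.0" folds -0.0 into 0.0
    return sorted(found)


if __name__ == "__main__":
    print(discover_roots())
```

Each run converges to whichever root its initial guess falls into the basin of, so a single run finds one solution while the ensemble as a whole recovers all three, including the unstable one.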

From PINNs to PIKANs: Recent Advances in Physics-Informed Machine Learning

Oct 17, 2024

Abstract:Physics-Informed Neural Networks (PINNs) have emerged as a key tool in Scientific Machine Learning since their introduction in 2017, enabling the efficient solution of ordinary and partial differential equations using sparse measurements. Over the past few years, significant advancements have been made in the training and optimization of PINNs, covering aspects such as network architectures, adaptive refinement, domain decomposition, and the use of adaptive weights and activation functions. A notable recent development is the Physics-Informed Kolmogorov-Arnold Networks (PIKANs), which leverage a representation model originally proposed by Kolmogorov in 1957, offering a promising alternative to traditional PINNs. In this review, we provide a comprehensive overview of the latest advancements in PINNs, focusing on improvements in network design, feature expansion, optimization techniques, uncertainty quantification, and theoretical insights. We also survey key applications across a range of fields, including biomedicine, fluid and solid mechanics, geophysics, dynamical systems, heat transfer, chemical engineering, and beyond. Finally, we review computational frameworks and software tools developed by both academia and industry to support PINN research and applications.

HJ-sampler: A Bayesian sampler for inverse problems of a stochastic process by leveraging Hamilton-Jacobi PDEs and score-based generative models

Sep 15, 2024

Abstract:The interplay between stochastic processes and optimal control has been extensively explored in the literature. With the recent surge in the use of diffusion models, stochastic processes have increasingly been applied to sample generation. This paper builds on the log transform, known as the Cole-Hopf transform in Brownian motion contexts, and extends it within a more abstract framework that includes a linear operator. Within this framework, we found that the well-known relationship between the Cole-Hopf transform and optimal transport is a particular instance where the linear operator acts as the infinitesimal generator of a stochastic process. We also introduce a novel scenario where the linear operator is the adjoint of the generator, linking to Bayesian inference under specific initial and terminal conditions. Leveraging this theoretical foundation, we develop a new algorithm, named the HJ-sampler, for Bayesian inference for the inverse problem of a stochastic differential equation with given terminal observations. The HJ-sampler involves two stages: (1) solving the viscous Hamilton-Jacobi partial differential equations, and (2) sampling from the associated stochastic optimal control problem. Our proposed algorithm naturally allows for flexibility in selecting the numerical solver for viscous HJ PDEs. We introduce two variants of the solver: the Riccati-HJ-sampler, based on the Riccati method, and the SGM-HJ-sampler, which utilizes diffusion models. We demonstrate the effectiveness and flexibility of the proposed methods by applying them to solve Bayesian inverse problems involving various stochastic processes and prior distributions, including applications that address model misspecifications and quantifying model uncertainty.

Quantification of total uncertainty in the physics-informed reconstruction of CVSim-6 physiology

Aug 13, 2024

Abstract:When predicting physical phenomena through simulation, quantification of the total uncertainty due to multiple sources is as crucial as making sure the underlying numerical model is accurate. Possible sources include irreducible aleatoric uncertainty due to noise in the data, epistemic uncertainty induced by insufficient data or inadequate parameterization, and model-form uncertainty related to the use of misspecified model equations. Physics-based regularization interacts in nontrivial ways with aleatoric, epistemic and model-form uncertainty and their combination, and a better understanding of this interaction is needed to improve the predictive performance of physics-informed digital twins that operate under real conditions. With a specific focus on biological and physiological models, this study investigates the decomposition of total uncertainty in the estimation of states and parameters of a differential system simulated with MC X-TFC, a new physics-informed approach for uncertainty quantification based on random projections and Monte-Carlo sampling. MC X-TFC is applied to a six-compartment stiff ODE system, the CVSim-6 model, developed in the context of human physiology. The system is analyzed by progressively removing data while estimating an increasing number of parameters and by investigating total uncertainty under model-form misspecification of non-linear resistance in the pulmonary compartment. In particular, we focus on the interaction between the formulation of the discrepancy term and quantification of model-form uncertainty, and show how additional physics can help in the estimation process. The method demonstrates robustness and efficiency in estimating unknown states and parameters, even with limited, sparse, and noisy data. It also offers great flexibility in integrating data with physics for improved estimation, even in cases of model misspecification.

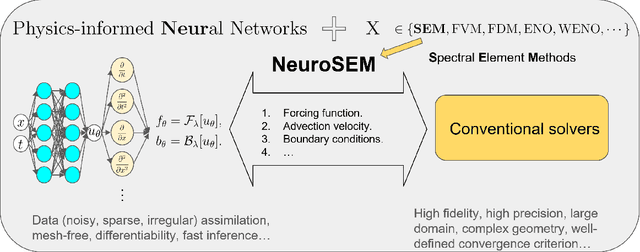

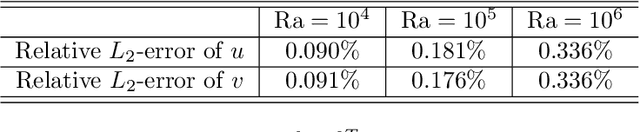

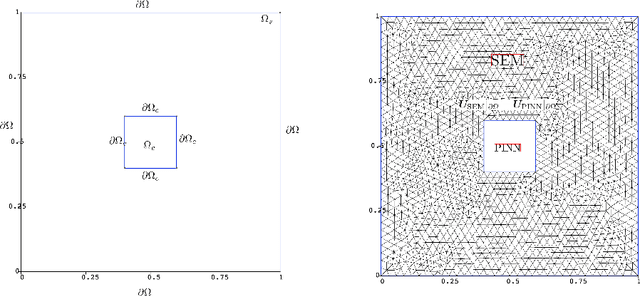

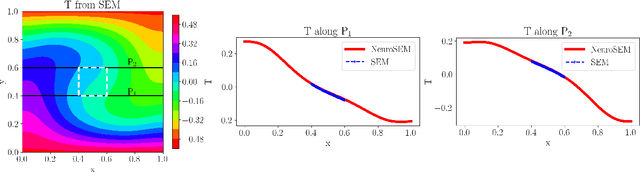

NeuroSEM: A hybrid framework for simulating multiphysics problems by coupling PINNs and spectral elements

Jul 30, 2024

Abstract:Multiphysics problems that are characterized by complex interactions among fluid dynamics, heat transfer, structural mechanics, and electromagnetics, are inherently challenging due to their coupled nature. While experimental data on certain state variables may be available, integrating these data with numerical solvers remains a significant challenge. Physics-informed neural networks (PINNs) have shown promising results in various engineering disciplines, particularly in handling noisy data and solving inverse problems. However, their effectiveness in forecasting nonlinear phenomena in multiphysics regimes is yet to be fully established. This study introduces NeuroSEM, a hybrid framework integrating PINNs with the high-fidelity Spectral Element Method (SEM) solver, Nektar++. NeuroSEM leverages strengths of both PINNs and SEM, providing robust solutions for multiphysics problems. PINNs are trained to assimilate data and model physical phenomena in specific subdomains, which are then integrated into Nektar++. We demonstrate the efficiency and accuracy of NeuroSEM for thermal convection in cavity flow and flow past a cylinder. The framework effectively handles data assimilation by addressing those subdomains and state variables where data are available. We applied NeuroSEM to the Rayleigh-Bénard convection system, including cases with missing thermal boundary conditions. Our results indicate that NeuroSEM accurately models the physical phenomena and assimilates the data within the specified subdomains. The framework's plug-and-play nature facilitates its extension to other multiphysics or multiscale problems. Furthermore, NeuroSEM is optimized for an efficient execution on emerging integrated GPU-CPU architectures. This hybrid approach enhances the accuracy and efficiency of simulations, making it a powerful tool for tackling complex engineering challenges in various scientific domains.

A comprehensive and FAIR comparison between MLP and KAN representations for differential equations and operator networks

Jun 05, 2024

Abstract:Kolmogorov-Arnold Networks (KANs) were recently introduced as an alternative representation model to MLP. Herein, we employ KANs to construct physics-informed machine learning models (PIKANs) and deep operator models (DeepOKANs) for solving differential equations for forward and inverse problems. In particular, we compare them with physics-informed neural networks (PINNs) and deep operator networks (DeepONets), which are based on the standard MLP representation. We find that although the original KANs based on the B-splines parameterization lack accuracy and efficiency, modified versions based on low-order orthogonal polynomials have comparable performance to PINNs and DeepONet although they still lack robustness as they may diverge for different random seeds or higher order orthogonal polynomials. We visualize their corresponding loss landscapes and analyze their learning dynamics using information bottleneck theory. Our study follows the FAIR principles so that other researchers can use our benchmarks to further advance this emerging topic.

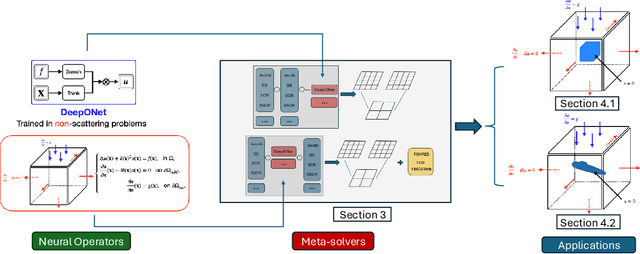

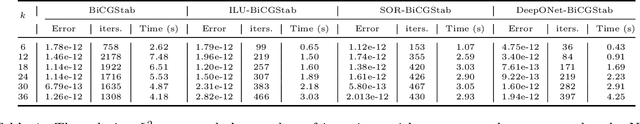

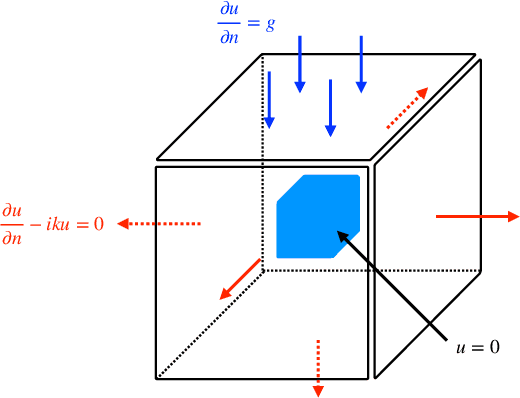

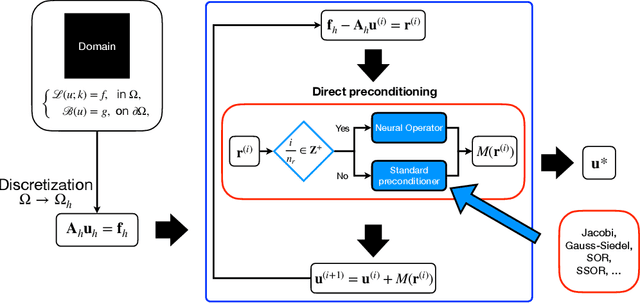

Large scale scattering using fast solvers based on neural operators

May 20, 2024

Abstract:We extend a recently proposed machine-learning-based iterative solver, i.e. the hybrid iterative transferable solver (HINTS), to solve the scattering problem described by the Helmholtz equation in an exterior domain with a complex absorbing boundary condition. The HINTS method combines neural operators (NOs) with standard iterative solvers, e.g. Jacobi and Gauss-Seidel (GS), to achieve better performance by leveraging the spectral bias of neural networks. In HINTS, some iterations of the conventional iterative method are replaced by inferences of the pre-trained NO. In this work, we employ HINTS to solve the scattering problem for both 2D and 3D problems, where the standard iterative solver fails. We consider square and triangular scatterers of various sizes in 2D, and a cube and a model submarine in 3D. We explore and illustrate the extrapolation capability of HINTS in handling diverse geometries of the scatterer, which is achieved by training the NO on non-scattering scenarios and then deploying it in HINTS to solve scattering problems. The accurate results demonstrate that the NO in HINTS method remains effective without retraining or fine-tuning it whenever a new scatterer is given. Taken together, our results highlight the adaptability and versatility of the extended HINTS methodology in addressing diverse scattering problems.

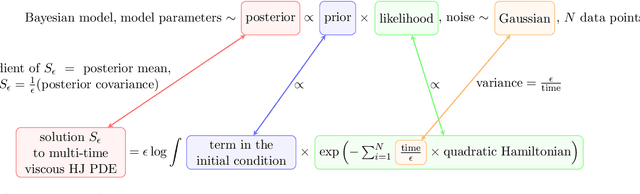

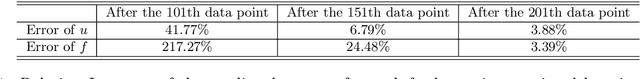

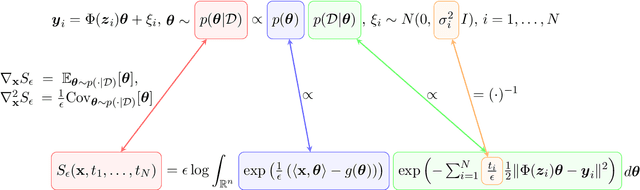

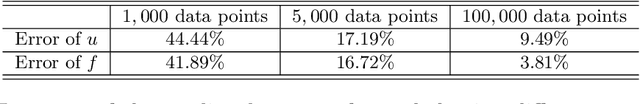

Leveraging viscous Hamilton-Jacobi PDEs for uncertainty quantification in scientific machine learning

Apr 12, 2024

Abstract:Uncertainty quantification (UQ) in scientific machine learning (SciML) combines the powerful predictive power of SciML with methods for quantifying the reliability of the learned models. However, two major challenges remain: limited interpretability and expensive training procedures. We provide a new interpretation for UQ problems by establishing a new theoretical connection between some Bayesian inference problems arising in SciML and viscous Hamilton-Jacobi partial differential equations (HJ PDEs). Namely, we show that the posterior mean and covariance can be recovered from the spatial gradient and Hessian of the solution to a viscous HJ PDE. As a first exploration of this connection, we specialize to Bayesian inference problems with linear models, Gaussian likelihoods, and Gaussian priors. In this case, the associated viscous HJ PDEs can be solved using Riccati ODEs, and we develop a new Riccati-based methodology that provides computational advantages when continuously updating the model predictions. Specifically, our Riccati-based approach can efficiently add or remove data points to the training set invariant to the order of the data and continuously tune hyperparameters. Moreover, neither update requires retraining on or access to previously incorporated data. We provide several examples from SciML involving noisy data and epistemic uncertainty to illustrate the potential advantages of our approach. In particular, this approach's amenability to data streaming applications demonstrates its potential for real-time inferences, which, in turn, allows for applications in which the predicted uncertainty is used to dynamically alter the learning process.
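The setting named in this abstract (linear model, Gaussian likelihood, Gaussian prior) admits a simple closed-form posterior, which makes the "add or remove data points without revisiting old data" property easy to see. The sketch below uses the standard information-form (precision matrix) update for Bayesian linear regression, swapped in for the paper's Riccati ODE formulation, which is not reproduced here. The class name, the zero-mean isotropic prior, and the default variances are assumptions made for the example.

```python
import numpy as np


class StreamingBayesLinReg:
    """Bayesian linear regression y = w.x + noise with a zero-mean Gaussian
    prior on w, stored in information form so points can be added or removed
    one at a time without access to previously seen data."""

    def __init__(self, dim, prior_var=1.0, noise_var=0.1):
        self.noise_var = noise_var
        # Posterior precision starts at the prior precision.
        self.precision = np.eye(dim) / prior_var
        # Precision-weighted mean (precision @ mean); zero for a zero-mean prior.
        self.shift = np.zeros(dim)

    def add(self, x, y):
        """Incorporate one observation (x, y)."""
        x = np.asarray(x, dtype=float)
        self.precision += np.outer(x, x) / self.noise_var
        self.shift += x * y / self.noise_var

    def remove(self, x, y):
        """Undo a previously incorporated observation (x, y)."""
        x = np.asarray(x, dtype=float)
        self.precision -= np.outer(x, x) / self.noise_var
        self.shift -= x * y / self.noise_var

    def posterior(self):
        """Return the posterior mean and covariance of the weights."""
        cov = np.linalg.inv(self.precision)
        return cov @ self.shift, cov


if __name__ == "__main__":
    model = StreamingBayesLinReg(dim=2)
    model.add([1.0, 0.5], 1.2)
    model.add([0.3, -1.0], -0.4)
    mean, cov = model.posterior()
    print(mean, np.diag(cov))
```

Because each observation only adds a rank-one term to the precision and a vector to the shift, the result does not depend on the order in which points arrive, and removing a point is the exact inverse of adding it.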

Uncertainty quantification for noisy inputs-outputs in physics-informed neural networks and neural operators

Nov 19, 2023

Abstract:Uncertainty quantification (UQ) in scientific machine learning (SciML) becomes increasingly critical as neural networks (NNs) are being widely adopted in addressing complex problems across various scientific disciplines. Representative SciML models are physics-informed neural networks (PINNs) and neural operators (NOs). While UQ in SciML has been increasingly investigated in recent years, very few works have focused on addressing the uncertainty caused by the noisy inputs, such as spatial-temporal coordinates in PINNs and input functions in NOs. The presence of noise in the inputs of the models can pose significantly more challenges compared to noise in the outputs of the models, primarily due to the inherent nonlinearity of most SciML algorithms. As a result, UQ for noisy inputs becomes a crucial factor for reliable and trustworthy deployment of these models in applications involving physical knowledge. To this end, we introduce a Bayesian approach to quantify uncertainty arising from noisy inputs-outputs in PINNs and NOs. We show that this approach can be seamlessly integrated into PINNs and NOs, when they are employed to encode the physical information. PINNs incorporate physics by including physics-informed terms via automatic differentiation, either in the loss function or the likelihood, and often take as input the spatial-temporal coordinate. Therefore, the present method equips PINNs with the capability to address problems where the observed coordinate is subject to noise. On the other hand, pretrained NOs are also commonly employed as equation-free surrogates in solving differential equations and Bayesian inverse problems, in which they take functions as inputs. The proposed approach enables them to handle noisy measurements for both input and output functions with UQ.

Leveraging Hamilton-Jacobi PDEs with time-dependent Hamiltonians for continual scientific machine learning

Nov 13, 2023

Abstract:We address two major challenges in scientific machine learning (SciML): interpretability and computational efficiency. We increase the interpretability of certain learning processes by establishing a new theoretical connection between optimization problems arising from SciML and a generalized Hopf formula, which represents the viscosity solution to a Hamilton-Jacobi partial differential equation (HJ PDE) with time-dependent Hamiltonian. Namely, we show that when we solve certain regularized learning problems with integral-type losses, we actually solve an optimal control problem and its associated HJ PDE with time-dependent Hamiltonian. This connection allows us to reinterpret incremental updates to learned models as the evolution of an associated HJ PDE and optimal control problem in time, where all of the previous information is intrinsically encoded in the solution to the HJ PDE. As a result, existing HJ PDE solvers and optimal control algorithms can be reused to design new efficient training approaches for SciML that naturally coincide with the continual learning framework, while avoiding catastrophic forgetting. As a first exploration of this connection, we consider the special case of linear regression and leverage our connection to develop a new Riccati-based methodology for solving these learning problems that is amenable to continual learning applications. We also provide some corresponding numerical examples that demonstrate the potential computational and memory advantages our Riccati-based approach can provide.
