Jay Pathak

Hybrid Iterative Solvers with Geometry-Aware Neural Preconditioners for Parametric PDEs

Dec 16, 2025

Abstract: The convergence behavior of classical iterative solvers for parametric partial differential equations (PDEs) is often highly sensitive to the domain and the specific discretization of the PDEs. Previously, we introduced hybrid solvers that combine classical solvers with neural operators for a specific geometry [1], but they tend to underperform on geometries not encountered during training. To address this challenge, we introduce Geo-DeepONet, a geometry-aware deep operator network that incorporates domain information extracted from finite element discretizations. Geo-DeepONet enables accurate operator learning across arbitrary unstructured meshes without requiring retraining. Building on this, we develop a class of geometry-aware hybrid preconditioned iterative solvers by coupling Geo-DeepONet with traditional methods such as relaxation schemes and Krylov subspace algorithms. Through numerical experiments on parametric PDEs posed over diverse unstructured domains, we demonstrate the enhanced robustness and efficiency of the proposed hybrid solvers for multiple real-world applications.

A domain decomposition-based autoregressive deep learning model for unsteady and nonlinear partial differential equations

Aug 27, 2024

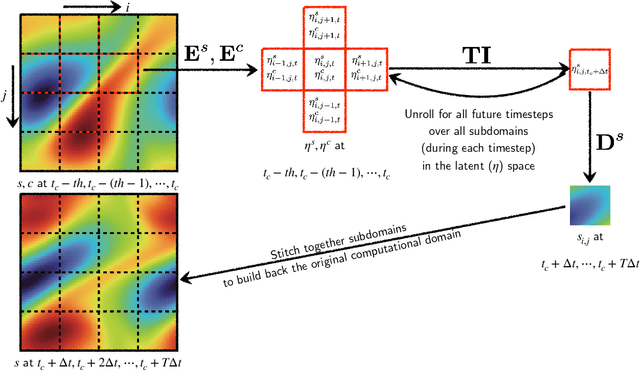

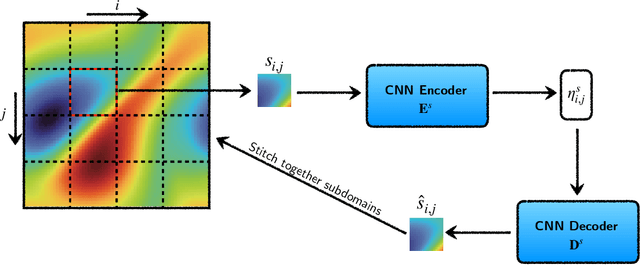

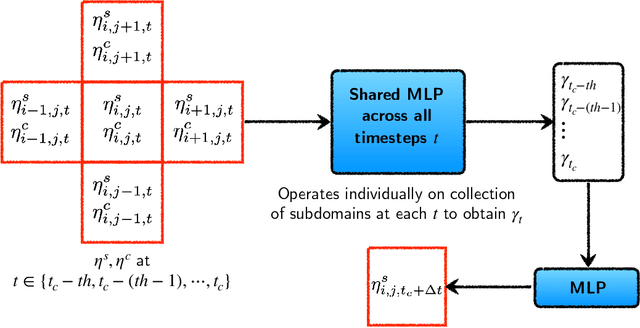

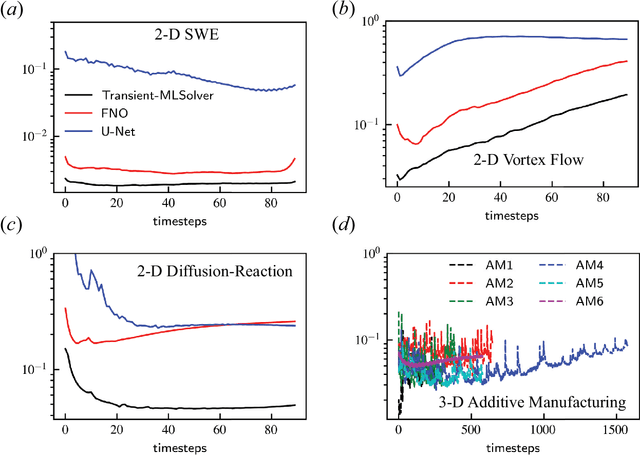

Abstract: In this paper, we propose a domain-decomposition-based deep learning (DL) framework, named transient-CoMLSim, for accurately modeling unsteady and nonlinear partial differential equations (PDEs). The framework consists of two key components: (a) a convolutional neural network (CNN)-based autoencoder architecture and (b) an autoregressive model composed of fully connected layers. Unlike existing state-of-the-art methods that operate on the entire computational domain, our CNN-based autoencoder computes a lower-dimensional basis for solution and condition fields represented on subdomains. Timestepping is performed entirely in the latent space, generating embeddings of the solution variables from the time history of embeddings of solution and condition variables. This approach not only reduces computational complexity but also enhances scalability, making it well suited for large-scale simulations. Furthermore, to improve the stability of our rollouts, we employ a curriculum learning (CL) approach during the training of the autoregressive model. The domain-decomposition strategy enables scaling to out-of-distribution domain sizes while maintaining the accuracy of predictions, a feature not easily integrated into popular DL-based approaches for physics simulations. We benchmark our model against two widely used DL architectures, Fourier Neural Operator (FNO) and U-Net, and demonstrate that our framework outperforms them in terms of accuracy, extrapolation to unseen timesteps, and stability for a wide range of use cases.

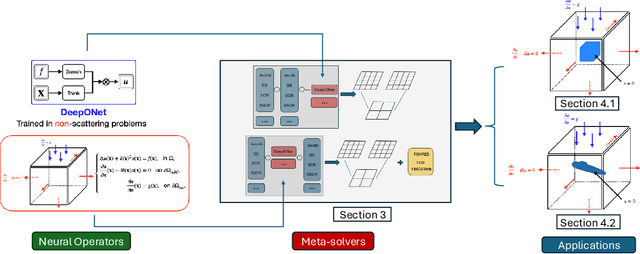

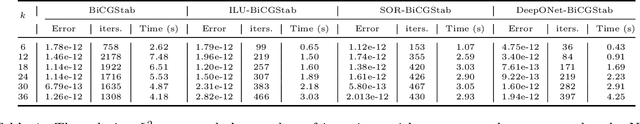

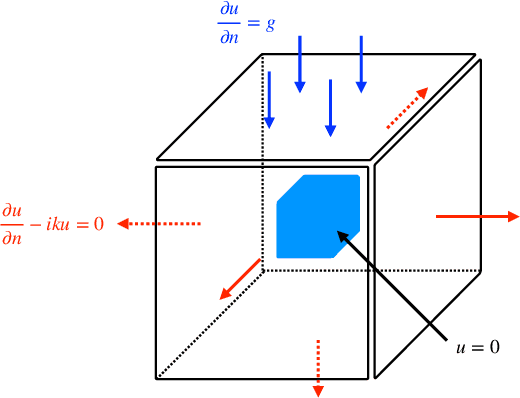

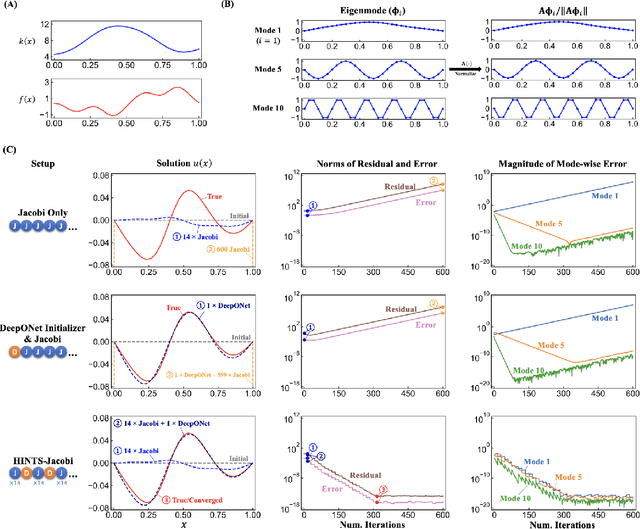

Large scale scattering using fast solvers based on neural operators

May 20, 2024

Abstract: We extend a recently proposed machine-learning-based iterative solver, the hybrid iterative numerical transferable solver (HINTS), to solve the scattering problem described by the Helmholtz equation in an exterior domain with a complex absorbing boundary condition. The HINTS method combines neural operators (NOs) with standard iterative solvers, e.g. Jacobi and Gauss-Seidel (GS), to achieve better performance by leveraging the spectral bias of neural networks. In HINTS, some iterations of the conventional iterative method are replaced by inferences of the pre-trained NO. In this work, we employ HINTS to solve the scattering problem in both 2D and 3D, where the standard iterative solver fails. We consider square and triangular scatterers of various sizes in 2D, and a cube and a model submarine in 3D. We explore and illustrate the extrapolation capability of HINTS in handling diverse scatterer geometries, which is achieved by training the NO on non-scattering scenarios and then deploying it in HINTS to solve scattering problems. The accurate results demonstrate that the NO in the HINTS method remains effective without retraining or fine-tuning whenever a new scatterer is given. Taken together, our results highlight the adaptability and versatility of the extended HINTS methodology in addressing diverse scattering problems.

Diffusion model based data generation for partial differential equations

Jun 19, 2023

Abstract: In a preliminary attempt to address the problem of data scarcity in physics-based machine learning, we introduce a novel methodology for data generation in physics-based simulations. Our motivation is to overcome the limitations posed by the limited availability of numerical data. To achieve this, we leverage a diffusion model that allows us to generate synthetic data samples and test them for two canonical cases: (a) the steady 2-D Poisson equation, and (b) the forced unsteady 2-D Navier-Stokes (NS) vorticity-transport equation in a confined box. By comparing the generated data samples against outputs from classical solvers, we assess their accuracy and examine their adherence to the underlying physical laws. In this way, we emphasize the importance of not only satisfying visual and statistical comparisons with solver data but also ensuring the generated data's conformity to physical laws, thus enabling their effective use in downstream tasks.

NLP Inspired Training Mechanics For Modeling Transient Dynamics

Nov 04, 2022

Abstract: In recent years, machine learning (ML) techniques developed for Natural Language Processing (NLP) have permeated the development of better computer vision algorithms. In this work, we use such NLP-inspired techniques to improve the accuracy, robustness, and generalizability of ML models for simulating transient dynamics. We introduce training mechanics based on teacher forcing and curriculum learning to model vortical flows, and show an enhancement in accuracy of more than 50% for ML models such as FNO and UNet.
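As a rough illustration of how teacher forcing and a curriculum can be combined when unrolling a time-stepping model, the sketch below decays the probability of feeding ground truth back into the model as training proceeds. It is not the authors' training procedure: the linear schedule, the function names `teacher_forcing_ratio` and `rollout`, and the toy `step_fn` are all assumptions made for illustration.

```python
import numpy as np

def teacher_forcing_ratio(epoch, n_epochs):
    """Hypothetical linear curriculum: start at 1.0 (always feed ground
    truth) and decay to 0.0 (fully autoregressive) by the last epoch."""
    return max(0.0, 1.0 - epoch / max(1, n_epochs - 1))

def rollout(step_fn, truth, ratio, rng):
    """Unroll a one-step model over a trajectory.

    step_fn : maps the state at time t to a prediction for time t + 1
              (a stand-in for a trained network such as FNO or UNet).
    truth   : array of shape (T, ...) holding the reference trajectory.
    ratio   : probability of feeding the ground-truth state, rather than
              the model's own prediction, as the next input.
    Returns the stacked predictions for times 1 .. T-1.
    """
    preds = []
    prev = truth[0]
    for t in range(1, len(truth)):
        pred = step_fn(prev)
        preds.append(pred)
        # Teacher forcing: sometimes reset the input to the true state.
        prev = truth[t] if rng.random() < ratio else pred
    return np.stack(preds)

# Toy usage: the "model" halves its input at every step.
rng = np.random.default_rng(0)
truth = np.array([[1.0], [2.0], [4.0]])
step_fn = lambda u: 0.5 * u
forced = rollout(step_fn, truth, ratio=1.0, rng=rng)  # always sees truth
free = rollout(step_fn, truth, ratio=0.0, rng=rng)    # sees only itself
```

With `ratio=1.0` every step starts from the reference state, so errors cannot accumulate; with `ratio=0.0` the rollout is fully autoregressive, which is the regime the model faces at inference time.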

A composable machine-learning approach for steady-state simulations on high-resolution grids

Oct 11, 2022

Abstract: In this paper we show that our Machine Learning (ML) approach, CoMLSim (Composable Machine Learning Simulator), can simulate PDEs on highly resolved grids with higher accuracy and better generalization to out-of-distribution source terms and geometries than traditional ML baselines. Our approach combines key principles of traditional PDE solvers with local-learning and low-dimensional manifold techniques to iteratively simulate PDEs on large computational domains. The proposed approach is validated on more than 5 steady-state PDEs across different PDE conditions on highly resolved grids, and comparisons are made with the commercial solver Ansys Fluent as well as 4 other state-of-the-art ML methods. The numerical experiments show that our approach outperforms ML baselines in terms of 1) accuracy across quantitative metrics and 2) generalization to out-of-distribution conditions as well as domain sizes. Additionally, we provide results for a large number of ablation experiments conducted to highlight the components of our approach that strongly influence the results. We conclude that our local-learning and iterative-inferencing approach reduces the challenge of generalization that most ML models face.

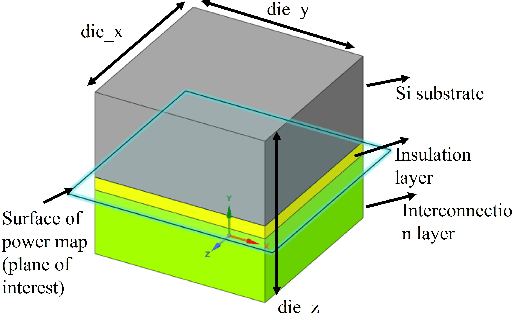

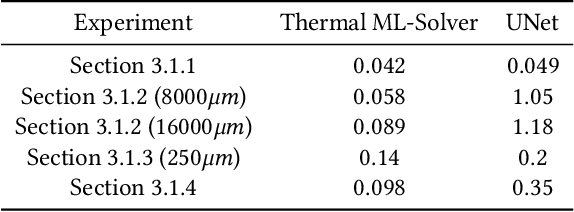

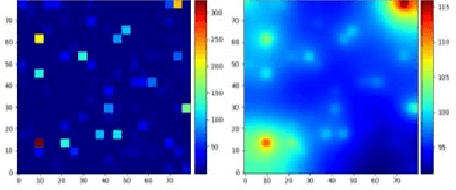

A Thermal Machine Learning Solver For Chip Simulation

Sep 10, 2022

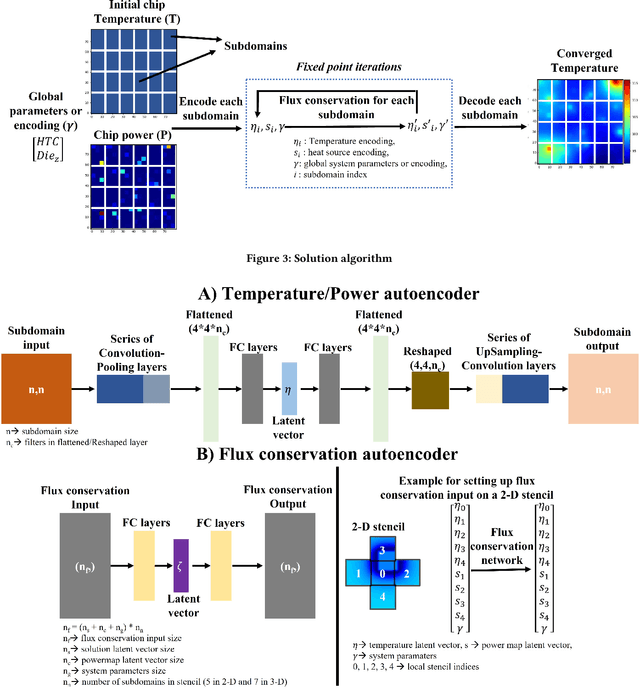

Abstract: Thermal analysis provides deeper insight into the behavior of electronic chips under different temperature scenarios and enables faster design exploration. However, obtaining a detailed and accurate on-chip thermal profile using FEM or CFD is very time-consuming. Therefore, there is an urgent need to speed up the on-chip thermal solution to address various system scenarios. In this paper, we propose a thermal machine-learning (ML) solver to speed up thermal simulations of chips. The thermal ML-Solver is an extension of the recent approach CoAEMLSim (Composable Autoencoder Machine Learning Simulator), with modifications to the solution algorithm to handle constant and distributed heat transfer coefficients (HTC). The proposed method is validated against commercial solvers, such as Ansys MAPDL, as well as a recent ML baseline, UNet, under different scenarios to demonstrate its enhanced accuracy, scalability, and generalizability.

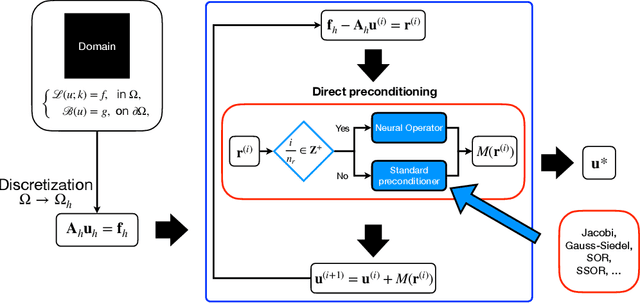

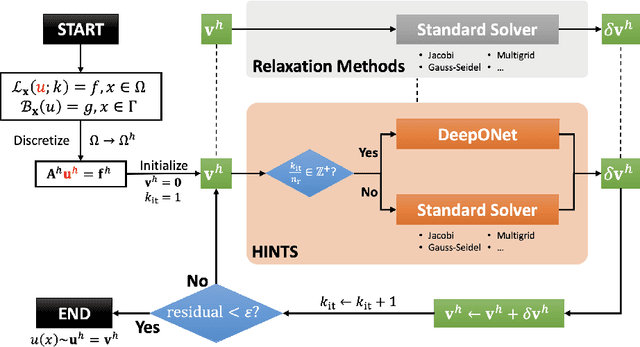

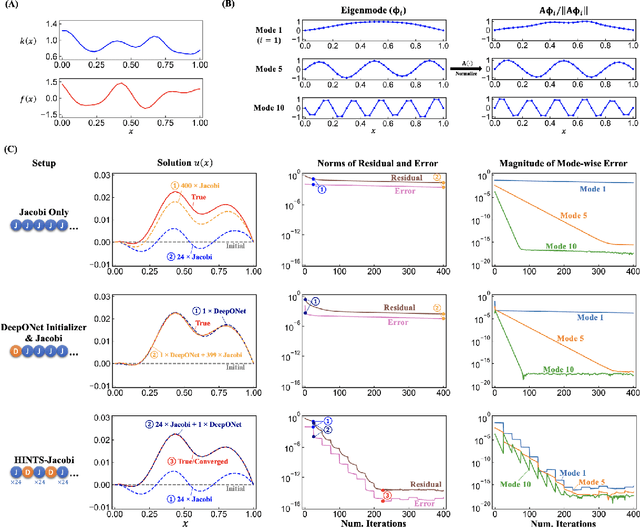

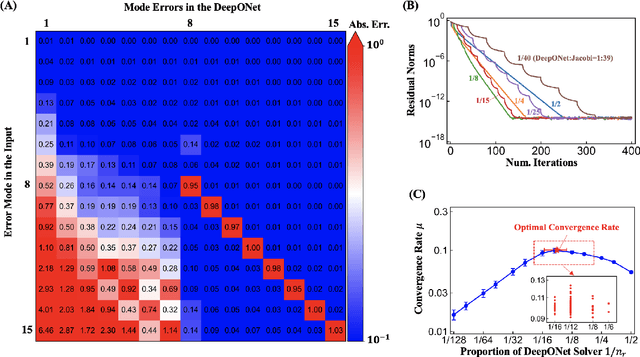

A Hybrid Iterative Numerical Transferable Solver (HINTS) for PDEs Based on Deep Operator Network and Relaxation Methods

Aug 28, 2022

Abstract: Iterative solvers of linear systems are a key component of the numerical solution of partial differential equations (PDEs). While classical methods such as Jacobi, Gauss-Seidel, conjugate gradient, multigrid, and their more advanced variants have been studied intensively over the past decades, there is still a pressing need to develop faster, more robust, and more reliable solvers. Based on recent advances in scientific deep learning for operator regression, we propose HINTS, a hybrid, iterative, numerical, and transferable solver for differential equations. HINTS combines standard relaxation methods and the Deep Operator Network (DeepONet). Compared to standard numerical solvers, HINTS is capable of providing faster solutions for a wide class of differential equations, while preserving accuracy close to machine zero. Through an eigenmode analysis, we find that the individual solvers in HINTS target distinct regions in the spectrum of eigenmodes, resulting in a uniform convergence rate and hence exceptional performance of the hybrid solver overall. Moreover, HINTS applies to equations in multiple dimensions, is flexible with regard to the computational domain, and is transferable to different discretizations.
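The idea of interleaving a relaxation method with an operator that targets a different part of the spectrum can be illustrated with a minimal sketch. It is not the authors' implementation: the trained DeepONet is replaced by a hypothetical stand-in, `low_mode_corrector`, that approximately inverts the 1D Poisson matrix on its lowest sine eigenmodes only, and every name and parameter value here (`period`, `omega`, `accuracy`, `n_modes`) is an assumption chosen for illustration.

```python
import numpy as np

def poisson_matrix(n):
    """1D Poisson matrix with Dirichlet boundaries on n interior points."""
    h = 1.0 / (n + 1)
    return (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2

def low_mode_corrector(n, n_modes, accuracy=0.9):
    """Stand-in for a pre-trained neural operator (NOT a DeepONet).

    Maps a residual r to an approximate error correction, but only on the
    n_modes lowest sine eigenmodes of the Poisson matrix. `accuracy` < 1
    mimics an imperfect learned operator.
    """
    h = 1.0 / (n + 1)
    j = np.arange(1, n + 1)
    k = np.arange(1, n_modes + 1)
    # Orthonormal eigenvectors and matching eigenvalues of poisson_matrix(n).
    V = np.sqrt(2.0 * h) * np.sin(np.pi * np.outer(j, k) * h)
    lam = (2.0 - 2.0 * np.cos(np.pi * k * h)) / h**2
    return lambda r: accuracy * (V @ ((V.T @ r) / lam))

def solve(A, b, corrector=None, period=10, omega=2.0 / 3.0,
          tol=1e-8, max_iter=100_000):
    """Damped Jacobi; every `period`-th iteration is replaced by the
    corrector when one is supplied. Returns (solution, iterations used)."""
    x = np.zeros_like(b)
    d = np.diag(A)
    b_norm = np.linalg.norm(b)
    for it in range(1, max_iter + 1):
        r = b - A @ x
        if np.linalg.norm(r) <= tol * b_norm:
            return x, it - 1
        if corrector is not None and it % period == 0:
            x = x + corrector(r)      # operator step: low-frequency error
        else:
            x = x + omega * r / d     # Jacobi step: high-frequency error
    return x, max_iter

n = 63
A = poisson_matrix(n)
b = np.random.default_rng(0).standard_normal(n)

x_jacobi, it_jacobi = solve(A, b)
x_hybrid, it_hybrid = solve(A, b, corrector=low_mode_corrector(n, n_modes=8))
print(f"Jacobi iterations: {it_jacobi}, hybrid iterations: {it_hybrid}")
```

In this toy setting, damped Jacobi removes high-frequency error quickly while the stand-in removes low-frequency error, which is the kind of complementary behavior the abstract attributes to the eigenmode analysis.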

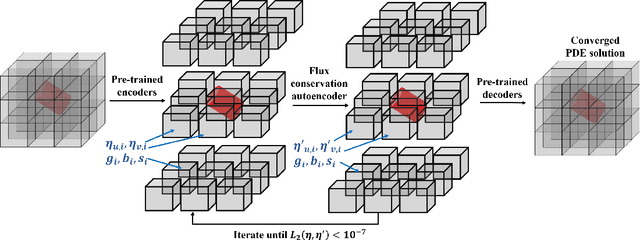

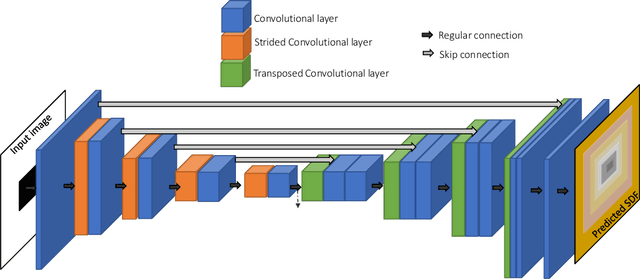

A composable autoencoder-based iterative algorithm for accelerating numerical simulations

Oct 07, 2021

Abstract: Numerical simulations for engineering applications solve partial differential equations (PDEs) to model various physical processes. Traditional PDE solvers are very accurate but computationally costly. On the other hand, Machine Learning (ML) methods offer a significant computational speedup but face challenges with accuracy and generalization to different PDE conditions, such as geometry, boundary conditions, initial conditions, and PDE source terms. In this work, we propose a novel ML-based approach, CoAE-MLSim (Composable AutoEncoder Machine Learning Simulation), which is an unsupervised, lower-dimensional, local method motivated by key ideas used in commercial PDE solvers. This allows our approach to learn better with relatively fewer samples of PDE solutions. The proposed ML approach is compared against commercial solvers for better benchmarks, as well as against the latest ML approaches for solving PDEs. It is tested on a variety of complex engineering cases to demonstrate its computational speed, accuracy, scalability, and generalization across different PDE conditions. The results show that our approach captures physics accurately across all metrics of comparison (including measures such as results on section cuts and lines).

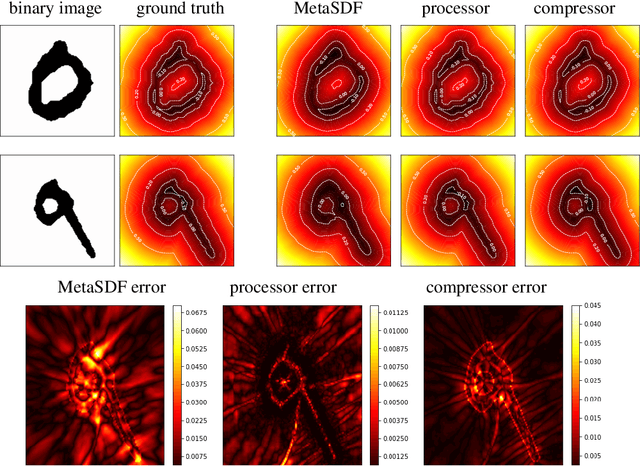

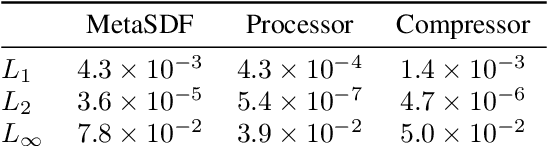

Geometry encoding for numerical simulations

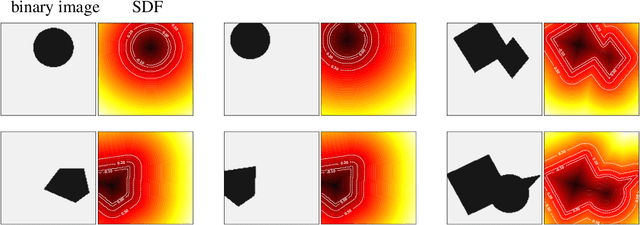

Apr 15, 2021

Abstract: We present a notion of geometry encoding suitable for machine learning-based numerical simulation. In particular, we delineate how this notion of encoding differs from other encoding algorithms commonly used in disciplines such as computer vision and computer graphics. We also present a model composed of multiple neural networks, including a processor, a compressor, and an evaluator. Each of these parts satisfies a particular requirement of our encoding. We compare our encoding model with the analogous models in the literature.
