Zhilun Zhou

ResMAS: Resilience Optimization in LLM-based Multi-agent Systems

Jan 08, 2026

Abstract:Large Language Model-based Multi-Agent Systems (LLM-based MAS), where multiple LLM agents collaborate to solve complex tasks, have shown impressive performance in many areas. However, MAS are typically distributed across different devices or environments, making them vulnerable to perturbations such as agent failures. While existing works have studied adversarial attacks and corresponding defense strategies, they mainly focus on reactively detecting and mitigating attacks after they occur rather than proactively designing inherently resilient systems. In this work, we study the resilience of LLM-based MAS under perturbations and find that both the communication topology and prompt design significantly influence system resilience. Motivated by these findings, we propose ResMAS: a two-stage framework for enhancing MAS resilience. First, we train a reward model to predict the MAS's resilience, based on which we train a topology generator to automatically design resilient topologies for specific tasks through reinforcement learning. Second, we introduce a topology-aware prompt optimization method that refines each agent's prompt based on its connections and interactions with other agents. Extensive experiments across a range of tasks show that our approach substantially improves MAS resilience under various constraints. Moreover, our framework demonstrates strong generalization ability to new tasks and models, highlighting its potential for building resilient MASs.

Rationality Check! Benchmarking the Rationality of Large Language Models

Sep 18, 2025

Abstract:Large language models (LLMs), a recent advance in deep learning and machine intelligence, have demonstrated astonishing capabilities and are now considered among the most promising paths toward artificial general intelligence. With human-like capabilities, LLMs have been used to simulate humans and serve as AI assistants across many applications. As a result, great concern has arisen about whether and under what circumstances LLMs think and behave like real human agents. Rationality is among the most important concepts in assessing human behavior, both in thinking (i.e., theoretical rationality) and in taking action (i.e., practical rationality). In this work, we propose the first benchmark for evaluating the omnibus rationality of LLMs, covering a wide range of domains and LLMs. The benchmark includes an easy-to-use toolkit, extensive experimental results, and analysis that illuminates where LLMs converge with and diverge from idealized human rationality. We believe the benchmark can serve as a foundational tool for both developers and users of LLMs.

A Survey of Large Language Model-Powered Spatial Intelligence Across Scales: Advances in Embodied Agents, Smart Cities, and Earth Science

Apr 14, 2025

Abstract:Over the past year, the development of large language models (LLMs) has brought spatial intelligence into focus, with much attention on vision-based embodied intelligence. However, spatial intelligence spans a broader range of disciplines and scales, from navigation and urban planning to remote sensing and earth science. What are the differences and connections between spatial intelligence across these fields? In this paper, we first review human spatial cognition and its implications for spatial intelligence in LLMs. We then examine spatial memory, knowledge representations, and abstract reasoning in LLMs, highlighting their roles and connections. Finally, we analyze spatial intelligence across scales -- from embodied to urban and global levels -- following a framework that progresses from spatial memory and understanding to spatial reasoning and intelligence. Through this survey, we aim to provide insights into interdisciplinary spatial intelligence research and inspire future studies.

Synergizing LLM Agents and Knowledge Graph for Socioeconomic Prediction in LBSN

Oct 29, 2024

Abstract:The fast development of location-based social networks (LBSNs) has led to significant changes in society and has made LBSN data a popular basis for socioeconomic prediction, e.g., regional population and commercial activity estimation. Existing studies design various graphs to model heterogeneous LBSN data, and further apply graph representation learning methods for socioeconomic prediction. However, these approaches rely heavily on heuristic ideas and expertise to extract task-relevant knowledge from diverse data, which may not be optimal for specific tasks. Additionally, they tend to overlook the inherent relationships between different indicators, limiting the prediction accuracy. Motivated by the remarkable abilities of large language models (LLMs) in commonsense reasoning, embedding, and multi-agent collaboration, in this work, we synergize LLM agents and a knowledge graph for socioeconomic prediction. We first construct a location-based knowledge graph (LBKG) to integrate multi-sourced LBSN data. Then we leverage the reasoning power of LLM agents to identify relevant meta-paths in the LBKG for each type of socioeconomic prediction task, and design a semantic-guided attention module for knowledge fusion with meta-paths. Moreover, we introduce a cross-task communication mechanism to further enhance performance by enabling knowledge sharing across tasks at both the LLM agent and KG levels. On the one hand, the LLM agents for different tasks collaborate to generate more diverse and comprehensive meta-paths. On the other hand, the embeddings from different tasks are adaptively merged for better socioeconomic prediction. Experiments on two datasets demonstrate the effectiveness of the synergistic design between LLM and KG, providing insights for information sharing across socioeconomic prediction tasks.

Large Language Model for Participatory Urban Planning

Feb 27, 2024

Abstract:Participatory urban planning is the mainstream of modern urban planning and involves the active engagement of residents. However, the traditional participatory paradigm requires experienced planning experts and is often time-consuming and costly. Fortunately, the emerging Large Language Models (LLMs) have shown considerable ability to simulate human-like agents, which can be used to emulate the participatory process easily. In this work, we introduce an LLM-based multi-agent collaboration framework for participatory urban planning, which can generate land-use plans for urban regions considering the diverse needs of residents. Specifically, we construct LLM agents to simulate a planner and thousands of residents with diverse profiles and backgrounds. We first ask the planner to draft an initial land-use plan. To deal with the differing facility needs of residents, we initiate a discussion among the residents in each community about the plan, where residents provide feedback based on their profiles. Furthermore, to improve the efficiency of discussion, we adopt a fishbowl discussion mechanism, where some of the residents discuss and the rest act as listeners in each round. Finally, we let the planner modify the plan based on residents' feedback. We deploy our method on two real-world regions in Beijing. Experiments show that our method achieves state-of-the-art performance in resident satisfaction and inclusion metrics, and also outperforms human experts in terms of service accessibility and ecology metrics.
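
The fishbowl mechanism mentioned in the abstract can be illustrated with a minimal scheduling sketch. The abstract does not say how speakers are chosen, so the rotation rule here is an assumption, and the function name `fishbowl_rounds` and its parameters are hypothetical. The sketch only shows how each round splits residents into a small speaking group and a listening group.

```python
import random
from typing import List, Tuple


def fishbowl_rounds(
    residents: List[str],
    speakers_per_round: int,
    num_rounds: int,
    seed: int = 0,
) -> List[Tuple[List[str], List[str]]]:
    """Return a (speakers, listeners) split for each discussion round.

    Assumption (not from the paper): residents are shuffled once and the
    speaking window rotates through that order round by round.
    """
    n = len(residents)
    if not 0 < speakers_per_round <= n:
        raise ValueError("speakers_per_round must be between 1 and len(residents)")

    order = list(residents)
    random.Random(seed).shuffle(order)  # fixed seed keeps the schedule reproducible

    schedule = []
    for r in range(num_rounds):
        start = (r * speakers_per_round) % n
        speaker_idx = [(start + i) % n for i in range(speakers_per_round)]
        chosen = set(speaker_idx)
        speakers = [order[i] for i in speaker_idx]
        listeners = [order[i] for i in range(n) if i not in chosen]
        schedule.append((speakers, listeners))
    return schedule


if __name__ == "__main__":
    demo = [f"resident_{i}" for i in range(10)]
    for round_no, (speakers, listeners) in enumerate(fishbowl_rounds(demo, 3, 4)):
        print(round_no, "speakers:", speakers, "| listeners:", len(listeners))
```

In a full system, each speaker would be an LLM agent producing feedback from its profile, while listeners only receive the round's transcript. Those parts are omitted because the abstract gives no detail on them.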

Large language model empowered participatory urban planning

Jan 24, 2024

Abstract:Participatory urban planning is the mainstream of modern urban planning and involves the active engagement of different stakeholders. However, the traditional participatory paradigm is costly in time and manpower, while generative planning tools fail to provide adjustable and inclusive solutions. This research introduces an innovative urban planning approach integrating Large Language Models (LLMs) within the participatory process. The framework, based on the crafted LLM agent, consists of role-play, collaborative generation, and feedback iteration, solving a community-level land-use task catering to 1000 distinct interests. Empirical experiments in diverse urban communities demonstrate the LLM's adaptability and effectiveness across varied planning scenarios. The results were evaluated on four metrics, surpassing human experts in satisfaction and inclusion, and rivaling state-of-the-art reinforcement learning methods in service and ecology. Further analysis shows the advantage of LLM agents in providing adjustable and inclusive solutions with natural language reasoning and strong scalability. While implementing the recent advancements in emulating human behavior for planning, this work envisions both planners and citizens benefiting from low-cost, efficient LLM agents, which is crucial for enhancing participation and realizing participatory urban planning.

Large Language Models Empowered Agent-based Modeling and Simulation: A Survey and Perspectives

Dec 19, 2023

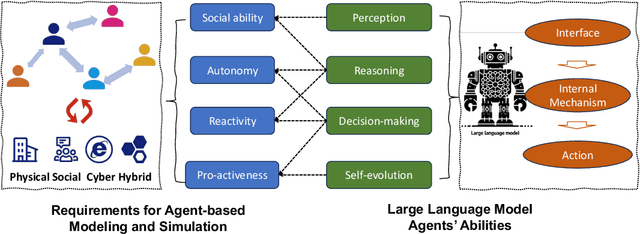

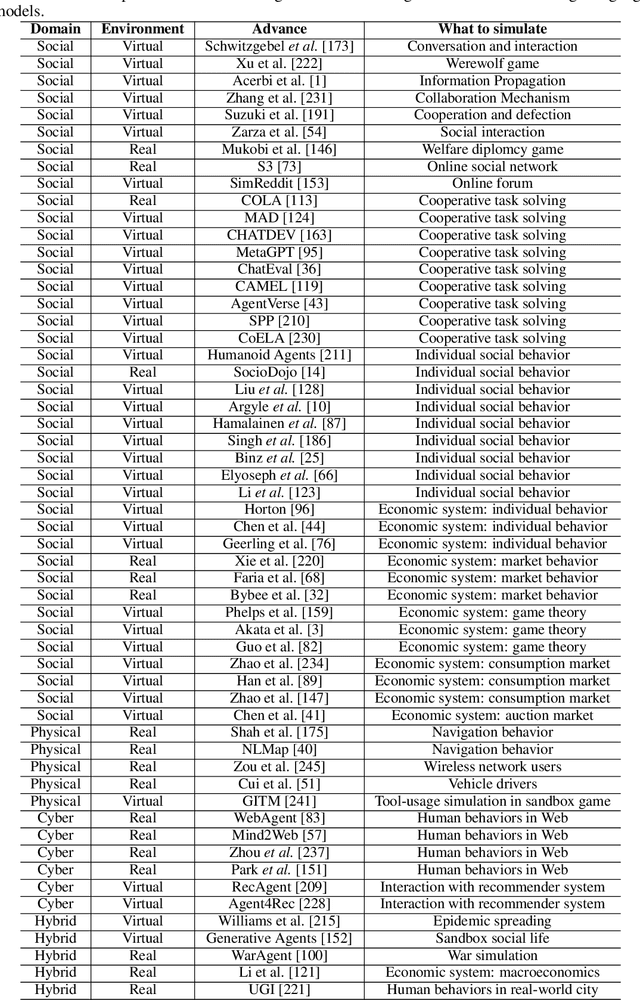

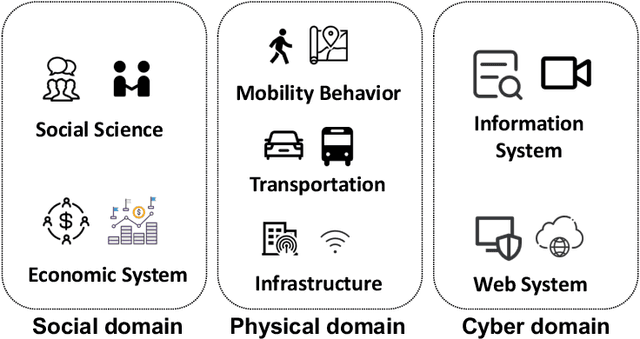

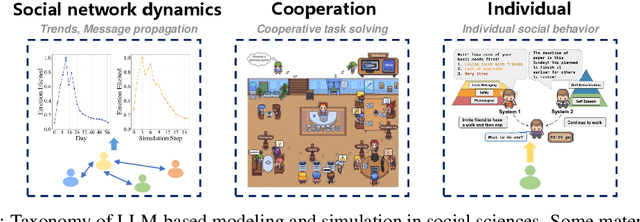

Abstract:Agent-based modeling and simulation has evolved as a powerful tool for modeling complex systems, offering insights into emergent behaviors and interactions among diverse agents. Integrating large language models into agent-based modeling and simulation presents a promising avenue for enhancing simulation capabilities. This paper surveys the landscape of utilizing large language models in agent-based modeling and simulation, examining their challenges and promising future directions. In this survey, since this is an interdisciplinary field, we first introduce the background of agent-based modeling and simulation and large language model-empowered agents. We then discuss the motivation for applying large language models to agent-based simulation and systematically analyze the challenges in environment perception, human alignment, action generation, and evaluation. Most importantly, we provide a comprehensive overview of the recent works of large language model-empowered agent-based modeling and simulation in multiple scenarios, which can be divided into four domains: cyber, physical, social, and hybrid, covering simulation of both real-world and virtual environments. Finally, since this area is new and quickly evolving, we discuss the open problems and promising future directions.

Towards Generative Modeling of Urban Flow through Knowledge-enhanced Denoising Diffusion

Sep 19, 2023

Abstract:Although generative AI has been successful in many areas, its ability to model geospatial data is still underexplored. Urban flow, a typical kind of geospatial data, is critical for a wide range of urban applications. Existing studies mostly focus on predictive modeling of urban flow that predicts the future flow based on historical flow data, which may be unavailable in data-sparse areas or newly planned regions. Some other studies aim to predict OD flow among regions, but they fail to model dynamic changes of urban flow over time. In this work, we study a new problem of urban flow generation that generates dynamic urban flow for regions without historical flow data. To capture the effect of multiple factors on urban flow, such as region features and urban environment, we employ a diffusion model to generate urban flow for regions under different conditions. We first construct an urban knowledge graph (UKG) to model the urban environment and relationships between regions, based on which we design a knowledge-enhanced spatio-temporal diffusion model (KSTDiff) to generate urban flow for each region. Specifically, to accurately generate urban flow for regions with different flow volumes, we design a novel diffusion process guided by a volume estimator, which is learnable and customized for each region. Moreover, we propose a knowledge-enhanced denoising network to capture the spatio-temporal dependencies of urban flow as well as the impact of urban environment in the denoising process. Extensive experiments on four real-world datasets validate the superiority of our model over state-of-the-art baselines in urban flow generation. Further in-depth studies demonstrate the utility of generated urban flow data and the ability of our model for long-term flow generation and urban flow prediction. Our code is released at: https://github.com/tsinghua-fib-lab/KSTDiff-Urban-flow-generation.
