Jingtao Ding

Inferring Network Evolutionary History via Structure-State Coupled Learning

Jan 05, 2026

Abstract: Inferring a network's evolutionary history from a single final snapshot with limited temporal annotations is fundamental yet challenging. Existing approaches predominantly rely on topology alone, which often provides insufficient and noisy cues. This paper leverages network steady-state dynamics -- converged node states under a given dynamical process -- as an additional and widely accessible observation for network evolution history inference. We propose CS$^2$, which explicitly models structure-state coupling to capture how topology modulates steady states and how the two signals jointly improve edge discrimination for formation-order recovery. Experiments on six real temporal networks, evaluated under multiple dynamical processes, show that CS$^2$ consistently outperforms strong baselines, improving pairwise edge precedence accuracy by 4.0% on average and global ordering consistency (Spearman-$ρ$) by 7.7% on average. CS$^2$ also more faithfully recovers macroscopic evolution trajectories such as clustering formation, degree heterogeneity, and hub growth. Moreover, a steady-state-only variant remains competitive when reliable topology is limited, highlighting steady states as an independent signal for evolution inference.
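The two evaluation metrics named in this abstract, pairwise edge precedence accuracy and Spearman's rank correlation, can be illustrated with a minimal sketch. The function names, the tie handling, and the toy data are assumptions made for illustration; the paper's exact evaluation protocol is not given in the abstract.

```python
from itertools import combinations


def pairwise_precedence_accuracy(true_time, pred_score):
    """Fraction of edge pairs whose relative order is predicted correctly.

    Pairs with tied true times are skipped; a tied prediction counts as wrong.
    (Both conventions are assumptions of this sketch.)
    """
    correct = total = 0
    for i, j in combinations(range(len(true_time)), 2):
        dt = true_time[i] - true_time[j]
        if dt == 0:
            continue
        total += 1
        if dt * (pred_score[i] - pred_score[j]) > 0:
            correct += 1
    return correct / total if total else 0.0


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation, computed as the Pearson correlation of ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        return float("nan")  # undefined when one ranking is constant
    return cov / (vx * vy) ** 0.5


# Toy example: four edges with known formation times and predicted scores.
true_time = [1, 2, 3, 4]
pred_score = [0.1, 0.4, 0.3, 0.9]
accuracy = pairwise_precedence_accuracy(true_time, pred_score)
rho = spearman_rho(true_time, pred_score)
```

In the toy example one of the six edge pairs is ordered incorrectly, so the pairwise accuracy is 5/6 while the rank correlation is 0.8.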

PID-controlled Langevin Dynamics for Faster Sampling of Generative Models

Nov 16, 2025

Abstract: Langevin dynamics sampling suffers from extremely low generation speed, fundamentally limited by numerous fine-grained iterations to converge to the target distribution. We introduce PID-controlled Langevin Dynamics (PIDLD), a novel sampling acceleration algorithm that reinterprets the sampling process using control-theoretic principles. By treating energy gradients as feedback signals, PIDLD combines historical gradients (the integral term) and gradient trends (the derivative term) to efficiently traverse energy landscapes and adaptively stabilize, thereby significantly reducing the number of iterations required to produce high-quality samples. Our approach requires no additional training, datasets, or prior information, making it immediately integrable with any Langevin-based method. Extensive experiments across image generation and reasoning tasks demonstrate that PIDLD achieves higher quality with fewer steps, making Langevin-based generative models more practical for efficiency-critical applications. The implementation can be found at https://github.com/tsinghua-fib-lab/PIDLD.
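To make the idea of proportional, integral, and derivative terms on an energy gradient concrete, here is a minimal one-dimensional sketch. It is not the PIDLD update from the paper or its repository: the update form, the undecayed integral, the gains `kp`, `ki`, `kd`, and the `noise_scale` switch are all assumptions chosen for illustration.

```python
import math
import random


def pid_langevin(grad_energy, x0, steps, step_size,
                 kp=1.0, ki=0.0, kd=0.0, noise_scale=1.0, rng=None):
    """Langevin-style iteration with a PID-shaped drift (illustrative only).

    With kp=1 and ki=kd=0 this reduces to the standard unadjusted Langevin
    step x <- x - step_size * grad + sqrt(2 * step_size) * noise.
    """
    rng = rng or random.Random(0)
    x = x0
    integral = 0.0      # running sum of past gradients (integral term)
    prev_grad = None    # previous gradient, used for the derivative term
    for _ in range(steps):
        g = grad_energy(x)
        integral += g
        derivative = 0.0 if prev_grad is None else g - prev_grad
        control = kp * g + ki * integral + kd * derivative
        noise = noise_scale * math.sqrt(2.0 * step_size) * rng.gauss(0.0, 1.0)
        x = x - step_size * control + noise
        prev_grad = g
    return x


def grad_quadratic(x):
    """Gradient of the hypothetical energy U(x) = (x - 3)^2 / 2."""
    return x - 3.0


# Noise switched off to show the drift alone settling at the energy minimum.
x_final = pid_langevin(grad_quadratic, x0=0.0, steps=500, step_size=0.1,
                       kp=1.0, ki=0.1, kd=0.1, noise_scale=0.0)
```

With `noise_scale=1.0` the same loop produces noisy iterates around the minimum; whether the extra terms preserve the target distribution depends on details the abstract does not state.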

Fine-Tuning Flow Matching via Maximum Likelihood Estimation of Reconstructions

Oct 02, 2025

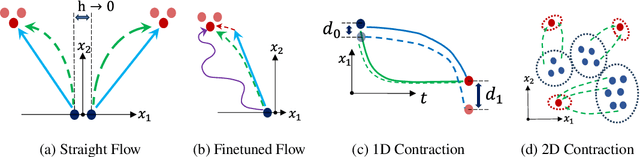

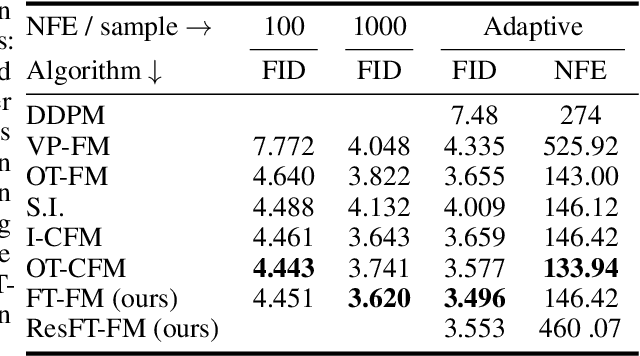

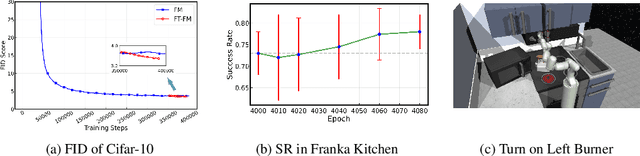

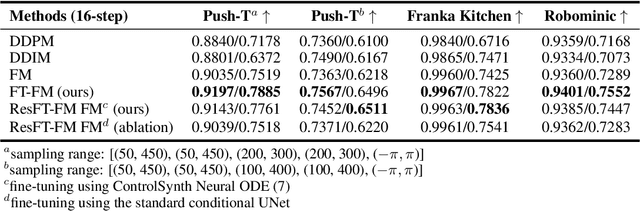

Abstract: The Flow Matching (FM) algorithm achieves remarkable results in generative tasks, especially in robotic manipulation. Building upon the foundations of diffusion models, the simulation-free paradigm of FM enables simple and efficient training, but inherently introduces a train-inference gap: the model's output cannot be assessed during the training phase. In contrast, other generative models, including Variational Autoencoders (VAEs), Normalizing Flows, and Generative Adversarial Networks (GANs), directly optimize the reconstruction loss. Such a gap is particularly evident in scenarios that demand high precision, such as robotic manipulation. Moreover, we show that FM's over-pursuit of straight predefined paths may introduce serious problems, such as stiffness, into the system. These observations motivate us to fine-tune FM via Maximum Likelihood Estimation of reconstructions, an approach made feasible by FM's underlying smooth ODE formulation, in contrast to the stochastic differential equations (SDEs) used in diffusion models. This paper first theoretically analyzes the relation between training loss and inference error in FM. We then propose a method of fine-tuning FM via Maximum Likelihood Estimation of reconstructions, which includes both straightforward fine-tuning and residual-based fine-tuning approaches. Furthermore, through specifically designed architectures, the residual-based fine-tuning can incorporate the contraction property into the model, which is crucial for the model's robustness and interpretability. Experimental results in image generation and robotic manipulation verify that our method reliably improves the inference performance of FM.

MoveFM-R: Advancing Mobility Foundation Models via Language-driven Semantic Reasoning

Sep 26, 2025

Abstract: Mobility Foundation Models (MFMs) have advanced the modeling of human movement patterns, yet they face a ceiling due to limitations in data scale and semantic understanding. While Large Language Models (LLMs) offer powerful semantic reasoning, they lack the innate understanding of spatio-temporal statistics required for generating physically plausible mobility trajectories. To address these gaps, we propose MoveFM-R, a novel framework that unlocks the full potential of mobility foundation models by leveraging language-driven semantic reasoning capabilities. It tackles two key challenges: the vocabulary mismatch between continuous geographic coordinates and discrete language tokens, and the representation gap between the latent vectors of MFMs and the semantic world of LLMs. MoveFM-R is built on three core innovations: a semantically enhanced location encoding to bridge the geography-language gap, a progressive curriculum to align the LLM's reasoning with mobility patterns, and an interactive self-reflection mechanism for conditional trajectory generation. Extensive experiments demonstrate that MoveFM-R significantly outperforms existing MFM-based and LLM-based baselines. It also shows robust generalization in zero-shot settings and excels at generating realistic trajectories from natural language instructions. By synthesizing the statistical power of MFMs with the deep semantic understanding of LLMs, MoveFM-R pioneers a new paradigm that enables a more comprehensive, interpretable, and powerful modeling of human mobility. The implementation of MoveFM-R is available online at https://anonymous.4open.science/r/MoveFM-R-CDE7/.

UniMove: A Unified Model for Multi-city Human Mobility Prediction

Aug 09, 2025

Abstract: Human mobility prediction is vital for urban planning, transportation optimization, and personalized services. However, the inherent randomness, non-uniform time intervals, and complex patterns of human mobility, compounded by the heterogeneity introduced by varying city structures, infrastructure, and population densities, present significant challenges in modeling. Existing solutions often require training separate models for each city due to distinct spatial representations and geographic coverage. In this paper, we propose UniMove, a unified model for multi-city human mobility prediction, addressing two challenges: (1) constructing universal spatial representations for effective token sharing across cities, and (2) modeling heterogeneous mobility patterns from varying city characteristics. We propose a trajectory-location dual-tower architecture, with a location tower for universal spatial encoding and a trajectory tower for sequential mobility modeling. We also design MoE Transformer blocks to adaptively select experts to handle diverse movement patterns. Extensive experiments across multiple datasets from diverse cities demonstrate that UniMove truly embodies the essence of a unified model. By enabling joint training on multi-city data with mutual data enhancement, it significantly improves mobility prediction accuracy by over 10.2%. UniMove represents a key advancement toward realizing a true foundational model with a unified architecture for human mobility. We release the implementation at https://github.com/tsinghua-fib-lab/UniMove/.

Mamba Integrated with Physics Principles Masters Long-term Chaotic System Forecasting

May 29, 2025

Abstract: Long-term forecasting of chaotic systems from short-term observations remains a fundamental and underexplored challenge due to the intrinsic sensitivity to initial conditions and the complex geometry of strange attractors. Existing approaches often rely on long-term training data or focus on short-term sequence correlations, struggling to maintain predictive stability and dynamical coherence over extended horizons. We propose PhyxMamba, a novel framework that integrates a Mamba-based state-space model with physics-informed principles to capture the underlying dynamics of chaotic systems. By reconstructing the attractor manifold from brief observations using time-delay embeddings, PhyxMamba extracts global dynamical features essential for accurate forecasting. Our generative training scheme enables Mamba to replicate the physical process, augmented by multi-token prediction and attractor geometry regularization for physical constraints, enhancing prediction accuracy and preserving key statistical invariants. Extensive evaluations on diverse simulated and real-world chaotic systems demonstrate that PhyxMamba delivers superior long-term forecasting and faithfully captures essential dynamical invariants from short-term data. This framework opens new avenues for reliably predicting chaotic systems under observation-scarce conditions, with broad implications across climate science, neuroscience, epidemiology, and beyond. Our code is open-source at https://github.com/tsinghua-fib-lab/PhyxMamba.
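The time-delay embedding mentioned in this abstract can be sketched in a few lines. This shows the generic construction only, using forward delays as one common convention; the function name, the embedding dimension `dim`, and the lag `tau` are illustrative assumptions and not PhyxMamba's actual settings.

```python
def delay_embed(series, dim, tau):
    """Map a scalar series to delay vectors (x[t], x[t+tau], ..., x[t+(dim-1)*tau])."""
    if dim < 1 or tau < 1:
        raise ValueError("dim and tau must be positive integers")
    span = (dim - 1) * tau  # how far ahead the last coordinate reaches
    return [
        tuple(series[t + k * tau] for k in range(dim))
        for t in range(len(series) - span)
    ]


# Toy series; a real use case would pass a short observed trajectory.
observations = [0, 1, 2, 3, 4, 5]
embedded = delay_embed(observations, dim=3, tau=2)
```

Each tuple is one point of the reconstructed state space, and a series shorter than `(dim - 1) * tau + 1` samples yields an empty list.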

TrajMoE: Spatially-Aware Mixture of Experts for Unified Human Mobility Modeling

May 24, 2025

Abstract: Modeling human mobility across diverse cities is essential for applications such as urban planning, transportation optimization, and personalized services. However, generalization remains challenging due to heterogeneous spatial representations and mobility patterns across cities. Existing methods typically rely on numerical coordinates or require training city-specific models, limiting their scalability and transferability. We propose TrajMoE, a unified and scalable model for cross-city human mobility modeling. TrajMoE addresses two key challenges: (1) inconsistent spatial semantics across cities, and (2) diverse urban mobility patterns. To tackle these, we begin by designing a spatial semantic encoder that learns transferable location representations from POI-based functional semantics and visit patterns. Furthermore, we design a Spatially-Aware Mixture-of-Experts (SAMoE) Transformer that injects structured priors into experts specialized in distinct mobility semantics, along with a shared expert to capture city-invariant patterns and enable adaptive cross-city generalization. Extensive experiments demonstrate that TrajMoE achieves up to 27% relative improvement over competitive mobility foundation models after only one epoch of fine-tuning, and consistently outperforms full-data baselines using merely 5% of target city data. These results establish TrajMoE as a significant step toward realizing a truly generalizable, transferable, and pretrainable foundation model for human mobility.

Tuning Language Models for Robust Prediction of Diverse User Behaviors

May 23, 2025

Abstract: Predicting user behavior is essential for intelligent assistant services, yet deep learning models often struggle to capture long-tailed behaviors. Large language models (LLMs), with their pretraining on vast corpora containing rich behavioral knowledge, offer promise. However, existing fine-tuning approaches tend to overfit to frequent "anchor" behaviors, reducing their ability to predict less common "tail" behaviors. In this paper, we introduce BehaviorLM, a progressive fine-tuning approach that addresses this issue. In the first stage, LLMs are fine-tuned on anchor behaviors while preserving general behavioral knowledge. In the second stage, fine-tuning uses a balanced subset of all behaviors based on sample difficulty to improve tail behavior predictions without sacrificing anchor performance. Experimental results on two real-world datasets demonstrate that BehaviorLM robustly predicts both anchor and tail behaviors and effectively leverages LLM behavioral knowledge to master tail behavior prediction with few-shot examples.

BehaveGPT: A Foundation Model for Large-scale User Behavior Modeling

May 23, 2025

Abstract: In recent years, foundational models have revolutionized the fields of language and vision, demonstrating remarkable abilities in understanding and generating complex data; however, similar advances in user behavior modeling have been limited, largely due to the complexity of behavioral data and the challenges involved in capturing intricate temporal and contextual relationships in user activities. To address this, we propose BehaveGPT, a foundational model designed specifically for large-scale user behavior prediction. Leveraging transformer-based architecture and a novel pretraining paradigm, BehaveGPT is trained on vast user behavior datasets, allowing it to learn complex behavior patterns and support a range of downstream tasks, including next behavior prediction, long-term generation, and cross-domain adaptation. Our approach introduces the DRO-based pretraining paradigm tailored for user behavior data, which improves model generalization and transferability by equitably modeling both head and tail behaviors. Extensive experiments on real-world datasets demonstrate that BehaveGPT outperforms state-of-the-art baselines, achieving more than a 10% improvement in macro and weighted recall, showcasing its ability to effectively capture and predict user behavior. Furthermore, we measure the scaling law in the user behavior domain for the first time on the Honor dataset, providing insights into how model performance scales with increased data and parameter sizes.

Large language model as user daily behavior data generator: balancing population diversity and individual personality

May 23, 2025

Abstract: Predicting human daily behavior is challenging due to the complexity of routine patterns and short-term fluctuations. While data-driven models have improved behavior prediction by leveraging empirical data from various platforms and devices, the reliance on sensitive, large-scale user data raises privacy concerns and limits data availability. Synthetic data generation has emerged as a promising solution, though existing methods are often limited to specific applications. In this work, we introduce BehaviorGen, a framework that uses large language models (LLMs) to generate high-quality synthetic behavior data. By simulating user behavior based on profiles and real events, BehaviorGen supports data augmentation and replacement in behavior prediction models. We evaluate its performance in scenarios such as pretraining augmentation, fine-tuning replacement, and fine-tuning augmentation, achieving significant improvements in human mobility and smartphone usage predictions, with gains of up to 18.9%. Our results demonstrate the potential of BehaviorGen to enhance user behavior modeling through flexible and privacy-preserving synthetic data generation.
