Zengguang Hou

An Underwater, Fault-Tolerant, Laser-Aided Robotic Multi-Modal Dense SLAM System for Continuous Underwater In-Situ Observation

Apr 30, 2025

Abstract:Existing underwater SLAM systems struggle to work effectively in texture-sparse and geometrically degraded underwater environments, resulting in intermittent tracking and sparse mapping. Therefore, we present Water-DSLAM, a novel laser-aided multi-sensor fusion system that achieves uninterrupted, fault-tolerant dense SLAM capable of continuous in-situ observation in diverse complex underwater scenarios through three key innovations. Firstly, we develop Water-Scanner, a multi-sensor fusion robotic platform featuring a self-designed Underwater Binocular Structured Light (UBSL) module that enables high-precision 3D perception. Secondly, we propose a fault-tolerant triple-subsystem architecture combining: 1) DP-INS (DVL- and Pressure-aided Inertial Navigation System), which fuses an inertial measurement unit, a Doppler velocity log, and a pressure sensor in an Error-State Kalman Filter (ESKF) to provide high-frequency absolute odometry; 2) Water-UBSL, a novel Iterated ESKF (IESKF)-based tight coupling between UBSL and DP-INS to mitigate UBSL's degeneration issues; and 3) Water-Stereo, a fusion of DP-INS and a stereo camera for accurate initialization and tracking. Thirdly, we introduce a multi-modal factor graph back-end that dynamically fuses heterogeneous sensor data. The proposed multi-sensor factor graph maintenance strategy efficiently addresses issues caused by asynchronous sensor frequencies and partial data loss. Experimental results demonstrate that Water-DSLAM achieves superior robustness (0.039 m trajectory RMSE and 100% continuity ratio during partial sensor dropout) and dense mapping (6922.4 points/m^3 in 750 m^3 water volume, approximately 10 times denser than existing methods) in various challenging environments, including pools, dark underwater scenes, 16-meter-deep sinkholes, and field rivers. Our project is available at https://water-scanner.github.io/.
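To give a feel for the kind of fusion DP-INS performs, the sketch below runs one predict/update cycle of a plain scalar Kalman filter on depth. It is not the ESKF described in the abstract: the function name `kalman_depth_step`, its parameters, and the one-dimensional state are all assumptions made for illustration. Velocity stands in for the DVL, and the measurement stands in for a depth derived from the pressure sensor.

```python
def kalman_depth_step(depth, var, v_down, dt, q, z_pressure, r):
    """One predict/update cycle of a scalar Kalman filter on depth.

    Hypothetical, simplified stand-in for DVL/pressure fusion:
      depth, var  : prior depth estimate (m) and its variance (m^2)
      v_down, dt  : downward velocity (m/s) and time step (s)
      q           : process noise variance added per step (m^2)
      z_pressure  : depth implied by the pressure sensor (m), or None on dropout
      r           : measurement noise variance (m^2)
    """
    # Predict: dead-reckon depth from velocity; uncertainty grows by q.
    depth_pred = depth + v_down * dt
    var_pred = var + q

    if z_pressure is None:
        # Measurement missing: carry the prediction forward unchanged.
        return depth_pred, var_pred

    # Update: weight the innovation by the relative confidence of
    # the prediction and the measurement.
    gain = var_pred / (var_pred + r)
    depth_new = depth_pred + gain * (z_pressure - depth_pred)
    var_new = (1.0 - gain) * var_pred
    return depth_new, var_new
```

When the measurement is missing, the filter keeps predicting and its variance grows, which is the basic mechanism that lets an estimator keep running through partial sensor dropout.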

Faster Person Re-Identification

Aug 16, 2020

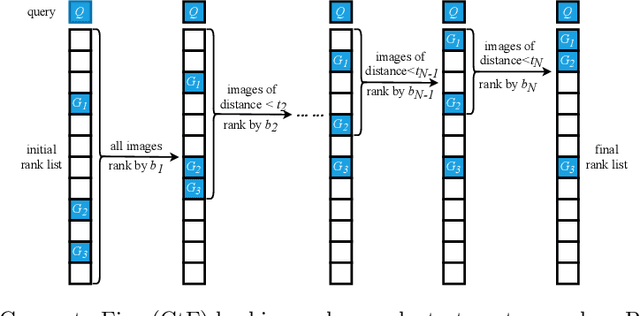

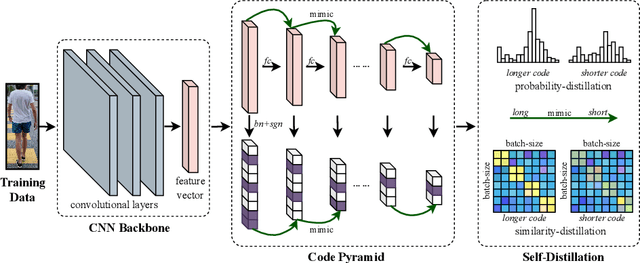

Abstract:Fast person re-identification (ReID) aims to search person images quickly and accurately. The main idea of recent fast ReID methods is the hashing algorithm, which learns compact binary codes and performs fast Hamming distance and counting sort. However, a very long code is needed for high accuracy (e.g. 2048), which compromises search speed. In this work, we introduce a new solution for fast ReID by formulating a novel Coarse-to-Fine (CtF) hashing code search strategy, which complementarily uses short and long codes, achieving both faster speed and better accuracy. It uses shorter codes to coarsely rank broad matching similarities and longer codes to refine only a few top candidates for more accurate instance ReID. Specifically, we design an All-in-One (AiO) framework together with a Distance Threshold Optimization (DTO) algorithm. In AiO, we simultaneously learn and enhance multiple codes of different lengths in a single model. It learns multiple codes in a pyramid structure and encourages shorter codes to mimic longer codes by self-distillation. DTO solves a complex threshold search problem by a simple optimization process, and the balance between accuracy and speed is easily controlled by a single parameter. It formulates the optimization target as an $F_{\beta}$ score that can be optimised by Gaussian cumulative distribution functions. Experimental results on 2 datasets show that our proposed method (CtF) is not only 8% more accurate but also $5\times$ faster than contemporary hashing ReID methods. Compared with non-hashing ReID methods, CtF is $50\times$ faster with comparable accuracy. Code is available at https://github.com/wangguanan/light-reid.
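The coarse-to-fine idea can be sketched in a few lines. This is a minimal two-stage illustration, not the code from the linked repository: binary codes are represented as Python integers, the function names are hypothetical, and the distance threshold is picked by hand rather than by DTO.

```python
def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary codes stored as integers."""
    return bin(a ^ b).count("1")


def coarse_to_fine_search(query_short, query_long,
                          gallery_short, gallery_long, threshold):
    """Return gallery indices ranked by long-code distance.

    Coarse stage: keep only gallery items whose short-code distance to the
    query is at most `threshold` (hand-picked here; an assumption).
    Fine stage: rank the survivors with the more expensive long codes.
    """
    candidates = [
        i for i, code in enumerate(gallery_short)
        if hamming(query_short, code) <= threshold
    ]
    # sorted() is stable, so ties keep their gallery order.
    return sorted(candidates,
                  key=lambda i: hamming(query_long, gallery_long[i]))


# Toy gallery: 4-bit short codes and 8-bit long codes.
gallery_short = [0b0000, 0b0001, 0b1111, 0b0011]
gallery_long = [0b11110000, 0b00000000, 0b11111111, 0b00001111]
ranking = coarse_to_fine_search(0b0000, 0b00000000,
                                gallery_short, gallery_long, threshold=1)
```

Only the items that pass the cheap short-code filter are compared with long codes, which is where the speed-up comes from; a looser threshold trades speed for recall.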

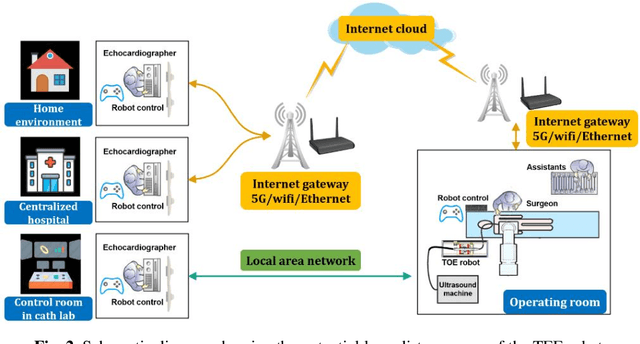

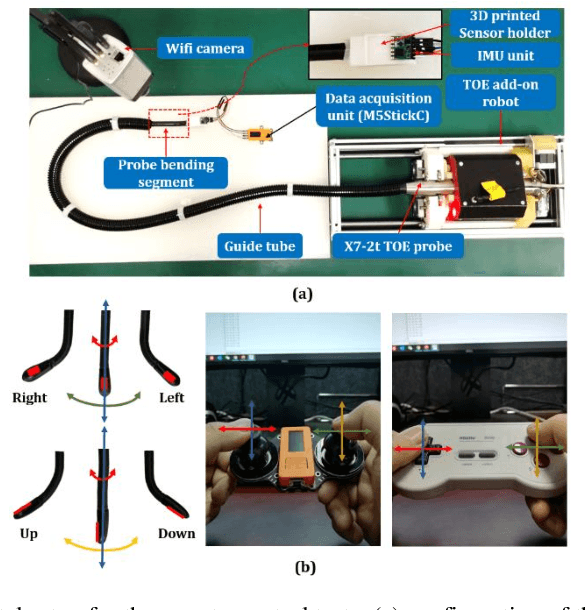

IoT-based Remote Control Study of a Robotic Trans-esophageal Ultrasound Probe via LAN and 5G

May 28, 2020

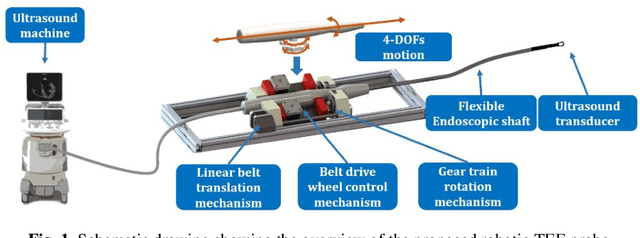

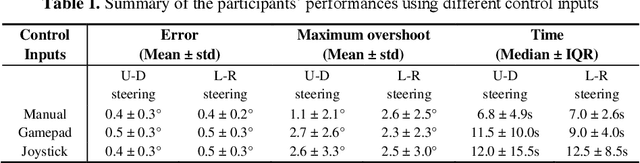

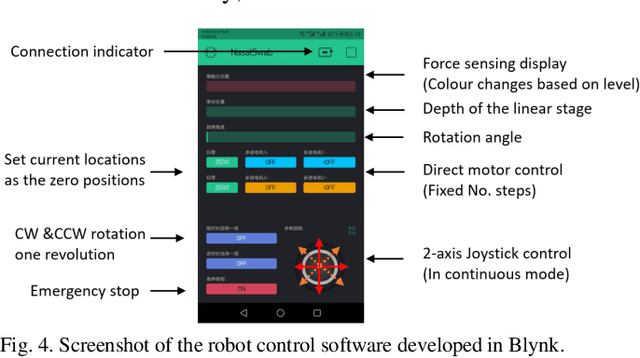

Abstract:A robotic trans-esophageal echocardiography (TEE) probe has been recently developed to address the problems with manual control in the X-ray environment when a conventional probe is used for interventional procedure guidance. However, the robot was intended exclusively for use in local areas, and the effectiveness of remote control has not been scientifically tested. In this study, we implemented an Internet-of-things (IoT)-based configuration for the TEE robot so the system can set up a local area network (LAN) or be configured to connect to an internet cloud over 5G. To investigate the remote control, backlash hysteresis effects were measured and analysed. A joystick-based device and a button-based gamepad were then employed and compared with the manual control in a target reaching experiment for the two steering axes. The results indicated different hysteresis curves for the left-right and up-down steering axes with the input wheel's deadbands found to be 15 deg and deg, respectively. Similar magnitudes of positioning errors at approximately 0.5 deg and maximum overshoots at around 2.5 deg were found when manually and robotically controlling the TEE probe. The amount of time to finish the task indicated a better performance using the button-based gamepad over the joystick-based device, although both were worse than the manual control. It is concluded that the IoT-based remote control of the TEE probe is feasible and a trained user can accurately manipulate the probe. The main identified problem was the backlash hysteresis in the steering axes, which can result in continuous oscillations and overshoots.
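To illustrate why a deadband produces hysteresis, the sketch below simulates the textbook backlash (play) operator. It is an idealised model, not the measured behaviour of the TEE probe: the function name is hypothetical, and treating the 15 deg figure as the total width of the gap is an assumption.

```python
def backlash_response(inputs, deadband, start=0.0):
    """Simulate an ideal backlash (play) element.

    inputs   : sequence of input wheel angles (deg)
    deadband : total width of the gap (deg); assumed symmetric
    start    : initial output angle (deg)

    The output only moves once the input has taken up the slack, so it is
    always clamped to within half a deadband of the input.
    """
    half = deadband / 2.0
    out = start
    trace = []
    for x in inputs:
        out = max(x - half, min(x + half, out))
        trace.append(out)
    return trace


# Sweep the input up to 20 deg and back down to 0 deg.
sweep = [0.0, 5.0, 10.0, 20.0, 10.0, 5.0, 0.0]
trace = backlash_response(sweep, deadband=15.0)
# trace == [0.0, 0.0, 2.5, 12.5, 12.5, 12.5, 7.5]
```

The output differs on the way up and on the way down for the same input angle, which is the hysteresis loop. A controller that ignores this lag keeps correcting after each reversal, which is consistent with the oscillations and overshoots noted in the abstract.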

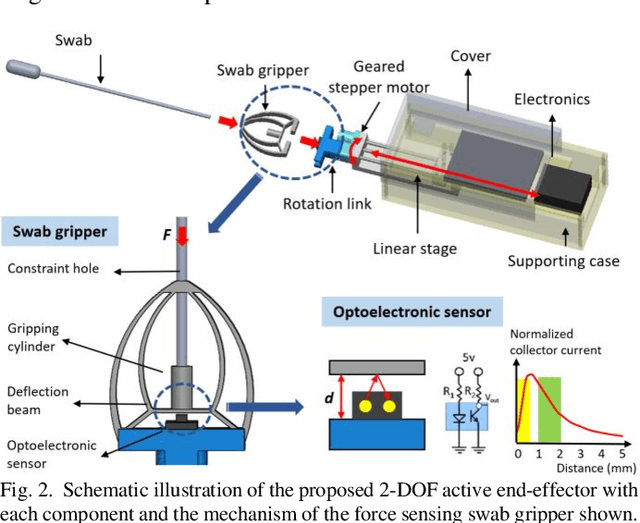

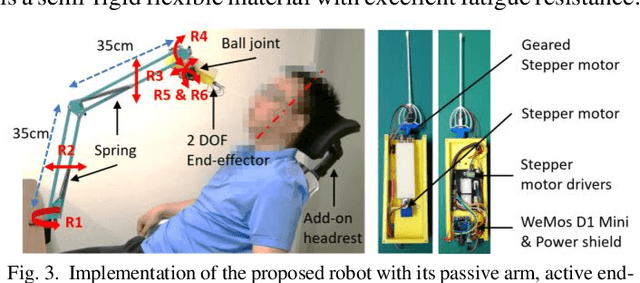

Design of a Low-cost Miniature Robot to Assist the COVID-19 Nasopharyngeal Swab Sampling

May 26, 2020

Abstract:Nasopharyngeal (NP) swab sampling is an effective approach for the diagnosis of coronavirus disease 2019 (COVID-19). Medical staff carrying out the task of collecting NP specimens are in close contact with the suspected patient, thereby posing a high risk of cross-infection. We propose a low-cost miniature robot that can be easily assembled and remotely controlled. The system includes an active end-effector, a passive positioning arm, and a detachable swab gripper with integrated force sensing capability. The cost of the materials for building this robot is 55 USD and the total weight of the functional part is 0.23 kg. The design of the force sensing swab gripper was justified using Finite Element (FE) modeling and the performance of the robot was validated with a simulation phantom and three pig noses. FE analysis indicated a 0.5 mm magnitude displacement of the gripper's sensing beam, which meets the ideal detecting range of the optoelectronic sensor. Studies on both the phantom and the pig nose demonstrated the successful operation of the robot during the collection task. The average forces were found to be 0.35 N and 0.85 N, respectively. It is concluded that the proposed robot is promising and could be further developed to be used in vivo.

Cross-Modality Paired-Images Generation for RGB-Infrared Person Re-Identification

Feb 18, 2020

Abstract:RGB-Infrared (IR) person re-identification is very challenging due to the large cross-modality variations between RGB and IR images. The key solution is to learn aligned features to bridge the RGB and IR modalities. However, due to the lack of correspondence labels between every pair of RGB and IR images, most methods try to alleviate the variations with set-level alignment by reducing the distance between the entire RGB and IR sets. This set-level alignment may lead to misalignment of some instances, which limits the performance for RGB-IR Re-ID. Different from existing methods, in this paper, we propose to generate cross-modality paired-images and perform both global set-level and fine-grained instance-level alignments. Our proposed method enjoys several merits. First, our method can perform set-level alignment by disentangling modality-specific and modality-invariant features. Compared with conventional methods, ours can explicitly remove the modality-specific features and the modality variation can be better reduced. Second, given cross-modality unpaired-images of a person, our method can generate cross-modality paired images from exchanged images. With them, we can directly perform instance-level alignment by minimizing distances of every pair of images. Extensive experimental results on two standard benchmarks demonstrate that the proposed model performs favourably against state-of-the-art methods. In particular, on the SYSU-MM01 dataset, our model can achieve a gain of 9.2% and 7.7% in terms of Rank-1 and mAP. Code is available at https://github.com/wangguanan/JSIA-ReID.
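Once paired images exist, instance-level alignment amounts to pulling each pair's features together. The sketch below computes such a loss as the mean Euclidean distance over pairs. The function name, the list-of-lists feature format, and the choice of Euclidean distance are assumptions for illustration; the abstract does not specify the distance used, and this is not the code from the linked repository.

```python
import math


def instance_alignment_loss(rgb_feats, ir_feats):
    """Mean Euclidean distance between paired RGB and IR feature vectors.

    rgb_feats[i] and ir_feats[i] are assumed to be features of the same
    person in the two modalities (a generated cross-modality pair).
    """
    if not rgb_feats or len(rgb_feats) != len(ir_feats):
        raise ValueError("need the same non-zero number of RGB and IR features")
    total = 0.0
    for f_rgb, f_ir in zip(rgb_feats, ir_feats):
        total += math.dist(f_rgb, f_ir)
    return total / len(rgb_feats)


# Two toy pairs: the first is misaligned, the second is perfectly aligned.
loss = instance_alignment_loss([[0.0, 0.0], [1.0, 1.0]],
                               [[3.0, 4.0], [1.0, 1.0]])
# loss == 2.5
```

Unlike a set-level objective, which only compares the two feature distributions as a whole, this loss is zero only when every individual pair coincides.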

RGB-Infrared Cross-Modality Person Re-Identification via Joint Pixel and Feature Alignment

Oct 28, 2019

Abstract:RGB-Infrared (IR) person re-identification is an important and challenging task due to large cross-modality variations between RGB and IR images. Most conventional approaches aim to bridge the cross-modality gap with feature alignment by feature representation learning. Different from existing methods, in this paper, we propose a novel end-to-end Alignment Generative Adversarial Network (AlignGAN) for the RGB-IR Re-ID task. The proposed model enjoys several merits. First, it can exploit pixel alignment and feature alignment jointly. To the best of our knowledge, this is the first work to model the two alignment strategies jointly for the RGB-IR Re-ID problem. Second, the proposed model consists of a pixel generator, a feature generator, and a joint discriminator. By playing a min-max game among the three components, our model is able to not only alleviate the cross-modality and intra-modality variations but also learn identity-consistent features. Extensive experimental results on two standard benchmarks demonstrate that the proposed model performs favorably against state-of-the-art methods. In particular, on the SYSU-MM01 dataset, our model can achieve an absolute gain of 15.4% and 12.9% in terms of Rank-1 and mAP.
