Davinder Singh

IoT-based Remote Control Study of a Robotic Trans-esophageal Ultrasound Probe via LAN and 5G

May 28, 2020

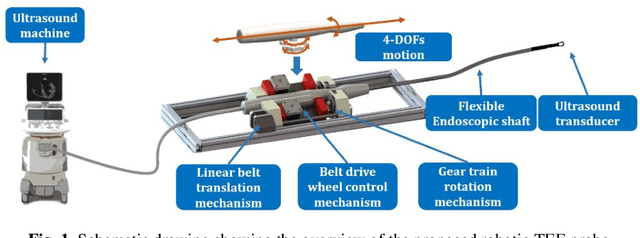

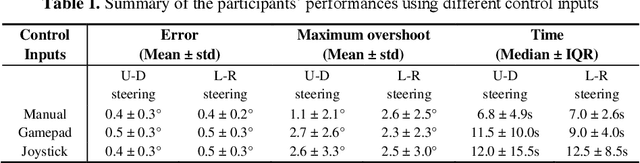

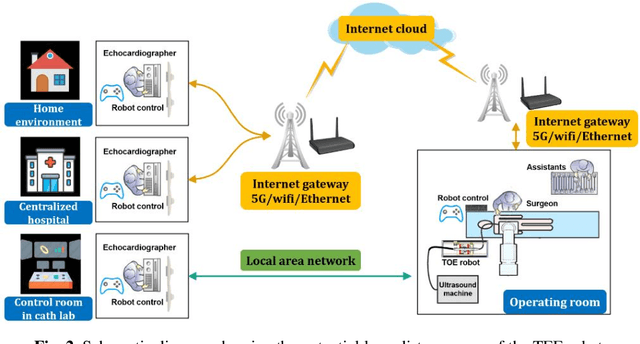

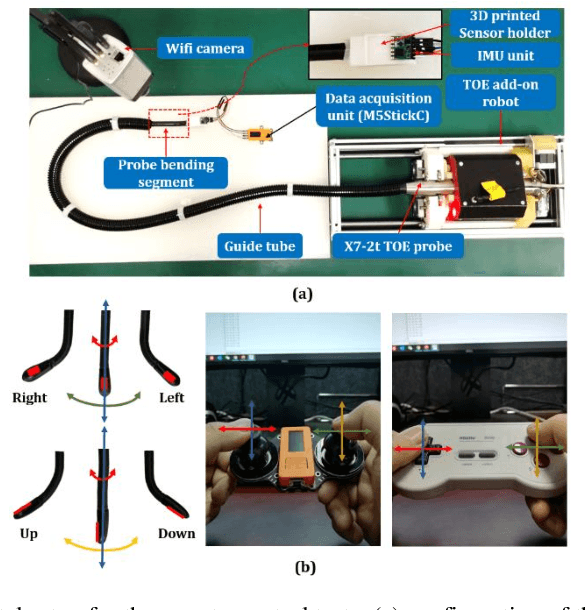

Abstract: A robotic trans-esophageal echocardiography (TEE) probe has recently been developed to address the problems with manual control in the X-ray environment when a conventional probe is used for interventional procedure guidance. However, the robot was intended exclusively for local use, and the effectiveness of remote control has not been scientifically tested. In this study, we implemented an Internet-of-things (IoT)-based configuration for the TEE robot so that the system can set up a local area network (LAN) or be configured to connect to an internet cloud over 5G. To investigate the remote control, backlash hysteresis effects were measured and analysed. A joystick-based device and a button-based gamepad were then employed and compared with manual control in a target-reaching experiment for the two steering axes. The results indicated different hysteresis curves for the left-right and up-down steering axes with the input wheel's deadbands found to be 15 deg and deg, respectively. Similar magnitudes of positioning errors at approximately 0.5 deg and maximum overshoots at around 2.5 deg were found when manually and robotically controlling the TEE probe. The time taken to finish the task indicated better performance with the button-based gamepad than with the joystick-based device, although both were worse than manual control. It is concluded that the IoT-based remote control of the TEE probe is feasible and a trained user can accurately manipulate the probe. The main identified problem was the backlash hysteresis in the steering axes, which can result in continuous oscillations and overshoots.
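To illustrate why a deadband in the input wheel produces a hysteresis curve, here is a minimal sketch of a generic backlash ("play") model. It is not the authors' measured model: the function names are hypothetical, and treating the 15 deg figure as the total deadband width is an assumption made only for this example.

```python
def backlash_step(x, y_prev, deadband):
    """Advance a generic play (backlash) model by one sample.

    x        : current input wheel angle in degrees
    y_prev   : previous output angle in degrees
    deadband : total deadband width in degrees (assumed interpretation)
    """
    half = deadband / 2.0
    # The output stays put while the input moves inside the gap,
    # and is dragged along once the input reaches either edge.
    return max(x - half, min(x + half, y_prev))


def simulate_backlash(inputs, deadband, y0=0.0):
    """Apply the play model to a sequence of input angles."""
    y = y0
    outputs = []
    for x in inputs:
        y = backlash_step(x, y, deadband)
        outputs.append(y)
    return outputs


if __name__ == "__main__":
    # Sweep the input up and back down; the output lags in both directions.
    wheel_angles = [0, 5, 10, 20, 10, 0]
    print(simulate_backlash(wheel_angles, deadband=15.0))
```

In this sketch the output does not move until the input has crossed half the deadband, and reversing the input direction leaves the output unchanged until the full gap has been traversed. A lag of this kind is what makes a closed-loop or human-in-the-loop correction prone to overshoot.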

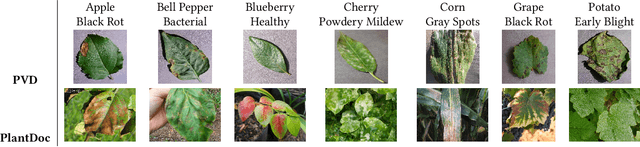

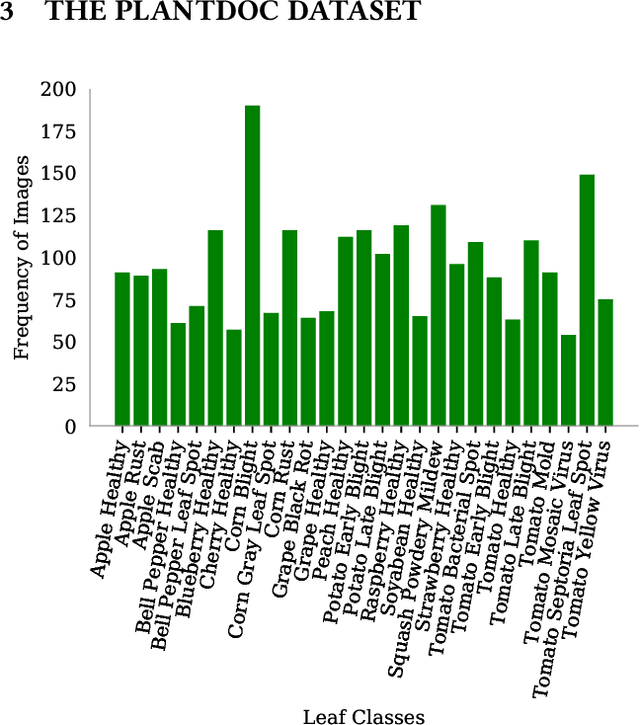

PlantDoc: A Dataset for Visual Plant Disease Detection

Nov 23, 2019

Abstract: India loses 35% of the annual crop yield due to plant diseases. Early detection of plant diseases remains difficult due to the lack of lab infrastructure and expertise. In this paper, we explore the possibility of computer vision approaches for scalable and early plant disease detection. The lack of a sufficiently large-scale non-lab dataset remains a major challenge for enabling vision-based plant disease detection. Against this background, we present PlantDoc: a dataset for visual plant disease detection. Our dataset contains 2,598 data points in total across 13 plant species and up to 17 classes of diseases, involving approximately 300 human hours of effort in annotating internet-scraped images. To show the efficacy of our dataset, we learn 3 models for the task of plant disease classification. Our results show that modelling using our dataset can increase the classification accuracy by up to 31%. We believe that our dataset can help reduce the entry barrier of computer vision techniques in plant disease detection.

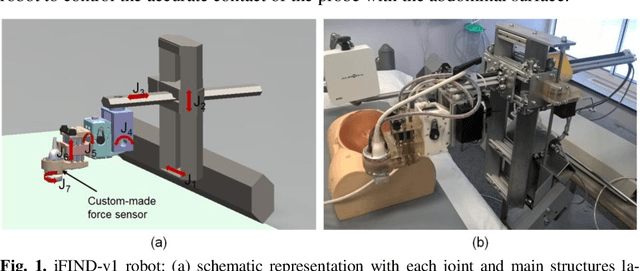

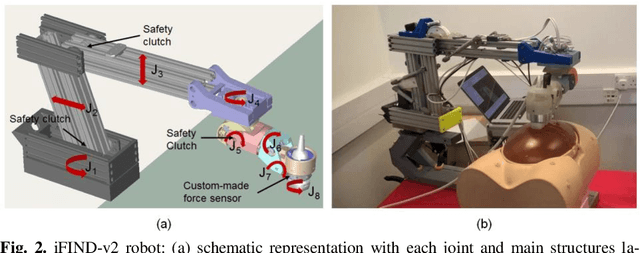

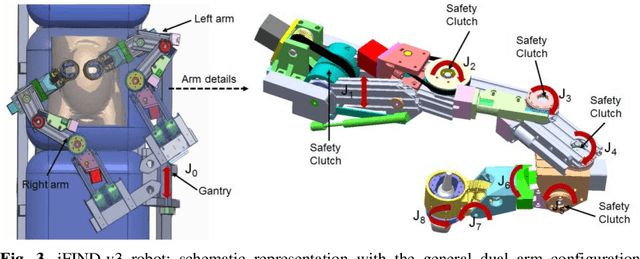

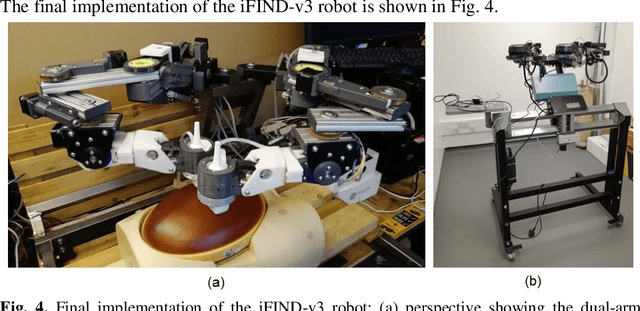

Robotic-assisted Ultrasound for Fetal Imaging: Evolution from Single-arm to Dual-arm System

Apr 10, 2019

Abstract: The development of robotic-assisted extracorporeal ultrasound systems has a long history, and a number of projects have been proposed since the 1990s focusing on different technical aspects. These aim to resolve the deficiencies of on-site manual manipulation of hand-held ultrasound probes. This paper presents the recent ongoing developments of a series of bespoke robotic systems, including both single-arm and dual-arm versions, for a project known as intelligent Fetal Imaging and Diagnosis (iFIND). After a brief review of the development history of the extracorporeal ultrasound robotic system used for fetal and abdominal examinations, the specific aim of the iFIND robots, the design evolution, the implementation details of each version, and the initial clinical feedback of the iFIND robot series are presented. Based on the preliminary testing of these newly proposed robots on 42 volunteers, the successful and reliable working of the mechatronic systems was validated. Analysis of a participant questionnaire indicates a comfortable scanning experience for the volunteers and a good acceptance rate for being scanned by the robots.
