Zeen Song

Causal Front-Door Adjustment for Robust Jailbreak Attacks on LLMs

Feb 05, 2026

Abstract:Safety alignment mechanisms in Large Language Models (LLMs) often operate as latent internal states, obscuring the model's inherent capabilities. Building on this observation, we model the safety mechanism as an unobserved confounder from a causal perspective. We then propose the Causal Front-Door Adjustment Attack (CFA²) to jailbreak LLMs, a framework that leverages Pearl's Front-Door Criterion to sever the confounding associations for robust jailbreaking. Specifically, we employ Sparse Autoencoders (SAEs) to physically strip defense-related features, isolating the core task intent. We further reduce computationally expensive marginalization to a deterministic intervention with low inference complexity. Experiments demonstrate that CFA² achieves state-of-the-art attack success rates while offering a mechanistic interpretation of the jailbreaking process.
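For reference, the front-door adjustment that the abstract invokes has the following standard form. The symbols are generic and are an assumption of this note: X is the treatment, M the mediator, and Y the outcome. The abstract does not say how the paper maps prompts, SAE features, and responses onto them.

```latex
P\bigl(Y \mid \mathrm{do}(X = x)\bigr)
  = \sum_{m} P(M = m \mid X = x)
    \sum_{x'} P(Y \mid M = m, X = x')\, P(X = x')
```

The inner sum over x' is the marginalization step; the abstract states that the paper replaces this computationally expensive step with a deterministic intervention.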

Group Causal Policy Optimization for Post-Training Large Language Models

Aug 07, 2025

Abstract:Recent advances in large language models (LLMs) have broadened their applicability across diverse tasks, yet specialized domains still require targeted post-training. Among existing methods, Group Relative Policy Optimization (GRPO) stands out for its efficiency, leveraging groupwise relative rewards while avoiding costly value function learning. However, GRPO treats candidate responses as independent, overlooking semantic interactions such as complementarity and contradiction. To address this challenge, we first introduce a Structural Causal Model (SCM) that reveals hidden dependencies among candidate responses, induced by conditioning on a final integrated output that forms a collider structure. Our causal analysis then leads to two insights: (1) projecting responses onto a causally informed subspace improves prediction quality, and (2) this projection yields a better baseline than query-only conditioning. Building on these insights, we propose Group Causal Policy Optimization (GCPO), which integrates causal structure into optimization through two key components: a causally informed reward adjustment and a novel KL regularization term that aligns the policy with a causally projected reference distribution. Comprehensive experimental evaluations demonstrate that GCPO consistently surpasses existing methods, including GRPO, across multiple reasoning benchmarks.
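As a point of comparison, the groupwise relative reward used by GRPO can be sketched in a few lines. This is a minimal illustration of the GRPO baseline only, not of GCPO's causal reward adjustment. The function name, the `eps` stabilizer, and the use of the population standard deviation are assumptions made for this example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize each reward against the mean and std of its own group.

    Each response is scored only relative to its siblings, so no learned
    value function is needed. Population std and eps are assumptions here.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# One query, four sampled responses with binary correctness rewards.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Note that every response is normalized independently of what its siblings actually say, which is the independence assumption the abstract criticizes.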

Causal Reward Adjustment: Mitigating Reward Hacking in External Reasoning via Backdoor Correction

Aug 06, 2025

Abstract:External reasoning systems combine language models with process reward models (PRMs) to select high-quality reasoning paths for complex tasks such as mathematical problem solving. However, these systems are prone to reward hacking, where logically incorrect paths are assigned high scores by the PRMs, leading to incorrect answers. From a causal inference perspective, we attribute this phenomenon primarily to the presence of confounding semantic features. To address it, we propose Causal Reward Adjustment (CRA), a method that mitigates reward hacking by estimating the true reward of a reasoning path. CRA trains sparse autoencoders on the PRM's internal activations to recover interpretable features, then corrects for confounding using backdoor adjustment. Experiments on math solving datasets demonstrate that CRA mitigates reward hacking and improves final accuracy, without modifying the policy model or retraining the PRM.
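For reference, the backdoor adjustment named in the abstract has the following standard form. The symbols are generic and are an assumption of this note: X is the treatment, Y the outcome, and Z a set of observed confounders satisfying the backdoor criterion. The abstract does not specify which recovered features play the role of Z.

```latex
P\bigl(Y \mid \mathrm{do}(X = x)\bigr)
  = \sum_{z} P(Y \mid X = x, Z = z)\, P(Z = z)
```

The adjustment averages the conditional outcome over the marginal distribution of the confounder rather than over its distribution given X, which removes the spurious path through Z.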

Reward Model Generalization for Compute-Aware Test-Time Reasoning

May 23, 2025

Abstract:External test-time reasoning enhances large language models (LLMs) by decoupling generation and selection. At inference time, the model generates multiple reasoning paths, and an auxiliary process reward model (PRM) is used to score and select the best one. A central challenge in this setting is test-time compute optimality (TCO), i.e., how to maximize answer accuracy under a fixed inference budget. In this work, we establish a theoretical framework to analyze how the generalization error of the PRM affects compute efficiency and reasoning performance. Leveraging PAC-Bayes theory, we derive generalization bounds and show that a lower PRM generalization error reduces the number of samples required to find correct answers. Motivated by this analysis, we propose Compute-Aware Tree Search (CATS), an actor-critic framework that dynamically controls search behavior. The actor outputs sampling hyperparameters based on reward distributions and sparsity statistics, while the critic estimates their utility to guide budget allocation. Experiments on the MATH and AIME benchmarks with various LLMs and PRMs demonstrate that CATS consistently outperforms other external TTS methods, validating our theoretical predictions.
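The generate-then-select loop described at the start of the abstract can be sketched as follows. This is a plain best-of-N selection, not CATS. The `best_of_n` helper, the toy score table standing in for a PRM, and the choice to score a path by its weakest step are all assumptions made for illustration.

```python
from typing import Callable, List, Sequence


def best_of_n(paths: Sequence[List[str]],
              step_score: Callable[[str], float]) -> List[str]:
    """Return the reasoning path whose weakest step scores highest."""
    def path_score(path: List[str]) -> float:
        # Min aggregation (an assumption): one bad step sinks the path.
        return min(step_score(step) for step in path)

    return max(paths, key=path_score)


# Hypothetical per-step scores standing in for a real PRM.
toy_scores = {"2+2=4": 0.9, "4*3=12": 0.8, "2+2=5": 0.1, "5*3=15": 0.95}
paths = [["2+2=4", "4*3=12"], ["2+2=5", "5*3=15"]]
best = best_of_n(paths, toy_scores.__getitem__)
```

Under a fixed budget, the number of candidate paths is the main cost, which is why the accuracy of the scorer determines how many samples are needed.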

Learning to Think: Information-Theoretic Reinforcement Fine-Tuning for LLMs

May 15, 2025

Abstract:Large language models (LLMs) excel at complex tasks thanks to advances in reasoning abilities. However, existing methods overlook the trade-off between reasoning effectiveness and computational efficiency, often encouraging unnecessarily long reasoning chains and wasting tokens. To address this, we propose Learning to Think (L2T), an information-theoretic reinforcement fine-tuning framework that enables LLMs to achieve optimal reasoning with fewer tokens. Specifically, L2T treats each query-response interaction as a hierarchical session of multiple episodes and proposes a universal dense process reward that quantifies the episode-wise information gain in parameters, requiring no extra annotations or task-specific evaluators. We propose a method to quickly estimate this reward based on PAC-Bayes bounds and the Fisher information matrix. Theoretical analyses show that it significantly reduces computational complexity with high estimation accuracy. By immediately rewarding each episode's contribution and penalizing excessive updates, L2T optimizes the model via reinforcement learning to maximize the use of each episode and achieve effective updates. Empirical results on various reasoning benchmarks and base models demonstrate the advantage of L2T across different tasks, boosting both reasoning effectiveness and efficiency.

On the Generalization and Causal Explanation in Self-Supervised Learning

Oct 01, 2024

Abstract:Self-supervised learning (SSL) methods learn from unlabeled data and achieve high generalization performance on downstream tasks. However, they may also suffer from overfitting to their training data and lose the ability to adapt to new tasks. To investigate this phenomenon, we conduct experiments on various SSL methods and datasets and make two observations: (1) Overfitting occurs abruptly in later layers and epochs, while generalizing features are learned in early layers for all epochs; (2) Coding rate reduction can be used as an indicator to measure the degree of overfitting in SSL models. Based on these observations, we propose Undoing Memorization Mechanism (UMM), a plug-and-play method that mitigates overfitting of the pre-trained feature extractor by aligning the feature distributions of the early and the last layers to maximize the coding rate reduction of the last layer output. The learning process of UMM is a bi-level optimization process. We provide a causal analysis of UMM to explain how UMM can help the pre-trained feature extractor overcome overfitting and recover generalization. We also demonstrate that UMM significantly improves the generalization performance of SSL methods on various downstream tasks.

On the Discriminability of Self-Supervised Representation Learning

Jul 18, 2024

Abstract:Self-supervised learning (SSL) has recently achieved significant success in downstream visual tasks. However, a notable gap still exists between SSL and supervised learning (SL), especially in complex downstream tasks. In this paper, we show that the features learned by SSL methods suffer from the crowding problem, where features of different classes are not distinctly separated, and features within the same class exhibit large intra-class variance. In contrast, SL ensures a clear separation between classes. We analyze this phenomenon and conclude that SSL objectives do not constrain the relationships between different samples and their augmentations. Our theoretical analysis delves into how SSL objectives fail to enforce the necessary constraints between samples and their augmentations, leading to poor performance in complex tasks. We provide a theoretical framework showing that the performance gap between SSL and SL mainly stems from the inability of SSL methods to capture the aggregation of similar augmentations and the separation of dissimilar augmentations. To address this issue, we propose a learnable regulator called Dynamic Semantic Adjuster (DSA). DSA aggregates and separates samples in the feature space while being robust to outliers. Through extensive empirical evaluations on multiple benchmark datasets, we demonstrate the superiority of DSA in enhancing feature aggregation and separation, ultimately closing the performance gap between SSL and SL.

Not All Frequencies Are Created Equal: Towards a Dynamic Fusion of Frequencies in Time-Series Forecasting

Jul 18, 2024

Abstract:Long-term time series forecasting is a long-standing challenge in various applications. A central issue in time series forecasting is that methods should expressively capture long-term dependency. Furthermore, time series forecasting methods should be flexible when applied to different scenarios. Although Fourier analysis offers an alternative to effectively capture reusable and periodic patterns to achieve long-term forecasting in different scenarios, existing methods often assume high-frequency components represent noise and should be discarded in time series forecasting. However, we conduct a series of motivation experiments and discover that the role of certain frequencies varies depending on the scenario. In some scenarios, removing high-frequency components from the original time series can improve the forecasting performance, while in other scenarios, removing them is harmful to forecasting performance. Therefore, it is necessary to treat the frequencies differently according to specific scenarios. To achieve this, we first reformulate the time series forecasting problem as learning a transfer function of each frequency in the Fourier domain. Further, we design Frequency Dynamic Fusion (FreDF), which individually predicts each Fourier component, and dynamically fuses the output of different frequencies. Moreover, we provide a novel insight into the generalization ability of time series forecasting and propose the generalization bound of time series forecasting. Then we prove FreDF has a lower generalization bound, indicating that FreDF has better generalization ability. Extensive experiments conducted on multiple benchmark datasets and ablation studies demonstrate the effectiveness of FreDF.
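The fixed "discard high frequencies" preprocessing that the abstract questions can be sketched with a naive discrete Fourier transform. This is not FreDF; it only illustrates the operation of removing high-frequency components. The helper names, the toy signal, and the cutoff value are assumptions made for this example.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform of a real sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse DFT, returning the real part of each reconstructed sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]


def low_pass(x, cutoff):
    """Zero every Fourier component whose frequency index exceeds cutoff."""
    n = len(x)
    spectrum = dft(x)
    # For a real signal, bins k and n - k carry the same frequency.
    kept = [spectrum[k] if min(k, n - k) <= cutoff else 0 for k in range(n)]
    return idft(kept)


n = 32
slow = [math.sin(2 * math.pi * 1 * t / n) for t in range(n)]
fast = [0.5 * math.sin(2 * math.pi * 8 * t / n) for t in range(n)]
signal = [s + f for s, f in zip(slow, fast)]
smoothed = low_pass(signal, cutoff=4)  # recovers the slow component only
```

Whether dropping the fast component helps depends on whether it is noise or signal in a given scenario, which is the motivation the abstract gives for weighting frequencies dynamically instead of using a fixed cutoff.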

Learning Invariant Causal Mechanism from Vision-Language Models

May 24, 2024

Abstract:Pre-trained large-scale models have become a major research focus, but their effectiveness is limited in real-world applications due to diverse data distributions. In contrast, humans excel at decision-making across various domains by learning reusable knowledge that remains invariant despite environmental changes in a complex world. Although CLIP, as a successful vision-language pre-trained model, demonstrates remarkable performance in various visual downstream tasks, our experiments reveal unsatisfactory results in specific domains. Our further analysis with causal inference exposes the current CLIP model's inability to capture the invariant causal mechanisms across domains, attributed to its deficiency in identifying latent factors generating the data. To address this, we propose the Invariant Causal Mechanism of CLIP (CLIP-ICM), an algorithm designed to provably identify invariant latent factors with the aid of interventional data, and perform accurate prediction on various domains. Theoretical analysis demonstrates that our method has a lower generalization bound in out-of-distribution (OOD) scenarios. Experimental results showcase the outstanding performance of CLIP-ICM.

Hacking Task Confounder in Meta-Learning

Dec 10, 2023

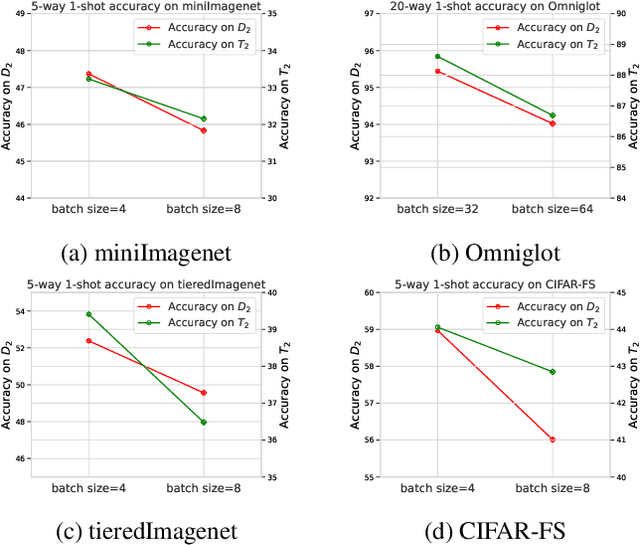

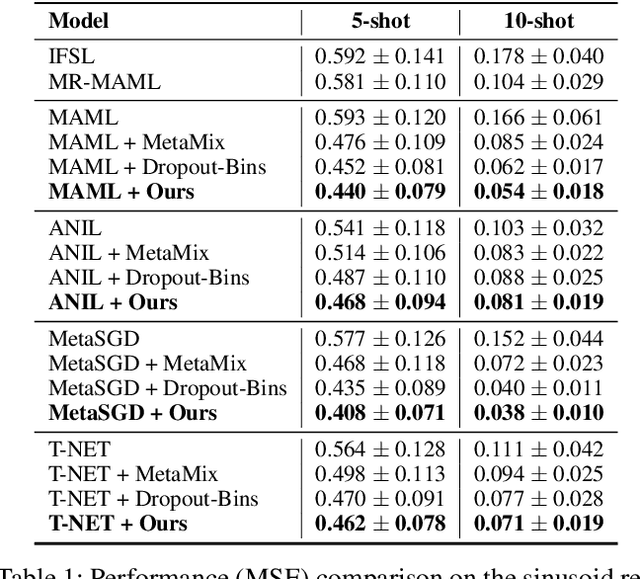

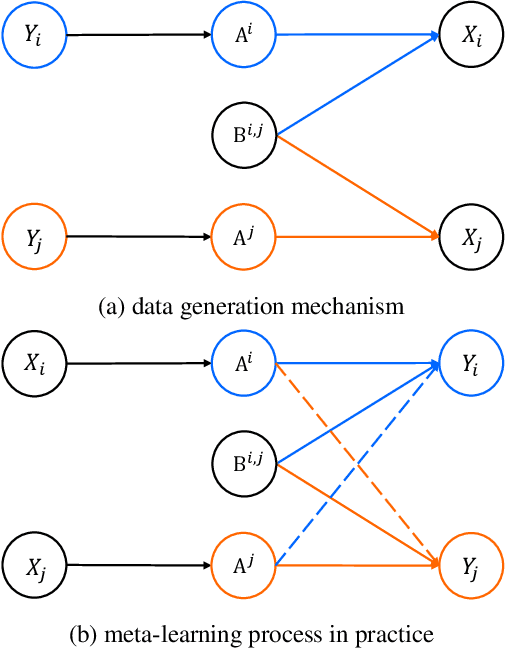

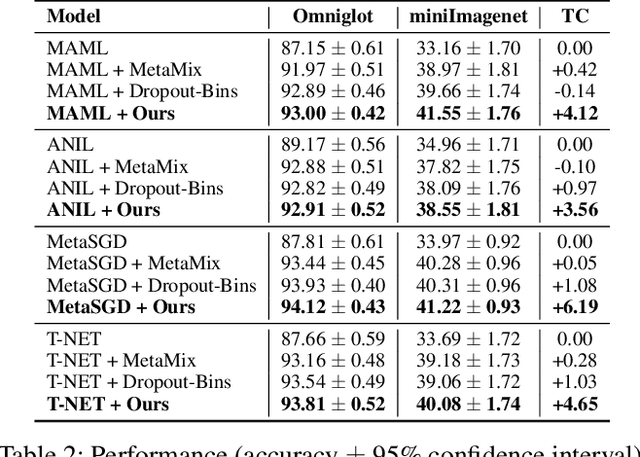

Abstract:Meta-learning enables rapid generalization to new tasks by learning meta-knowledge from a variety of tasks. It is intuitively assumed that the more tasks a model learns in one training batch, the richer the knowledge it acquires, leading to better generalization performance. However, contrary to this intuition, our experiments reveal an unexpected result: adding more tasks within a single batch actually degrades the generalization performance. To explain this phenomenon, we construct a Structural Causal Model (SCM) for causal analysis. Our investigation uncovers the presence of spurious correlations between task-specific causal factors and labels in meta-learning. Furthermore, the confounding factors differ across batches. We refer to these confounding factors as "Task Confounders". Based on this insight, we propose a plug-and-play Meta-learning Causal Representation Learner (MetaCRL) to eliminate task confounders. It encodes decoupled causal factors from multiple tasks and utilizes an invariant-based bi-level optimization mechanism to ensure their causality for meta-learning. Extensive experiments on various benchmark datasets demonstrate that our work achieves state-of-the-art (SOTA) performance.
