Yuwei Cai

VGSwarm: A Vision-based Gene Regulation Network for UAVs Swarm Behavior Emergence

Jun 17, 2022

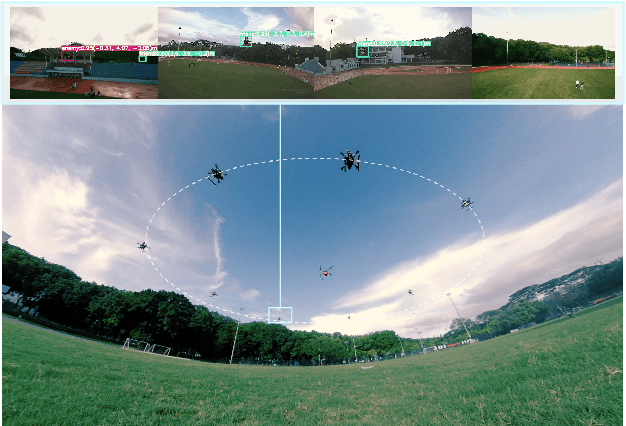

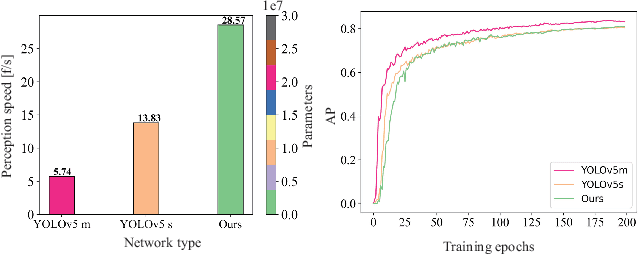

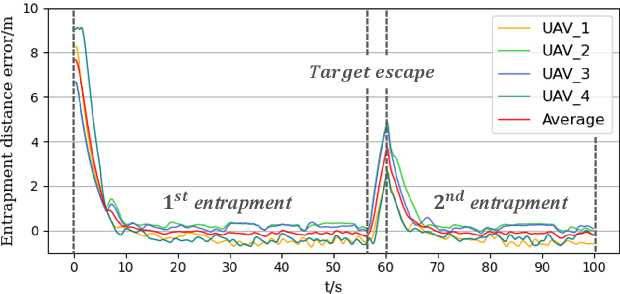

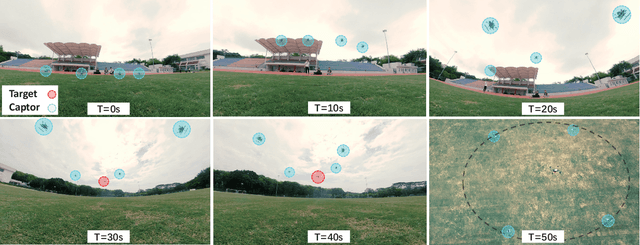

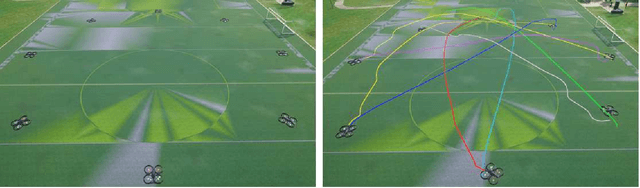

Abstract: Dynamic encirclement by unmanned aerial vehicles (UAVs) is an emerging field with great potential. Researchers often draw inspiration from biological systems, either from the macro-world, such as fish schools or bird flocks, or from the micro-world, such as gene regulatory networks. However, most swarm control algorithms rely on centralized control, global information acquisition, or communication between neighboring agents. In this work, we propose a distributed swarm control method based purely on vision, without any direct communication, in which swarm agents (e.g., UAVs) generate an entrapping pattern to encircle an escaping target UAV using only their onboard omnidirectional vision sensors. A finite-state machine describing the behavior model of each individual drone is also designed so that a swarm of drones can collectively search for and entrap the target. We verify the effectiveness and efficiency of the proposed method in various simulations and real-world experiments.
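The abstract mentions a per-drone finite-state machine covering searching and entrapping, but gives no details. The following is only a minimal illustrative sketch of what such a machine could look like, not the authors' design. The state names, the `DroneFSM` class, the `target_visible` input, and the `lost_tolerance` parameter are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class DroneState(Enum):
    SEARCH = auto()   # no target in view: keep exploring
    ENTRAP = auto()   # target in view: take part in the encirclement


@dataclass
class DroneFSM:
    # Hypothetical parameter: how many consecutive frames the target may be
    # missing from the camera before the drone gives up and searches again.
    lost_tolerance: int = 3
    state: DroneState = DroneState.SEARCH
    frames_without_target: int = 0

    def step(self, target_visible: bool) -> DroneState:
        """Advance one control cycle using only the local vision result."""
        if target_visible:
            self.frames_without_target = 0
            self.state = DroneState.ENTRAP
        elif self.state is DroneState.ENTRAP:
            self.frames_without_target += 1
            if self.frames_without_target > self.lost_tolerance:
                self.state = DroneState.SEARCH
        return self.state


if __name__ == "__main__":
    fsm = DroneFSM(lost_tolerance=2)
    for seen in [False, True, False, False, False]:
        print(seen, fsm.step(seen).name)
```

Each drone would run its own copy of this machine on its own camera input, which matches the communication-free setting described above. How the entrapping pattern itself is generated is outside the scope of this sketch.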

Vision-based Distributed Multi-UAV Collision Avoidance via Deep Reinforcement Learning for Navigation

Mar 05, 2022

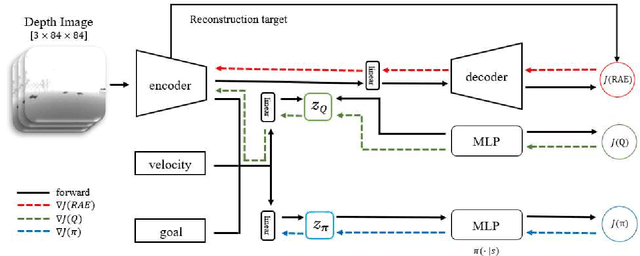

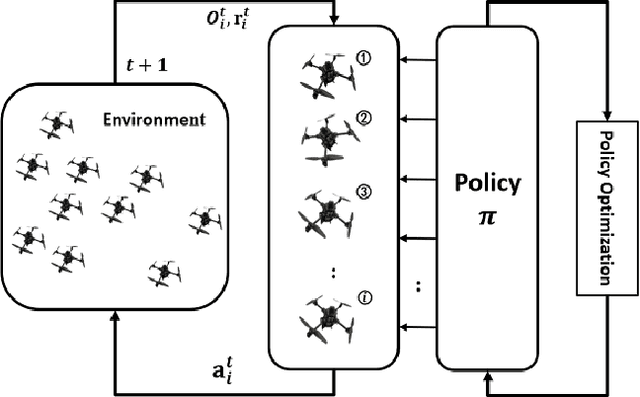

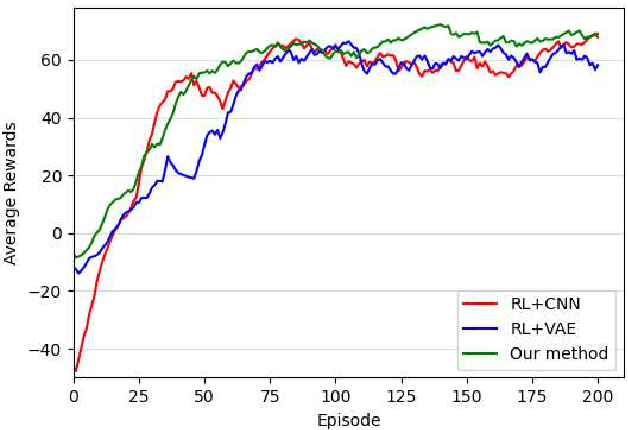

Abstract: Online path planning for multiple unmanned aerial vehicle (multi-UAV) systems is considered a challenging task. It must ensure collision-free path planning in real time, especially when the multi-UAV system becomes very crowded. In this paper, we present a vision-based decentralized collision-avoidance policy for multi-UAV systems, which takes depth images and inertial measurements as sensory inputs and outputs the UAV's steering commands. The policy is trained together with the latent representation of depth images, using a policy gradient-based reinforcement learning algorithm and an autoencoder, in multi-UAV three-dimensional workspaces. Each UAV follows the same trained policy and acts independently to reach its goal without colliding or communicating with other UAVs. We validate our policy in various simulated scenarios. The experimental results show that our learned policy can guarantee fully autonomous collision-free navigation for multi-UAV systems in three-dimensional workspaces, with good robustness and scalability.

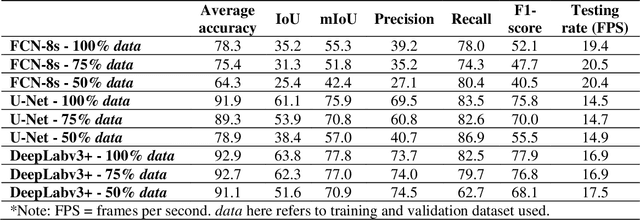

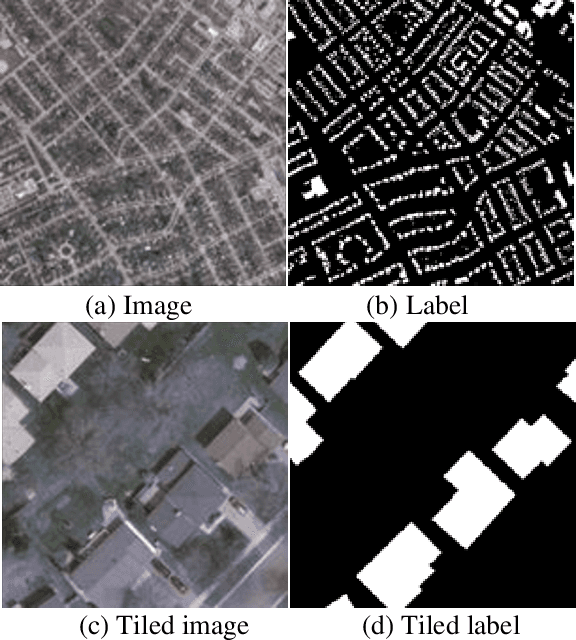

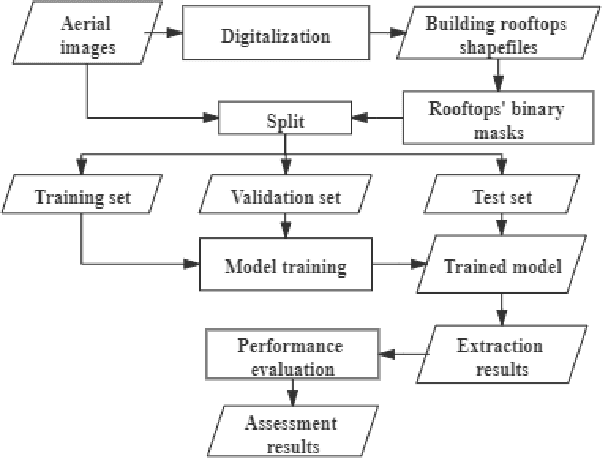

A comparative study of deep learning methods for building footprints detection using high spatial resolution aerial images

Mar 16, 2021

Abstract: Building footprint data is important in several urban applications and in natural disaster management. In contrast to traditional surveying and mapping, deep learning-based methods can extract building footprints accurately and efficiently from high spatial resolution aerial images. With the rapid development of deep learning methods, it is hard for novices to harness these powerful tools for building footprint extraction. This paper aims to present the whole process of building footprint extraction from high spatial resolution images using deep learning-based methods. In addition, we compare commonly used methods, including Fully Convolutional Networks (FCN)-8s, U-Net, and DeepLabv3+. At the end of the work, we vary the amount of training data to explore its influence on the performance of the algorithms. The experiments show that, across different data sizes, DeepLabv3+ is the best algorithm among them, with the highest accuracy and moderate efficiency; FCN-8s has the lowest accuracy and the highest efficiency; and U-Net shows moderate accuracy and the lowest efficiency. In addition, with more training data, the algorithms converged faster and produced more accurate extraction results.
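The abstract compares methods by accuracy without naming the metric. One common way to score a predicted footprint mask against a reference mask is intersection over union (IoU). The sketch below assumes IoU purely for illustration; it is not stated to be the metric used in the paper, and the function name `mask_iou` and the nested-list mask format are hypothetical.

```python
def mask_iou(pred, truth):
    """Intersection over union of two binary masks given as nested lists.

    A cell counts as 'building' when its value is truthy (e.g. 1).
    Returns 1.0 when both masks are empty, since they then agree fully.
    """
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    return intersection / union if union else 1.0


if __name__ == "__main__":
    predicted = [[1, 1],
                 [0, 0]]
    reference = [[1, 0],
                 [1, 0]]
    # One shared building pixel, three pixels marked in either mask.
    print(mask_iou(predicted, reference))
```

Averaging such a score over a held-out set of image tiles for each model and each training-set size would give one way to reproduce the kind of comparison described above.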
