Bingliang Hu

VGSwarm: A Vision-based Gene Regulation Network for UAVs Swarm Behavior Emergence

Jun 17, 2022

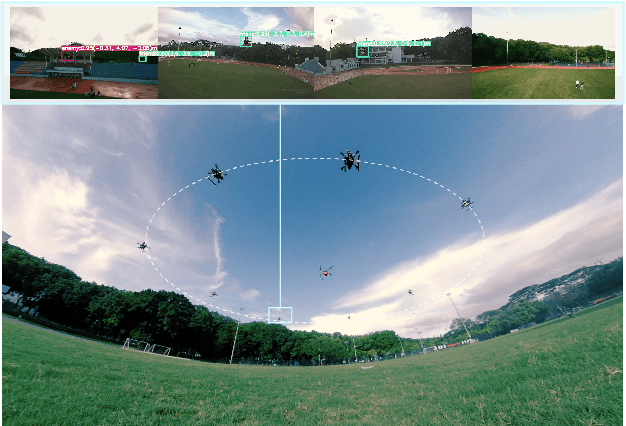

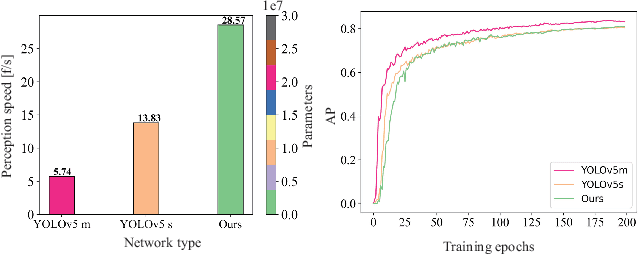

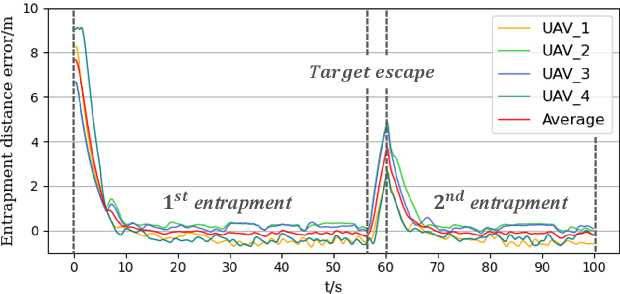

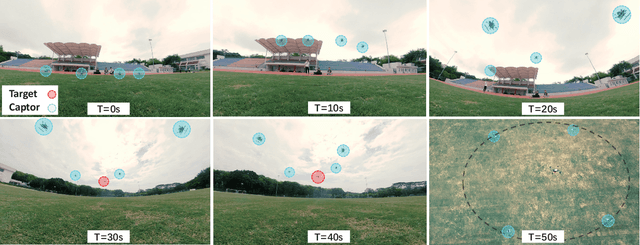

Abstract: Dynamic encirclement by unmanned aerial vehicles (UAVs) is an emerging field with great potential. Researchers often draw inspiration from biological systems, either at the macro scale, such as fish schools or bird flocks, or at the micro scale, such as gene regulatory networks. However, most swarm control algorithms rely on centralized control, global information acquisition, or communication between neighboring agents. In this work, we propose a distributed swarm control method based purely on vision, without any direct communication, in which swarm agents such as UAVs generate an entrapping pattern to encircle an escaping target UAV using only their onboard omnidirectional vision sensors. A finite-state machine describing the behavior model of each individual drone is also designed so that a swarm of drones can collectively search for and entrap the target. We verify the effectiveness and efficiency of the proposed method in various simulation and real-world experiments.

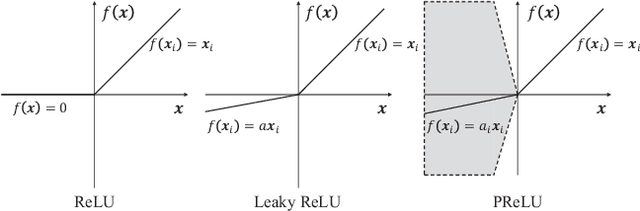

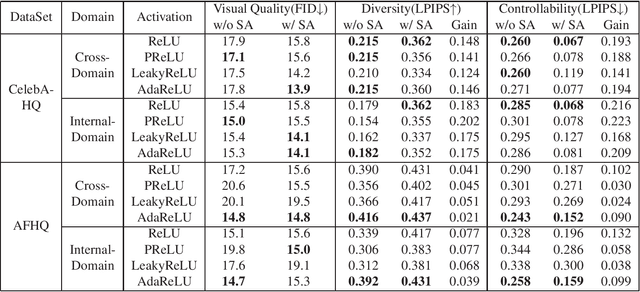

Delving into Rectifiers in Style-Based Image Translation

Nov 23, 2021

Abstract: While modern image translation techniques can create photorealistic synthetic images, they offer limited style controllability and can therefore suffer from translation errors. In this work, we show that the activation function is one of the crucial components in controlling the direction of image synthesis. Specifically, we explicitly demonstrate that the slope parameters of the rectifier can change the data distribution and can be used independently to control the direction of translation. To improve style controllability, two simple but effective techniques are proposed: Adaptive ReLU (AdaReLU) and a structural adaptive function. AdaReLU dynamically adjusts the slope parameters according to the target style and can be combined with Adaptive Instance Normalization (AdaIN) to increase controllability. Meanwhile, the structural adaptive function enables rectifiers to manipulate the structure of feature maps more effectively. It is built on the proposed structural convolution (StruConv), an efficient convolutional module that chooses the area to be activated based on the mean and variance specified by AdaIN. Extensive experiments show that the proposed techniques can greatly increase network controllability and output diversity in style-based image translation tasks.
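As a rough illustration of the idea that rectifier slopes can depend on the target style, here is a minimal sketch in plain Python. It is not the paper's implementation: the linear mapping from a style vector to per-channel slopes, and the names `linear` and `ada_relu`, are assumptions made only for this example.

```python
from typing import List, Sequence


def linear(style: Sequence[float],
           weights: Sequence[Sequence[float]],
           bias: Sequence[float]) -> List[float]:
    """Hypothetical mapping from a style vector to one value per channel."""
    return [sum(w * s for w, s in zip(row, style)) + b
            for row, b in zip(weights, bias)]


def ada_relu(features: Sequence[Sequence[float]],
             neg_slopes: Sequence[float],
             pos_slopes: Sequence[float]) -> List[List[float]]:
    """Piecewise-linear rectifier with per-channel slopes.

    Each channel uses its own slope for non-negative inputs and its own
    slope for negative inputs, so the style decides how each channel is
    rectified (assumed form, for illustration only).
    """
    out = []
    for channel, a_neg, a_pos in zip(features, neg_slopes, pos_slopes):
        out.append([a_pos * v if v >= 0 else a_neg * v for v in channel])
    return out


# Two channels, two-dimensional style vector (all values are made up).
style = [1.0, -1.0]
neg = linear(style, [[0.1, 0.0], [0.0, 0.2]], [0.0, 0.0])
pos = linear(style, [[0.0, 0.0], [0.0, 0.0]], [1.0, 1.0])
features = [[-2.0, 3.0], [-1.0, 4.0]]
activated = ada_relu(features, neg, pos)
```

With a fixed negative slope this reduces to an ordinary leaky ReLU; making the slopes a function of the style vector is what lets the same feature map be rectified differently for different target styles.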

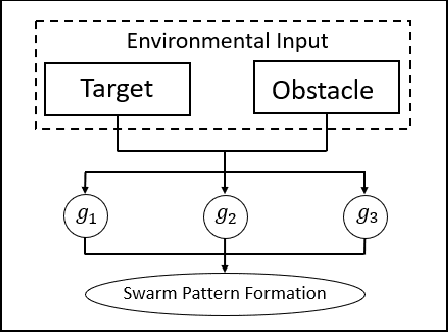

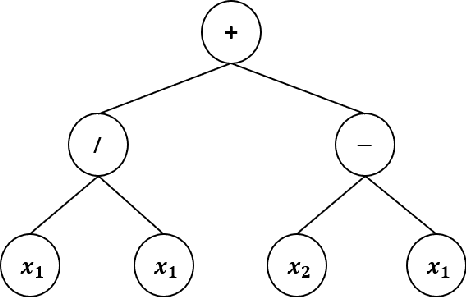

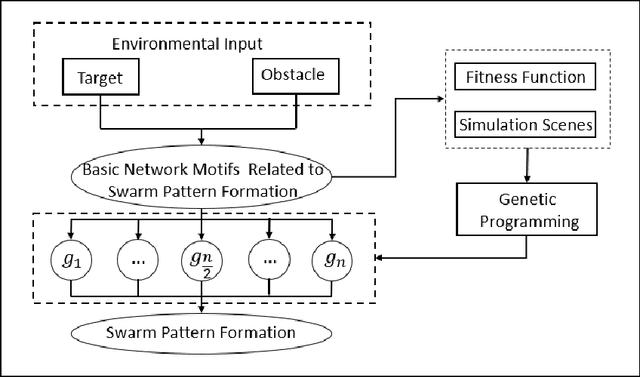

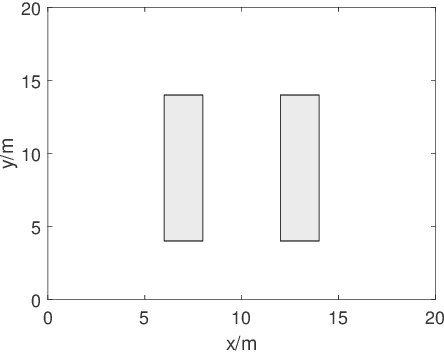

An Automatic Design Framework of Swarm Pattern Formation based on Multi-objective Genetic Programming

Nov 01, 2019

Abstract: Most existing swarm pattern formation methods depend on a predefined gene regulatory network (GRN) structure that requires the designer's prior knowledge, which makes them difficult to adapt to complex and changeable environments. To adapt dynamically to such environments, we propose an automatic design framework for swarm pattern formation based on multi-objective genetic programming. The proposed framework does not need the structure of the GRN-based model to be defined in advance; instead, it uses basic network motifs to construct the GRN-based model automatically. In addition, multi-objective genetic programming (MOGP) is combined with NSGA-II, yielding MOGP-NSGA-II, to balance the complexity and accuracy of the GRN-based model. In the evolutionary process, MOGP-NSGA-II and differential evolution (DE) are applied in parallel to optimize the structure and parameters of the GRN-based model. Simulation results demonstrate that the proposed framework can effectively evolve novel GRN-based models, and that these models not only have a simpler structure and better performance but are also robust to complex and changeable environments.
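The trade-off between model complexity and accuracy mentioned in the abstract above rests on Pareto dominance, the comparison that NSGA-II's non-dominated sorting is built on. The sketch below shows only that comparison, not the full NSGA-II procedure or the paper's framework; the candidate models and their `(complexity, error)` scores are invented for illustration, and both objectives are assumed to be minimized.

```python
from typing import List, Sequence, Tuple

Objectives = Tuple[float, float]  # (complexity, error), both minimized


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a is no worse than b on every objective and better on one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: Sequence[Objectives]) -> List[Objectives]:
    """Keep the points that no other point dominates (first front only)."""
    return [p for p in points
            if not any(dominates(q, p) for q in points if q != p)]


# Hypothetical candidate models scored as (complexity, error).
candidates = [(3, 0.10), (5, 0.05), (4, 0.20), (8, 0.05), (2, 0.30)]
front = pareto_front(candidates)
```

Here `(4, 0.20)` is dropped because `(3, 0.10)` is both simpler and more accurate, and `(8, 0.05)` is dropped because `(5, 0.05)` matches its error with lower complexity. The remaining points are mutually non-dominated, so none can be preferred without weighting one objective over the other.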
