Ze Shi

PanoVPR: Towards Unified Perspective-to-Equirectangular Visual Place Recognition via Sliding Windows across the Panoramic View

Mar 24, 2023

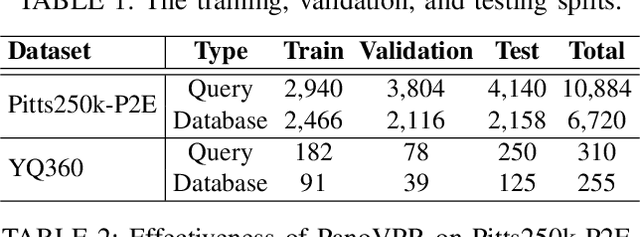

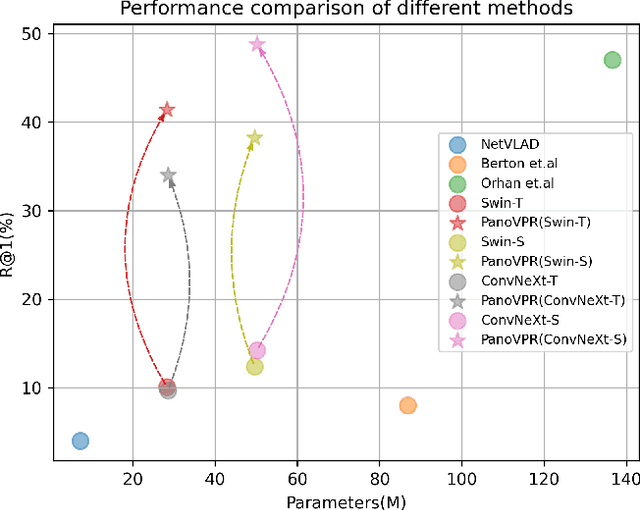

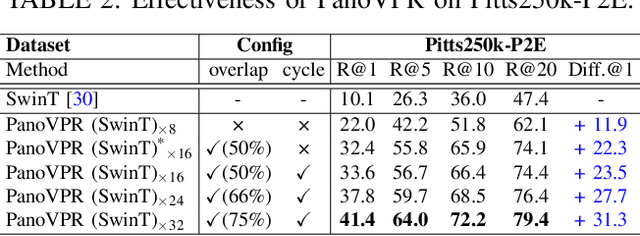

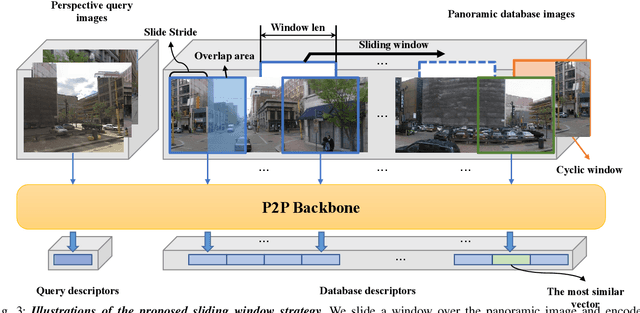

Abstract: Visual place recognition has received increasing attention in recent years as a key technology in autonomous driving and robotics. Current mainstream approaches follow either the perspective-to-perspective (P2P) paradigm, in which perspective queries retrieve perspective images, or the equirectangular-to-equirectangular (E2E) paradigm, in which equirectangular queries retrieve equirectangular images. However, a natural and practical scenario is that users have only consumer-grade pinhole cameras to capture perspective query images, which are then retrieved against panoramic database images from map providers. To this end, we propose PanoVPR, a sliding-window-based perspective-to-equirectangular (P2E) visual place recognition framework, which eliminates the feature truncation caused by hard cropping by sliding windows over the whole equirectangular image and computing and comparing feature descriptors between windows. In addition, this unified framework allows the network structures used in P2P methods to be transferred directly without modification. To facilitate training and evaluation, we derive the pitts250k-P2E dataset from pitts250k and achieve promising results, and we also establish a P2E dataset in a real-world scenario using a mobile robot platform, which we refer to as YQ360. Code and datasets will be made available at https://github.com/zafirshi/PanoVPR.
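The sliding-window matching described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the column-mean descriptor (a stand-in for a learned backbone), the Euclidean distance, and the wrap-around at the 360-degree seam are all assumptions made for illustration. Images are plain lists of pixel rows.

```python
import math


def crop_wrapped(pano, start, window):
    """Take `window` columns starting at `start`, wrapping around the panorama seam.

    Wrap-around is an assumption of this sketch, not something the abstract states.
    """
    width = len(pano[0])
    return [[row[(start + c) % width] for c in range(window)] for row in pano]


def describe(image):
    """Hypothetical descriptor: L2-normalised column means.

    A real system would use a learned feature extractor here.
    """
    height = len(image)
    means = [sum(row[c] for row in image) / height for c in range(len(image[0]))]
    norm = math.sqrt(sum(m * m for m in means)) or 1.0
    return [m / norm for m in means]


def pano_distance(query, pano, stride):
    """Slide a query-sized window across the panorama; return (best distance, best start)."""
    window = len(query[0])
    q = describe(query)
    best_dist, best_start = math.inf, None
    for start in range(0, len(pano[0]), stride):
        d = describe(crop_wrapped(pano, start, window))
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(q, d)))
        if dist < best_dist:
            best_dist, best_start = dist, start
    return best_dist, best_start


def retrieve(query, database, stride):
    """Return the index of the database panorama whose best window is closest to the query."""
    distances = [pano_distance(query, pano, stride)[0] for pano in database]
    return distances.index(min(distances))
```

Because every window is described on its own and the panorama is never cut into fixed, non-overlapping crops, a scene that straddles a crop boundary can still be matched by some window. The stride trades matching granularity against the number of descriptors computed per panorama.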

Vision-based Distributed Multi-UAV Collision Avoidance via Deep Reinforcement Learning for Navigation

Mar 05, 2022

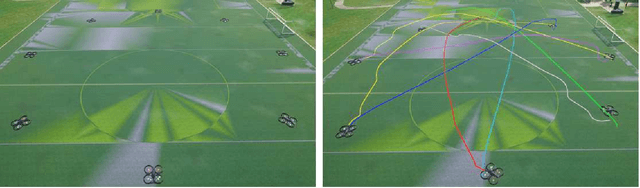

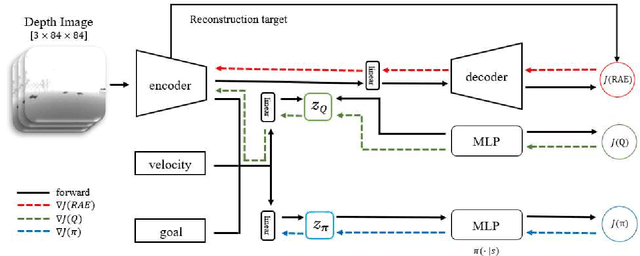

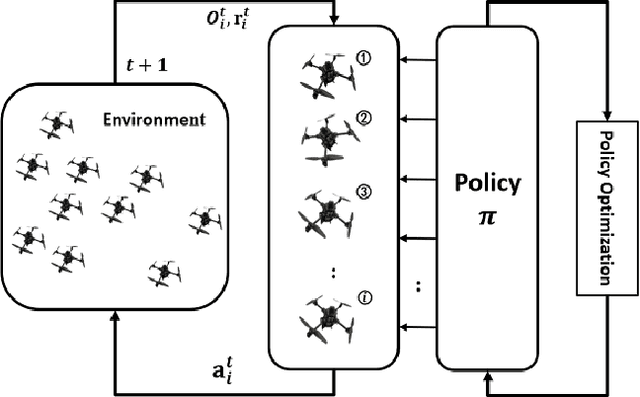

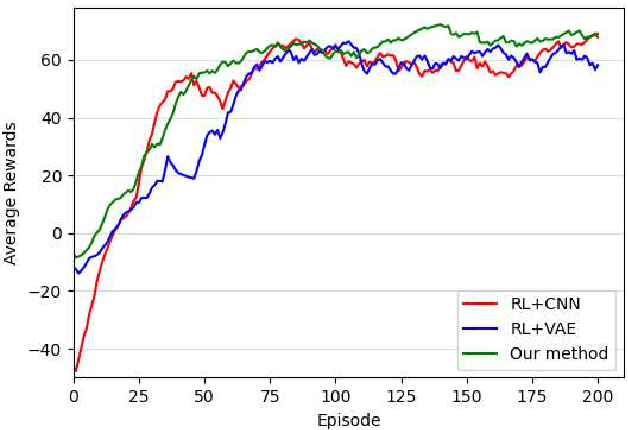

Abstract: Online path planning for multiple unmanned aerial vehicle (multi-UAV) systems is considered a challenging task. It must ensure collision-free path planning in real time, especially when the multi-UAV system becomes very crowded. In this paper, we present a vision-based decentralized collision-avoidance policy for multi-UAV systems, which takes depth images and inertial measurements as sensory inputs and outputs each UAV's steering commands. The policy is trained together with the latent representation of depth images, using a policy-gradient-based reinforcement learning algorithm and an autoencoder, in multi-UAV three-dimensional workspaces. Each UAV follows the same trained policy and acts independently to reach its goal without colliding or communicating with other UAVs. We validate our policy in various simulated scenarios. The experimental results show that our learned policy can guarantee fully autonomous collision-free navigation for multi-UAV systems in three-dimensional workspaces with good robustness and scalability.
