Yusheng Xu

Pairwise Point Cloud Registration using Graph Matching and Rotation-invariant Features

May 05, 2021

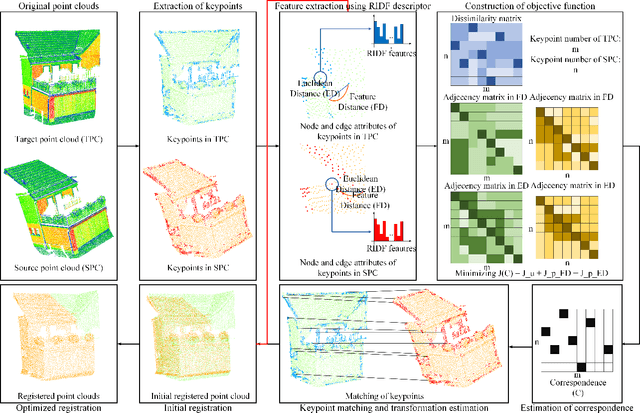

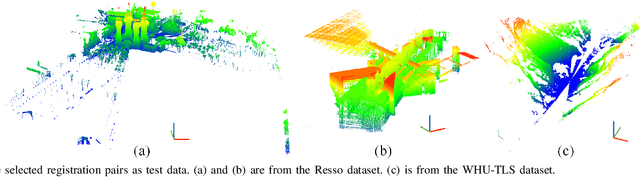

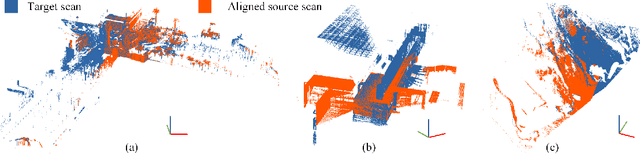

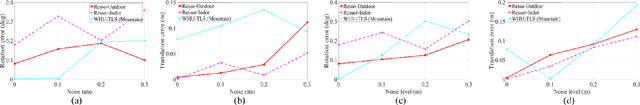

Abstract: Registration is a fundamental and critical task in point cloud processing, which usually depends on finding element correspondences between two point clouds. However, finding reliable correspondences relies on establishing a robust and discriminative description of the elements and on correctly matching corresponding elements. In this letter, we develop a coarse-to-fine registration strategy, which utilizes rotation-invariant features and a new weighted graph matching method for iteratively finding correspondences. In the graph matching method, the similarities of nodes and edges in Euclidean and feature space are formulated to construct the optimization function. The proposed strategy is evaluated using two benchmark datasets and compared with several state-of-the-art methods. According to the experimental results, our proposed method can achieve a fine registration with rotation errors of less than 0.2 degrees and translation errors of less than 0.1 m.
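
The abstract reports rotation errors in degrees and translation errors in metres but does not define them. A minimal sketch of one common convention follows, assuming the rotation error is the angle of the relative rotation between the estimated and reference rotation matrices and the translation error is the Euclidean distance between the two translation vectors. The function names and the sample transforms are hypothetical and are not taken from the paper.

```python
import math

def rotation_error_deg(R_est, R_ref):
    """Angle (degrees) of the relative rotation between two 3x3 rotation matrices."""
    # trace(R_est^T R_ref) equals the sum of element-wise products.
    trace = sum(R_est[i][j] * R_ref[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against round-off before calling acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))

def translation_error(t_est, t_ref):
    """Euclidean distance between two translation vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_ref)))

def rot_z(deg):
    """Rotation about the z-axis, used here only to build sample data."""
    a = math.radians(deg)
    return [[math.cos(a), -math.sin(a), 0.0],
            [math.sin(a), math.cos(a), 0.0],
            [0.0, 0.0, 1.0]]

# Hypothetical estimate that is 0.1 degrees and 0.05 m away from the reference.
R_ref, t_ref = rot_z(30.0), [1.0, 2.0, 3.0]
R_est, t_est = rot_z(30.1), [1.0, 2.0, 3.05]

print(rotation_error_deg(R_est, R_ref))
print(translation_error(t_est, t_ref))
```

Under this convention, the sample estimate would fall within the 0.2 degree and 0.1 m bounds quoted in the abstract.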

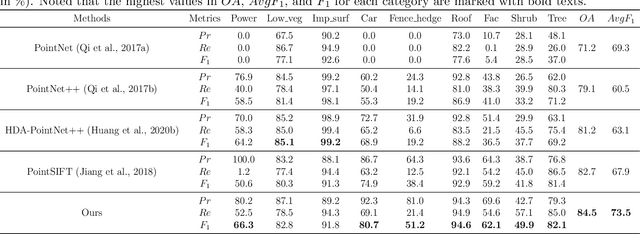

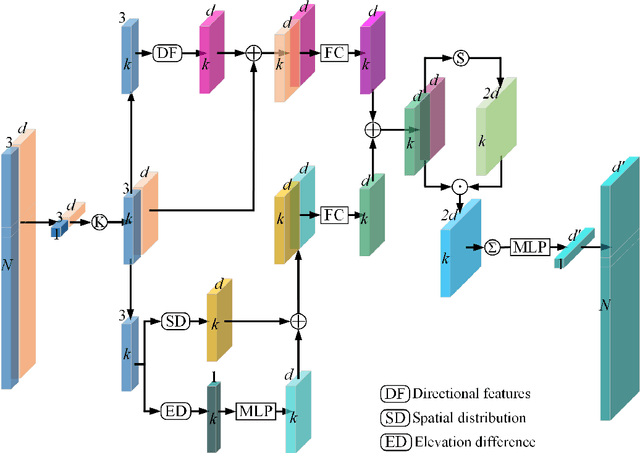

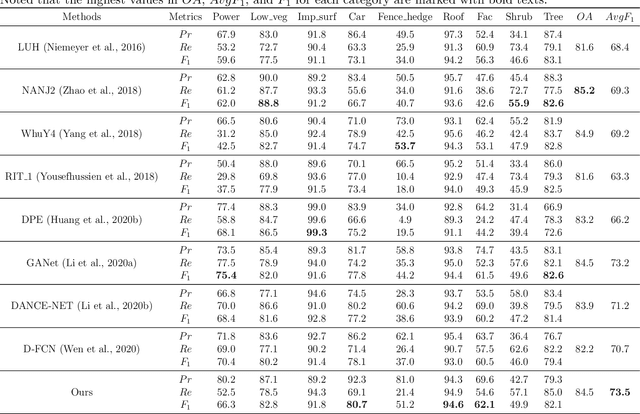

GraNet: Global Relation-aware Attentional Network for ALS Point Cloud Classification

Dec 24, 2020

Abstract: In this work, we propose a novel neural network for the semantic labeling of ALS point clouds, which investigates the importance of long-range spatial and channel-wise relations and is termed the global relation-aware attentional network (GraNet). GraNet first learns local geometric descriptions and local dependencies using a local spatial discrepancy attention convolution module (LoSDA). In LoSDA, the orientation information, spatial distribution, and elevation differences are fully considered by stacking several local spatial geometric learning modules, and the local dependencies are embedded by using an attention pooling module. Then, a global relation-aware attention module (GRA), consisting of a spatial relation-aware attention module (SRA) and a channel relation-aware attention module (CRA), is investigated to further learn the global spatial and channel-wise relationships between any spatial positions and feature vectors. These two modules are embedded in a multi-scale network architecture to further account for scale changes in large urban areas. We conducted comprehensive experiments on two ALS point cloud datasets to evaluate the performance of our proposed framework. The results show that our method achieves higher classification accuracy than other commonly used advanced classification methods. On the ISPRS benchmark dataset, our method improves the overall accuracy (OA) to 84.5% for the classification of nine semantic classes, with an average F1 measure (AvgF1) of 73.5%. In detail, the F1 values for each object class are: powerlines: 66.3%, low vegetation: 82.8%, impervious surface: 91.8%, car: 80.7%, fence: 51.2%, roof: 94.6%, facades: 62.1%, shrub: 49.9%, trees: 82.1%. In addition, experiments were conducted using a new ALS point cloud dataset covering highly dense urban areas.
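
The reported AvgF1 can be checked against the per-class values listed in the abstract. The short sketch below assumes AvgF1 is the unweighted mean of the nine per-class F1 scores, which the abstract does not state explicitly; the variable names are illustrative.

```python
# Per-class F1 scores (%) as listed in the abstract.
f1_per_class = {
    "powerlines": 66.3,
    "low vegetation": 82.8,
    "impervious surface": 91.8,
    "car": 80.7,
    "fence": 51.2,
    "roof": 94.6,
    "facades": 62.1,
    "shrub": 49.9,
    "trees": 82.1,
}

# Unweighted mean over the nine classes (an assumption about how AvgF1 is defined).
avg_f1 = sum(f1_per_class.values()) / len(f1_per_class)
print(round(avg_f1, 1))
```

The nine values sum to 661.5, so the unweighted mean is 73.5%, which agrees with the AvgF1 quoted above.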

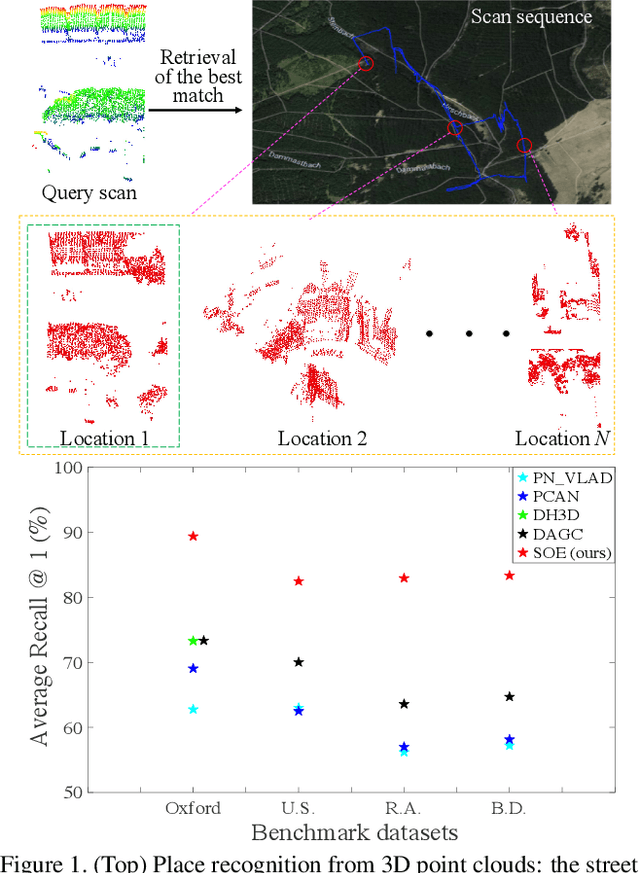

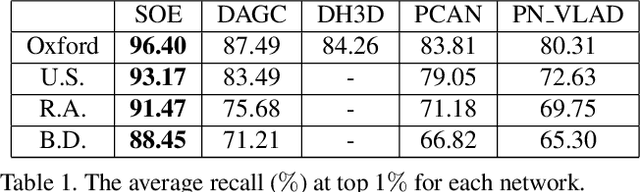

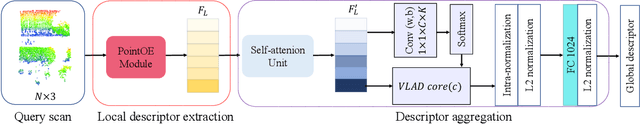

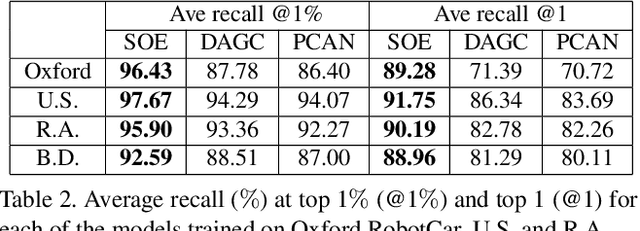

SOE-Net: A Self-Attention and Orientation Encoding Network for Point Cloud based Place Recognition

Nov 24, 2020

Abstract: We tackle the problem of place recognition from point cloud data and introduce a self-attention and orientation encoding network (SOE-Net) that fully explores the relationship between points and incorporates long-range context into point-wise local descriptors. Local information of each point from eight orientations is captured in a PointOE module, whereas long-range feature dependencies among local descriptors are captured with a self-attention unit. Moreover, we propose a novel loss function called Hard Positive Hard Negative quadruplet loss (HPHN quadruplet), which achieves better performance than the commonly used metric learning losses. Experiments on various benchmark datasets demonstrate the promising performance of the proposed network. It significantly outperforms the current state-of-the-art approaches: the average recall at top 1 retrieval on the Oxford RobotCar dataset is improved by over 16%. Code and the trained model will be made publicly available.
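
The headline metric here is recall at top 1 retrieval. A minimal sketch of how such a metric is typically computed follows, assuming each query and database submap is represented by a global descriptor and that a query counts as correctly localized when its nearest database descriptor is one of its true matches. The function name, the toy 2D descriptors, and the match sets are hypothetical; the benchmark's actual protocol is not described in the abstract.

```python
import math

def recall_at_1(queries, database, true_matches):
    """Fraction of queries whose nearest database descriptor is a true match.

    queries, database: lists of descriptor vectors (lists of floats).
    true_matches: one set of database indices per query.
    """
    hits = 0
    for q_idx, q in enumerate(queries):
        # Index of the database descriptor closest to the query (Euclidean distance).
        nearest = min(range(len(database)), key=lambda d: math.dist(q, database[d]))
        if nearest in true_matches[q_idx]:
            hits += 1
    return hits / len(queries)

# Toy descriptors, for illustration only.
database = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
queries = [[0.1, 0.0], [0.9, 0.1], [0.4, 0.6]]
true_matches = [{0}, {1}, {0}]

print(recall_at_1(queries, database, true_matches))
```

In this toy case the third query retrieves database entry 2 rather than its true match, so two of the three queries are counted as hits.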

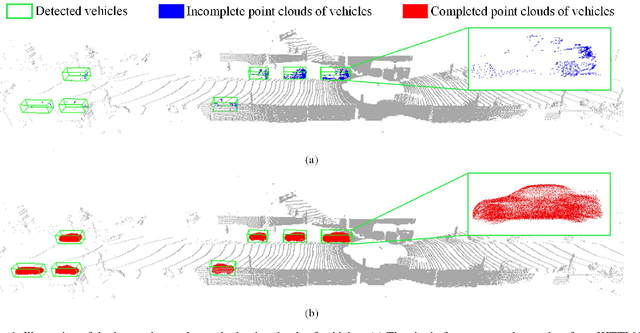

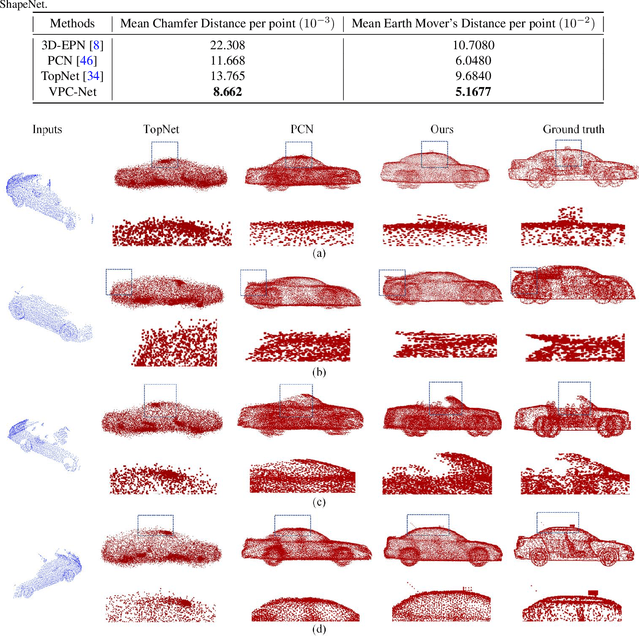

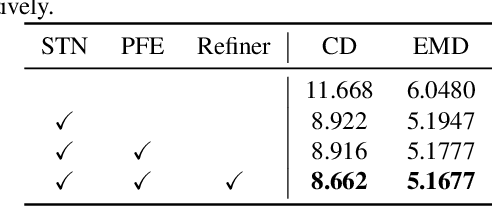

VPC-Net: Completion of 3D Vehicles from MLS Point Clouds

Aug 08, 2020

Abstract: As a dynamic and essential component of the road environment in urban scenarios, vehicles are the investigation target of greatest concern. To monitor their behaviors and extract their geometric characteristics, an accurate and instant measurement of vehicles plays a vital role in the fields of remote sensing and computer vision. 3D point clouds acquired from mobile laser scanning (MLS) systems deliver 3D information on road scenes in unprecedented detail while driving. They have proven to be an adequate data source in the fields of intelligent transportation and autonomous driving, especially for extracting vehicles. However, 3D point clouds of vehicles acquired from MLS systems are inevitably incomplete due to object occlusion or self-occlusion. To tackle this problem, we propose a neural network, named Vehicle Points Completion-Net (VPC-Net), to synthesize complete, dense, and uniform point clouds for vehicles from MLS data. In this network, we introduce a new encoder module, consisting of a spatial transformer network and a point feature enhancement layer, to extract global features from the input instance. Moreover, a new refiner module is presented to preserve the vehicle details from the inputs and refine the complete outputs with fine-grained information. Given sparse and partial point clouds of vehicles, the network can generate complete and realistic structures and keep the fine-grained details from the partial inputs. We evaluated the proposed VPC-Net in different experiments using synthetic and real-scan datasets and applied the results to 3D vehicle monitoring tasks. Quantitative and qualitative experiments demonstrate the promising performance of VPC-Net and show state-of-the-art results.

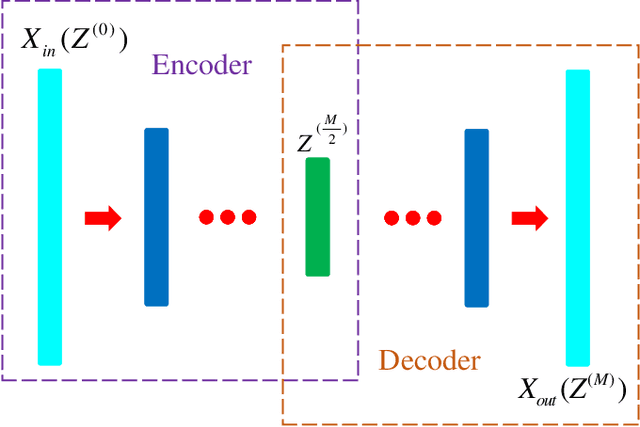

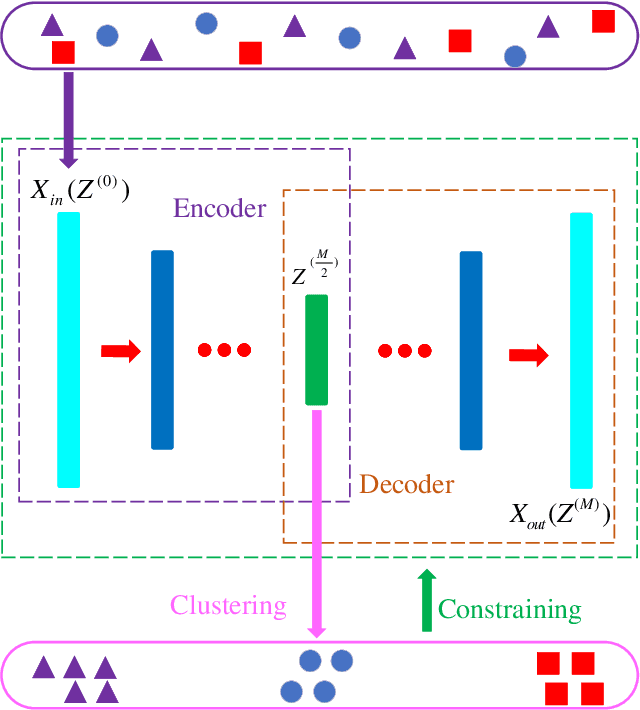

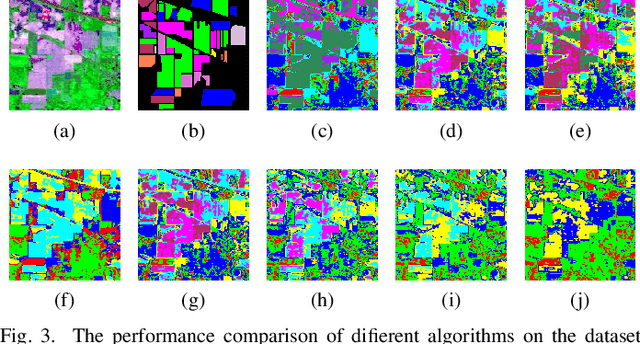

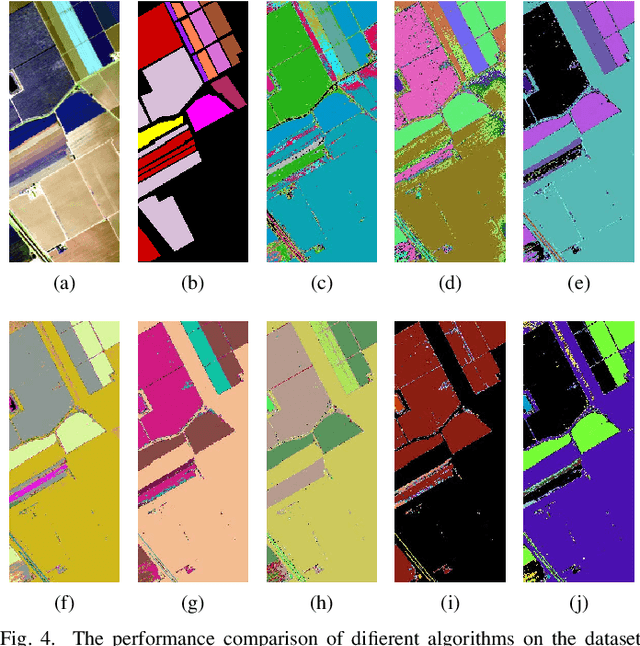

Deep Clustering With Intra-class Distance Constraint for Hyperspectral Images

Apr 01, 2019

Abstract: The high dimensionality of hyperspectral images often results in the degradation of clustering performance. Owing to their powerful deep feature extraction and non-linear feature representation abilities, clustering algorithms based on deep learning have become a hot research topic in the field of hyperspectral remote sensing. However, most deep clustering algorithms for hyperspectral images utilize deep neural networks as feature extractors without considering prior knowledge constraints that are suitable for clustering. To solve this problem, we propose an intra-class distance constrained deep clustering algorithm for high-dimensional hyperspectral images. The proposed algorithm constrains the feature mapping procedure of the auto-encoder network by intra-class distance so that raw images are transformed from the original high-dimensional space to a low-dimensional feature space that is more conducive to clustering. Furthermore, the related learning process is treated as a joint optimization problem of deep feature extraction and clustering. Experimental results demonstrate the strong competitiveness of the proposed algorithm in comparison with state-of-the-art clustering methods for hyperspectral images.
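
The abstract does not give the form of the intra-class distance constraint. As a rough illustration only, the sketch below assumes it can be expressed as the mean squared distance between each low-dimensional feature and the centroid of its assigned cluster, a quantity that becomes smaller as clusters become more compact. The function name and toy features are hypothetical, and the paper's actual constraint and its coupling with the auto-encoder may differ.

```python
def intra_class_distance(features, labels):
    """Mean squared distance of each feature vector to its cluster centroid."""
    # Group feature vectors by their cluster label.
    groups = {}
    for f, y in zip(features, labels):
        groups.setdefault(y, []).append(f)

    total = 0.0
    for members in groups.values():
        dim = len(members[0])
        centroid = [sum(m[k] for m in members) / len(members) for k in range(dim)]
        # Accumulate squared distances of the members to their centroid.
        total += sum(sum((m[k] - centroid[k]) ** 2 for k in range(dim)) for m in members)
    return total / len(features)

# Toy 2D features: two well-separated groups.
features = [[0.0, 0.0], [2.0, 0.0], [10.0, 10.0], [10.0, 12.0]]

compact = intra_class_distance(features, [0, 0, 1, 1])  # labels follow the groups
mixed = intra_class_distance(features, [0, 1, 0, 1])    # labels cut across the groups
print(compact, mixed)
```

A term of this kind could be added to a reconstruction loss so that the learned feature space favors compact clusters; assignments that cut across the natural groups yield a much larger value than assignments that follow them.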
