VPC-Net: Completion of 3D Vehicles from MLS Point Clouds

Paper and Code

Aug 08, 2020

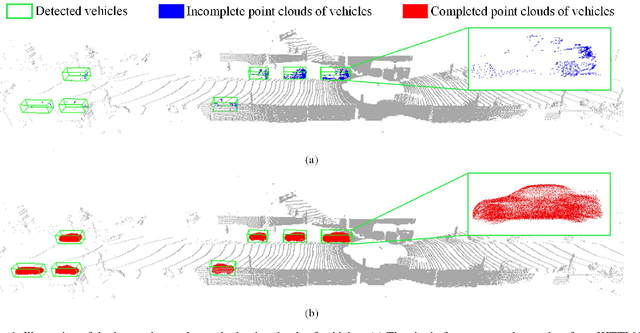

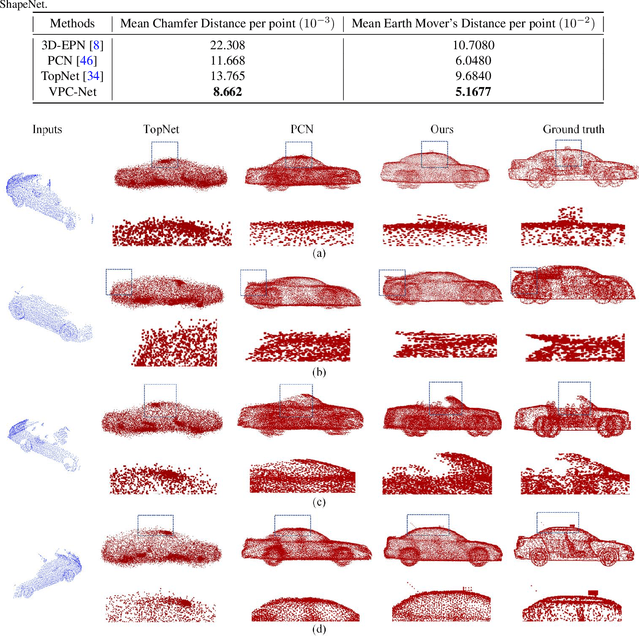

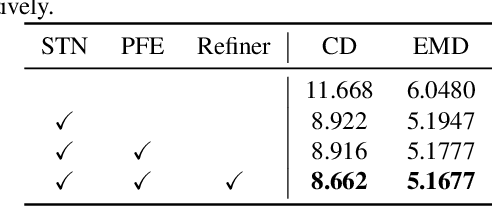

Vehicles are among the most studied objects in urban road environments, where they form a dynamic and essential component. Accurate and instantaneous measurement of vehicles is vital in remote sensing and computer vision for monitoring their behavior and extracting their geometric characteristics. 3D point clouds acquired by mobile laser scanning (MLS) systems capture road scenes in unprecedented detail as the platform drives. They have proven to be an adequate data source in intelligent transportation and autonomous driving, especially for extracting vehicles. However, 3D point clouds of vehicles acquired by MLS systems are inevitably incomplete because of occlusion by other objects or self-occlusion. To tackle this problem, we propose a neural network, named Vehicle Points Completion-Net (VPC-Net), that synthesizes complete, dense, and uniform point clouds of vehicles from MLS data. The network introduces a new encoder module, consisting of a spatial transformer network and a point feature enhancement layer, to extract global features from the input instance. A new refiner module preserves the vehicle details present in the input and refines the complete output with fine-grained information. Given sparse and partial point clouds of vehicles, the network generates complete and realistic structures while keeping the fine-grained details of the partial inputs. We evaluated VPC-Net in experiments on synthetic and real-scan datasets and applied the results to 3D vehicle monitoring tasks. Quantitative and qualitative experiments demonstrate the promising performance of VPC-Net and show state-of-the-art results.
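The abstract does not describe the encoder's internals, so the following is only a generic illustration of what "extracting a global feature" from an unordered point set can look like. It is not VPC-Net's encoder: it omits the spatial transformer network and the point feature enhancement layer. It assumes a shared per-point MLP followed by max-pooling, a common pattern in point cloud networks. The function name `global_feature`, the random weights, and the layer sizes are all hypothetical.

```python
import numpy as np

def global_feature(points, w1, w2):
    """Map an (N, 3) point cloud to one order-independent feature vector."""
    # Shared per-point MLP: the same weights are applied to every point.
    hidden = np.maximum(points @ w1, 0.0)   # (N, H), ReLU activation
    per_point = hidden @ w2                 # (N, F)
    # Max-pooling over the point axis is symmetric, so point order is irrelevant.
    return per_point.max(axis=0)            # (F,)

rng = np.random.default_rng(0)
w1 = rng.normal(size=(3, 16))               # hypothetical, untrained weights
w2 = rng.normal(size=(16, 32))
partial_cloud = rng.normal(size=(128, 3))   # stand-in for a partial vehicle scan

feat = global_feature(partial_cloud, w1, w2)
shuffled = global_feature(rng.permutation(partial_cloud), w1, w2)
```

Because the pooling step is symmetric, `feat` and `shuffled` agree up to floating-point tolerance. This matters for laser-scan data, where the points arrive in no meaningful order.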
