Yuchong Zhang

Multimodal "Puppeteer": An Exploration of Robot Teleoperation Via Virtual Counterpart with LLM-Driven Voice and Gesture Interaction in Augmented Reality

Jun 16, 2025

Abstract:The integration of robotics and augmented reality (AR) holds transformative potential for advancing human-robot interaction (HRI), offering enhancements in usability, intuitiveness, accessibility, and collaborative task performance. This paper introduces and evaluates a novel multimodal AR-based robot puppeteer framework that enables intuitive teleoperation via a virtual counterpart through large language model (LLM)-driven voice commands and hand gesture interactions. Utilizing the Meta Quest 3, users interact with a virtual counterpart robot in real time, effectively "puppeteering" its physical counterpart within an AR environment. We conducted a within-subject user study with 42 participants performing robotic cube pick-and-place with pattern matching tasks under two conditions: gesture-only interaction and combined voice-and-gesture interaction. Both objective performance metrics and subjective user experience (UX) measures were assessed, including an extended comparative analysis between roboticists and non-roboticists. The results provide key insights into how multimodal input influences contextual task efficiency, usability, and user satisfaction in AR-based HRI. Our findings offer practical implications for designing effective AR-enhanced HRI systems.

FLAME: A Federated Learning Benchmark for Robotic Manipulation

Mar 03, 2025

Abstract:Recent progress in robotic manipulation has been fueled by large-scale datasets collected across diverse environments. Training robotic manipulation policies on these datasets is traditionally performed in a centralized manner, raising concerns regarding scalability, adaptability, and data privacy. While federated learning enables decentralized, privacy-preserving training, its application to robotic manipulation remains largely unexplored. We introduce FLAME (Federated Learning Across Manipulation Environments), the first benchmark designed for federated learning in robotic manipulation. FLAME consists of: (i) a set of large-scale datasets of over 160,000 expert demonstrations of multiple manipulation tasks, collected across a wide range of simulated environments; (ii) a training and evaluation framework for robotic policy learning in a federated setting. We evaluate standard federated learning algorithms in FLAME, showing their potential for distributed policy learning and highlighting key challenges. Our benchmark establishes a foundation for scalable, adaptive, and privacy-aware robotic learning.

Advancing User-Voice Interaction: Exploring Emotion-Aware Voice Assistants Through a Role-Swapping Approach

Feb 21, 2025

Abstract:As voice assistants (VAs) become increasingly integrated into daily life, the need for emotion-aware systems that can recognize and respond appropriately to user emotions has grown. While significant progress has been made in speech emotion recognition (SER) and sentiment analysis, effectively addressing user emotions, particularly negative ones, remains a challenge. This study explores human emotional response strategies in VA interactions using a role-swapping approach, where participants regulate AI emotions rather than receiving pre-programmed responses. Through speech feature analysis and natural language processing (NLP), we examined acoustic and linguistic patterns across various emotional scenarios. Results show that participants favor neutral or positive emotional responses when engaging with negative emotional cues, highlighting a natural tendency toward emotional regulation and de-escalation. Key acoustic indicators such as root mean square (RMS), zero-crossing rate (ZCR), and jitter were identified as sensitive to emotional states, while sentiment polarity and lexical diversity (type-token ratio, TTR) distinguished between positive and negative responses. These findings provide valuable insights for developing adaptive, context-aware VAs capable of delivering empathetic, culturally sensitive, and user-aligned responses. By understanding how humans naturally regulate emotions in AI interactions, this research contributes to the design of more intuitive and emotionally intelligent voice assistants, enhancing user trust and engagement in human-AI interactions.

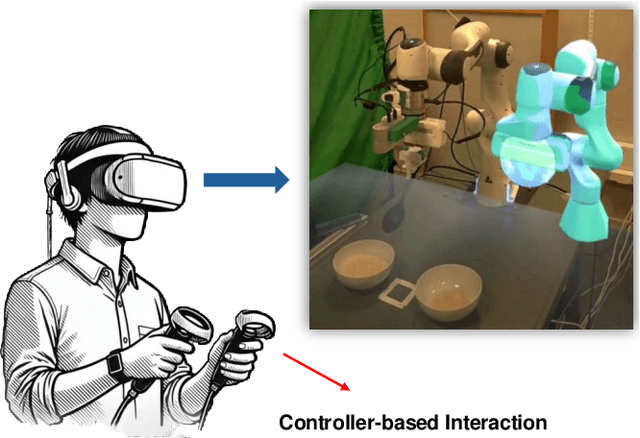

LLM-Driven Augmented Reality Puppeteer: Controller-Free Voice-Commanded Robot Teleoperation

Feb 13, 2025

Abstract:The integration of robotics and augmented reality (AR) presents transformative opportunities for advancing human-robot interaction (HRI) by improving usability, intuitiveness, and accessibility. This work introduces a controller-free, LLM-driven voice-commanded AR puppeteering system, enabling users to teleoperate a robot by manipulating its virtual counterpart in real time. By leveraging natural language processing (NLP) and AR technologies, our system -- prototyped using Meta Quest 3 -- eliminates the need for physical controllers, enhancing ease of use while minimizing potential safety risks associated with direct robot operation. A preliminary user demonstration successfully validated the system's functionality, demonstrating its potential for safer, more intuitive, and immersive robotic control.

The 1st InterAI Workshop: Interactive AI for Human-centered Robotics

Sep 17, 2024

Abstract:The workshop is affiliated with the 33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN 2024), held August 26-30, 2024, in Pasadena, CA, USA. It is designed as a half-day event, extending over four hours from 9:00 to 12:30 PST. It accommodates both in-person and virtual attendees (via Zoom), ensuring a flexible participation mode. The agenda includes a diverse range of sessions: two keynote speeches offering insightful perspectives, two dedicated paper presentation sessions, an interactive panel discussion that fosters dialogue among experts and facilitates deeper dives into specific topics, and a 15-minute coffee break. The workshop website: https://sites.google.com/view/interaiworkshops/home.

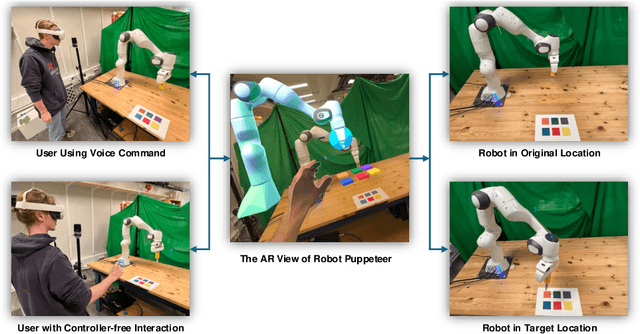

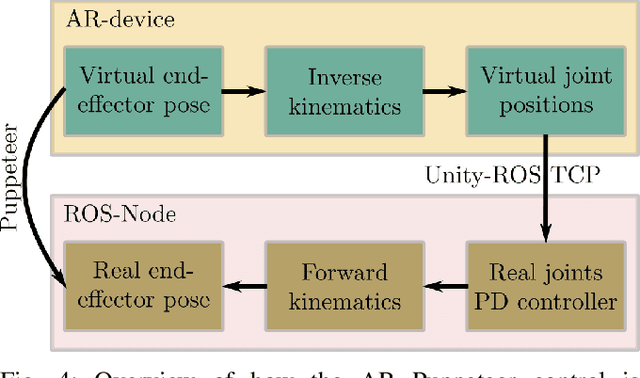

Puppeteer Your Robot: Augmented Reality Leader-Follower Teleoperation

Jul 16, 2024

Abstract:High-quality demonstrations are necessary when learning complex and challenging manipulation tasks. In this work, we introduce an approach to puppeteer a robot by controlling a virtual robot in an augmented reality setting. Our system retains the intuitiveness of a physical leader-follower setup while avoiding the need for an expensive physical setup. In addition, augmented reality provides the user with additional information. We validate our system with a pilot study (n=10) on block stacking and rice scooping tasks, in which the majority of participants rate the system favorably. The Oculus app and corresponding ROS code are available on the project website: https://ar-puppeteer.github.io/

Vision Beyond Boundaries: An Initial Design Space of Domain-specific Large Vision Models in Human-robot Interaction

Apr 23, 2024

Abstract:The emergence of Large Vision Models (LVMs) follows in the footsteps of the recent prosperity of Large Language Models (LLMs). However, there is a noticeable gap in structured research applying LVMs to Human-Robot Interaction (HRI), despite extensive evidence supporting the efficacy of vision models in enhancing interactions between humans and robots. Recognizing the vast and anticipated potential, we introduce an initial design space that incorporates domain-specific LVMs, chosen for their superior performance over normal models. We delve into three primary dimensions: HRI contexts, vision-based tasks, and specific domains. The empirical validation was conducted with 15 experts across six evaluation metrics, showcasing the primary efficacy in relevant decision-making scenarios. We explore the process of ideation and potential application scenarios, envisioning this design space as a foundational guideline for future HRI system design, emphasizing accurate domain alignment and model selection.

Will You Participate? Exploring the Potential of Robotics Competitions on Human-centric Topics

Mar 27, 2024

Abstract:This paper presents findings from an exploratory needfinding study investigating the current state of research in the robotics community, and the community's potential participation in competitions, on four human-centric topics: safety, privacy, explainability, and federated learning. We conducted a survey with 34 participants across three distinguished European robotics consortia, nearly 60% of whom possessed over five years of research experience in robotics. Our qualitative and quantitative analysis revealed that current mainstream robotics researchers prioritize safety and explainability, expressing a greater willingness to invest in further research in these areas. Conversely, our results indicate that privacy and federated learning garner less attention and are perceived to have lower potential. Additionally, the study suggests a lack of enthusiasm within the robotics community for participating in competitions related to these topics. Based on these findings, we recommend targeting other communities, such as the machine learning community, for future competitions related to these four human-centric topics.

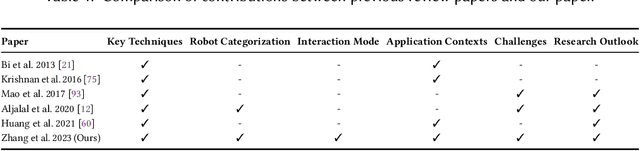

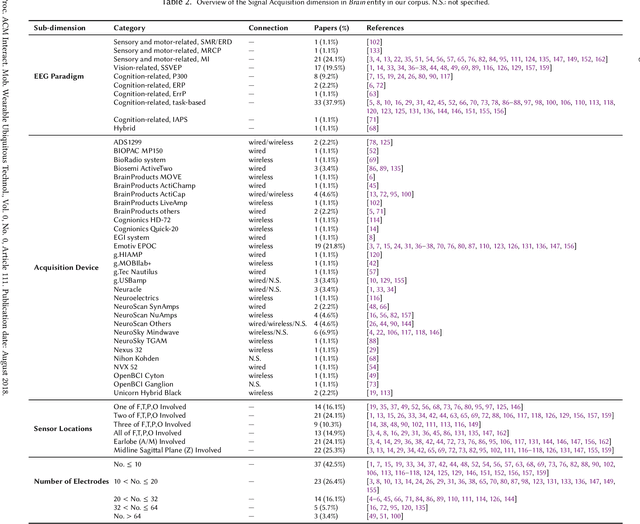

Mind Meets Robots: A Review of EEG-Based Brain-Robot Interaction Systems

Mar 13, 2024

Abstract:Brain-robot interaction (BRI) empowers individuals to control (semi-)automated machines through their brain activity, either passively or actively. In the past decade, BRI systems have achieved remarkable success, predominantly harnessing electroencephalogram (EEG) signals as the central component. This paper offers an up-to-date and exhaustive examination of 87 curated studies published during the last five years (2018-2023), focusing on identifying the research landscape of EEG-based BRI systems. This review aims to consolidate and underscore methodologies, interaction modes, application contexts, system evaluation, existing challenges, and potential avenues for future investigations in this domain. Based on our analysis, we present a BRI system model with three entities: Brain, Robot, and Interaction, depicting the internal relationships of a BRI system. We especially investigate the essence and principles of interaction modes between human brains and robots, a domain that has not yet been identified anywhere. We then discuss these entities along the different dimensions they encompass. Within this model, we scrutinize and classify current research, reveal insights, specify challenges, and provide recommendations for future research trajectories in this field. Meanwhile, we envision that our findings offer a design space for future human-robot interaction (HRI) research, informing the creation of efficient BRI frameworks.

FENDA-FL: Personalized Federated Learning on Heterogeneous Clinical Datasets

Sep 28, 2023

Abstract:Federated learning (FL) is increasingly being recognized as a key approach to overcoming the data silos that so frequently obstruct the training and deployment of machine-learning models in clinical settings. This work contributes to a growing body of FL research specifically focused on clinical applications along three important directions. First, an extension of the FENDA method (Kim et al., 2016) to the FL setting is proposed. Experiments conducted on the FLamby benchmarks (du Terrail et al., 2022a) and GEMINI datasets (Verma et al., 2017) show that the approach is robust to heterogeneous clinical data and often outperforms existing global and personalized FL techniques. Further, the experimental results represent substantive improvements over the original FLamby benchmarks and expand such benchmarks to include evaluation of personalized FL methods. Finally, we advocate for a comprehensive checkpointing and evaluation framework for FL to better reflect practical settings and provide multiple baselines for comparison.
