Jonne van Haastregt

Puppeteer Your Robot: Augmented Reality Leader-Follower Teleoperation

Jul 16, 2024

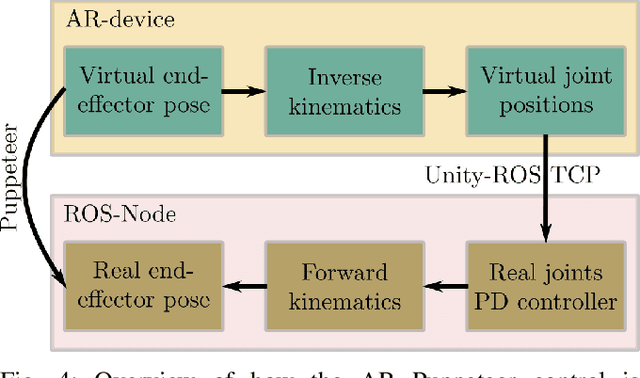

Abstract: High-quality demonstrations are necessary when learning complex and challenging manipulation tasks. In this work, we introduce an approach to puppeteer a robot by controlling a virtual robot in an augmented reality setting. Our system retains the intuitiveness of a physical leader-follower setup while avoiding the need for expensive physical hardware. In addition, augmented reality provides the user with additional information. We validate our system with a pilot study (n=10) on block stacking and rice scooping tasks, where the majority rates the system favorably. The Oculus app and corresponding ROS code are available on the project website: https://ar-puppeteer.github.io/

A Robotic Skill Learning System Built Upon Diffusion Policies and Foundation Models

Mar 25, 2024

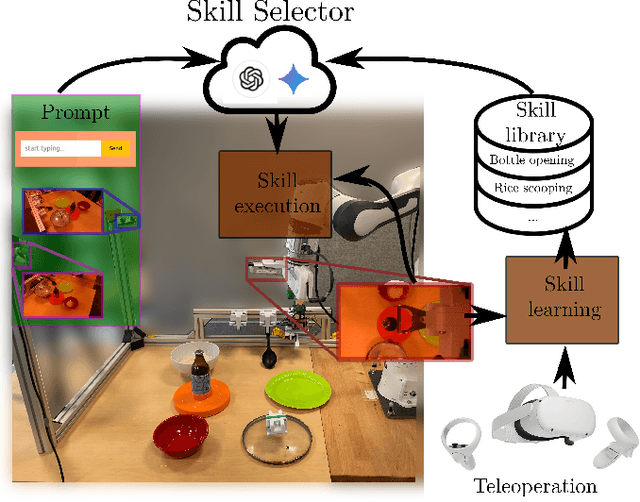

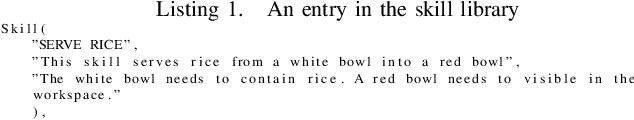

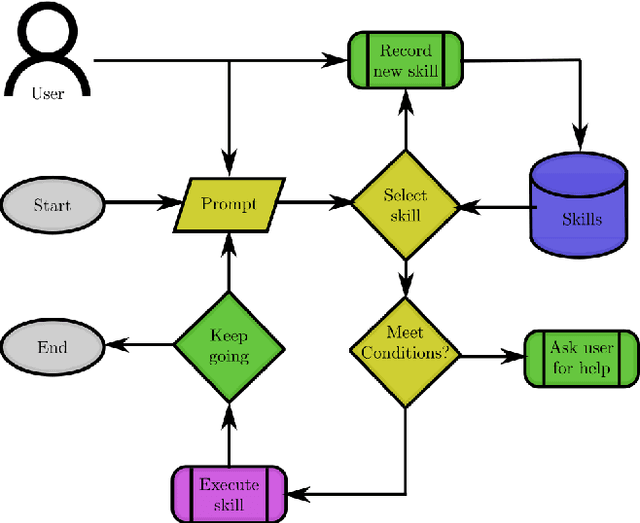

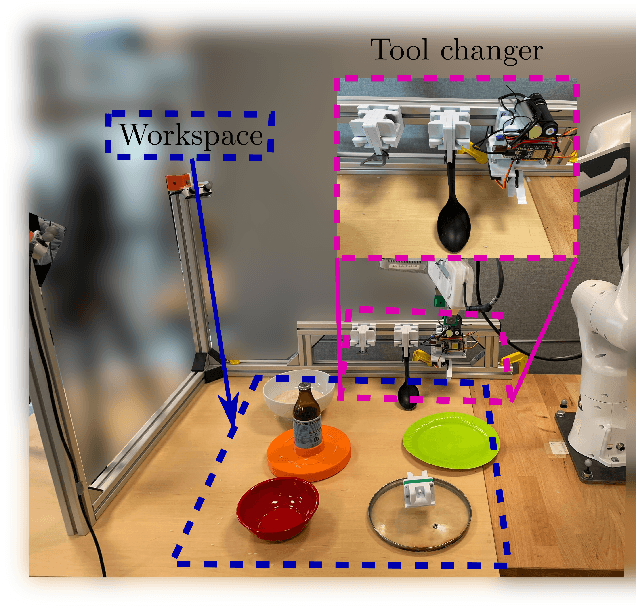

Abstract: In this paper, we build upon two major recent developments in the field, Diffusion Policies for visuomotor manipulation and large pre-trained multimodal foundation models, to obtain a robotic skill learning system. The system can obtain new skills via the behavioral cloning approach of visuomotor diffusion policies given teleoperated demonstrations. Foundation models are used to perform skill selection given the user's prompt in natural language. Before executing a skill, the foundation model performs a precondition check given an observation of the workspace. We compare the performance of different foundation models to this end and give a detailed experimental evaluation of the skills taught by the user in simulation and the real world. Finally, we showcase the combined system on a challenging food serving scenario in the real world. Videos of all experimental executions, as well as the process of teaching new skills in simulation and the real world, are available on the project's website.
