Farzaneh Taleb

Human-Aligned Image Models Improve Visual Decoding from the Brain

Feb 05, 2025

Abstract:Decoding visual images from brain activity has significant potential for advancing brain-computer interaction and enhancing the understanding of human perception. Recent approaches align the representation spaces of images and brain activity to enable visual decoding. In this paper, we introduce the use of human-aligned image encoders to map brain signals to images. We hypothesize that these models more effectively capture perceptual attributes associated with the rapid visual stimuli presentations commonly used in visual brain data recording experiments. Our empirical results support this hypothesis, demonstrating that this simple modification improves image retrieval accuracy by up to 21% compared to state-of-the-art methods. Comprehensive experiments confirm consistent performance improvements across diverse EEG architectures, image encoders, alignment methods, participants, and brain imaging modalities.

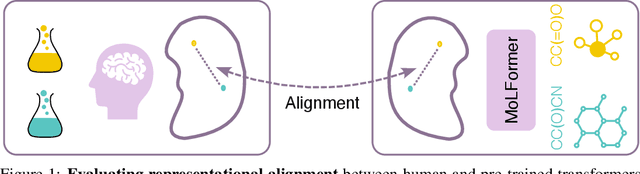

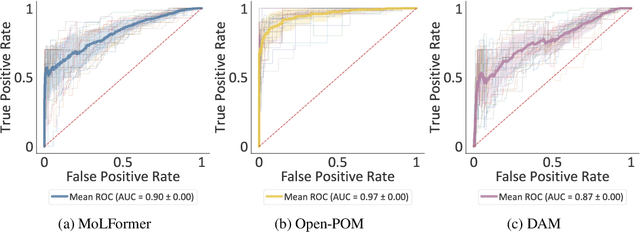

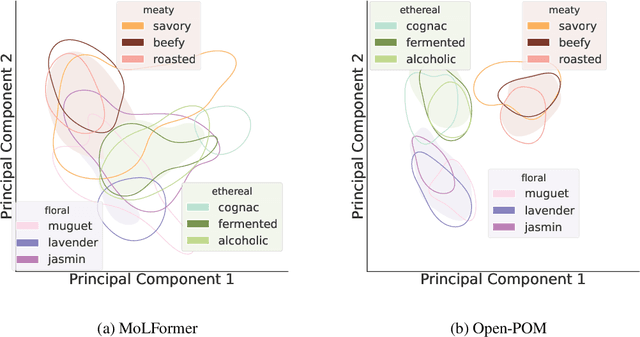

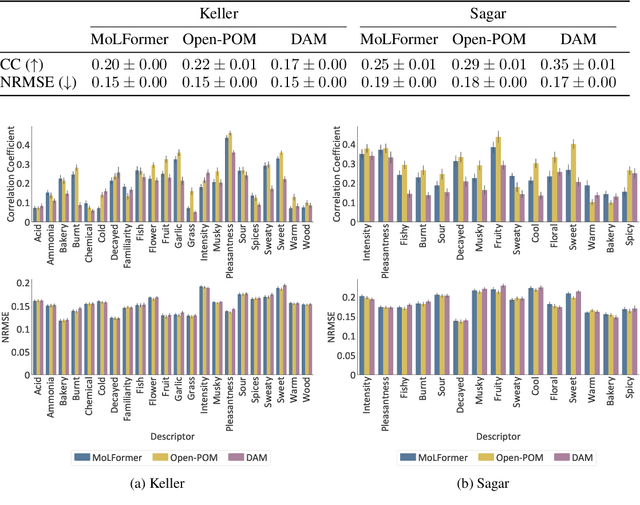

Can Transformers Smell Like Humans?

Nov 05, 2024

Abstract:The human brain encodes stimuli from the environment into representations that form a sensory perception of the world. Despite recent advances in understanding visual and auditory perception, olfactory perception remains an under-explored topic in the machine learning community due to the lack of large-scale datasets annotated with labels of human olfactory perception. In this work, we ask the question of whether pre-trained transformer models of chemical structures encode representations that are aligned with human olfactory perception, i.e., can transformers smell like humans? We demonstrate that representations encoded from transformers pre-trained on general chemical structures are highly aligned with human olfactory perception. We use multiple datasets and different types of perceptual representations to show that the representations encoded by transformer models are able to predict: (i) labels associated with odorants provided by experts; (ii) continuous ratings provided by human participants with respect to pre-defined descriptors; and (iii) similarity ratings between odorants provided by human participants. Finally, we evaluate the extent to which this alignment is associated with physicochemical features of odorants known to be relevant for olfactory decoding.

Mind Meets Robots: A Review of EEG-Based Brain-Robot Interaction Systems

Mar 13, 2024

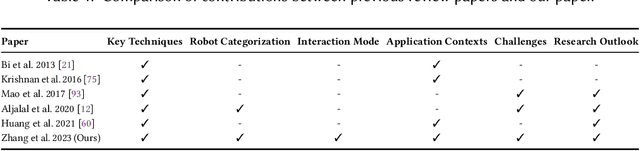

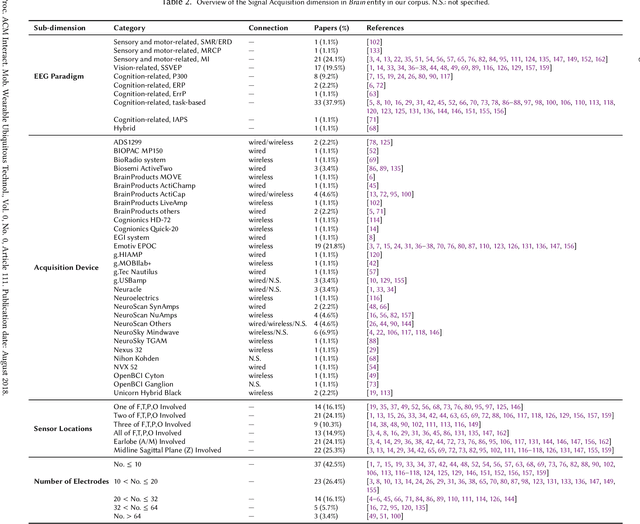

Abstract:Brain-robot interaction (BRI) empowers individuals to control (semi-)automated machines through their brain activity, either passively or actively. In the past decade, BRI systems have achieved remarkable success, predominantly harnessing electroencephalogram (EEG) signals as the central component. This paper offers an up-to-date and exhaustive examination of 87 curated studies published during the last five years (2018-2023), focusing on identifying the research landscape of EEG-based BRI systems. This review aims to consolidate and underscore methodologies, interaction modes, application contexts, system evaluation, existing challenges, and potential avenues for future investigations in this domain. Based on our analysis, we present a BRI system model with three entities: Brain, Robot, and Interaction, depicting the internal relationships of a BRI system. We especially investigate the essence and principles of interaction modes between human brains and robots, an area that has not previously been identified. We then discuss each entity along the dimensions it encompasses. Within this model, we scrutinize and classify current research, reveal insights, specify challenges, and provide recommendations for future research trajectories in this field. We also envision that our findings offer a design space for future human-robot interaction (HRI) research, informing the creation of efficient BRI frameworks.
