Yining Zhang

ALOHA: Empowering Multilingual Agent for University Orientation with Hierarchical Retrieval

May 13, 2025

Abstract:The rise of Large Language Models (LLMs) has revolutionized information retrieval, allowing users to obtain answers through complex instructions within conversations. However, publicly available services remain inadequate for faculty and students searching for campus-specific information. This is primarily due to LLMs' lack of domain-specific knowledge and the limitations of search engines in supporting multilingual and timely scenarios. To tackle these challenges, we introduce ALOHA, a multilingual agent enhanced by hierarchical retrieval for university orientation. We also integrate external APIs into the front-end interface to provide interactive service. The human evaluation and case study show that our proposed system yields correct, timely, and user-friendly responses to queries in multiple languages, surpassing commercial chatbots and search engines. The system has been deployed and has served more than 12,000 people.

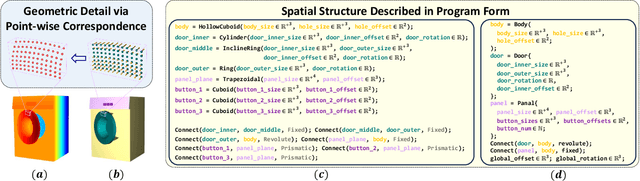

Arti-PG: A Toolbox for Procedurally Synthesizing Large-Scale and Diverse Articulated Objects with Rich Annotations

Dec 19, 2024

Abstract:Acquiring substantial volumes of 3D articulated object data is expensive and time-consuming, and the resulting scarcity of such data is an obstacle for deep learning methods in various articulated object understanding tasks. Meanwhile, pairing these objects with detailed annotations to enable training for various tasks is also difficult and labor-intensive. To quickly gather a large number of 3D articulated objects with comprehensive and detailed annotations for training, we propose the Articulated Object Procedural Generation toolbox, a.k.a. the Arti-PG toolbox. The Arti-PG toolbox consists of i) descriptions of articulated objects by means of a generalized structure program along with their analytic correspondence to the objects' point cloud, ii) procedural rules about manipulations on the structure program to synthesize large-scale and diverse new articulated objects, and iii) mathematical descriptions of knowledge (e.g. affordance, semantics, etc.) to provide annotations to the synthesized objects. Arti-PG has two appealing properties for providing training data for articulated object understanding tasks: i) objects are created with unlimited variations in shape through program-oriented structure manipulation, and ii) Arti-PG is widely applicable to diverse tasks by easily providing comprehensive and detailed annotations. Arti-PG now supports the procedural generation of 26 categories of articulated objects and provides annotations across a wide range of both vision and manipulation tasks, and we provide extensive experiments that demonstrate its advantages. We will make the Arti-PG toolbox publicly available for the community to use.

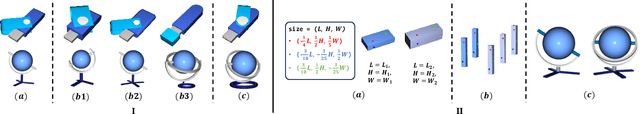

ConceptFactory: Facilitate 3D Object Knowledge Annotation with Object Conceptualization

Nov 01, 2024

Abstract:We present ConceptFactory, a novel scope to facilitate more efficient annotation of 3D object knowledge by recognizing 3D objects through generalized concepts (i.e. object conceptualization), aiming to help machine intelligence learn comprehensive object knowledge from both vision and robotics aspects. This idea originates from findings in human cognition research that the perceptual recognition of objects can be explained as a process of arranging generalized geometric components (e.g. cuboids and cylinders). ConceptFactory consists of two critical parts: i) ConceptFactory Suite, a unified toolbox that adopts the Standard Concept Template Library (STL-C) to drive a web-based platform for object conceptualization, and ii) ConceptFactory Asset, a large collection of conceptualized objects acquired using ConceptFactory Suite. Our approach enables researchers to effortlessly acquire or customize extensive varieties of object knowledge to comprehensively study different object understanding tasks. We validate our idea on a wide range of benchmark tasks from both vision and robotics aspects with state-of-the-art algorithms, demonstrating the high quality and versatility of annotations provided by our approach. Our website is available at https://apeirony.github.io/ConceptFactory.

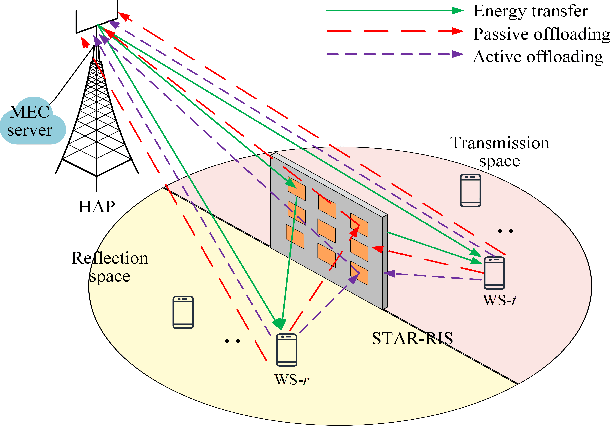

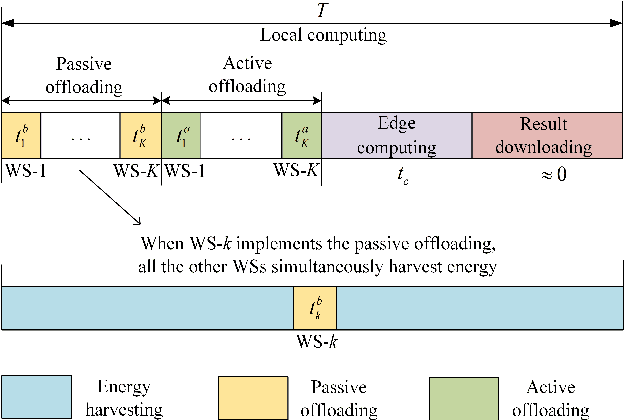

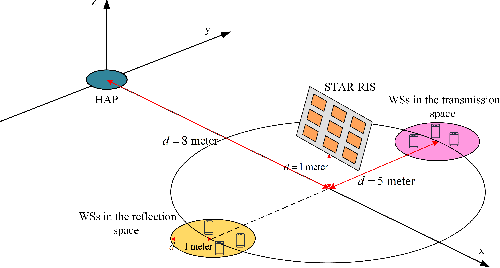

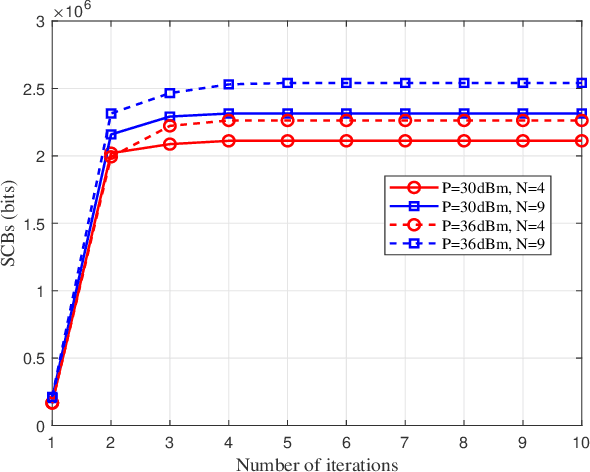

STAR-RIS Assisted Wireless-Powered and Backscattering Mobile Edge Computing Networks

Mar 05, 2024

Abstract:The wireless powered and backscattering mobile edge computing (WPB-MEC) network is a novel network paradigm for supplying energy and computing resources to wireless sensors (WSs). However, its performance is seriously affected by severe attenuation and by the inappropriate assumption of infinite computing capability at the hybrid access point (HAP). To address these issues, we propose a simultaneously transmitting and reflecting reconfigurable intelligent surface (STAR-RIS) aided scheme for boosting the performance of the WPB-MEC network under the constraint of finite computing capability. Specifically, energy-constrained WSs are able to offload tasks to the HAP actively or passively. In this process, the STAR-RIS is utilized to increase the amount of harvested energy and strengthen the offloading efficiency by adapting its operating protocols. We then maximize the sum computational bits (SCBs) under the finite computing capability constraint. To make the problem tractable, we first present interesting results in closed form and then design a block coordinate descent (BCD) based algorithm, ensuring a near-optimal solution. Finally, simulation results are provided to confirm that our proposed scheme can improve the SCBs by 9.9 times compared to the local-computing-only scheme.
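The block coordinate descent idea named in the abstract can be illustrated on a toy problem. The sketch below is not the paper's algorithm or objective: the quadratic function, the function name `bcd_toy` and the iteration count are all assumptions chosen only to show how optimizing one block of variables at a time, with the other held fixed, converges to a solution.

```python
def bcd_toy(num_iters=50):
    """Block coordinate descent on f(x, y) = x^2 + y^2 + x*y - 3x - 3y.

    Hypothetical toy objective; each block update is the exact minimizer
    of f over one variable while the other variable is held fixed.
    """
    x, y = 0.0, 0.0
    for _ in range(num_iters):
        # Setting df/dx = 2x + y - 3 to zero with y fixed.
        x = (3.0 - y) / 2.0
        # Setting df/dy = 2y + x - 3 to zero with x fixed.
        y = (3.0 - x) / 2.0
    return x, y


x_opt, y_opt = bcd_toy()
```

For this toy objective the joint minimizer is x = y = 1, and the alternating updates approach it geometrically.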

Fine-Grained and High-Faithfulness Explanations for Convolutional Neural Networks

Mar 16, 2023

Abstract:Recently, explaining CNNs has become a research hotspot. CAM (Class Activation Map)-based methods and the LRP (Layer-wise Relevance Propagation) method are two common explanation methods. However, due to the small spatial resolution of the last convolutional layer, CAM-based methods can often only generate coarse-grained visual explanations that provide a coarse location of the target object. LRP and its variants, on the other hand, can generate fine-grained explanations, but the faithfulness of these explanations is too low. In this paper, we propose FG-CAM (fine-grained CAM), which extends CAM-based methods to generate fine-grained visual explanations with high faithfulness. FG-CAM uses the relationship between two adjacent layers of feature maps with a resolution difference to gradually increase the explanation resolution, while finding the contributing pixels and filtering out the pixels that do not contribute at each step. Our method not only overcomes the shortcoming of CAM-based methods without changing their characteristics, but also generates fine-grained explanations that have higher faithfulness than LRP and its variants. We also present FG-CAM with denoising, a variant of FG-CAM that generates less noisy explanations with almost no change in explanation faithfulness. Experimental results show that the performance of FG-CAM is almost unaffected by the explanation resolution. FG-CAM significantly outperforms existing CAM-based methods in both shallow and intermediate convolutional layers, and significantly outperforms LRP and its variants in the input layer.
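To make the resolution limitation concrete, here is a minimal sketch of the classic class activation map, which is a weighted sum of the last convolutional layer's feature maps. It is not FG-CAM: the function name, the toy 2 x 2 feature maps and the weights are assumptions for illustration only.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps, each an H x W nested list."""
    if len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature map")
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for row in range(height):
            for col in range(width):
                cam[row][col] += weight * fmap[row][col]
    return cam


# Toy 2 x 2 feature maps standing in for a last convolutional layer.
toy_maps = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
toy_weights = [0.5, -1.0]
cam = class_activation_map(toy_maps, toy_weights)
```

The resulting map has the same 2 x 2 resolution as the feature maps, so it must be upsampled to the input size, which is why such explanations only localize the object coarsely.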

BabyAI++: Towards Grounded-Language Learning beyond Memorization

Apr 15, 2020

Abstract:Despite success in many real-world tasks (e.g., robotics), reinforcement learning (RL) agents still learn tabula rasa when facing new and dynamic scenarios. By contrast, humans can offload this burden through textual descriptions. Although recent works have shown the benefits of instructive texts in goal-conditioned RL, few have studied whether descriptive texts help agents generalize across dynamic environments. To promote research in this direction, we introduce a new platform, BabyAI++, to generate various dynamic environments along with corresponding descriptive texts. Moreover, we benchmark several baselines inherited from the instruction-following setting and develop a novel approach towards visually-grounded language learning on our platform. Extensive experiments show strong evidence that using descriptive texts improves the generalization of RL agents across environments with varied dynamics.

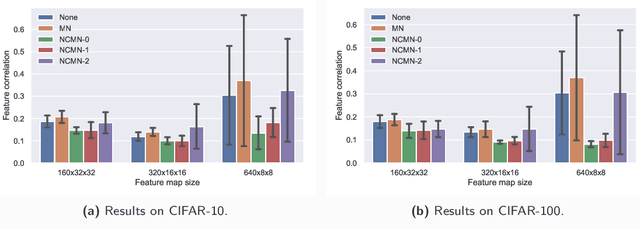

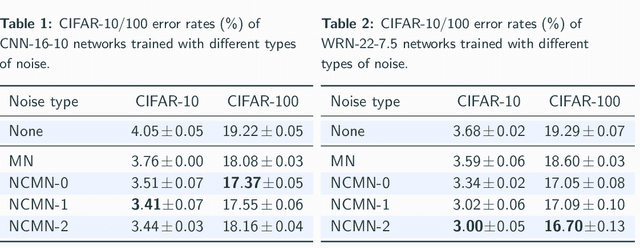

Removing the Feature Correlation Effect of Multiplicative Noise

Sep 19, 2018

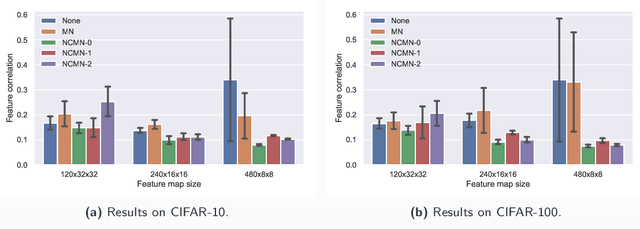

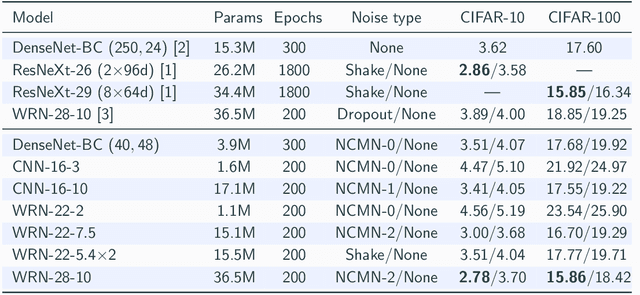

Abstract:Multiplicative noise, including dropout, is widely used to regularize deep neural networks (DNNs), and is shown to be effective in a wide range of architectures and tasks. From an information perspective, we consider injecting multiplicative noise into a DNN as training the network to solve the task with noisy information pathways, which leads to the observation that multiplicative noise tends to increase the correlation between features, so as to increase the signal-to-noise ratio of information pathways. However, high feature correlation is undesirable, as it increases redundancy in representations. In this work, we propose non-correlating multiplicative noise (NCMN), which exploits batch normalization to remove the correlation effect in a simple yet effective way. We show that NCMN significantly improves the performance of standard multiplicative noise on image classification tasks, providing a better alternative to dropout for batch-normalized networks. Additionally, we present a unified view of NCMN and shake-shake regularization, which explains the performance gain of the latter.
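As a point of reference for the abstract above, the following is a minimal sketch of standard multiplicative noise in the form of inverted dropout. It is not NCMN and does not involve batch normalization; the function name, drop rate and seed are assumptions for illustration.

```python
import random


def multiplicative_bernoulli_noise(features, drop_rate, rng):
    """Multiply each feature by an independent noise term with mean 1."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    keep_prob = 1.0 - drop_rate
    noisy = []
    for value in features:
        # Noise equals 1 / keep_prob with probability keep_prob, else 0.
        noise = 1.0 / keep_prob if rng.random() < keep_prob else 0.0
        noisy.append(value * noise)
    return noisy


rng = random.Random(0)  # fixed seed so the sketch is reproducible
features = [1.0, 2.0, 3.0, 4.0]
noisy = multiplicative_bernoulli_noise(features, drop_rate=0.5, rng=rng)
```

Because the noise term has mean 1, the expected value of each noisy feature equals the original feature, which is the usual reason for the `1 / keep_prob` rescaling.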
