Yifei Xue

DisorientLiDAR: Physical Attacks on LiDAR-based Localization

Sep 16, 2025

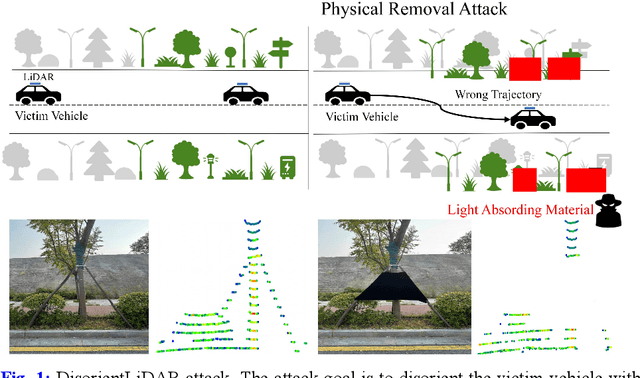

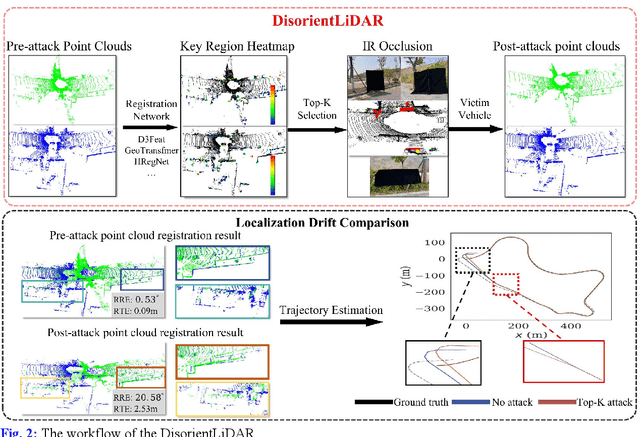

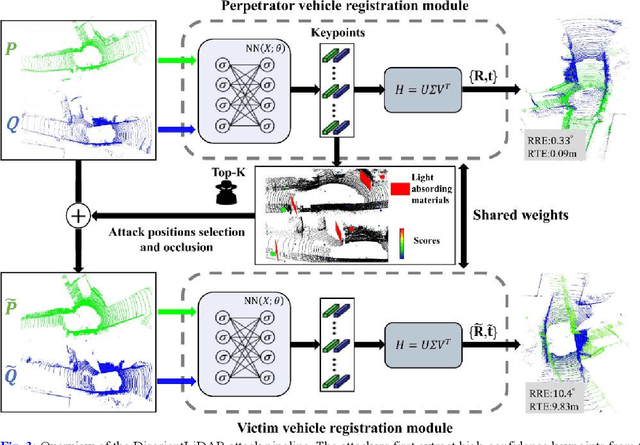

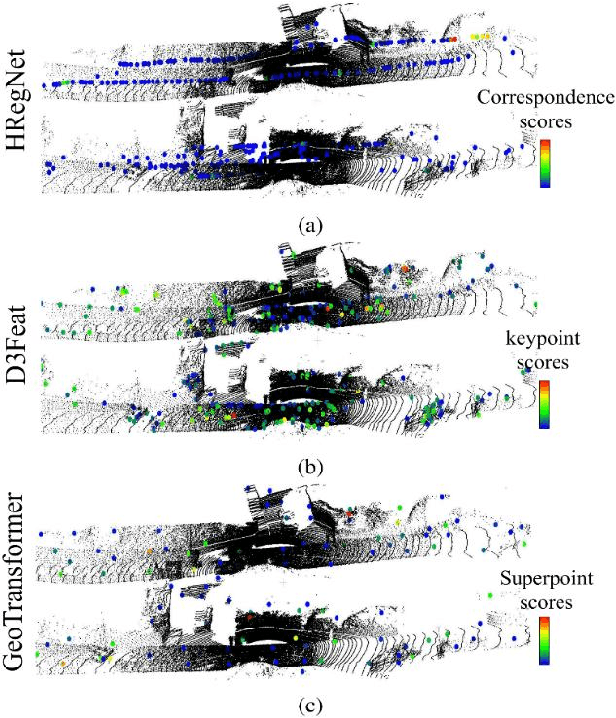

Abstract: Deep learning models have been shown to be susceptible to adversarial attacks with visually imperceptible perturbations. Although this poses a serious security challenge for the localization of self-driving cars, attacks on localization have received very little attention, as most adversarial attacks have targeted 3D perception. In this work, we propose a novel adversarial attack framework called DisorientLiDAR targeting LiDAR-based localization. By reverse-engineering localization models (e.g., feature extraction networks), adversaries can identify critical keypoints and strategically remove them, thereby disrupting LiDAR-based localization. Our proposal is first evaluated on three state-of-the-art point-cloud registration models (HRegNet, D3Feat, and GeoTransformer) using the KITTI dataset. Experimental results demonstrate that removing regions containing Top-K keypoints significantly degrades their registration accuracy. We further validate the attack's impact on the Autoware autonomous driving platform, where hiding merely a few critical regions induces noticeable localization drift. Finally, we extend our attacks to the physical world by hiding critical regions with near-infrared absorptive materials, successfully replicating the attack effects observed in KITTI data. This brings the attack a step closer to a realistic physical-world setting and demonstrates the validity and generality of our proposal.
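The core operation described above, removing regions that contain the Top-K keypoints, can be illustrated with a minimal sketch. This is not the authors' implementation: the per-point `scores` stand in for whatever saliency a feature extraction network assigns, and the function name, the spherical region shape, and the `radius` parameter are assumptions made for illustration.

```python
import math

def remove_topk_regions(points, scores, k, radius):
    """Drop every point within `radius` of any of the k highest-scoring points.

    `scores` is a hypothetical per-point keypoint saliency; in the paper it
    would come from the targeted registration model.
    """
    # Indices of the k points with the highest saliency.
    top = sorted(range(len(points)), key=lambda i: scores[i], reverse=True)[:k]
    centers = [points[i] for i in top]
    # Keep only points that lie outside every removal sphere.
    return [p for p in points
            if all(math.dist(p, c) > radius for c in centers)]

points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 0.0, 0.0), (9.0, 1.0, 0.0)]
scores = [0.9, 0.2, 0.1, 0.4]
attacked = remove_topk_regions(points, scores, k=1, radius=0.5)
```

With `k=1`, the highest-scoring point and its close neighbour are removed, while distant points survive. A registration model would then be run on `attacked` instead of the original scan.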

PIS3R: Very Large Parallax Image Stitching via Deep 3D Reconstruction

Aug 06, 2025

Abstract: Image stitching aims to align two images taken from different viewpoints into one seamless, wider image. However, when the 3D scene contains depth variations and the camera baseline is significant, noticeable parallax occurs, meaning that the relative positions of scene elements differ substantially between views. Most existing stitching methods struggle to handle such images with large parallax effectively. To address this challenge, we propose PIS3R, an image stitching solution that is robust to very large parallax and is based on the novel concept of deep 3D reconstruction. First, we apply a visual geometry grounded transformer to two input images with very large parallax to obtain both intrinsic and extrinsic parameters, as well as a dense 3D scene reconstruction. Subsequently, we reproject the reconstructed dense point cloud onto a designated reference view using the recovered camera parameters, achieving pixel-wise alignment and generating an initial stitched image. Finally, to address potential artifacts such as holes or noise in the initial stitching, we propose a point-conditioned image diffusion module to obtain the refined result. Compared with existing methods, our solution tolerates very large parallax and provides results that fully preserve the geometric integrity of all pixels in the 3D photogrammetric context, enabling direct applicability to downstream 3D vision tasks such as SfM. Experimental results demonstrate that the proposed algorithm provides accurate stitching results for images with very large parallax and outperforms existing methods qualitatively and quantitatively.
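The reprojection step can be sketched as a pinhole projection with a z-buffer. This is only an illustration under stated assumptions, not the PIS3R pipeline: the function name, the `(fx, fy, cx, cy)` intrinsics tuple, and the nearest-pixel rounding are hypothetical choices. Pixels that receive no point stay `None`, which corresponds to the holes the abstract says a later refinement stage must fill.

```python
def reproject(points_rgb, intrinsics, R, t, width, height):
    """Project coloured world-space points into a reference view.

    intrinsics: (fx, fy, cx, cy) of an assumed pinhole camera.
    R, t: world-to-camera rotation (3x3 nested lists) and translation.
    """
    fx, fy, cx, cy = intrinsics
    image = [[None] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for (X, Y, Z), color in points_rgb:
        # World -> camera coordinates: x_cam = R @ x_world + t.
        xc = R[0][0] * X + R[0][1] * Y + R[0][2] * Z + t[0]
        yc = R[1][0] * X + R[1][1] * Y + R[1][2] * Z + t[1]
        zc = R[2][0] * X + R[2][1] * Y + R[2][2] * Z + t[2]
        if zc <= 0:
            continue  # point is behind the camera
        u = int(round(fx * xc / zc + cx))
        v = int(round(fy * yc / zc + cy))
        # Z-buffer test: keep only the nearest point per pixel.
        if 0 <= u < width and 0 <= v < height and zc < depth[v][u]:
            depth[v][u] = zc
            image[v][u] = color
    return image

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
cloud = [((0.0, 0.0, 2.0), "far"), ((0.0, 0.0, 1.0), "near"), ((1.0, 0.0, 2.0), "side")]
view = reproject(cloud, (2.0, 2.0, 1.0, 1.0), identity, (0.0, 0.0, 0.0), 3, 3)
```

In the toy call, two points fall on the same pixel and the nearer one wins, while untouched pixels remain empty.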

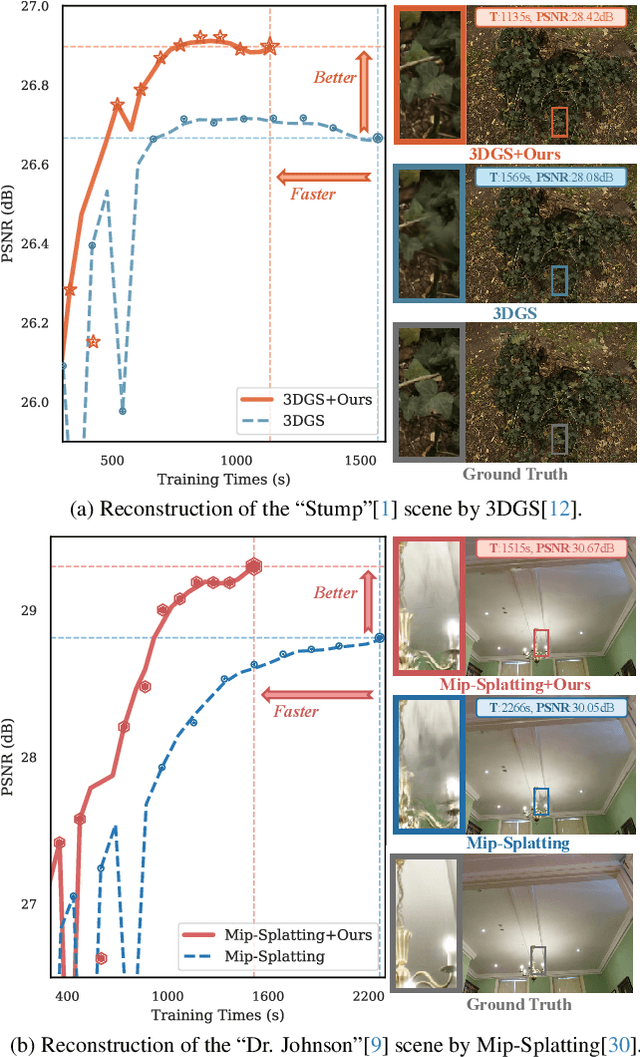

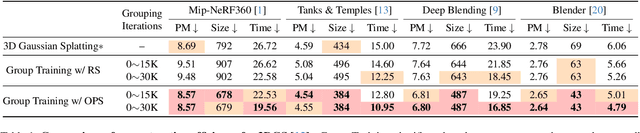

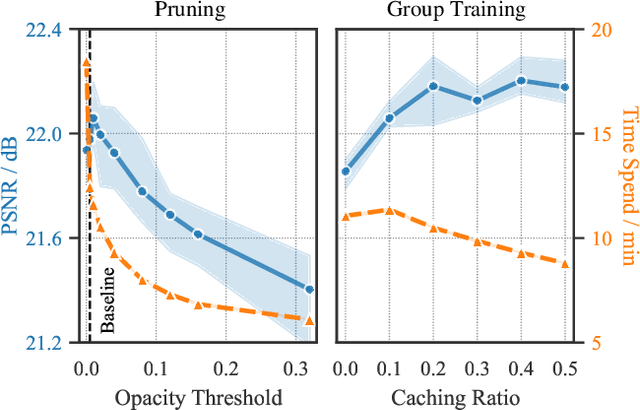

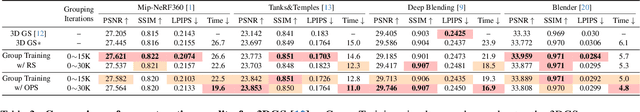

Faster and Better 3D Splatting via Group Training

Dec 10, 2024

Abstract: 3D Gaussian Splatting (3DGS) has emerged as a powerful technique for novel view synthesis, demonstrating remarkable capability in high-fidelity scene reconstruction through its Gaussian primitive representations. However, the computational overhead induced by the massive number of primitives poses a significant bottleneck to training efficiency. To overcome this challenge, we propose Group Training, a simple yet effective strategy that organizes Gaussian primitives into manageable groups, optimizing training efficiency and improving rendering quality. This approach shows universal compatibility with existing 3DGS frameworks, including vanilla 3DGS and Mip-Splatting, consistently achieving accelerated training while maintaining superior synthesis quality. Extensive experiments reveal that our straightforward Group Training strategy achieves up to 30% faster convergence and improved rendering quality across diverse scenarios.

Predictable Emergent Abilities of LLMs: Proxy Tasks Are All You Need

Dec 10, 2024

Abstract: While scaling laws optimize training configurations for large language models (LLMs) through experiments on smaller or early-stage models, they fail to predict emergent abilities due to the absence of such capabilities in these models. To address this, we propose a method that predicts emergent abilities by leveraging proxy tasks. We begin by establishing relevance metrics between the target task and candidate tasks based on performance differences across multiple models. These candidate tasks are then validated for robustness with small model ensembles, leading to the selection of the most appropriate proxy tasks. The predicted performance on the target task is then derived by integrating the evaluation results of these proxies. In a case study on tool utilization capabilities, our method demonstrated a strong correlation between predicted and actual performance, confirming its effectiveness.

RSL-BA: Rolling Shutter Line Bundle Adjustment

Aug 10, 2024

Abstract: The line is a prevalent element in man-made environments and inherently encodes spatial structural information, making it a more robust choice for feature representation in practical applications. Despite this apparent advantage, previous rolling shutter bundle adjustment (RSBA) methods have only supported sparse feature points, which lack robustness, particularly in degenerate environments. In this paper, we introduce the first rolling shutter line-based bundle adjustment solution, RSL-BA. Specifically, we first establish the rolling shutter camera line projection theory using Plücker line parameterization. Subsequently, we derive a series of reprojection error formulations that are stable and efficient. Finally, we theoretically and experimentally demonstrate that our method can prevent three common degeneracies, one of which is identified for the first time in this paper. Extensive synthetic and real data experiments demonstrate that our method achieves efficiency and accuracy comparable to existing point-based rolling shutter bundle adjustment solutions.
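For readers unfamiliar with the Plücker line parameterization mentioned above, a minimal sketch follows. It shows only the standard construction of Plücker coordinates from two points on a line, not the paper's rolling shutter projection model; the helper names are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def plucker_from_points(p, q):
    """Return (direction, moment) of the 3D line through points p and q."""
    direction = tuple(qi - pi for pi, qi in zip(p, q))
    moment = cross(p, q)  # equals cross(p, direction), since cross(p, p) = 0
    return direction, moment

d, m = plucker_from_points((1.0, 0.0, 0.0), (1.0, 1.0, 0.0))
```

The six numbers `(d, m)` describe the line up to scale and always satisfy the Plücker constraint `dot(d, m) == 0`, which is why a line has four degrees of freedom rather than six.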

BirdNeRF: Fast Neural Reconstruction of Large-Scale Scenes From Aerial Imagery

Feb 11, 2024

Abstract: In this study, we introduce BirdNeRF, an adaptation of Neural Radiance Fields (NeRF) designed specifically for reconstructing large-scale scenes using aerial imagery. Unlike previous research focused on small-scale and object-centric NeRF reconstruction, our approach addresses multiple challenges: (1) the slow training and rendering associated with large models; (2) the computational demands of modeling a substantial number of images, which require extensive resources such as high-performance GPUs; and (3) the significant artifacts and low visual fidelity commonly observed in large-scale reconstruction tasks due to limited model capacity. Specifically, we present a novel bird-view pose-based spatial decomposition algorithm that decomposes a large aerial image set into multiple small sets with appropriately sized overlaps, allowing us to train an individual NeRF for each sub-scene. This decomposition approach not only decouples rendering time from the scene size but also enables rendering to scale seamlessly to arbitrarily large environments. Moreover, it allows for per-block updates of the environment, enhancing the flexibility and adaptability of the reconstruction process. Additionally, we propose a projection-guided novel view re-rendering strategy, which helps to combine the independently trained sub-scenes effectively into superior rendering results. We evaluate our approach on existing datasets as well as on our own drone footage, improving reconstruction speed by 10x over classical photogrammetry software and 50x over a state-of-the-art large-scale NeRF solution, on a single GPU with similar rendering quality.
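The idea of splitting an aerial image set into overlapping blocks can be sketched with a simple grid over the cameras' ground-plane positions. This is a hypothetical stand-in rather than the BirdNeRF decomposition algorithm: the regular square grid, the function name, and the `cell` and `overlap` parameters are all assumptions.

```python
import math
from collections import defaultdict

def decompose(cam_xy, cell, overlap):
    """Assign each camera to every grid block whose padded extent contains it.

    Block (i, j) covers [i*cell - overlap, (i+1)*cell + overlap) along x,
    and likewise along y, so cameras near a border land in several blocks.
    """
    blocks = defaultdict(list)
    for idx, (x, y) in enumerate(cam_xy):
        i_lo = math.floor((x - overlap) / cell)
        i_hi = math.floor((x + overlap) / cell)
        j_lo = math.floor((y - overlap) / cell)
        j_hi = math.floor((y + overlap) / cell)
        for i in range(i_lo, i_hi + 1):
            for j in range(j_lo, j_hi + 1):
                blocks[(i, j)].append(idx)
    return dict(blocks)

cams = [(1.0, 1.0), (9.5, 1.0), (15.0, 1.0)]
blocks = decompose(cams, cell=10.0, overlap=1.0)
```

Each resulting block could then be trained as an independent sub-scene, and a camera close to a block boundary contributes to both neighbours.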

DFR: Depth from Rotation by Uncalibrated Image Rectification with Latitudinal Motion Assumption

Jul 11, 2023

Abstract: Despite the increasing prevalence of rotating-style capture (e.g., surveillance cameras), conventional stereo rectification techniques frequently fail due to the rotation-dominant motion and small baseline between views. In this paper, we tackle the challenge of performing stereo rectification for uncalibrated rotating cameras. To that end, we propose Depth-from-Rotation (DfR), a novel image rectification solution that analytically rectifies two images from two point correspondences and supports subsequent depth estimation. Specifically, we model the motion of a rotating camera as movement on a sphere at a fixed latitude, with the camera's optical axis perpendicular to the sphere's surface. We call this the latitudinal motion assumption. We then derive a 2-point analytical solver by directly computing the rectifying transformations for the two images. We also present a self-adaptive strategy to reduce the geometric distortion after rectification. Extensive synthetic and real data experiments demonstrate that the proposed method outperforms existing works in effectiveness and efficiency by a significant margin.

Revisiting Rolling Shutter Bundle Adjustment: Toward Accurate and Fast Solution

Oct 10, 2022

Abstract: We propose a robust and fast bundle adjustment solution that estimates the 6-DoF pose of the camera and the geometry of the environment based on measurements from a rolling shutter (RS) camera. This tackles the challenges in existing works, namely reliance on additional sensors, high frame rate video as input, restrictive assumptions on camera motion and readout direction, and poor efficiency. To this end, we first investigate the influence of image point normalization on RSBA performance and show that it better approximates the real 6-DoF camera motion. Then we present a novel analytical model for the visual residual covariance, which can be used to standardize the reprojection error during the optimization, consequently improving the overall accuracy. More importantly, the combination of normalization and covariance standardization weighting in RSBA (NW-RSBA) can avoid common planar degeneracy without needing to constrain the filming manner. In addition, we propose an acceleration strategy for NW-RSBA based on the sparsity of its Jacobian matrix and the Schur complement. Extensive synthetic and real data experiments verify the effectiveness and efficiency of the proposed solution over state-of-the-art works. We also demonstrate that the proposed method can be easily implemented and plugged into well-known GSSfM and GSSLAM systems to obtain complete RSSfM and RSSLAM solutions.
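As background for the acceleration strategy, the generic Schur complement trick used in bundle adjustment can be written as follows. This is the textbook form, not the NW-RSBA-specific derivation, and the symbols $U$, $V$, $W$, $\delta_c$, $\delta_p$, $b_c$, $b_p$ are notation introduced here for illustration. The normal equations are partitioned into camera and point blocks:

```latex
\begin{bmatrix} U & W \\ W^{\top} & V \end{bmatrix}
\begin{bmatrix} \delta_c \\ \delta_p \end{bmatrix}
=
\begin{bmatrix} b_c \\ b_p \end{bmatrix},
\qquad
\left( U - W V^{-1} W^{\top} \right) \delta_c = b_c - W V^{-1} b_p,
\qquad
\delta_p = V^{-1} \left( b_p - W^{\top} \delta_c \right).
```

Because each 3D point contributes an independent small block, $V$ is block-diagonal and cheap to invert, so the much smaller reduced camera system is solved first and the point updates follow by back-substitution.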

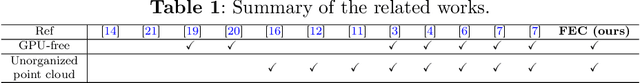

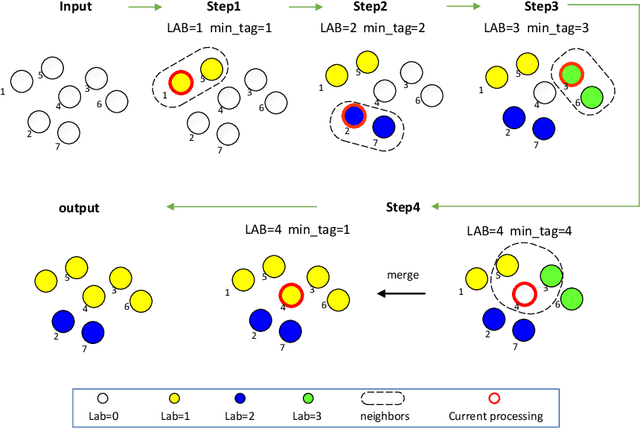

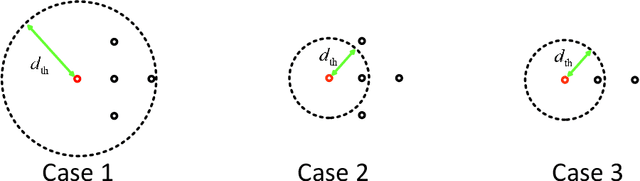

FEC: Fast Euclidean Clustering for Point Cloud Segmentation

Aug 16, 2022

Abstract: Segmentation from point cloud data is essential in many applications such as remote sensing, mobile robots, and autonomous cars. However, the point clouds captured by 3D range sensors are commonly sparse and unstructured, which makes efficient segmentation challenging. In this paper, we present a fast solution to point cloud instance segmentation with small computational demands. To this end, we propose a novel fast Euclidean clustering (FEC) algorithm that applies a pointwise scheme instead of the clusterwise scheme used in existing works. Our approach is conceptually simple, easy to implement (40 lines in C++), and two orders of magnitude faster than classical segmentation methods while producing high-quality results.
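To make the clustering criterion concrete, here is a naive sketch of Euclidean clustering: two points share a cluster when a chain of neighbours closer than `radius` connects them. It is not the authors' FEC implementation and makes no attempt at its speed. The brute-force pair loop and the union-find bookkeeping are assumptions chosen for brevity.

```python
import math

def euclidean_clusters(points, radius):
    """Label points so that neighbours within `radius` share a cluster id."""
    parent = list(range(len(points)))

    def find(i):
        # Follow parent links to the cluster root, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Brute-force O(n^2) neighbour search; a real system would use a k-d tree.
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= radius:
                ri, rj = find(i), find(j)
                if ri != rj:
                    parent[max(ri, rj)] = min(ri, rj)

    # Relabel roots as consecutive ids in order of first appearance.
    ids = {}
    return [ids.setdefault(find(i), len(ids)) for i in range(len(points))]

scan = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0),
        (5.0, 0.0, 0.0), (5.4, 0.0, 0.0)]
labels = euclidean_clusters(scan, radius=0.6)
```

The first three points form one cluster even though the outer two are more than `radius` apart, because the middle point links them.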
